The race to build the smartest AI is getting complicated. Agentic coding benchmarks such as SWE-bench and Terminal-Bench, designed to measure the coding prowess of cutting-edge models, often show top models separated by mere percentage points. These scores are treated as gospel for deciding which AI to deploy, but new research reveals a significant flaw: the underlying infrastructure can shift results by more than the gap between the models themselves.

Internal experiments found that simply changing the server resources allocated to an evaluation run, specifically on Terminal-Bench 2.0, created a 6-percentage-point difference in scores. This gap is wider than the margin separating leading AI models.

Beyond Static Scores

Unlike traditional benchmarks that score output directly, agentic coding evaluations involve complex, multi-turn interactions. AI models write code, run tests, and install dependencies within a dynamic environment. This means the runtime isn't just a passive box; it's an active participant in the problem-solving process.

Two AIs running the same task aren't necessarily taking the same test if their resource budgets and time limits differ. Even benchmark developers are acknowledging this. Terminal-Bench 2.0 now publishes per-task CPU and RAM recommendations, but recommending resources isn't the same as enforcing them consistently, and how they're enforced matters.

The Kubernetes Conundrum

Researchers running Terminal-Bench 2.0 on Google Kubernetes Engine noticed discrepancies between their scores and the official leaderboard, alongside a high rate of infrastructure-related failures: up to 6% of tasks failed due to pod errors that had nothing to do with the AI's coding ability.

The issue stemmed from resource enforcement. Their Kubernetes setup treated resource specifications as both a guaranteed minimum and a hard kill limit. This left no headroom for temporary resource spikes. A momentary surge in memory could crash a container that would have otherwise succeeded.
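To make the failure mode concrete, here is a minimal sketch of how a brief memory spike interacts with a hard kill limit. The memory trace and the per-task recommendation are hypothetical numbers chosen for illustration, not measurements from the experiments, and the `survives` helper is a simplification: a real container runtime kills the process at the moment usage crosses the limit rather than inspecting a full trace.

```python
def survives(memory_trace_mib, limit_mib):
    """Return True if peak memory never exceeds the hard kill limit."""
    return max(memory_trace_mib) <= limit_mib


# Hypothetical per-task recommendation and memory samples (MiB); not measured data.
recommended_mib = 2048
trace = [900, 1400, 2300, 1500, 1100]  # one brief spike above the recommendation

strict_limit = recommended_mib       # spec used as both the floor and the kill limit
lenient_limit = 3 * recommended_mib  # kill limit set to 3x the recommendation

print(survives(trace, strict_limit))   # False: the spike to 2300 MiB crosses 2048 MiB
print(survives(trace, lenient_limit))  # True: 2300 MiB stays under 6144 MiB
```

Under the strict setting the task fails regardless of whether the model's approach would have worked a moment later, which is the kind of failure that shows up as an infrastructure error rather than a wrong answer.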

The Terminal-Bench leaderboard, by contrast, uses a different sandboxing provider that is more lenient, allowing temporary over-allocation. This favors infrastructural stability but might mask true model limitations.

Quantifying the Infrastructure Effect

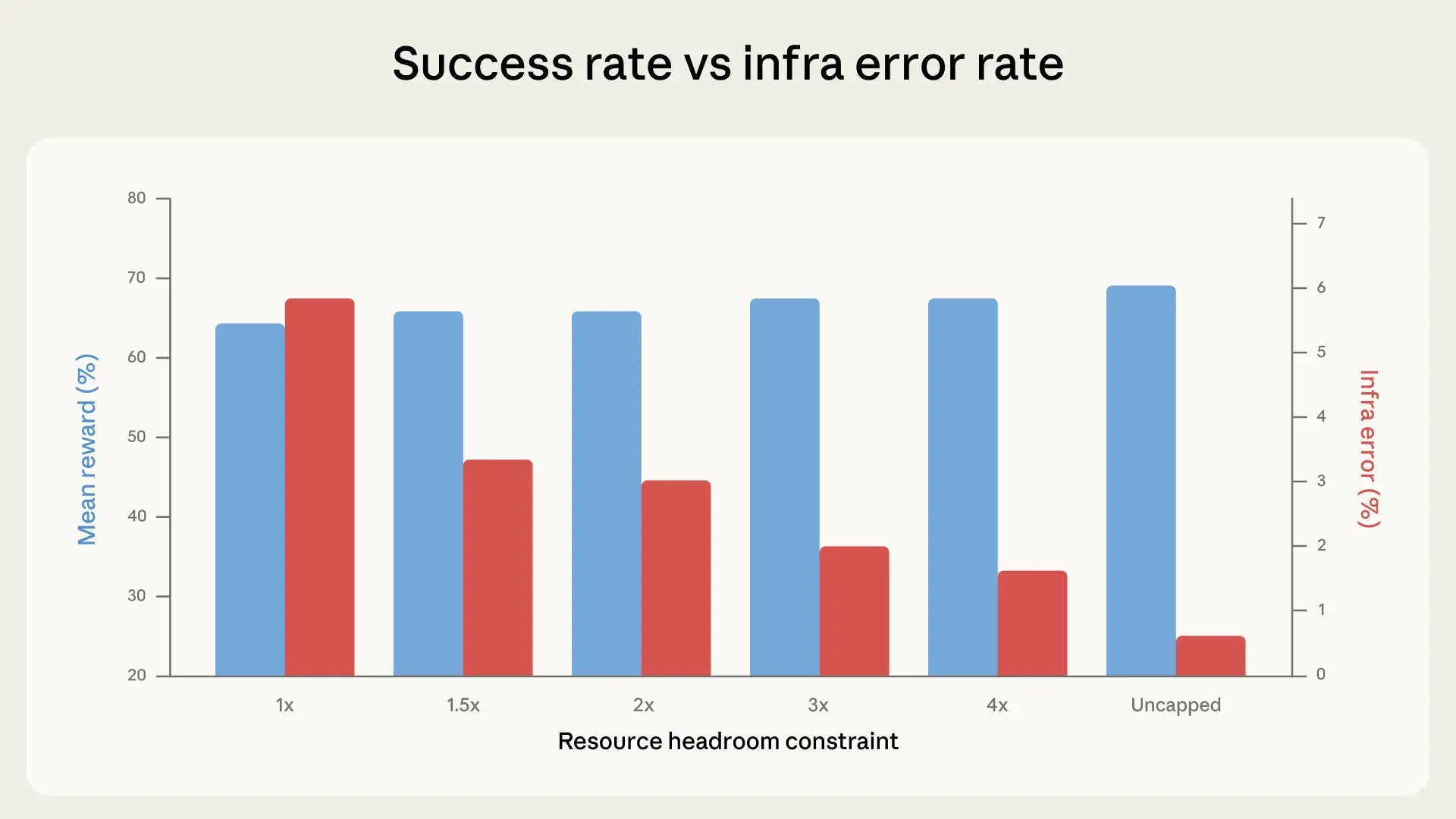

To measure the impact, researchers tested Terminal-Bench 2.0 across six different resource configurations, from strict enforcement to completely uncapped resources, keeping the AI model, harness, and tasks identical.

Success rates climbed with increased resource headroom. Infrastructure errors dropped significantly, from 5.8% with strict enforcement down to 0.5% when uncapped. The jump in success rates became more pronounced above a 3x resource allocation.

Between 3x and uncapped resources, success rates jumped nearly 4 percentage points while infrastructure errors decreased an additional 1.6 percentage points. This suggests that the extra resources allowed the AI to tackle more complex approaches, like installing large dependencies or running memory-intensive tests.
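The reasoning behind that inference can be written as a short back-of-the-envelope calculation. It uses only the two figures quoted above and one stated assumption: that every avoided infrastructure error converts into a passing task, which gives an upper bound on how much of the gain could come from reliability alone. "Nearly 4" is rounded to 4.0 here, so the result is approximate.

```python
# Figures quoted above for the step from 3x to uncapped, in percentage points (pp).
success_gain_pp = 4.0       # "nearly 4", rounded up for the sketch
infra_error_drop_pp = 1.6

# Assumption: every avoided infrastructure error becomes a pass (upper bound).
max_gain_from_reliability_pp = infra_error_drop_pp

# Whatever is left cannot be explained by fewer crashes alone.
min_gain_from_extra_resources_pp = success_gain_pp - max_gain_from_reliability_pp

print(round(min_gain_from_extra_resources_pp, 1))  # 2.4
```

Even under that generous assumption, roughly 2.4 percentage points of the gain remain unexplained by crash avoidance, which is consistent with the extra resources enabling solutions that were not feasible before.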

When Limits Change the Game

Up to roughly 3x the recommended resources, additional headroom primarily fixed infrastructure reliability issues, preventing spurious crashes. Above this threshold, however, the extra resources actively helped the AI solve problems it couldn't before.

This means tight resource limits can inadvertently reward efficiency, while generous limits favor AIs that can leverage more computational power. An AI that writes lean code will excel under strict constraints, while one that uses brute force with heavy tools will do better with ample resources.

Consider the `bn-fit-modify` task. Some models attempt to install a large Python data science stack. Under generous limits, this works. Under strict limits, the container runs out of memory during installation, before the AI even writes code. A leaner strategy exists, but the resource configuration dictates which approach succeeds.

Across Benchmarks and Beyond

This effect was replicated across different Anthropic models and also observed, though to a lesser extent, on SWE-bench. Increasing RAM on SWE-bench tasks led to higher scores, indicating that resource allocation isn't neutral even on less demanding benchmarks.

Other factors like time limits, cluster health, and even network bandwidth can also introduce variance. Agentic evaluations are end-to-end system tests, and any component can act as a confounder. Pass rates have even been anecdotally observed to fluctuate with the time of day due to API latency variations.

Recommendations for Rigor

Ideally, evals should run on identical hardware. Practically, this is difficult. Researchers recommend that benchmarks specify both guaranteed resource allocations and hard kill limits separately, rather than a single value.

This provides containers with breathing room to avoid unwarranted crashes while still enforcing a ceiling. A 3x ceiling over per-task specs, for example, significantly reduced infrastructure errors while keeping score lifts within noise margins, largely neutralizing the infrastructure confounder without removing meaningful resource pressure.
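As a rough illustration of specifying the two values separately, the sketch below builds a Kubernetes-style `resources` mapping in which `requests` carries the guaranteed allocation and `limits` carries the hard ceiling. The `container_resources` helper and its parameter names are hypothetical; only the `requests`/`limits` structure and the `m` and `Mi` quantity suffixes come from Kubernetes itself. The default multiplier of 3 mirrors the example ceiling mentioned above.

```python
def container_resources(cpu_cores, memory_mib, limit_multiplier=3.0):
    """Build a resources mapping with a guaranteed request and a separate hard limit."""
    if limit_multiplier < 1.0:
        raise ValueError("the limit must not be lower than the request")
    return {
        # Guaranteed allocation: the per-task recommendation.
        "requests": {
            "cpu": f"{round(cpu_cores * 1000)}m",
            "memory": f"{memory_mib}Mi",
        },
        # Hard ceiling: the recommendation scaled by the multiplier.
        "limits": {
            "cpu": f"{round(cpu_cores * limit_multiplier * 1000)}m",
            "memory": f"{round(memory_mib * limit_multiplier)}Mi",
        },
    }


# Hypothetical task spec: 2 CPU cores and 4096 MiB of RAM.
resources = container_resources(cpu_cores=2, memory_mib=4096)
print(resources["requests"])  # {'cpu': '2000m', 'memory': '4096Mi'}
print(resources["limits"])    # {'cpu': '6000m', 'memory': '12288Mi'}
```

Setting `limit_multiplier=1.0` reproduces the strict configuration in which the same value acts as both floor and kill limit.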

The implications are significant. A few-point lead on a leaderboard might reflect genuine AI capability or simply better-resourced infrastructure. Without standardized and transparent reporting of setup configurations, interpreting benchmark results requires skepticism.

For AI labs, resource configuration must be a first-class experimental variable. For benchmark maintainers, specifying enforcement methodology is key. For consumers of these results, small score differences should be viewed with caution until the evaluation setup is fully documented and matched.
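One way to act on this is to record the evaluation setup alongside each score and check that two setups match before comparing results. The sketch below is an assumption about what such a record might contain: the `EvalSetup` fields and the `setup_mismatches` helper are hypothetical and not part of any benchmark's tooling.

```python
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass(frozen=True)
class EvalSetup:
    """Hypothetical record of the infrastructure settings behind a reported score."""
    benchmark: str
    cpu_request_cores: float
    memory_request_mib: int
    limit_multiplier: Optional[float]  # None means resources were uncapped
    timeout_s: int


def setup_mismatches(a, b):
    """Return the fields on which two setups differ, as {field: (a_value, b_value)}."""
    da, db = asdict(a), asdict(b)
    return {key: (da[key], db[key]) for key in da if da[key] != db[key]}


# Two illustrative runs that differ only in how limits were enforced.
strict_run = EvalSetup("Terminal-Bench 2.0", 2.0, 4096, 1.0, 1800)
uncapped_run = EvalSetup("Terminal-Bench 2.0", 2.0, 4096, None, 1800)

print(setup_mismatches(strict_run, uncapped_run))
# {'limit_multiplier': (1.0, None)}
```

A non-empty result signals that a score gap between the two runs may partly reflect the setup rather than the model.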