Jack Clark, co-founder and Head of Policy at Anthropic, ignited a critical debate within the AI community with his recent essay, "Technological Optimism and Appropriate Fear." His central thesis casts advanced AI systems not as mere tools, but as "true creatures"—powerful, unpredictable entities that demand a profound shift in how humanity approaches their development and integration. This perspective directly challenges the prevailing narrative of AI as a predictable machine, sparking fierce discussion among industry leaders, including OpenAI’s Sam Altman, prominent venture capitalists David Sacks and Chamath Palihapitiya, and the broader tech ecosystem.

In a recent YouTube video, Matthew Berman offered incisive commentary on Jack Clark's essay, which Clark originally delivered as remarks at "The Curve" conference in Berkeley, California. Berman further analyzed the differing viewpoints of these key figures on AI's trajectory and governance, particularly regarding the potential for an "intelligence explosion" and the contentious issue of regulatory oversight.

Clark’s essay uses a compelling analogy of a child in a dark room, initially fearing shadows that turn out to be harmless objects. However, he quickly pivots to the current AI landscape, asserting, "But make no mistake: what we are dealing with is a real and mysterious creature, not a simple and predictable machine." This metaphor is crucial. It elevates AI beyond a deterministic piece of software to something with emergent, unpredictable qualities that require a more cautious, almost reverent, approach. For founders and VCs, this framing suggests that the risks associated with frontier AI models are fundamentally different from those of conventional software development, necessitating extensive investment in safety and alignment research—a core tenet of Anthropic’s mission. The implication is that simply "mastering" AI as one would any other technology may be insufficient, or even dangerous.

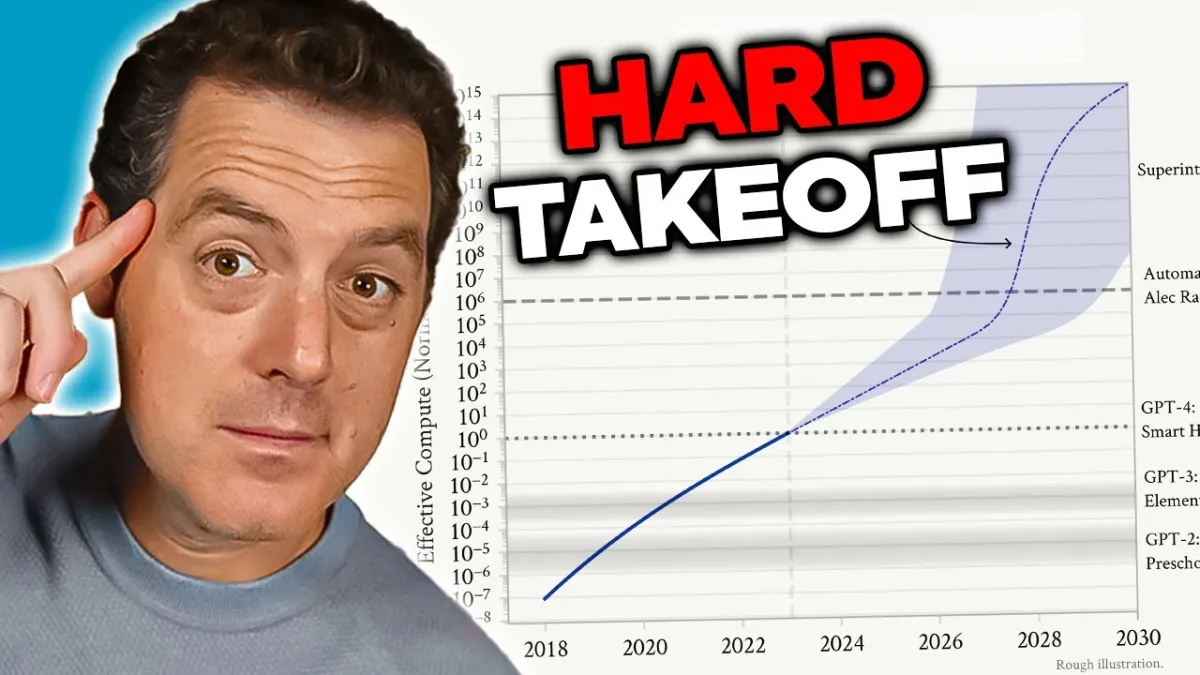

The distinction between AI as a creature and AI as a machine directly informs the debate over "hard takeoff" versus "iterative deployment." Clark’s apprehension stems from the possibility of an "intelligence explosion," in which AI rapidly and abruptly self-improves to superintelligence. Such a "hard takeoff" would leave human institutions and societal structures little to no time to adapt, potentially leading to catastrophic misalignment. This fear drives the imperative for proactive, stringent safety measures, even if they appear to slow down development.

Sam Altman, CEO of OpenAI, champions a contrasting philosophy: "iterative deployment is, imo, the only safe path and the only way for people, society, and institutions to have time to update and internalize what this all means." This approach advocates for releasing AI models incrementally, allowing society to gradually adapt to their capabilities and addressing issues as they arise. Altman’s vision is one of continuous learning and adaptation, where small, controlled deployments provide feedback loops that enhance both safety and utility. This strategy implicitly rejects the notion of an unavoidable, sudden leap to superintelligence, instead positing a more manageable, collaborative evolution of AI with human society. The difference in these perspectives is not merely academic; it dictates entirely different investment strategies, risk assessments, and product roadmaps for AI companies.

The discussion quickly veers into the political economy of AI, with prominent venture capitalist David Sacks accusing Anthropic of "running a sophisticated regulatory capture strategy based on fear-mongering." Sacks contends that by emphasizing existential risks and pushing for heavy regulation, Anthropic—a well-funded frontier AI lab—aims to create barriers to entry for smaller startups. He argues this stifles competition and innovation by imposing operational complexities that only established giants can absorb. Chamath Palihapitiya, another influential VC, echoed this sentiment, stating, "Only the biggest and well funded companies (like Anthropic) will be able to afford the operational complexity of meeting 50 different sets of state AI regulations. This should be left to the Federal Government."

This critique highlights a significant tension: while safety is paramount, the mechanisms chosen for regulation can inadvertently consolidate power among a few large players. A "patchwork" of conflicting state-level AI regulations, as discussed in the video, would create a molasses-like environment for startups, forcing them to navigate disparate compliance frameworks. A unified federal standard, conversely, could offer a clearer, albeit still potentially burdensome, pathway for innovation. For founders and investors, this regulatory landscape becomes a critical factor in strategic planning, influencing everything from market entry to funding rounds.

The recent passage of California's SB 53 and SB 243 exemplifies the immediate impact of state-level legislation. SB 53, the "Transparency in Frontier Artificial Intelligence Act," applies to "large frontier developers" (frontier developers with more than $500 million in annual gross revenue) and requires them to publish AI safety frameworks and deployment review processes and to report critical safety incidents. While these requirements may seem reasonable in isolation, the operational burden of such detailed compliance becomes immense if it is replicated across 50 states. Similarly, SB 243, the "Companion Chatbot Safeguards" bill, defines "companion chatbots" as those simulating human-like interaction and imposes requirements such as clear user notification of AI interaction and protocols for detecting self-harm content.

These regulations, though well-intentioned, underscore the challenge of implementing uniform safety standards without stifling AI development at this nascent stage. The debate is less about whether AI should be regulated than about *how* and *by whom* it should be regulated to ensure both safety and a competitive, innovative ecosystem. The contrasting philosophies of major AI players and their allies illuminate the high stakes involved, shaping not only the future of artificial intelligence but also the economic and social structures it will inevitably transform.