OpenAI today launched a research preview of GPT‑5.3‑Codex‑Spark, a smaller version of its GPT‑5.3‑Codex model. It is the company's first model designed specifically for real-time coding, and it marks a significant step in OpenAI's partnership with Cerebras, announced earlier this year.

Codex‑Spark is built for speed: it is optimized to respond almost instantly when served on ultra-low-latency hardware, and it generates more than 1,000 tokens per second. That throughput matters for interactive coding, where developers expect immediate feedback.
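To make the throughput figure concrete, the short Python sketch below computes how long a response of a given size takes to stream at a steady 1,000 tokens per second. It is illustrative arithmetic only: the `stream_seconds` helper and the edit sizes are hypothetical, and the calculation ignores time-to-first-token and network overhead, so real responses take somewhat longer.

```python
def stream_seconds(num_tokens: int, tokens_per_second: float = 1000.0) -> float:
    """Seconds to stream num_tokens at a steady decode rate (hypothetical helper).

    Ignores time-to-first-token and network overhead, so this is a lower bound.
    """
    return num_tokens / tokens_per_second


if __name__ == "__main__":
    # Hypothetical output sizes: a one-line fix, a function rewrite, a larger refactor.
    for size in (50, 200, 1000):
        print(f"{size:>5} tokens -> {stream_seconds(size):.2f} s")
```

At that rate, a 200-token edit streams in roughly 0.2 seconds, which is why decode speed dominates how responsive a quick edit feels.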

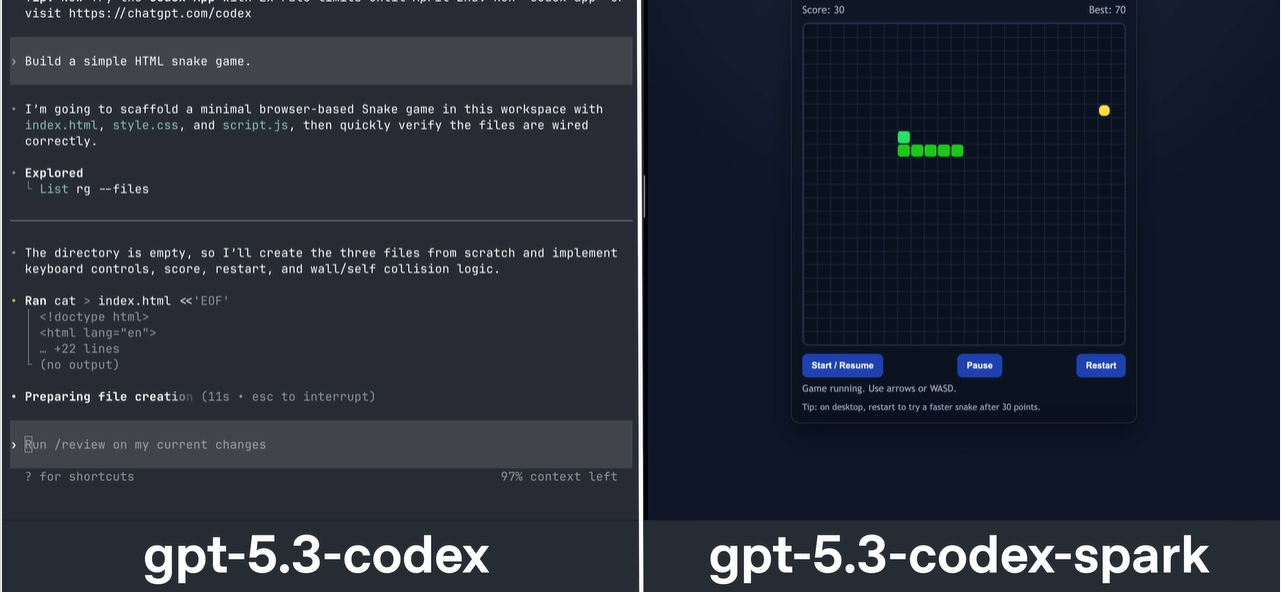

A New Mode for Codex

OpenAI's larger frontier models excel at complex, long-running autonomous tasks, while Codex‑Spark targets immediate, interactive coding. Developers can use it to make quick edits, refactor logic, or adjust interfaces and see the results almost immediately.

This dual capability means Codex now supports both ambitious, multi-day projects and rapid, in-the-moment development. OpenAI plans to gather developer feedback to refine the model and expand access.

The research preview offers a 128k context window and accepts text only. During the preview, usage is subject to separate rate limits, and requests may be queued during periods of peak demand to keep the service reliable.

Speed Meets Intelligence

Codex‑Spark prioritizes low latency for interactive coding sessions. Developers can collaborate with the model in real time, redirecting its work and iterating rapidly. By default it works lightly: it makes minimal, targeted edits and does not run tests automatically.

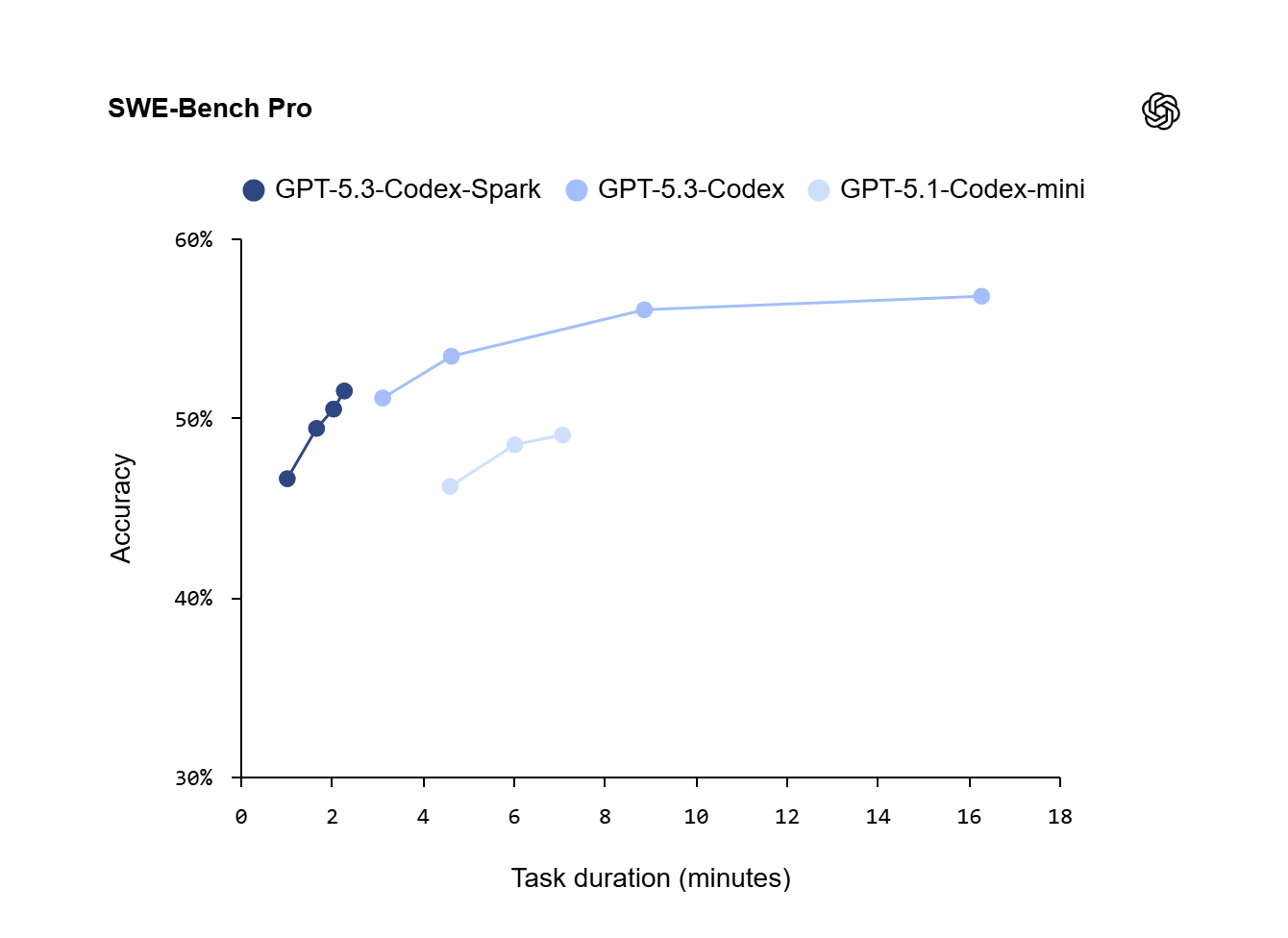

Performance Benchmarks

On SWE‑Bench Pro and Terminal‑Bench 2.0, two benchmarks that evaluate software engineering capability, GPT‑5.3‑Codex‑Spark performs strongly while completing tasks in a fraction of the time the larger GPT‑5.3‑Codex takes.

The model's speed comes from both model optimization and improvements to the underlying infrastructure. OpenAI reduced end-to-end latency across the whole response pipeline: it streamlined how responses stream between client and server, rewrote key components of the inference stack, and reworked session initialization so the first token appears sooner.

Together, these changes cut client/server roundtrip overhead by 80% and time-to-first-token by 50%. They are enabled by a persistent WebSocket connection, which OpenAI says will soon become the standard for all models.
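A rough model helps show why a persistent connection matters in a rapid back-and-forth session. The Python sketch below compares paying a connection-setup cost on every turn with paying it once for a connection that stays open, then applies the reported 80% and 50% reductions to baseline values. The function names and every millisecond figure are hypothetical, chosen only for illustration; they are not OpenAI measurements or APIs.

```python
# Reductions reported in the article.
ROUNDTRIP_OVERHEAD_CUT = 0.80  # 80% less client/server roundtrip overhead
TTFT_CUT = 0.50                # 50% less time-to-first-token


def after_cut(value_ms: float, cut: float) -> float:
    """Apply a fractional reduction (0.80 means 80% lower) to a latency value."""
    return value_ms * (1.0 - cut)


def session_setup_ms(turns: int, setup_ms: float, persistent: bool) -> float:
    """Total connection-setup cost over an interactive session (hypothetical model).

    Opening a fresh connection each turn pays setup_ms every time; a persistent
    connection (such as a WebSocket kept open) pays it only once.
    """
    connections_opened = 1 if persistent else turns
    return connections_opened * setup_ms


if __name__ == "__main__":
    # Hypothetical baseline values, chosen only for illustration.
    turns, setup_ms = 20, 150.0
    baseline_overhead_ms, baseline_ttft_ms = 100.0, 400.0

    print("setup, new connection per turn:", session_setup_ms(turns, setup_ms, persistent=False), "ms")
    print("setup, persistent connection:  ", session_setup_ms(turns, setup_ms, persistent=True), "ms")
    print(f"roundtrip overhead: {baseline_overhead_ms:.0f} -> "
          f"{after_cut(baseline_overhead_ms, ROUNDTRIP_OVERHEAD_CUT):.0f} ms")
    print(f"time-to-first-token: {baseline_ttft_ms:.0f} -> "
          f"{after_cut(baseline_ttft_ms, TTFT_CUT):.0f} ms")
```

With these made-up numbers, 20 turns on fresh connections spend 3,000 ms on setup versus 150 ms on one persistent connection. The savings compound as the number of turns grows, which is exactly the usage pattern of interactive coding.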

Powered by Cerebras

Codex‑Spark runs on Cerebras' Wafer Scale Engine 3, an AI accelerator designed for high-speed inference. This hardware provides a latency-first serving tier for Codex, complementing OpenAI's existing GPU infrastructure.

This partnership integrates Cerebras' low-latency capabilities into OpenAI's production serving stack. As Sean Lie, CTO and Co-Founder of Cerebras, stated, "What excites us most about GPT‑5.3‑Codex‑Spark is partnering with OpenAI and the developer community to discover what fast inference makes possible."

GPUs remain foundational for broad usage because of their cost-effectiveness, while Cerebras hardware excels in workflows that demand very low latency. The two can also be combined within a single workload to get the best performance for specific tasks.

Availability and Future Plans

GPT‑5.3‑Codex‑Spark is currently available as a research preview for ChatGPT Pro users via the Codex app, CLI, and VS Code extension. Separate rate limits apply due to the specialized hardware.

OpenAI is also providing API access to a select group of design partners and will broaden access as it refines the integration under real-world workloads. The model is text-only with a 128k context window, but future iterations will include larger models, longer contexts, and multimodal capabilities.

Codex‑Spark includes the same safety training as OpenAI's mainline models, and OpenAI's evaluations indicate that it does not pose a significant risk in the cybersecurity or biology domains.

The Future of Coding Assistants

Codex‑Spark represents a shift towards a two-mode Codex experience: long-horizon reasoning and real-time collaboration. OpenAI envisions these modes eventually blending, allowing users to engage in interactive loops while background agents handle complex tasks.

As AI models grow more powerful, interaction speed becomes paramount. Ultra-fast inference, as demonstrated by Codex‑Spark, promises a more natural and powerful development experience, accelerating the creation of software.