Meta has unveiled Segment Anything Model 3 (SAM 3), a unified foundational model that finally brings robust, open-vocabulary language understanding to visual segmentation and tracking.

The original Segment Anything Model (SAM) was a watershed moment for computer vision, allowing users to instantly mask any object in an image using simple visual prompts like points or bounding boxes. With SAM 3, Meta is pushing the technology toward true multimodal understanding: a unified system for detection, segmentation, and tracking that responds directly to text prompts.

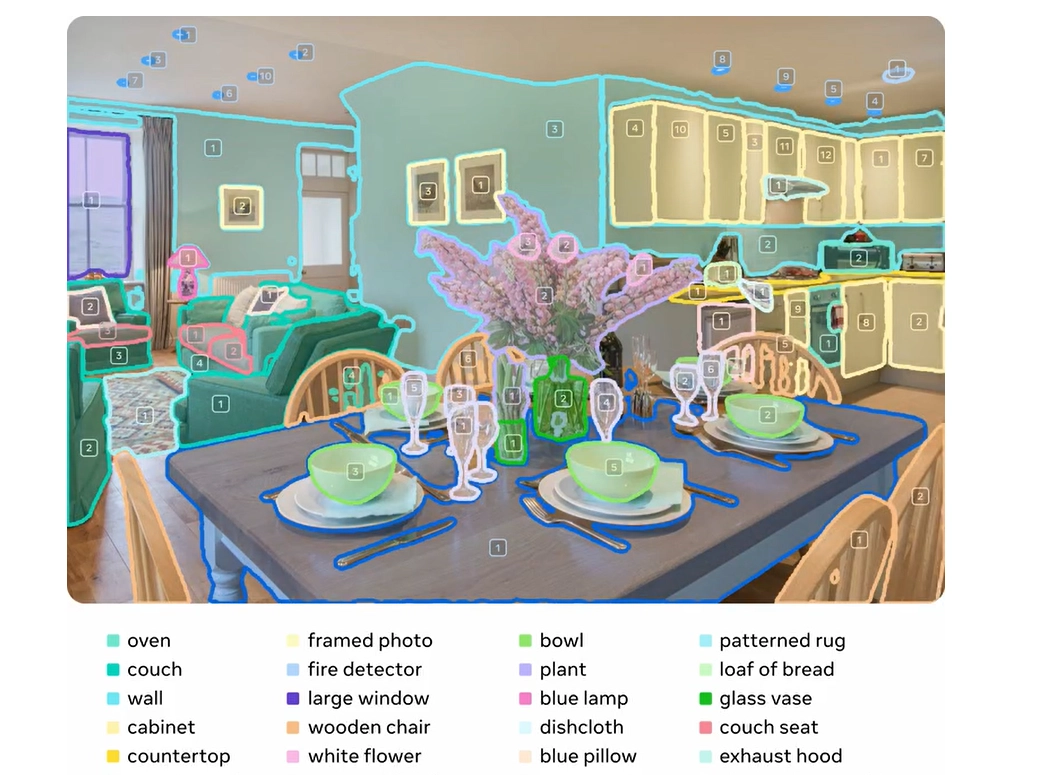

This release is arguably the most significant advancement in the SAM lineage since its inception. SAM 3 addresses the core limitation of its predecessors: they could not handle open-vocabulary concepts defined by language. Earlier systems could segment a "person," but struggled with more nuanced requests such as "the striped red umbrella." SAM 3 tackles this with promptable concept segmentation: given a short noun phrase or a set of image exemplars, it detects and segments every instance of that concept, rather than a single object per prompt. Even more compositional requests, such as "people sitting down, but not holding a gift box in their hands," come within reach when SAM 3 is paired with a multimodal large language model, as discussed below.

The results are substantial. According to Meta, SAM 3 delivers a 2x gain over existing systems on the new Segment Anything with Concepts (SA-Co) benchmark, outperforming both foundation models such as Gemini 2.5 Pro and specialist baselines such as OWLv2. Meta attributes this leap to two ingredients: the Meta Perception Encoder, on which SAM 3 is built, and a hybrid data engine in which Llama-based AI annotators work alongside human reviewers, substantially speeding up the creation of high-quality training data.

From Research to Consumer Apps

Unlike many foundational models that remain locked in the lab, Meta is immediately integrating SAM 3 across its consumer ecosystem, signaling a major shift in how users will interact with media creation tools.

For creators, SAM 3 will soon power new effects in Edits, Instagram's video creation app. Users will be able to apply dynamic effects to specific people or objects in a video with a single tap, replacing what was previously a complex, frame-by-frame editing workflow. Similar creation experiences are also coming to Vibes on the Meta AI app and to meta.ai on the web, enabling advanced visual remixing.

The utility extends beyond creative media. Meta is simultaneously launching SAM 3D, a suite of models focused on 3D object and human reconstruction from a single image. Both SAM 3 and SAM 3D are powering the new View in Room feature on Facebook Marketplace. This feature allows users to visualize home decor items, like a lamp or a table, accurately placed and scaled within their own physical spaces before purchase—a crucial step toward grounded, real-world AI applications.

To make this technology immediately accessible, Meta is also debuting the Segment Anything Playground. This platform allows anyone, regardless of technical expertise, to upload media and experiment with SAM 3's capabilities, offering templates for practical tasks like pixelating faces or license plates, as well as fun video edits like adding motion trails or spotlight effects. The move echoes Meta's approach with Llama, aiming for rapid community adoption and feedback.

The central architectural achievement of SAM 3 is its ability to unify disparate tasks. The model integrates detection, segmentation, and tracking without the task conflicts that typically plague unified models. For instance, tracking requires features that distinguish one instance from another, while concept detection requires features that generalize across all instances of a concept. Meta reports that SAM 3 strikes this balance while sustaining near real-time performance when tracking approximately five concurrent objects on an H200 GPU.

Meta is also making a significant contribution to open science, releasing the SAM 3 model weights, fine-tuning code, and the SA-Co benchmark. Meta also collaborated with conservation groups to release SA-FARI, a public dataset of more than 10,000 camera trap videos annotated using SAM 3, aimed at accelerating wildlife monitoring and conservation efforts.

While SAM 3 represents a significant leap, Meta acknowledges its current limitations. The model still struggles with highly complex, long phrases—such as "the second to last book from the right on the top shelf"—or highly specialized, fine-grained concepts like "platelet" in medical imagery. However, Meta notes that when SAM 3 is paired with a multimodal large language model (MLLM), it can successfully tackle complex reasoning queries, demonstrating its potential as a powerful perception tool for future AI agents.
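To make that division of labor concrete, here is a minimal Python sketch under loudly stated assumptions. It is not Meta's code and does not use any SAM 3 API. The idea: instead of sending the long phrase to SAM 3, a reasoning layer asks for the simple concept "book," receives every book instance, and then resolves "the second to last book from the right on the top shelf" itself. The `Detection` format, the `resolve_book_query` function, the `row_tolerance` parameter, and the toy box coordinates are all hypothetical, and the hand-written logic merely stands in for the spatial reasoning an MLLM would perform. The phrase is also ambiguous, so the sketch commits to one literal reading.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Detection:
    """One instance returned for a simple concept prompt such as "book".

    Hypothetical format: a box (x0, y0, x1, y1) in pixel coordinates,
    with y increasing downward, is all this sketch needs.
    """
    instance_id: int
    box: tuple[float, float, float, float]


def center(box: tuple[float, float, float, float]) -> tuple[float, float]:
    """Return the (x, y) center of a box."""
    x0, y0, x1, y1 = box
    return (x0 + x1) / 2, (y0 + y1) / 2


def resolve_book_query(
    books: list[Detection], row_tolerance: float = 40.0
) -> Optional[Detection]:
    """Hypothetical stand-in for an MLLM's reasoning over "book" detections,
    resolving "the second to last book from the right on the top shelf"."""
    if not books:
        return None
    # "Top shelf": books whose vertical center lies within row_tolerance
    # pixels of the highest center (smallest y, since y grows downward).
    top_y = min(center(b.box)[1] for b in books)
    top_shelf = [b for b in books if center(b.box)[1] - top_y <= row_tolerance]
    # Literal reading: list the top-shelf books starting from the right,
    # then take the second-to-last entry of that list.
    right_to_left = sorted(top_shelf, key=lambda b: center(b.box)[0], reverse=True)
    return right_to_left[-2] if len(right_to_left) >= 2 else None


if __name__ == "__main__":
    # Toy detections: four books on the top shelf, one on a lower shelf.
    books = [
        Detection(1, (0, 10, 50, 110)),
        Detection(2, (60, 10, 110, 110)),
        Detection(3, (120, 10, 170, 110)),
        Detection(4, (180, 10, 230, 110)),
        Detection(5, (0, 200, 50, 300)),
    ]
    print(resolve_book_query(books).instance_id)  # prints 2
```

The point of the split is that the segmentation model only ever sees a short noun phrase it handles well, while the ordinal and spatial logic ("top shelf," "second to last," "from the right") lives in the reasoning layer, where an ambiguous reading can at least be made explicit.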

The release of Segment Anything Model 3 solidifies Meta’s position at the forefront of foundational vision research. By unifying language, segmentation, and tracking, and immediately integrating these capabilities into consumer products, Meta is setting a new standard for how AI understands and modifies the visual world.