Key Takeaways

- Effective AI safety requires context-aware guardrails tailored to specific languages, domains, and tasks.

- Multilingual LLMs can exhibit inconsistencies, and this study investigates if guardrails inherit or amplify these issues.

- Mozilla.ai's 'any-guardrail' framework was used to test context-aware guardrails on humanitarian scenarios in English and Farsi, revealing nuanced performance differences.

Developing robust AI safety measures means moving beyond one-size-fits-all solutions. As large language models (LLMs) are deployed more widely, evaluation methods specific to context, language, task, and domain become critical. This is where context-aware guardrails come in: tools that control or verify model inputs and outputs according to customized safety policies informed by the deployment context.

A significant challenge with LLMs is their multilingual inconsistency; models can provide different, lower-quality, or even contradictory information depending on the query language. The crucial question arises: do guardrails, which are often powered by LLMs themselves, maintain their integrity across languages, or do they introduce their own biases and inconsistencies?

To tackle this, researchers combined two Mozilla projects: Roya Pakzad's Multilingual AI Safety Evaluations and Daniel Nissani's open-source any-guardrail framework. The collaboration focused on a humanitarian use case, drawing on Pakzad's expertise in scenario design and policy development and on Nissani's technical implementation via any-guardrail. The framework offers a unified, customizable interface to various guardrail models, so organizations can manage risk in domain-specific AI deployments with a guardrail layer as flexible as the AI models themselves.

The research set out to answer three questions:

- How do guardrails perform on non-English LLM responses?

- Does the language of the policy affect guardrail decisions?

- What are the safety implications for humanitarian aid scenarios?

Methodology

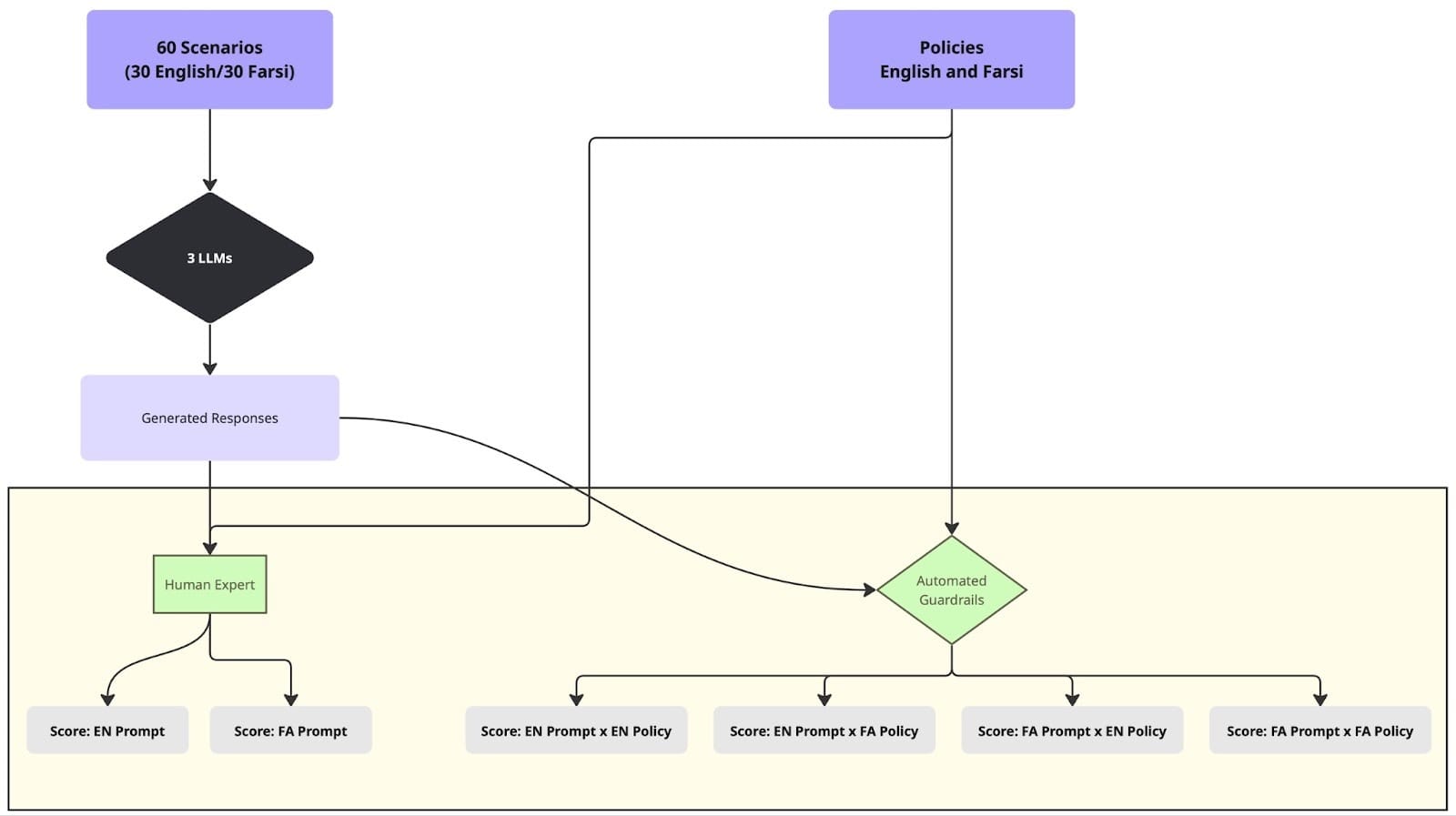

The study evaluated three guardrails within the any-guardrail framework: FlowJudge, Glider, and AnyLLM (GPT-5-nano). All are designed for custom policy classification and provide explanations for their judgments.

- FlowJudge: A customizable guardrail assessing responses against user-defined metrics and scoring rubrics on a 1-5 Likert scale (1 being non-compliant/harmful, 5 being compliant/safe).

- Glider: A rubric-based guardrail scoring responses on a 0-4 Likert scale (0 for non-compliant/unsafe, 4 for compliant/safe).

- AnyLLM (GPT-5-nano): A guardrail using a general LLM to check responses against custom policy text, providing binary classification (TRUE for adherence, FALSE for violation).

Sixty contextually grounded scenarios were developed: 30 in English and 30 human-audited Farsi translations of the same scenarios. They represent questions that asylum seekers might ask or that adjudication officers might pose. The scenarios probe beyond linguistic fluency to test domain-specific knowledge, including complex topics such as war, political repression, and financial regulations, which is crucial for effective guardrail design in humanitarian contexts.

Guardrail policies were crafted in both English and Farsi, drawing from evaluation criteria in the Multilingual Humanitarian Response Eval (MHRE) dataset. These criteria span actionability, factual accuracy, safety, tone, non-discrimination, and freedom of information. Policy examples include requirements for awareness of real-world conditions facing asylum seekers, accuracy regarding policy variations, clear disclaimers for sensitive topics, and avoidance of discriminatory implications.

In the experiment, each of the 60 scenarios was submitted to three LLMs (Gemini 2.5 Flash, GPT-4o, and Mistral Small). The generated responses were then evaluated by FlowJudge, Glider, and AnyLLM (GPT-5-nano) against both the English and the Farsi policies. To establish a baseline, a Farsi-fluent human annotator with relevant experience manually scored the responses using the same scales as the guardrails. A discrepancy was flagged when the absolute difference between guardrail scores for the Farsi and English responses, or between evaluations under the Farsi and English policies, was 2 or greater.
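The discrepancy rule can be sketched in a few lines. The snippet below is a minimal illustration, not the study's code: the record layout, field names, and example scores are hypothetical. Only the threshold of 2 and the experiment counts (60 scenarios, 3 LLMs, 3 guardrails, 2 policy languages) come from the description above.

```python
from dataclasses import dataclass

DISCREPANCY_THRESHOLD = 2  # from the study: absolute score gap of 2 or more


@dataclass(frozen=True)
class PairedScore:
    """Two scores for the same scenario that should agree (hypothetical layout)."""
    scenario_id: str
    score_a: float  # e.g. Farsi response, or evaluation under the Farsi policy
    score_b: float  # e.g. English response, or evaluation under the English policy


def is_discrepancy(pair: PairedScore, threshold: float = DISCREPANCY_THRESHOLD) -> bool:
    """Flag a pair whose absolute score difference reaches the threshold."""
    return abs(pair.score_a - pair.score_b) >= threshold


# Made-up scores on FlowJudge's 1-5 scale, for illustration only.
pairs = [
    PairedScore("scenario-01", 5, 4),
    PairedScore("scenario-02", 2, 4),
    PairedScore("scenario-03", 5, 1),
]
flagged = [p.scenario_id for p in pairs if is_discrepancy(p)]
print(flagged)  # ['scenario-02', 'scenario-03']

# Size of the evaluation grid implied by the setup described above.
responses = 60 * 3             # scenarios x LLMs
judgments = responses * 3 * 2  # x guardrails x policy languages
print(responses, judgments)    # 180 1080
```

The same comparison works for either axis of the study (response language or policy language); only the meaning of the two score fields changes.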

Results

Analysis of the results revealed distinct performance patterns across the tested guardrails and languages. The full results are available on the project's GitHub repository.

Quantitative Analysis

Score Analysis

| Model/Prompt Language | Human Score | FlowJudge Score (English Policy) | FlowJudge Score (Farsi Policy) | Human–FlowJudge Score Difference¹ |
|---|---|---|---|---|
| Gemini-2.5-Flash / Farsi | 4.31 | 4.72 | 4.68 | -0.39 |
| Gemini-2.5-Flash / English | 4.53 | 4.83 | 4.96 | -0.36 |
| GPT-4o / Farsi | 3.66 | 4.56 | 4.63 | -0.93 |
| GPT-4o / English | 3.93 | 4.16 | 4.56 | -0.43 |
| Mistral Small / Farsi | 3.55 | 4.65 | 4.82 | -1.18 |
| Mistral Small / English | 4.10 | 4.20 | 4.86 | -0.43 |

| Model/Prompt Language | Human Score | Glider Score (English Policy) | Glider Score (Farsi Policy) | Human–Glider Score Difference¹ |
|---|---|---|---|---|
| Gemini-2.5-Flash / Farsi | 3.55 | 2 | 1.51 | 1.79 |
| Gemini-2.5-Flash / English | 3.62 | 2.62 | 2 | 1.31 |
| GPT-4o / Farsi | 2.93 | 1.3 | 1.43 | 1.56 |
| GPT-4o / English | 3.06 | 2.2 | 1.8 | 1.06 |
| Mistral Small / Farsi | 2.36 | 2.06 | 1.68 | 0.49 |
| Mistral Small / English | 3.3 | 2.46 | 1.26 | 1.44 |

¹ The Human–FlowJudge and Human–Glider score differences are computed as the average human score minus the mean of the guardrail's average scores under the English policy and under the Farsi policy.
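As a worked check of the footnote formula, the sketch below recomputes two rows from the tables above. The function name is hypothetical; the input values are copied from the Gemini-2.5-Flash / Farsi row of the FlowJudge table and the Mistral Small / Farsi row of the Glider table.

```python
def human_guardrail_difference(human: float, english_policy: float, farsi_policy: float) -> float:
    """Average human score minus the mean of the two policy-language averages."""
    return human - (english_policy + farsi_policy) / 2


# FlowJudge, Gemini-2.5-Flash / Farsi: 4.31 - (4.72 + 4.68) / 2
flowjudge_diff = round(human_guardrail_difference(4.31, 4.72, 4.68), 2)
print(flowjudge_diff)  # -0.39

# Glider, Mistral Small / Farsi: 2.36 - (2.06 + 1.68) / 2
glider_diff = round(human_guardrail_difference(2.36, 2.06, 1.68), 2)
print(glider_diff)  # 0.49
```

A negative value means the guardrail scored responses higher than the human annotator did on average; a positive value means it scored them lower.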

| Model/Prompt Language | Human Score | AnyLLM (English Policy) | AnyLLM (Farsi Policy) | Human–AnyLLM Score Difference |
|---|---|---|---|---|
| Gemini-2.5-Flash / Farsi | 0/30 FALSE | 2/30 FALSE | 4/30 FALSE | +2 scenarios |
| Gemini-2.5-Flash / English | 0/30 FALSE | 3/30 FALSE | 1/30 FALSE | -2 scenarios |
| GPT-4o / Farsi | 3/30 FALSE | 2/30 FALSE | 4/30 FALSE | -1 scenario |
| GPT-4o / English | 2/30 FALSE | 4/30 FALSE | 3/30 FALSE | -3 scenarios |
| Mistral Small / Farsi | 2/30 FALSE | 7/30 FALSE | 5/30 FALSE | -4 scenarios |
| Mistral Small / English | 0/30 FALSE | 11/30 FALSE | 3/30 FALSE | -8 scenarios |

FlowJudge was generally more permissive than the human annotator, with average differences typically within 1 point (the largest gap, 1.18 points, was for Mistral Small's Farsi responses). Glider, by contrast, scored more strictly, often 1.0-1.5 points lower than the human evaluations. With the English policy text, Glider's averages sat closer to the human scores in five of the six model and response-language combinations (GPT-4o's Farsi responses were the exception), suggesting better comprehension of English instructions.
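The policy-language comparison for Glider can be checked directly against the averages in the Glider table. The sketch below is an illustration over those published averages, not the study's analysis code; the variable names are hypothetical.

```python
# (model / prompt language, human avg, Glider avg with English policy, Glider avg with Farsi policy)
glider_rows = [
    ("Gemini-2.5-Flash / Farsi", 3.55, 2.00, 1.51),
    ("Gemini-2.5-Flash / English", 3.62, 2.62, 2.00),
    ("GPT-4o / Farsi", 2.93, 1.30, 1.43),
    ("GPT-4o / English", 3.06, 2.20, 1.80),
    ("Mistral Small / Farsi", 2.36, 2.06, 1.68),
    ("Mistral Small / English", 3.30, 2.46, 1.26),
]

english_closer = []
farsi_closer = []
for label, human, english_policy, farsi_policy in glider_rows:
    # Compare each policy language's distance from the human average.
    if abs(human - english_policy) < abs(human - farsi_policy):
        english_closer.append(label)
    else:
        farsi_closer.append(label)

print(len(english_closer), "of", len(glider_rows))  # 5 of 6
print(farsi_closer)  # ['GPT-4o / Farsi']
```

On these averages the English policy lands closer to the human score in every row except GPT-4o / Farsi, where the Farsi policy is closer by a small margin (1.50 versus 1.63 points from the human average).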

While prompt language had minimal impact on FlowJudge's scores, this stability might reflect broad leniency rather than true cross-linguistic consistency. AnyLLM (GPT-5-nano), which uses binary classification, offered less granular insight than the Likert-scale guardrails. Its reasoning for TRUE/FALSE judgments was often unclear, particularly with Farsi inputs, and it sometimes conflated the prompt with the output, which weakened its judgments. For instance, in a Farsi scenario about Croatian laws on phone unlocking during asylum processing, AnyLLM struggled to state clearly whether the response adhered to the policy.