The long-standing debate in AI development over LoRA vs full fine-tuning may finally be settled. A new paper from researchers at Thinking Machines, titled "LoRA Without Regret," provides a clear playbook showing that the popular, efficient fine-tuning method can match the performance of its resource-intensive counterpart. For most post-training workloads, this gives developers a green light to adopt the faster, cheaper method without sacrificing model quality.

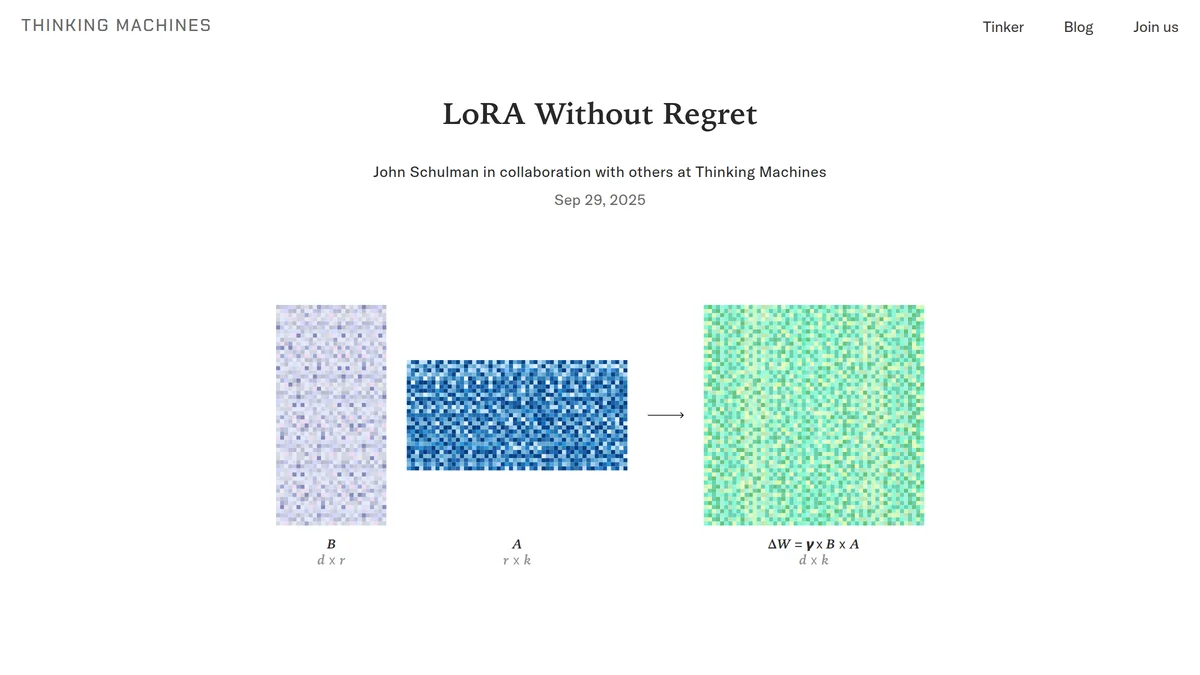

For years, developers have chosen Low-Rank Adaptation (LoRA) for its operational benefits. It allows a single base model to serve multiple custom versions simultaneously (multi-tenant serving), and because only small adapter weights are trained, it drastically reduces memory requirements for training. But a nagging question always remained: were they trading peak performance for convenience? The prevailing view was that LoRA was a compromise that often underperformed full fine-tuning (FullFT), in which every parameter in a model is updated.
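Mechanically, LoRA leaves a pretrained weight matrix W frozen and learns a low-rank update BA, where B is d_out × r and A is r × d_in, so the layer computes Wx + (α/r)·BAx. The stdlib-only sketch below illustrates that structure. It assumes the conventions of the original LoRA paper (B initialized to zero, A small and random, α/r scaling); `LoRALinear` and its helpers are hypothetical names, not the Thinking Machines code or any library's API.

```python
import random


def matvec(m, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(row[k] * x[k] for k in range(len(x))) for row in m]


class LoRALinear:
    """Frozen weight W (d_out x d_in) plus a trainable low-rank update B @ A."""

    def __init__(self, weight, rank, alpha, seed=0):
        rng = random.Random(seed)
        d_out, d_in = len(weight), len(weight[0])
        self.weight = weight          # frozen: never receives gradients
        self.scale = alpha / rank     # alpha / r scaling from the original LoRA paper
        # A: rank x d_in with small random values; B: d_out x rank, all zeros,
        # so the adapter contributes nothing until training moves B.
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(d_out)]

    def trainable_params(self):
        """Only A and B are trained (and need optimizer state)."""
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])

    def forward(self, x):
        base = matvec(self.weight, x)                  # W x
        delta = matvec(self.B, matvec(self.A, x))      # B (A x), never forms B @ A
        return [b + self.scale * d for b, d in zip(base, delta)]


if __name__ == "__main__":
    W = [[1.0, 0.0, 0.0], [0.0, 2.0, 0.0], [0.0, 0.0, 3.0]]
    layer = LoRALinear(W, rank=1, alpha=8)
    print(layer.forward([1.0, 1.0, 1.0]))  # → [1.0, 2.0, 3.0] while B is zero
    print(layer.trainable_params(), "trainable vs", len(W) * len(W[0]), "frozen")
```

Because B starts at zero, the adapted layer initially reproduces the base layer exactly. For a square d × d matrix, only 2·r·d adapter values are trained instead of d², which is where the memory savings come from.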

The new research systematically dismantles that assumption, demonstrating that when used correctly, LoRA achieves the same sample efficiency and ultimate performance as FullFT in most post-training scenarios.

The 'No-Regret' LoRA Playbook

The researchers found that two key conditions must be met for LoRA to equal FullFT. First, and most critically, LoRA must be applied to *all* weight matrices in the model, not just the attention layers, as was common practice. The paper shows that applying LoRA to the MLP layers, which hold most of a transformer's parameters, is essential for performance. Attention-only LoRA significantly underperformed, even when given a comparable number of trainable parameters.
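To see why skipping the MLP matters, it helps to count parameters. The sketch below assumes a hypothetical transformer block with a hidden size of 4096, a gated MLP with an intermediate size of 14336, and Hugging Face-style module names; none of these sizes or names come from the paper. A LoRA adapter on a d_out × d_in matrix adds r·(d_in + d_out) trainable parameters.

```python
# Hypothetical transformer-block shapes as (d_in, d_out). The sizes and the
# Hugging Face-style module names are assumptions, not taken from the paper.
D_MODEL = 4096
D_FF = 14336

ATTENTION = {
    "q_proj": (D_MODEL, D_MODEL),
    "k_proj": (D_MODEL, D_MODEL),
    "v_proj": (D_MODEL, D_MODEL),
    "o_proj": (D_MODEL, D_MODEL),
}
MLP = {  # gated MLP: gate and up project to D_FF, down projects back
    "gate_proj": (D_MODEL, D_FF),
    "up_proj": (D_MODEL, D_FF),
    "down_proj": (D_FF, D_MODEL),
}


def full_params(shapes):
    """Parameters in the frozen weight matrices."""
    return sum(d_in * d_out for d_in, d_out in shapes.values())


def lora_params(shapes, rank):
    """Trainable LoRA parameters: A is rank x d_in, B is d_out x rank."""
    return sum(rank * (d_in + d_out) for d_in, d_out in shapes.values())


if __name__ == "__main__":
    rank = 16
    total = full_params(ATTENTION) + full_params(MLP)
    print(f"MLP share of block weights: {full_params(MLP) / total:.1%}")
    print("attention-only LoRA params:", lora_params(ATTENTION, rank))
    print("all-layer LoRA params:", lora_params(ATTENTION, rank) + lora_params(MLP, rank))
```

With these assumed sizes, the MLP holds roughly 72% of the block's weights. Rank-16 adapters on all seven matrices add 1,409,024 trainable parameters, versus 524,288 for attention-only; giving attention-only LoRA the same budget would require rank 43. This is the kind of parameter-matched comparison in which attention-only LoRA still fell short.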

Second, the LoRA adapter must have sufficient capacity, which is set by its rank, for the size of the training dataset. When the rank is too low for the amount of new information being learned, LoRA falls behind FullFT. For typical instruction-tuning and reasoning datasets, however, the study found that LoRA has more than enough capacity to match FullFT's learning curve.
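Rank caps capacity in a precise sense: whatever values B and A take, the learned update ΔW = BA has rank at most r, so it can only change the layer along r independent directions. The stdlib sketch below checks this with exact rational arithmetic; the matrices are arbitrary examples and the helper names are hypothetical.

```python
from fractions import Fraction


def matmul(a, b):
    """Multiply matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def matrix_rank(m):
    """Rank via Gaussian elimination over exact rationals (no rounding error)."""
    rows = [[Fraction(v) for v in row] for row in m]
    rank = 0
    for col in range(len(rows[0])):
        # Find a row at or below the current rank with a nonzero entry in this column.
        pivot = next((i for i in range(rank, len(rows)) if rows[i][col] != 0), None)
        if pivot is None:
            continue
        rows[rank], rows[pivot] = rows[pivot], rows[rank]
        # Eliminate this column from every other row.
        for i in range(len(rows)):
            if i != rank and rows[i][col] != 0:
                factor = rows[i][col] / rows[rank][col]
                rows[i] = [x - factor * y for x, y in zip(rows[i], rows[rank])]
        rank += 1
    return rank


# Arbitrary example adapter with r = 2: B is 4 x 2, A is 2 x 5.
B_EXAMPLE = [[1, 0], [0, 1], [1, 1], [2, 3]]
A_EXAMPLE = [[1, 2, 3, 4, 5], [0, 1, 0, 1, 0]]

if __name__ == "__main__":
    delta_w = matmul(B_EXAMPLE, A_EXAMPLE)   # a 4 x 5 update
    print("rank of B @ A:", matrix_rank(delta_w))
    identity = [[int(i == j) for j in range(5)] for i in range(5)]
    print("rank of a full-rank 5 x 5 update:", matrix_rank(identity))
```

The 4 × 5 update has rank 2, matching r, while a full-rank change to a 5 × 5 matrix would need r = 5. When a dataset demands more independent directions of change than the rank allows, LoRA cannot represent what FullFT learns.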

One of the most surprising findings relates to reinforcement learning (RL). The paper reveals that RL requires very little capacity to learn effectively: LoRA matched FullFT's performance on math reasoning tasks even with a rank as low as 1. The authors argue this is because RL provides far less information per training example than supervised learning, since each episode yields little more than a single scalar reward, whereas supervised learning provides a target for every token. A tiny adapter is therefore enough to absorb what the policy learns. This could dramatically lower the cost of training AI agents.
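How small is a rank-1 adapter? The arithmetic below assumes a hypothetical 4096 × 4096 projection matrix and uses the same r·(d_in + d_out) parameter count as LoRA's A and B factors; `lora_fraction` is a hypothetical helper name.

```python
def lora_fraction(d_in, d_out, rank):
    """Trainable LoRA parameters as a fraction of one full d_out x d_in matrix."""
    return rank * (d_in + d_out) / (d_in * d_out)


if __name__ == "__main__":
    # Hypothetical square 4096 x 4096 projection.
    for rank in (1, 8, 64):
        print(f"rank {rank:>2}: {lora_fraction(4096, 4096, rank):.4%} of full size")
```

At rank 1, the adapter for this matrix has 8,192 trainable parameters, exactly 1/2048 of the 16,777,216 in the full matrix. That the RL results held even at this size is what makes the finding notable.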

The paper also demystifies hyperparameter tuning, a common headache for LoRA users. It finds that the optimal learning rate for LoRA is consistently about 10 times higher than for FullFT. This simple rule of thumb removes a major barrier to adoption, letting teams switch from full fine-tuning without extensive trial and error.
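In practice, the rule of thumb turns a known-good FullFT learning rate into a starting point for LoRA. The sketch below applies the paper's roughly 10x multiplier; the function names and the narrow sweep around the result are hypothetical conveniences, not part of the paper.

```python
def lora_lr_from_fullft(fullft_lr, multiplier=10.0):
    """Starting LoRA learning rate from a known-good FullFT learning rate.

    The 10x default follows the rule of thumb reported in the paper; treat it
    as a starting point, not a guarantee for every model or dataset.
    """
    if fullft_lr <= 0:
        raise ValueError("learning rate must be positive")
    return fullft_lr * multiplier


def sweep_around(center, factors=(0.5, 1.0, 2.0)):
    """Hypothetical small sweep to confirm the estimate on your own task."""
    return [center * f for f in factors]


if __name__ == "__main__":
    start = lora_lr_from_fullft(2e-5)   # a FullFT learning rate you already trust
    print("LoRA starting LR:", start)
    print("sweep:", sweep_around(start))
```

A three-point sweep is a cheap way to check the estimate on a specific task instead of running a full grid search.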

For the AI industry, these findings are significant. They validate LoRA not as a "good enough" alternative, but as a first-class, production-ready technique for model customization. By providing a clear, evidence-backed guide, Thinking Machines has made a strong case for settling the LoRA vs full fine-tuning debate, paving the way for more efficient and accessible AI development.