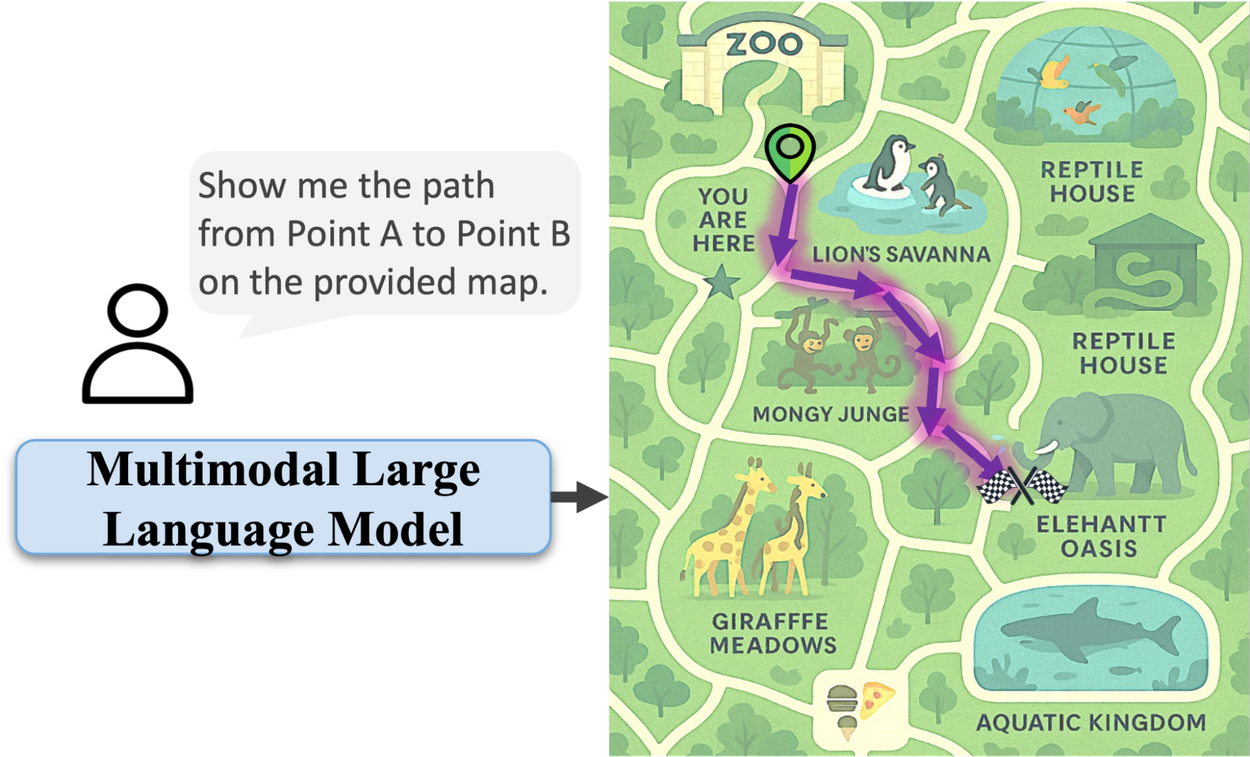

For all their impressive advances, AI models often falter when it comes to a seemingly simple human task: reading a map. While they can identify objects in an image, understanding the geometric and topological relationships needed to navigate from point A to point B remains a significant hurdle. This gap highlights a core limitation: AI excels at recognition but struggles with spatial reasoning.

The Map Navigation Challenge

Multimodal large language models (MLLMs) can identify a zoo, but tracing a path within it often proves difficult. They might draw lines through enclosures or gift shops, failing to grasp environmental constraints. This isn't a failure of vision, but a lack of understanding of how spaces connect and how movement is constrained.

The root cause is a data deficit. MLLMs learn from vast datasets, but these rarely contain explicit examples of navigation rules—that paths must be connected, that walls are impassable, or that routes are ordered sequences. Manually annotating millions of paths with pixel-level accuracy is impractical, and proprietary map data is often inaccessible for research.
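To make those three rules concrete, here is a minimal sketch of a route checker on a toy grid map. Everything in it is an assumption made for illustration: the grid encoding (`.` for walkable, `#` for wall), the `is_valid_route` name, and the 4-neighbour definition of "connected". It is not taken from the researchers' code.

```python
from typing import List, Tuple

Cell = Tuple[int, int]  # (row, col) on a toy grid; hypothetical encoding

# Toy map: '.' is walkable, '#' is a wall or enclosure (assumed encoding).
TOY_MAP = [
    "..#.",
    ".##.",
    "....",
]


def is_valid_route(grid: List[str], route: List[Cell]) -> bool:
    """Check the three rules: ordered sequence, no walls, no jumps."""
    if not route:
        return False
    rows, cols = len(grid), len(grid[0])
    # Rule 1: every cell must be on the map and walkable.
    for r, c in route:
        if not (0 <= r < rows and 0 <= c < cols):
            return False
        if grid[r][c] == "#":
            return False
    # Rule 2: consecutive cells must be direct neighbours (connected path).
    for (r1, c1), (r2, c2) in zip(route, route[1:]):
        if abs(r1 - r2) + abs(c1 - c2) != 1:
            return False
    return True
```

On this toy map, a straight line along the top row fails because it crosses the wall at column 2, while the longer detour along the bottom row passes. That is the kind of constraint a model has to internalize before it can trace a usable route.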

MapTrace: A Synthetic Solution

Google researchers propose closing this gap with synthetic data generation. Their MapTrace system automates the creation of maps and annotated routes, circumventing the need for real-world data collection. This pipeline allows for fine-grained control over data diversity and complexity, ensuring generated paths adhere to intended routes and respect environmental boundaries.

The four-stage pipeline uses AI models extensively:

- Map Generation: LLMs create detailed prompts for diverse map types (e.g., shopping malls, theme parks), which are then rendered into images by text-to-image models.

- Path Identification: An AI "Mask Critic" analyzes candidate paths generated by pixel clustering, verifying they represent realistic, connected walkable areas.

- Graph Construction: Traversable areas are converted into a navigable graph, mapping intersections and paths computationally.

- Path Validation: An AI "Path Critic" reviews algorithmically generated shortest paths and keeps only those that are logical and human-like.

This process yielded a dataset of 2 million annotated map images. Some generated images still contain minor text-rendering errors, but the dataset's focus is path fidelity.
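The graph-construction and shortest-path steps can be sketched in a few lines. This is only an illustration under stated assumptions: the walkable mask is a tiny character grid rather than a segmented image, every walkable cell becomes a graph node with 4-neighbour edges, and breadth-first search stands in for whatever shortest-path algorithm the pipeline actually uses. The names `build_graph` and `shortest_path` are hypothetical.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]  # (row, col)

# Assumed toy mask: '.' marks a walkable cell, anything else is blocked.
TOY_MASK = [
    "..#.",
    ".##.",
    "....",
]


def build_graph(mask: List[str]) -> Dict[Cell, List[Cell]]:
    """Turn walkable cells into nodes and link 4-adjacent walkable cells."""
    graph: Dict[Cell, List[Cell]] = {}
    for r, row in enumerate(mask):
        for c, ch in enumerate(row):
            if ch != ".":
                continue
            neighbours = []
            for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                nr, nc = r + dr, c + dc
                in_bounds = 0 <= nr < len(mask) and 0 <= nc < len(mask[nr])
                if in_bounds and mask[nr][nc] == ".":
                    neighbours.append((nr, nc))
            graph[(r, c)] = neighbours
    return graph


def shortest_path(
    graph: Dict[Cell, List[Cell]], start: Cell, goal: Cell
) -> Optional[List[Cell]]:
    """Breadth-first search; returns the ordered route or None if unreachable."""
    if start not in graph or goal not in graph:
        return None
    parent: Dict[Cell, Optional[Cell]] = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            path: List[Cell] = []
            current: Optional[Cell] = node
            while current is not None:  # walk parents back to the start
                path.append(current)
                current = parent[current]
            return path[::-1]
        for nxt in graph[node]:
            if nxt not in parent:
                parent[nxt] = node
                queue.append(nxt)
    return None
```

Calling `shortest_path(build_graph(TOY_MASK), (0, 0), (0, 3))` returns a route that detours around the blocked cells instead of cutting through them. In the pipeline described above, routes like this would then go to the Path Critic for review.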

Proven Results

Fine-tuning MLLMs, including Gemma 3 27B and Gemini 2.5 Flash, on a subset of this synthetic data significantly improved their performance on the MapBench benchmark. Normalized dynamic time warping (NDTW), which measures path-tracing error (lower is better), fell substantially, with Gemini 2.5 Flash dropping from 1.29 to 0.87. Crucially, the success rate (the percentage of outputs that are valid, parsable paths) also increased across models, demonstrating greater reliability.
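For readers unfamiliar with the metric, here is a rough sketch of a DTW-based path error, assuming NDTW stands for normalized dynamic time warping. The normalization is a guess: this article does not say how MapBench scales the score, so dividing the DTW cost by the number of reference points is only one plausible choice. The coordinate units are also assumed, and the function names are hypothetical.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]  # (x, y) in whatever units the benchmark uses


def dtw_cost(pred: Sequence[Point], ref: Sequence[Point]) -> float:
    """Classic dynamic time warping cost with Euclidean point distance."""
    n, m = len(pred), len(ref)
    if n == 0 or m == 0:
        raise ValueError("both paths must contain at least one point")
    # cost[i][j] = best alignment cost of pred[:i] against ref[:j]
    cost = [[math.inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = math.dist(pred[i - 1], ref[j - 1])
            cost[i][j] = d + min(
                cost[i - 1][j],      # pred advances, ref stays
                cost[i][j - 1],      # ref advances, pred stays
                cost[i - 1][j - 1],  # both advance
            )
    return cost[n][m]


def normalized_dtw(pred: Sequence[Point], ref: Sequence[Point]) -> float:
    """Assumed normalization: DTW cost per reference point (lower is better)."""
    return dtw_cost(pred, ref) / len(ref)
```

A predicted path identical to the reference scores 0, and the score grows as the prediction drifts away from the reference or visits points in a different order. That matches the article's reading of NDTW as an error measure.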

These gains validate the core hypothesis: spatial reasoning is teachable. Through targeted, synthetically generated data, AI can acquire the skills to interpret and navigate complex spatial layouts, a crucial step for more intuitive navigation tools, advanced robotics, and enhanced accessibility applications.