Serving large language models like gpt-oss is costly, especially when they are designed to generate lengthy reasoning traces to produce better answers. Researchers now have an answer: gpt-oss-puzzle-88B, a derivative of gpt-oss-120B optimized for inference efficiency.

Cutting Down the Fat

The core challenge is balancing answer quality, which often requires more tokens, with the escalating costs of serving those tokens. The team behind gpt-oss-puzzle-88B applied a post-training neural architecture search framework called Puzzle to achieve this balance.
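Puzzle's actual search procedure is not described here, so the following is only a toy illustration of the general idea behind post-training architecture search: for each layer, pick one variant from a library of cheaper alternatives so that total cost fits a budget while estimated quality loss stays as small as possible. The block library, cost units, loss estimates and the `search_architecture` function are all hypothetical, and brute-force enumeration stands in for whatever optimizer Puzzle really uses.

```python
from itertools import product

# Hypothetical block library: for each layer, candidate variants as
# (name, relative_cost, estimated_quality_loss). Costs are integer units so the
# budget comparison is exact; every number here is made up for illustration.
CANDIDATES = [
    [("full_attn", 10, 0.00), ("window_attn", 6, 0.02)],
    [("full_attn", 10, 0.00), ("window_attn", 6, 0.05)],
    [("moe_all_experts", 10, 0.00), ("moe_75pct_experts", 8, 0.01), ("moe_50pct_experts", 6, 0.04)],
]


def search_architecture(candidates, cost_budget):
    """Pick one variant per layer, minimizing total quality loss within cost_budget.

    Brute-force enumeration, only practical for tiny examples like this one.
    Returns (variant_names, total_cost, total_loss), or None if nothing fits.
    """
    best = None
    for choice in product(*candidates):
        cost = sum(variant[1] for variant in choice)
        loss = sum(variant[2] for variant in choice)
        if cost <= cost_budget and (best is None or loss < best[2]):
            best = (tuple(variant[0] for variant in choice), cost, loss)
    return best


if __name__ == "__main__":
    names, cost, loss = search_architecture(CANDIDATES, cost_budget=24)
    print(names, cost, round(loss, 3))
```

With a budget of 24 units, the cheapest-loss fit under these made-up numbers switches the first layer to window attention and trims the MoE layer to 75% of its experts, leaving the more sensitive second attention layer untouched.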

Their approach combined several techniques. The first is heterogeneous Mixture-of-Experts (MoE) expert pruning, which removes the less important experts from the MoE layers; "heterogeneous" means that the pruned layers do not all have to keep the same number of experts.
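To make "heterogeneous" concrete, here is a minimal sketch assuming each expert already has an importance score (how such scores are obtained is not covered here, and the `heterogeneous_prune` helper is hypothetical). Ranking experts globally rather than per layer lets layers whose experts matter more keep more of them. This greedy rule is a simplified stand-in, not the method used for gpt-oss-puzzle-88B.

```python
def heterogeneous_prune(scores, keep_total, min_per_layer=1):
    """Choose which experts to keep in each MoE layer under a global budget.

    scores[layer][expert] is a hypothetical importance score (higher = keep).
    Every layer keeps at least `min_per_layer` experts; the rest of the budget
    goes to the highest-scoring experts across all layers, so layers can end up
    with different expert counts.
    """
    # Reserve each layer's top `min_per_layer` experts.
    kept = {
        layer: set(sorted(range(len(s)), key=lambda e: s[e], reverse=True)[:min_per_layer])
        for layer, s in enumerate(scores)
    }
    remaining = max(keep_total - sum(len(k) for k in kept.values()), 0)
    # Rank all unreserved experts globally and fill the remaining budget.
    pool = sorted(
        ((s[e], layer, e) for layer, s in enumerate(scores) for e in range(len(s)) if e not in kept[layer]),
        reverse=True,
    )
    for _, layer, e in pool[:remaining]:
        kept[layer].add(e)
    return {layer: sorted(k) for layer, k in kept.items()}


if __name__ == "__main__":
    scores = [
        [0.9, 0.8, 0.7, 0.6],     # layer 0: importance spread across experts
        [0.95, 0.1, 0.05, 0.02],  # layer 1: one dominant expert
    ]
    print(heterogeneous_prune(scores, keep_total=5))
```

With a budget of five experts, layer 0 keeps all four of its experts while layer 1 keeps only its dominant one, which is the uneven allocation a uniform per-layer cut would miss.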

The team also selectively replaced full-context attention layers with cheaper window attention, which is particularly beneficial for long-context reasoning because a window-attention layer only caches a fixed number of recent tokens instead of the whole context. FP8 KV-cache quantization further reduced memory usage, and post-training reinforcement learning was used to recover accuracy.
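A back-of-the-envelope calculation shows why both changes shrink memory. The sketch below estimates per-sequence KV-cache size; the layer counts, head sizes, 128-token window and 64K-token context are hypothetical values chosen for illustration, not the actual configuration of gpt-oss-120B or gpt-oss-puzzle-88B.

```python
def kv_cache_bytes(seq_len, n_full_layers, n_window_layers, window, n_kv_heads, head_dim, bytes_per_elem):
    """Approximate per-sequence KV-cache size in bytes (keys + values only)."""
    # Each layer stores one key and one value vector per KV head per cached token.
    per_token_per_layer = 2 * n_kv_heads * head_dim * bytes_per_elem
    # Full-context layers cache every token in the sequence.
    full = n_full_layers * seq_len * per_token_per_layer
    # Window layers only need the most recent `window` tokens.
    windowed = n_window_layers * min(seq_len, window) * per_token_per_layer
    return full + windowed


if __name__ == "__main__":
    # Hypothetical 36-layer model with 8 KV heads of dimension 64 at a 64K-token context.
    shape = dict(seq_len=65_536, window=128, n_kv_heads=8, head_dim=64)
    baseline = kv_cache_bytes(n_full_layers=18, n_window_layers=18, bytes_per_elem=2, **shape)  # BF16
    variant = kv_cache_bytes(n_full_layers=9, n_window_layers=27, bytes_per_elem=1, **shape)  # FP8
    print(f"baseline: {baseline / 2**30:.2f} GiB, variant: {variant / 2**30:.2f} GiB")
```

With these assumed numbers the baseline needs about 2.25 GiB per sequence and the variant about 0.57 GiB, roughly a quarter: halving the number of full-context layers and halving the bytes per cached element each cut the dominant term in half.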

Tangible Speedups

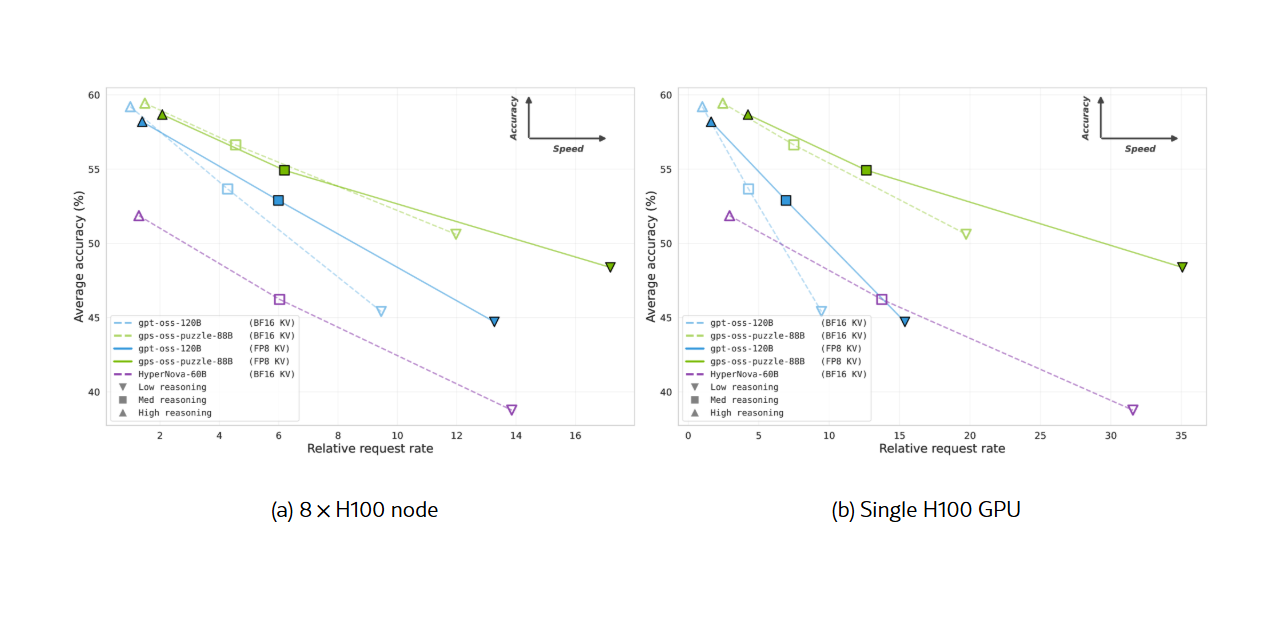

The gains are substantial. On an 8x H100 node, gpt-oss-puzzle-88B delivers a 1.63x throughput speedup over gpt-oss-120B in long-context settings and 1.22x in short-context settings. On a single NVIDIA H100 GPU, the speedup reaches 2.82x.
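One way to read these figures is as a reduction in serving cost per token. The sketch below assumes the hardware costs the same per hour no matter which model it serves, so cost per generated token scales with the inverse of throughput; that assumption and the `relative_cost_per_token` helper are illustrative, not part of the reported results.

```python
# Assumption: the hardware costs the same per hour regardless of which model it
# serves, so cost per generated token scales with 1 / throughput.
SPEEDUPS = {
    "8x H100, long context": 1.63,
    "8x H100, short context": 1.22,
    "1x H100": 2.82,
}


def relative_cost_per_token(speedup):
    """Cost per token relative to the baseline model, given a throughput speedup."""
    return 1.0 / speedup


if __name__ == "__main__":
    for setting, speedup in SPEEDUPS.items():
        print(f"{setting}: {relative_cost_per_token(speedup):.0%} of baseline cost per token")
```

Under that assumption, the 1.63x long-context speedup works out to about 61% of the baseline cost per token, the 1.22x short-context speedup to about 82%, and the single-GPU 2.82x to about 35%.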

Crucially, these speedups do not come at the cost of accuracy. Across the benchmarks evaluated, gpt-oss-puzzle-88B matches or slightly exceeds the parent model's accuracy, and it keeps the parent's ability to trade cost for quality by adjusting reasoning effort. Users can therefore take the efficiency gains as faster, cheaper responses at the same quality, or spend them on higher reasoning effort for better answers.