The AI coding assistant race has a dirty little secret: the smartest models are often painfully slow. While tools like Devin can run marathon coding sessions, the simple first step of finding the right files in a codebase can take minutes, shattering a developer's focus. Cognition AI, the company behind Devin, is now tackling this "Semi-Async Valley of Death" (the stretch where an agent is too slow to keep a developer in flow, yet too quick to hand off and walk away from) head-on with a new approach it calls Fast Context Retrieval.

In a blog post published today, the company introduced SWE-grep and SWE-grep-mini, a pair of specialized models built for a single task: finding relevant code, fast. The models power Fast Context, a new subagent in Cognition's Windsurf IDE. Cognition claims Fast Context can match the retrieval accuracy of frontier models in a tenth of the time.

This isn't just about making things a little quicker. Cognition argues that the initial context-gathering phase can consume over 60% of an agent's first turn. This delay, they say, is where developers lose their "flow state," that elusive zone of deep concentration. Their stated goal is to keep agent interactions under a five-second "flow window."

The company is moving away from the two dominant methods for code search. The first, embedding-based RAG, is fast but can be inaccurate for complex queries. The second, traditional agentic search, is more flexible but gets bogged down in dozens of slow, sequential tool calls.

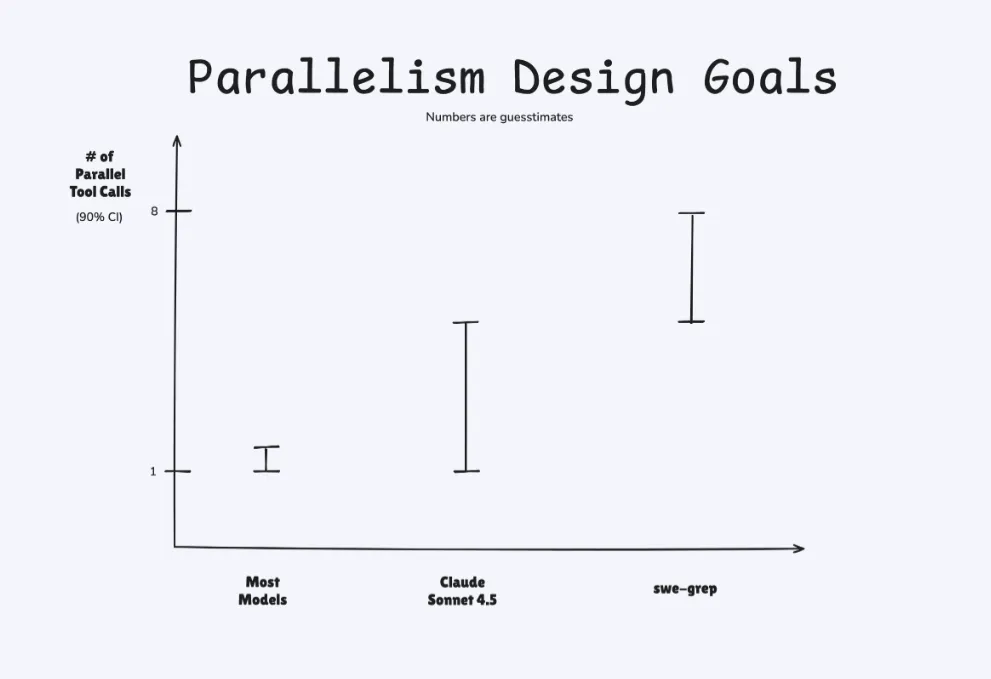

Cognition's solution is a brute-force application of parallelism. Instead of making one tool call at a time, SWE-grep is trained with reinforcement learning (RL) to issue up to eight search calls (such as `grep` and `glob`) in parallel within a single turn. Because the process is capped at four of these highly parallel turns, the agent can explore several paths through a codebase at once while keeping the number of slow, sequential round trips small, which dramatically cuts latency.
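A minimal sketch of the bounded, parallel search loop described above, assuming a toy in-memory repository and two hypothetical tools: `grep` (a regex over file contents) and `glob` (a shell-style pattern over paths). The hand-written `toy_planner` is a stand-in for the RL-trained policy; in SWE-grep the model itself decides which calls to make. Only the two caps (eight calls per turn, four turns) come from Cognition's description.

```python
import fnmatch
import re
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

MAX_PARALLEL_CALLS = 8  # per-turn cap Cognition reports for SWE-grep
MAX_TURNS = 4           # turn cap Cognition reports for SWE-grep

Repo = dict[str, str]        # toy in-memory codebase: path -> file contents
ToolCall = tuple[str, str]   # hypothetical (tool name, argument) pair
Planner = Callable[[int, set[str]], list[ToolCall]]


def run_tool(repo: Repo, call: ToolCall) -> set[str]:
    """Execute one hypothetical search tool and return the matching file paths."""
    tool, arg = call
    if tool == "grep":  # regex over file contents
        pattern = re.compile(arg)
        return {path for path, text in repo.items() if pattern.search(text)}
    if tool == "glob":  # shell-style pattern over file paths
        return {path for path in repo if fnmatch.fnmatch(path, arg)}
    raise ValueError(f"unknown tool: {tool}")


def parallel_search(repo: Repo, plan_turn: Planner) -> set[str]:
    """Run at most MAX_TURNS turns, each issuing up to MAX_PARALLEL_CALLS tool calls at once."""
    found: set[str] = set()
    with ThreadPoolExecutor(max_workers=MAX_PARALLEL_CALLS) as pool:
        for turn in range(MAX_TURNS):
            calls = plan_turn(turn, found)[:MAX_PARALLEL_CALLS]
            if not calls:  # the planner has decided it has enough context
                break
            # Every call in this turn runs concurrently; results are merged once all finish.
            for paths in pool.map(lambda call: run_tool(repo, call), calls):
                found |= paths
    return found


# Toy usage: a hand-written planner standing in for the RL-trained policy.
repo: Repo = {
    "src/auth/login.py": "def login(user):\n    return check_password(user)\n",
    "src/auth/password.py": "def check_password(user):\n    ...\n",
    "src/ui/button.ts": "export const Button = () => null;\n",
    "README.md": "Run the app with `make run`.\n",
}


def toy_planner(turn: int, found: set[str]) -> list[ToolCall]:
    if turn == 0:  # fan out: search by symbol and by directory at the same time
        return [("grep", r"def login"), ("glob", "src/auth/*.py")]
    if turn == 1:  # hard-coded follow-up on check_password, which login.py calls
        return [("grep", r"def check_password")]
    return []      # stop early; the remaining turns are never used


if __name__ == "__main__":
    print(sorted(parallel_search(repo, toy_planner)))
```

The structure is the point: wall-clock time grows with the number of turns, not the number of tool calls, so eight searches issued together cost roughly one round trip. Running the script prints `['src/auth/login.py', 'src/auth/password.py']`.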

The Specialized Agent Playbook

The real trick here is specialization. Instead of using a single, massive model for everything, Cognition is delegating the "grunt work" of searching to a smaller, faster, purpose-built agent. This saves the context window and "intelligence" of the main coding agent for the actual task of writing and editing code, preventing what they call "context pollution" from irrelevant search results.
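A minimal sketch of this delegation pattern, assuming the search subagent hands back only file paths with line ranges rather than its raw search output (the article doesn't describe Fast Context's actual hand-off format). `Span`, `search_subagent`, and `main_agent_context` are hypothetical names, and the "subagent" here is a plain regex scan rather than a model.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Span:
    """A file region the search subagent judged relevant (1-based, inclusive)."""
    path: str
    start: int
    end: int


def search_subagent(repo: dict[str, str], pattern: str, window: int = 1) -> list[Span]:
    """Hypothetical subagent: scans the repo and returns merged line ranges around matches.

    Everything it reads along the way stays in its own context; only the spans are returned.
    """
    regex = re.compile(pattern)
    spans: list[Span] = []
    for path, text in sorted(repo.items()):
        lines = text.splitlines()
        for i, line in enumerate(lines, start=1):
            if not regex.search(line):
                continue
            start, end = max(1, i - window), min(len(lines), i + window)
            last = spans[-1] if spans else None
            if last and last.path == path and start <= last.end + 1:
                # Overlaps or touches the previous span in the same file: merge them.
                spans[-1] = Span(path, last.start, max(last.end, end))
            else:
                spans.append(Span(path, start, end))
    return spans


def main_agent_context(repo: dict[str, str], spans: list[Span]) -> str:
    """Build the only code the (hypothetical) main coding agent gets to see."""
    parts = []
    for s in spans:
        lines = repo[s.path].splitlines()[s.start - 1 : s.end]
        parts.append(f"# {s.path}:{s.start}-{s.end}\n" + "\n".join(lines))
    return "\n\n".join(parts)


demo_repo = {
    "app/db.py": "import sqlite3\n\ndef connect():\n    return sqlite3.connect('app.db')\n\ndef close(conn):\n    conn.close()\n",
    "app/views.py": "from app.db import connect\n\ndef index():\n    conn = connect()\n    return 'ok'\n",
    "docs/notes.md": "Nothing about databases here.\n" * 50,  # noise the main agent never sees
}

if __name__ == "__main__":
    print(main_agent_context(demo_repo, search_subagent(demo_repo, r"\bconnect\(")))
```

In the toy repository, the 50-line notes file never reaches the main agent, and the two adjacent matches in `app/db.py` collapse into one span. Keeping that noise out of the main agent's window is the "context pollution" problem the specialized subagent is meant to avoid.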

To achieve this speed, Cognition combined three key elements. First, they used a modified RL policy gradient algorithm to train the SWE-grep models to make effective use of this high degree of parallelism. Second, they optimized the underlying tool calls for speed. Finally, they partnered with inference provider Cerebras to serve the models at blistering speeds: they claim over 2,800 tokens per second for SWE-grep-mini, which they state is 20 times faster than Anthropic's Haiku.
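To see why raw throughput matters for the five-second flow window, here is a back-of-envelope latency model. Only the 2,800 tokens-per-second figure and the 20x ratio come from Cognition's claims (the roughly 140 tokens per second for Haiku is simply 2,800 / 20). The 300 output tokens and 0.1 s of tool time per turn are made-up numbers, `search_latency` is a hypothetical helper, and the model ignores prefill, time to first token, and network overhead.

```python
FLOW_WINDOW_S = 5.0                  # Cognition's stated target for an agent interaction
SWE_GREP_MINI_TPS = 2800.0           # claimed decode throughput on Cerebras (tokens/s)
HAIKU_TPS = SWE_GREP_MINI_TPS / 20   # implied by the "20x faster" claim: 140 tokens/s


def search_latency(tokens_per_turn: int, tps: float, tool_s_per_turn: float, turns: int = 4) -> float:
    """Rough wall-clock estimate: decode time plus tool time, summed over sequential turns.

    Assumes each turn's parallel tool calls finish in tool_s_per_turn seconds, and
    ignores prefill, time to first token, and network overhead.
    """
    return turns * (tokens_per_turn / tps + tool_s_per_turn)


if __name__ == "__main__":
    # Hypothetical workload: 300 output tokens and 0.1 s of tool execution per turn.
    for name, tps in [("SWE-grep-mini", SWE_GREP_MINI_TPS), ("Haiku (implied)", HAIKU_TPS)]:
        t = search_latency(300, tps, 0.1)
        print(f"{name}: {t:.2f} s, within flow window: {t <= FLOW_WINDOW_S}")
```

Under these assumptions the four-turn search takes about 0.83 s at 2,800 tokens per second versus about 8.97 s at the implied Haiku rate, which is the difference between staying inside the flow window and blowing well past it.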

According to their internal benchmarks on a dataset called the Cognition CodeSearch Eval, SWE-grep is an order of magnitude faster than unnamed "frontier models" while maintaining comparable accuracy. They also show that integrating Fast Context into their larger Cascade agent reduces end-to-end time on difficult SWE-bench tasks. To back up these claims, the company has launched a public playground where users can pit a standalone Fast Context agent against stock versions of Claude Code and the Cursor CLI.
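The article doesn't spell out how the Cognition CodeSearch Eval scores "comparable accuracy." As an assumption, the sketch below grades a retrieval agent on the set of files it returns, using precision, recall, and an F-beta score; `f_beta` and the file sets are hypothetical, and a β below 1 weights precision more heavily than recall.

```python
def f_beta(retrieved: set[str], relevant: set[str], beta: float = 1.0) -> float:
    """F-beta score of a retrieved file set against a ground-truth set.

    beta < 1 favors precision (irrelevant files cost more); beta > 1 favors recall.
    """
    hits = len(retrieved & relevant)
    if hits == 0:
        return 0.0  # also covers empty retrieved or relevant sets
    precision = hits / len(retrieved)
    recall = hits / len(relevant)
    b2 = beta * beta
    return (1 + b2) * precision * recall / (b2 * precision + recall)


if __name__ == "__main__":
    relevant = {"a.py", "b.py"}
    broad = {"a.py", "b.py", "c.py", "d.py"}  # finds everything, plus two irrelevant files
    narrow = {"a.py"}                          # finds half, with no noise
    for beta in (1.0, 0.5):
        print(beta, round(f_beta(broad, relevant, beta), 3), round(f_beta(narrow, relevant, beta), 3))
```

Both toy agents tie on F1 (2/3 each), but with β = 0.5 the narrow agent scores about 0.83 against about 0.56 for the broad one. Weighting precision this way fits the article's concern that irrelevant results pollute the main agent's context.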

This move signals a potential shift in the AI developer tool space. While the industry has been captivated by the long-term autonomy of agents like Devin, Cognition is making a significant investment in the moment-to-moment user experience. By creating a specialized subagent for fast context retrieval, they're acknowledging that for most daily tasks, speed isn't a feature; it's the entire product. The challenge for competitors will now be to prove that their monolithic, do-it-all agents can be both brilliant and fast enough to keep up.