Reinforcement learning (RL) is the engine powering the next generation of large language models, from DeepSeek-R1 to GPT-5, and it is now the primary method for refining an LLM’s complex reasoning skills after pretraining. But RL carries a fundamental flaw, long familiar from classic RL, that is now starting to cap LLM performance: exploration collapse.

When an LLM is trained on complex tasks like math or physics, the policy quickly settles on one reliable way to solve a problem, say, the quadratic formula, and then stops exploring alternatives that might be more elegant or robust, such as factoring. This premature convergence, also called policy collapse, makes models better at producing *one* correct answer (raising pass@1) but erodes their strategic diversity. That limits pass@k, the chance that at least one of k sampled attempts is correct, which is what matters when multiple attempts are allowed.

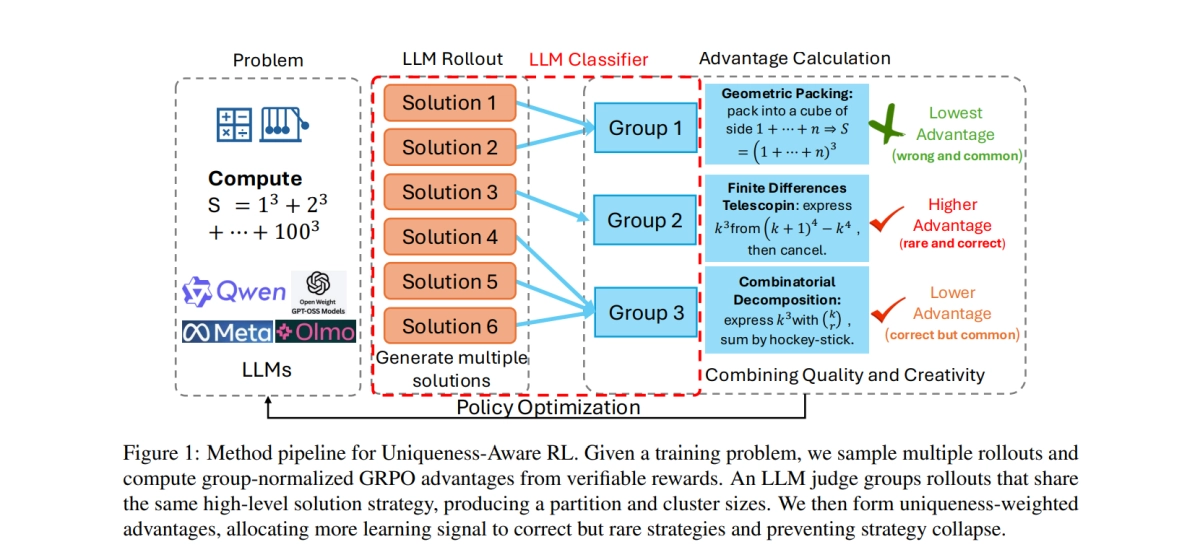
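To make the pass@1 versus pass@k distinction concrete, here is a minimal Python sketch of the unbiased pass@k estimator commonly used for code-generation benchmarks (introduced alongside OpenAI’s HumanEval): draw n samples, count the c correct ones, and estimate the probability that a random subset of k samples contains at least one correct answer. The article does not say exactly how the paper computes pass@k, so treat this as the standard definition, not the authors’ evaluation code.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate from n samples, c of which are correct.

    Computes 1 - C(n - c, k) / C(n, k): one minus the probability that a
    randomly chosen subset of k samples contains no correct answer.
    """
    if not 0 <= c <= n:
        raise ValueError("c must be between 0 and n")
    if not 1 <= k <= n:
        raise ValueError("k must be between 1 and n")
    if n - c < k:
        # Too few incorrect samples to fill a subset of size k,
        # so every subset contains at least one correct sample.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


if __name__ == "__main__":
    # Illustrative numbers only: 3 correct answers out of 10 samples.
    print(pass_at_k(10, 3, 1))  # pass@1
    print(pass_at_k(10, 3, 2))  # pass@2
```

With 3 correct answers out of 10 samples, pass@1 is 0.3 while pass@2 is already about 0.53. The estimator treats samples as independent draws, so a model whose attempts all repeat the same failing strategy gains little from a larger k.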

A new paper from researchers at MIT, NUS, Yale, and NTU proposes a solution: Uniqueness-Aware Reinforcement Learning. Instead of trying to fix diversity at the token level, which often just yields the same strategy expressed in different wording, Uniqueness-Aware RL targets diversity at the level of high-level solution strategies.

The core idea is simple: reward the rare.

How Uniqueness-Aware RL rewards creativity

Standard RL recipes for LLMs, such as Group Relative Policy Optimization (GRPO), sample several solutions (rollouts) for each problem, reward each rollout for correctness, and score it relative to the other rollouts in its group. Uniqueness-Aware RL adds a crucial intermediate step: an LLM-based judge.
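As a rough illustration of the group-relative step, the sketch below standardizes each rollout’s reward against the mean and standard deviation of its group, which is the core of GRPO’s advantage computation. It is a simplification that assumes binary correctness rewards and uses the population standard deviation; real implementations differ in details such as which standard deviation they use and how they guard against division by zero.

```python
from statistics import mean, pstdev


def grpo_advantages(rewards: list[float], eps: float = 1e-6) -> list[float]:
    """Group-relative advantages for the rollouts of a single problem.

    Each reward is centered on the group mean and scaled by the group's
    standard deviation; eps avoids division by zero when all rewards match.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


if __name__ == "__main__":
    # Three correct rollouts (reward 1) and one incorrect rollout (reward 0).
    print(grpo_advantages([1.0, 1.0, 1.0, 0.0]))
```

With rewards `[1, 1, 1, 0]`, each correct rollout gets an advantage of about 0.58 and the incorrect one about -1.73. All three correct rollouts are rewarded identically, even if they used exactly the same strategy, which is the gap the judge is meant to close.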

This judge is prompted to analyze all sampled rollouts for a given problem and cluster them by high-level solution strategy. It must ignore superficial differences, such as variable names or algebraic presentation, and focus on the core logical approach. For instance, if the model is solving $x^2 - 5x + 6 = 0$, the judge separates solutions that apply the quadratic formula from those that factor the polynomial as $(x - 2)(x - 3)$.
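The judging step could look something like the sketch below. Everything in it is hypothetical: the prompt wording, the `build_judge_prompt` and `parse_clusters` helpers, and the JSON reply format are illustrative choices rather than the paper’s actual prompt, and the call to the judge model itself is left out. The parser turns the judge’s groups into one cluster label per rollout and rejects replies that skip or double-count a rollout.

```python
import json

# Hypothetical judge prompt; the paper's real wording is not given here.
JUDGE_TEMPLATE = """You are grading solution strategies, not answers.
Problem:
{problem}

Below are {n} candidate solutions, numbered from 0.
Group them by high-level strategy. Ignore variable names, notation,
and presentation; two solutions belong together only if they use the
same core approach (e.g., quadratic formula vs. factoring).
Reply with JSON only: a list of groups, each a list of solution numbers.

{solutions}"""


def build_judge_prompt(problem: str, rollouts: list[str]) -> str:
    """Number each rollout and embed all of them in one judge prompt."""
    solutions = "\n\n".join(f"[{i}]\n{text}" for i, text in enumerate(rollouts))
    return JUDGE_TEMPLATE.format(problem=problem, n=len(rollouts), solutions=solutions)


def parse_clusters(reply: str, n: int) -> list[int]:
    """Map the judge's JSON groups to one cluster label per rollout."""
    groups = json.loads(reply)
    labels = [-1] * n
    for label, group in enumerate(groups):
        for idx in group:
            if not 0 <= idx < n or labels[idx] != -1:
                raise ValueError(f"invalid or duplicate rollout index {idx}")
            labels[idx] = label
    if -1 in labels:
        raise ValueError("judge left some rollouts unclustered")
    return labels
```

For example, a reply of `[[0, 2], [1], [3]]` over four rollouts yields the labels `[0, 1, 0, 2]`: rollouts 0 and 2 share a strategy, while 1 and 3 each stand alone.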

Once the strategies are clustered, the system calculates a uniqueness weight for each correct solution. A correct solution in a small cluster (a rare strategy) receives an amplified advantage during policy optimization, while one in a large cluster (a common, dominant strategy) has its advantage downweighted. Incorrect solutions are still penalized, regardless of how unique they are.
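A minimal sketch of the reweighting follows, assuming a weighting rule the article does not specify: each correct rollout gets a raw weight of 1 / size^alpha, where size is the number of correct rollouts sharing its strategy cluster and `alpha` is a hypothetical strength parameter. Raw weights are normalized to average 1 over the correct rollouts, so rare strategies end up above 1 (amplified) and common ones below 1 (downweighted), while incorrect rollouts keep their original negative advantage. The paper’s exact formula may differ.

```python
from collections import Counter


def uniqueness_weighted_advantages(
    advantages: list[float],
    correct: list[bool],
    clusters: list[int],
    alpha: float = 1.0,  # hypothetical knob for how strongly rarity is rewarded
) -> list[float]:
    """Rescale the advantages of correct rollouts by strategy rarity.

    Assumed rule: raw weight = 1 / size**alpha, where size counts the correct
    rollouts in the same strategy cluster. Raw weights are normalized to a
    mean of 1 over correct rollouts. Incorrect rollouts are left unchanged.
    """
    sizes = Counter(c for c, ok in zip(clusters, correct) if ok)
    raw = {i: 1.0 / sizes[clusters[i]] ** alpha for i, ok in enumerate(correct) if ok}
    if not raw:
        return list(advantages)  # no correct rollouts: nothing to reweight
    norm = sum(raw.values()) / len(raw)
    return [a * raw[i] / norm if i in raw else a for i, a in enumerate(advantages)]


if __name__ == "__main__":
    # Rollouts 0-2: correct via the quadratic formula (cluster 0).
    # Rollout 3: correct via factoring (cluster 1). Rollout 4: wrong.
    print(uniqueness_weighted_advantages(
        [0.5, 0.5, 0.5, 0.5, -2.0],
        [True, True, True, True, False],
        [0, 0, 0, 1, 2],
    ))
```

The starting values are the group-relative advantages for four correct rollouts and one wrong one (0.5 and -2.0). After reweighting, the lone factoring solution rises to 1.0, each quadratic-formula solution drops to about 0.33, and the wrong answer stays at -2.0.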

This mechanism explicitly incentivizes the policy to allocate probability mass across multiple distinct solution strategies, rather than concentrating all effort on the single most probable path.

Tested on challenging benchmarks in mathematics (AIME, HLE), physics (OlympiadBench), and medicine (MedCaseReasoning), Uniqueness-Aware RL consistently improves pass@k, especially at large sampling budgets (up to k=256). The approach also sustains higher policy entropy throughout training, a sign that the model keeps exploring instead of becoming strategically rigid.
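Policy entropy is often tracked as the average entropy of the model’s next-token distribution over the generated positions. The article does not say which entropy measure the paper reports, so the sketch below shows one common token-level definition, computed from raw logits in plain Python. A higher value means probability is spread across more candidate tokens rather than concentrated on one.

```python
import math


def token_entropy(logits: list[float]) -> float:
    """Shannon entropy (in nats) of the softmax distribution over the vocabulary."""
    m = max(logits)  # subtract the max logit for numerical stability
    exps = [math.exp(x - m) for x in logits]
    z = sum(exps)
    probs = [e / z for e in exps]
    return -sum(p * math.log(p) for p in probs if p > 0)


def mean_policy_entropy(step_logits: list[list[float]]) -> float:
    """Average per-token entropy over all generated positions of a rollout."""
    if not step_logits:
        raise ValueError("need at least one generated position")
    return sum(token_entropy(logits) for logits in step_logits) / len(step_logits)


if __name__ == "__main__":
    print(token_entropy([0.0, 0.0, 0.0, 0.0]))   # uniform over 4 tokens
    print(token_entropy([50.0, 0.0, 0.0, 0.0]))  # nearly deterministic
```

A uniform distribution over four tokens gives ln 4 ≈ 1.386 nats, while a near-deterministic one gives a value close to 0; a policy that collapses onto a single solution path drifts toward the latter.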

This is a significant technical advance for the industry. As LLMs are increasingly deployed for complex, high-stakes reasoning, from scientific discovery to clinical diagnosis, users rely on the model’s ability to generate multiple distinct, robust lines of reasoning. Uniqueness-Aware RL provides a mechanism for keeping post-training refinement from inadvertently eroding the model’s creative problem-solving capacity.