Thinking Machines Lab, a quiet but influential player in AI research, has issued a public "Tinker call for projects," inviting the broader machine learning community to leverage its Tinker platform for novel research and practical applications. Launched to empower builders and researchers with flexible model training, Tinker is now opening its doors wider, seeking submissions for featured projects that could shape the next wave of AI development.

Thinking Machines Lab aims to regularly showcase the "coolest projects" on its blog, favoring transparency and diligent work over mere novelty. This is a clear signal that the lab is betting on community-driven innovation to stress-test and expand the capabilities of its Tinker platform.

The range of eligible submissions is broad: reimplementations of existing research, original ML studies, AI-enabled research in other domains, custom model prototypes, and even infrastructure contributions. The emphasis, however, is firmly on rigor. Clear evaluations, crisp charts, raw output examples, and transparent comparisons to alternative approaches are all expected. This focus on verifiable results is a refreshing counter-narrative to the hype that often surrounds AI advancements.

The Frontier of AI Research: Where Tinker Wants to Go

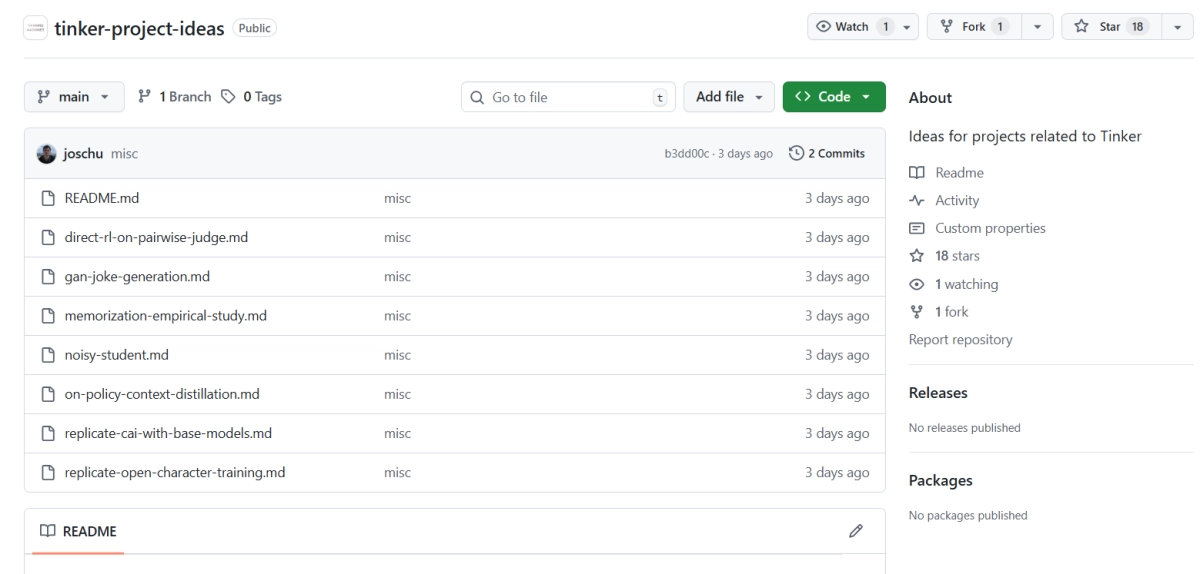

Beyond general guidelines, Thinking Machines Lab has outlined specific research directions it is particularly keen to see explored. These aren't just academic curiosities; they address fundamental challenges in AI. For instance, replicating Constitutional AI starting from a base model aims to separate the effect of the constitution itself from that of the instruction-tuned models such pipelines usually build on, a crucial step for building more robust and fair AI. Similarly, combining RLVR (reinforcement learning with verifiable rewards) with Noisy Student self-distillation could unlock more efficient ways to leverage vast unlabeled datasets for large language models, a significant resource optimization challenge.
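To make the Noisy Student idea a little more concrete, here is a minimal sketch of a single pseudo-labeling round, assuming a majority vote over answers sampled from the current student decides each label. The function names, the agreement threshold, and the toy data are all hypothetical illustrations; none of this is the Tinker API or the lab's own method.

```python
from collections import Counter


def pseudo_label(samples, min_agreement=0.6):
    """Return the majority answer if enough samples agree, else None.

    `samples` is a list of final answers drawn from the current student
    for one unlabeled prompt (hypothetical setup).
    """
    if not samples:
        return None
    answer, count = Counter(samples).most_common(1)[0]
    return answer if count / len(samples) >= min_agreement else None


def verifiable_reward(answer, label):
    """Binary reward: 1.0 when the answer matches the (pseudo-)label."""
    return 1.0 if answer == label else 0.0


def expand_training_set(labeled, unlabeled_samples, min_agreement=0.6):
    """Merge confident pseudo-labels into the labeled set for the next RL run."""
    merged = dict(labeled)
    for prompt, samples in unlabeled_samples.items():
        label = pseudo_label(samples, min_agreement)
        if label is not None and prompt not in merged:
            merged[prompt] = label
    return merged


# Toy data: one human-labeled prompt and two unlabeled prompts with sampled answers.
labeled = {"2+2": "4"}
unlabeled_samples = {
    "3+5": ["8", "8", "8", "7"],       # 75% agreement, kept
    "9*9": ["81", "18", "80", "99"],   # no consensus, skipped
}
next_round = expand_training_set(labeled, unlabeled_samples)
```

In a full loop, the expanded set would feed the next RL run and the process would repeat; the threshold trades label coverage against label noise.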

Other suggested projects include on-policy context distillation for more effective student model training, an RL memory test to measure empirically how quickly RL acquires information, and direct RL on a pairwise judge, which would skip training a separate reward model. The call also encourages replicating Open Character Training and even building a GAN for jokes, highlighting a blend of foundational research and creative application.
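As a rough illustration of what RL on a pairwise judge could look like, the sketch below turns pairwise preferences into per-sample rewards by averaging each completion's win probability against the others sampled for the same prompt, then centers them within the group. This is one possible design under stated assumptions, not the lab's method: `judge` is a hypothetical callable, and `toy_judge` is a stand-in for what would in practice be a prompted model.

```python
from itertools import permutations


def win_rate_rewards(prompt, completions, judge):
    """Score each completion by its mean win probability against the others.

    `judge(prompt, a, b)` is a hypothetical callable returning the
    probability that `a` is preferred over `b`.
    """
    n = len(completions)
    if n < 2:
        return [0.0] * n
    totals = [0.0] * n
    for i, j in permutations(range(n), 2):
        totals[i] += judge(prompt, completions[i], completions[j])
    return [t / (n - 1) for t in totals]


def centered_advantages(rewards):
    """Subtract the group mean so advantages sum to zero within a prompt."""
    if not rewards:
        return []
    mean = sum(rewards) / len(rewards)
    return [r - mean for r in rewards]


def toy_judge(prompt, a, b):
    """Stand-in judge that prefers the shorter answer (illustration only)."""
    if len(a) == len(b):
        return 0.5
    return 1.0 if len(a) < len(b) else 0.0


completions = ["ok", "fine then", "a much longer reply"]
rewards = win_rate_rewards("Say hi", completions, toy_judge)
advantages = centered_advantages(rewards)
```

Comparing every ordered pair costs a number of judge calls quadratic in the group size, so a real setup would likely sample pairs or keep groups small.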