Microsoft Research has unveiled OptiMind, a specialized 20-billion-parameter small language model (SLM) built to tackle one of the most persistent bottlenecks in enterprise data science: converting business problems described in natural language into precise mathematical optimization models. The release is a notable data point for small language model optimization. It suggests that tightly focused, expert-aligned training can deliver performance that rivals or exceeds much larger general-purpose systems. The immediate benefit is faster complex decision-making, potentially cutting weeks of specialized modeling work down to minutes.

Optimization models are foundational to modern enterprise planning, governing everything from supply chains to financial logistics. Yet translating a business problem into such a model requires specialized expertise that is scarce and time-consuming. According to the announcement, the translation demands expressing decisions, constraints, and objectives in rigorous mathematical terms, a task that can take experts days or weeks for complex problems. OptiMind's value proposition follows directly: domain experts can describe problems in plain English, and because the model is compact enough to run locally, sensitive business data need not leave the organization.
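To illustrate what this translation involves, take a hypothetical request (not from the announcement): "We build tables and chairs. Each table yields a profit of 70 and takes 4 carpentry hours and 2 painting hours; each chair yields 50 and takes 3 carpentry hours and 1 painting hour. This week we have 240 carpentry hours and 100 painting hours, and we can sell at most 60 chairs. How many of each should we build?" With integer decision variables $x_T$ (tables) and $x_C$ (chairs), one correct formulation is:

$$
\begin{aligned}
\max_{x_T,\,x_C}\quad & 70\,x_T + 50\,x_C && \text{(total profit)}\\
\text{s.t.}\quad & 4\,x_T + 3\,x_C \le 240 && \text{(carpentry hours)}\\
& 2\,x_T + x_C \le 100 && \text{(painting hours)}\\
& x_C \le 60 && \text{(chair demand)}\\
& x_T,\, x_C \in \mathbb{Z}_{\ge 0} && \text{(whole units only)}
\end{aligned}
$$

Each phrase in the request maps to one term or constraint. Dropping one (say, the demand cap) or mistyping a coefficient still yields a model that a solver handles without complaint, but it answers the wrong question. That silent-failure mode is what makes verified formulations so valuable.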

The Strategy: Expert Alignment in Small Language Model Optimization

The core technical advance was not scaling the model but rigorously improving the quality of its training data. Microsoft researchers found that 30 to 50 percent of existing public optimization benchmarks were flawed, containing incomplete or incorrect solutions that lead to unreliable model behavior. Using a systematic approach that combines automation with expert review, the team trained OptiMind on a carefully curated, expert-verified dataset. This substantially reduced the model's tendency to hallucinate incorrect formulations compared with the models it was benchmarked against.
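The announcement does not describe the curation pipeline in code. The Python below is therefore only a minimal sketch of what an "automated check plus expert review" step could look like. It assumes each benchmark item is a tiny integer program with a published optimal value, re-solves every item by brute-force enumeration, and routes any mismatch to a human review queue. `BenchmarkItem`, `brute_force_optimum`, and `triage` are hypothetical names for illustration, not Microsoft's code. Real benchmarks would need a proper solver rather than enumeration.

```python
from dataclasses import dataclass
from itertools import product
from typing import Optional


@dataclass
class BenchmarkItem:
    """Hypothetical benchmark record: a small integer maximization problem and its published answer."""
    name: str
    objective: list[float]                        # profit per unit of each decision variable
    constraints: list[tuple[list[float], float]]  # each (a, b) encodes the constraint a . x <= b
    upper_bound: int                              # each variable is searched over 0..upper_bound
    reference_optimum: Optional[float]            # optimum shipped with the benchmark, if any


def brute_force_optimum(item: BenchmarkItem) -> Optional[float]:
    """Enumerate every integer point in the box; return the best feasible objective, or None if infeasible."""
    best: Optional[float] = None
    for x in product(range(item.upper_bound + 1), repeat=len(item.objective)):
        feasible = all(
            sum(a * xi for a, xi in zip(coeffs, x)) <= rhs for coeffs, rhs in item.constraints
        )
        if feasible:
            value = sum(c * xi for c, xi in zip(item.objective, x))
            if best is None or value > best:
                best = value
    return best


def triage(items: list[BenchmarkItem], tol: float = 1e-6) -> tuple[list[str], list[tuple[str, str]]]:
    """Auto-accept items whose reference answer is reproduced; flag the rest for expert review."""
    accepted: list[str] = []
    flagged: list[tuple[str, str]] = []
    for item in items:
        recomputed = brute_force_optimum(item)
        if item.reference_optimum is None or recomputed is None:
            flagged.append((item.name, "missing reference answer or infeasible model"))
        elif abs(recomputed - item.reference_optimum) > tol:
            flagged.append((item.name, f"reference {item.reference_optimum} != recomputed {recomputed}"))
        else:
            accepted.append(item.name)
    return accepted, flagged


if __name__ == "__main__":
    # Toy data: max 70x1 + 50x2 s.t. 4x1 + 3x2 <= 240, 2x1 + x2 <= 100, x2 <= 60.
    cons = [([4, 3], 240), ([2, 1], 100), ([0, 1], 60)]
    items = [
        BenchmarkItem("toy-correct", [70, 50], cons, 60, 4100),
        BenchmarkItem("toy-wrong-answer", [70, 50], cons, 60, 3500),
        BenchmarkItem("toy-no-answer", [70, 50], cons, 60, None),
    ]
    accepted, flagged = triage(items)
    print("accepted:", accepted)
    for name, reason in flagged:
        print("needs expert review:", name, "-", reason)
```

The design choice here is that automation only filters. Anything it cannot confirm goes to a person instead of being silently dropped or "fixed," which mirrors the division of labor the announcement describes.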

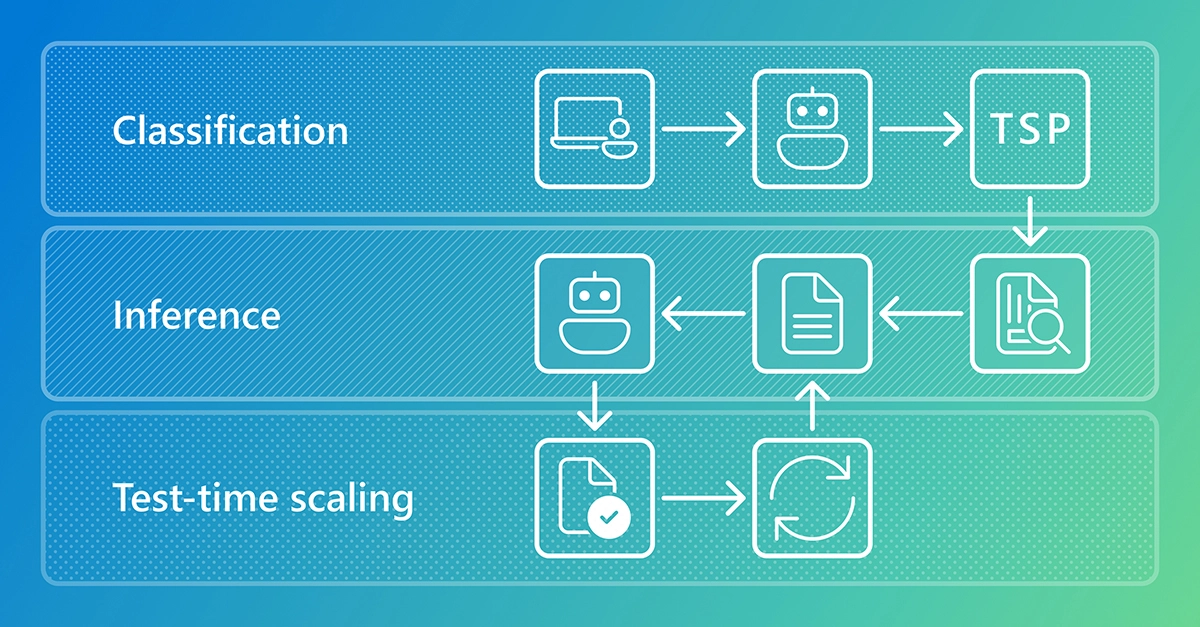

OptiMind further improves reliability through structured inference guided by expert reasoning. At inference time, the model first classifies the problem type, such as scheduling or network design, and then applies domain-specific hints that act as self-checks before it generates the final formulation. Combined with intermediate reasoning steps and majority voting (selecting the most frequent solution across multiple attempts), this layered approach lets the 20B-parameter model match or surpass the accuracy of systems with significantly more parameters. The result suggests that, in specialized domains, structured reasoning can be a more effective lever for accuracy than sheer size.
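The inference flow described above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not OptiMind's implementation. The keyword-based `classify` function, the `CLASS_KEYWORDS` and `HINTS` tables, and the `generate` callback (a stand-in for a call to the model) are all hypothetical. A real system would more plausibly let the model itself do the classification. What the sketch does show is the control flow: classify the problem, prepend a domain hint, sample several answers, and keep the most frequent one.

```python
from collections import Counter
from itertools import cycle
from typing import Callable

# Hypothetical problem classes and keyword cues (naive substring matching).
CLASS_KEYWORDS = {
    "scheduling": ("shift", "schedule", "machine", "deadline"),
    "network_design": ("route", "node", "arc", "flow", "facility"),
    "production_planning": ("produce", "product", "capacity", "profit"),
}

# Hypothetical domain hints, meant to act as self-checks before a formulation is emitted.
HINTS = {
    "scheduling": "Assign each job exactly once and make sure no machine runs two jobs at once.",
    "network_design": "Check flow conservation at every intermediate node.",
    "production_planning": "Give every shared resource its own capacity constraint.",
    "general": "List decision variables, objective, and constraints before writing the model.",
}


def classify(problem: str) -> str:
    """Return the class whose keywords occur most often, or 'general' if none occur."""
    text = problem.lower()
    scores = {cls: sum(text.count(k) for k in kws) for cls, kws in CLASS_KEYWORDS.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else "general"


def solve_with_voting(
    problem: str, generate: Callable[[str], str], n_samples: int = 5
) -> tuple[str, str]:
    """Classify, prepend the matching hint, sample n_samples answers, return (class, majority answer)."""
    problem_class = classify(problem)
    prompt = f"Problem type: {problem_class}\nHint: {HINTS[problem_class]}\n\n{problem}"
    answers = [generate(prompt) for _ in range(n_samples)]
    majority, _count = Counter(answers).most_common(1)[0]
    return problem_class, majority


if __name__ == "__main__":
    # Stub "model" that is right twice, wrong once, and repeats that pattern.
    fake_outputs = cycle(["4100", "4100", "3500"])
    problem = "We produce tables and chairs to maximize profit under carpentry capacity."
    print(solve_with_voting(problem, lambda prompt: next(fake_outputs)))
```

Majority voting trades extra inference calls for robustness: an occasional faulty sample is outvoted as long as most samples agree. `Counter.most_common` orders equal counts by first occurrence, so a production system would likely want an explicit tie-breaking rule.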

The evaluation results are encouraging: on benchmarks corrected for these flaws, OptiMind improved accuracy by approximately 10 percent over its base model and outperformed all other open-source models under 32 billion parameters. The project supports the principle that domain-specific small language model optimization, driven by high-quality data and structured reasoning, can beat brute-force scaling on specialized tasks. With OptiMind released as an experimental model, it is worth watching how this expert-aligned approach influences future SLM development, particularly as the team explores reinforcement learning and automated hint generation for continuous, autonomous improvement.