Over a million businesses are betting on AI, but a significant number are struggling to translate that investment into reliable value. According to OpenAI, the gap between ambition and execution is bridged by a rigorous, often overlooked process: evaluation frameworks, or "evals."

OpenAI, which uses evals extensively internally, is now urging business leaders to adopt these methods, arguing that they are the natural successor to traditional KPIs and OKRs in the age of probabilistic systems. The core message is simple: if you can’t define what "great" means for your specific workflow, you won’t achieve it.

The company distinguishes between its internal "frontier evals," which researchers use to measure general model performance, and "contextual evals," which are tailored to a specific organization’s product or internal workflow. OpenAI argues that the contextual kind is what enterprises need to succeed. These evals function much like product requirement documents, turning abstract business goals (like "Convert qualified inbound emails into scheduled demos") into explicit, measurable system behaviors.

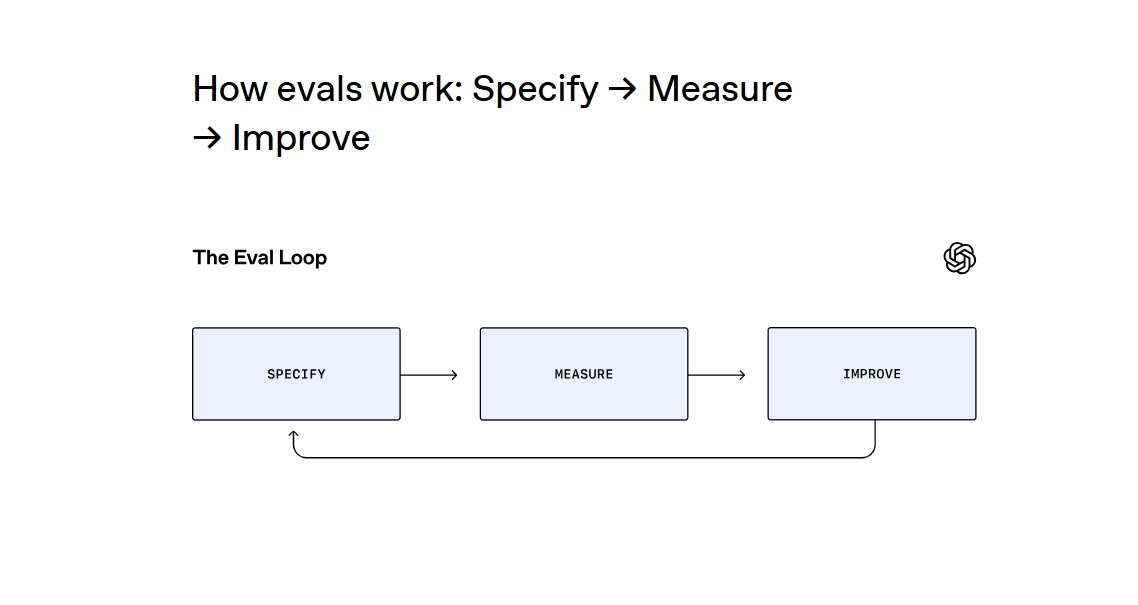

The Specify, Measure, Improve Loop

The framework OpenAI proposes is a continuous, three-step loop: Specify, Measure, and Improve.

Specify: This step is inherently cross-functional, requiring a mix of technical staff and domain experts (e.g., sales experts for a sales automation tool). This team must define the end-to-end workflow, identify every decision point, and create a "golden set" of examples—a living reference of what "great" looks like. Crucially, this process is iterative. Early error analysis, reviewing 50 to 100 outputs, helps create a taxonomy of specific failure modes that the system must track and eliminate.
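
To make the Specify step concrete, here is a minimal sketch of how a team might store a golden set and tally failure modes from an early error review. Everything in it is an assumption for illustration: the class names, the failure labels, and the sample data are hypothetical and do not come from OpenAI's guidance.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class GoldenExample:
    """One golden-set entry: an input and a description of a 'great' output."""
    input_text: str
    ideal_output: str


@dataclass
class ReviewedOutput:
    """A system output that a domain expert has read and annotated."""
    input_text: str
    output_text: str
    # Hypothetical failure labels; an empty list means no failure was found.
    failure_modes: list[str] = field(default_factory=list)


def failure_taxonomy(reviews: list[ReviewedOutput]) -> Counter:
    """Count how often each failure label appears across reviewed outputs."""
    counts = Counter()
    for review in reviews:
        counts.update(review.failure_modes)
    return counts


# Illustrative data only; a real review would cover 50 to 100 outputs.
golden_set = [
    GoldenExample(
        input_text="Hi, we'd like a demo for our 40-person sales team next week.",
        ideal_output="Offers two concrete time slots and confirms team size.",
    ),
]

reviews = [
    ReviewedOutput("demo request", "Sent pricing PDF only", ["missed_scheduling_intent"]),
    ReviewedOutput("demo request", "Proposed a slot in the past", ["invalid_time_slot"]),
    ReviewedOutput("support question", "Offered a demo", ["missed_scheduling_intent", "wrong_audience"]),
    ReviewedOutput("demo request", "Offered two valid slots", []),
]

taxonomy = failure_taxonomy(reviews)
for mode, count in taxonomy.most_common():
    print(f"{mode}: {count}")
```

Sorting the counts by frequency gives the team a rough priority list: the most common failure modes are the first candidates for dedicated eval cases.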

Measure: Measurement must happen in a dedicated test environment that mirrors real-world conditions, including edge cases that are rare but costly if mishandled. While traditional business metrics still apply, new metrics often need to be invented. OpenAI notes that while LLM graders (AI models that grade other AI outputs) can scale testing, human domain experts must remain in the loop to regularly audit the graders and review logs. Evals don't stop at launch; they must continuously measure real outputs generated from real inputs.
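
One simple way to audit an LLM grader is to have domain experts label a sample of the same outputs and compare verdicts. The sketch below assumes pass/fail verdicts have already been collected from both sources; the function names and sample verdicts are hypothetical, and no particular grading API is implied.

```python
def grader_agreement(grader_verdicts: list[bool], expert_verdicts: list[bool]) -> float:
    """Return the fraction of cases where the grader matches the expert."""
    if len(grader_verdicts) != len(expert_verdicts):
        raise ValueError("Both verdict lists must cover the same cases.")
    if not grader_verdicts:
        raise ValueError("At least one audited case is required.")
    matches = sum(g == e for g, e in zip(grader_verdicts, expert_verdicts))
    return matches / len(grader_verdicts)


def disagreements(grader_verdicts: list[bool], expert_verdicts: list[bool]) -> list[int]:
    """Return the indices of cases where grader and expert disagree."""
    return [
        i
        for i, (g, e) in enumerate(zip(grader_verdicts, expert_verdicts))
        if g != e
    ]


# Illustrative verdicts for five audited outputs (True = pass).
grader = [True, True, False, True, False]
expert = [True, False, False, True, True]

rate = grader_agreement(grader, expert)
disagreement_indices = disagreements(grader, expert)

print(f"Agreement rate: {rate:.0%}")
print(f"Cases to re-examine: {disagreement_indices}")
```

The disagreeing cases are the useful part of the audit: reading them shows whether the grader's rubric or the expert's expectation needs to change.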

Improve: The final step is establishing a data flywheel. By logging inputs, outputs, and outcomes, and routing ambiguous or costly cases to expert review, organizations can continuously refine prompts, adjust data access, and update the eval itself. This iterative loop yields a large, differentiated, context-specific dataset that is hard to copy, creating a compounding competitive advantage.
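
The logging and routing part of the flywheel can be sketched as a small triage function. This is an illustrative assumption rather than a prescribed design: the record fields, the two thresholds, and the idea of a numeric grader confidence are all hypothetical choices a team would need to set for its own workflow.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical thresholds; each team would tune these for its own workflow.
CONFIDENCE_FLOOR = 0.7   # below this, the case counts as ambiguous
COST_CEILING = 1000.0    # at or above this, a mistake counts as costly


@dataclass
class LoggedCase:
    """One logged interaction: the input, the output, and what happened next."""
    input_text: str
    output_text: str
    outcome: Optional[str]          # e.g. "demo_scheduled"; None if not yet known
    grader_confidence: float        # assumed score in [0, 1] from an automated grader
    estimated_cost_if_wrong: float  # assumed business cost of a mistake


def needs_expert_review(case: LoggedCase) -> bool:
    """Flag cases that are ambiguous or costly enough to warrant an expert."""
    return (
        case.grader_confidence < CONFIDENCE_FLOOR
        or case.estimated_cost_if_wrong >= COST_CEILING
    )


def route(cases: list[LoggedCase]) -> tuple[list[LoggedCase], list[LoggedCase]]:
    """Split logged cases into an expert review queue and a routine log."""
    review_queue = [c for c in cases if needs_expert_review(c)]
    routine_log = [c for c in cases if not needs_expert_review(c)]
    return review_queue, routine_log


# Illustrative cases only.
cases = [
    LoggedCase("demo request", "Offered two slots", "demo_scheduled", 0.95, 50.0),
    LoggedCase("vague inquiry", "Asked a clarifying question", None, 0.55, 50.0),
    LoggedCase("enterprise contract question", "Quoted terms", None, 0.90, 5000.0),
]

review_queue, routine_log = route(cases)
print(f"{len(review_queue)} for expert review, {len(routine_log)} logged as routine")
```

Expert decisions on the review queue can then feed back into the golden set and the failure taxonomy, which is what closes the loop.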

For business leaders, this shift means management skills are now AI skills. Working with probabilistic systems demands closer attention to trade-offs: balancing velocity against reliability and deciding when precision is non-negotiable. Robust evaluation frameworks for business AI are no longer optional; they are a key differentiator in a world where foundation models are increasingly commoditized.