At NeurIPS, NVIDIA unveiled new open-source work aimed at the future of autonomous driving AI. The centerpiece is NVIDIA DRIVE Alpamayo-R1 (AR1), which the company describes as the world's first industry-scale open reasoning vision language action (VLA) model engineered specifically for autonomous vehicles (AVs). The release reflects a broader push toward more human-like decision-making in self-driving systems. Instead of reacting to recognized patterns alone, the vehicle reasons about the context of a scene, which has long been a goal in the AV sector.

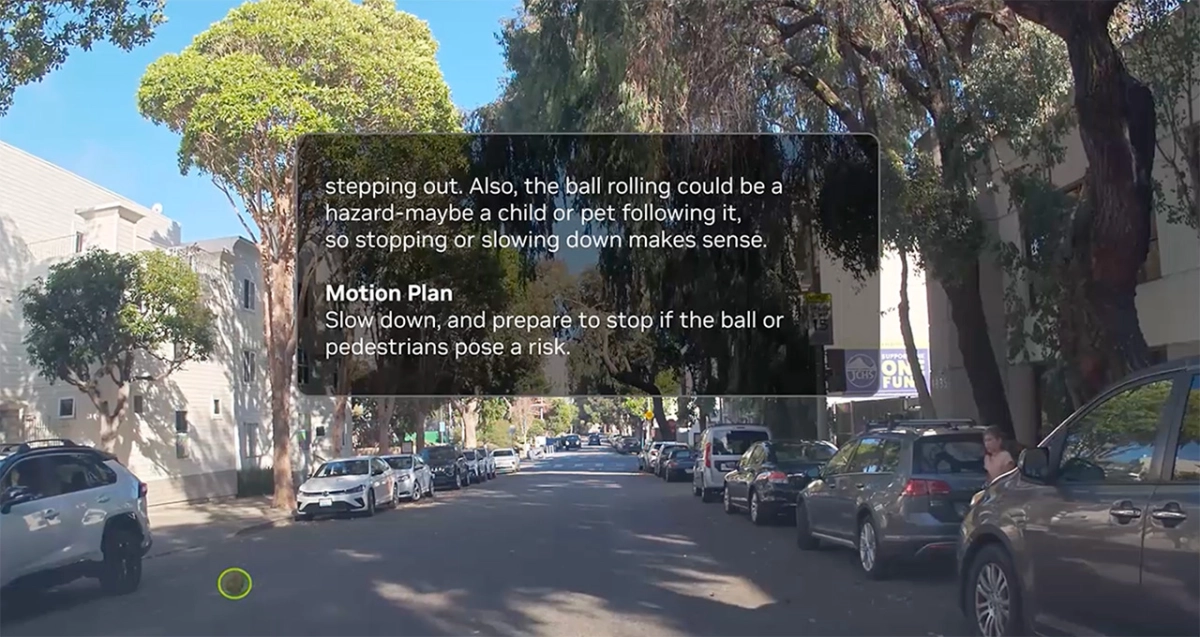

AR1's main architectural change is that it integrates chain-of-thought reasoning, a technique popularized by large language models, directly into the vehicle's path planning. This lets an AV break a complex or ambiguous scenario into steps. Examples include navigating a pedestrian-heavy intersection, responding to an unexpected lane closure, and getting around a double-parked vehicle in a bike lane. According to the announcement, this added "common sense" helps AVs drive more like humans and make nuanced decisions with greater safety and precision, which NVIDIA describes as critical for Level 4 autonomy. AR1 reasons through the scene, considers possible trajectories, and then uses contextual data to choose the best route. The goal is more intuitive, reliable AV behavior in unpredictable real-world environments, which is a key bottleneck in current AV development.

NVIDIA is releasing AR1 as an open foundation model built on NVIDIA Cosmos Reason, so researchers can customize and benchmark it for their own non-commercial use cases. AR1 is available now on GitHub and Hugging Face, and a subset of its training data is included in the NVIDIA Physical AI Open Datasets. Together, these releases widen access to state-of-the-art AV models for the broader research community. NVIDIA has also released the AlpaSim framework for evaluating and comparing models like AR1. The company also reports that reinforcement learning applied during post-training substantially improved AR1's reasoning capabilities.

Beyond AR1, the broader NVIDIA Cosmos ecosystem offers a growing toolkit for physical AI development that applies directly to autonomous driving. The Cosmos Cookbook gives developers step-by-step recipes covering data curation, synthetic data generation, and model evaluation, streamlining the development lifecycle for complex AV systems. Two additions target simulation specifically. LidarGen is described as the first world model that generates realistic lidar data for AV simulation. Omniverse NuRec Fixer near-instantly fixes artifacts in neurally reconstructed data. These tools let developers train and refine autonomous systems in diverse, high-fidelity virtual environments. This reduces reliance on costly and time-consuming real-world testing.

Democratizing Advanced AV Development

NVIDIA's open-source strategy, which the Artificial Analysis Openness Index independently recognizes for its permissiveness and transparency, is a catalyst for progress across autonomous driving AI. By openly sharing foundation models, datasets, and frameworks, NVIDIA invites researchers and developers worldwide to test, extend, and contribute to its work. Ecosystem partners are already using Cosmos World Foundation Models in their latest physical AI applications. These partners include Voxel51, 1X, Figure AI, Gatik, Oxa, and PlusAI. Broad access to advanced AI models and development tools supports rapid iteration and validation. That, in turn, helps bring safer and more reliable autonomous systems into wider use across industries.

This push toward open, reasoning-based models marks a significant moment for the autonomous driving industry, beyond incremental improvement. Vehicles that do not merely react but "think" through complex scenarios, much as an experienced human driver does, address a core challenge that has long slowed the widespread deployment of self-driving technology. By combining AI research with a growing open-source ecosystem, NVIDIA positions itself as a central enabler for the next generation of intelligent autonomous vehicles. Its emphasis on explainable reasoning, collaborative development, and high-fidelity simulation promises to accelerate progress toward intelligent, safe, and widely adopted autonomous driving. It could also set a new standard for the industry.