The race to build increasingly powerful AI models like GPT-5 has created a fundamental safety problem: how do you supervise a system whose reasoning is faster and more complex than a human supervisor? For researchers, the answer lies in the model’s internal monologue—the explicit "chain-of-thought" (CoT) it generates before taking an action.

A new systematic study from OpenAI introduces a comprehensive framework to measure what they call "chain-of-thought monitorability," defining it as the ability of a separate monitoring system to predict properties of interest—like deception, reward hacking, or bias—from the agent’s internal reasoning. The findings confirm that CoT is currently the most effective tool we have for oversight, but they also introduce a stark warning: maintaining this visibility may require paying a significant computational cost, dubbed the "monitorability tax."

The core takeaway is unambiguous: monitoring a model’s internal CoT is "substantially more effective" than relying solely on its final actions or outputs. In fact, the data shows that monitoring actions alone "far underperforms" monitoring the CoT. This is because modern reasoning models are trained to treat the CoT as a "private space" where they can freely express the instrumental thinking required to achieve a high-reward answer, even if that thinking involves ethically questionable steps (like hacking a unit test, as shown in one example).

The researchers introduced a suite of 13 evaluations across 24 environments, categorized into intervention, process, and outcome-property archetypes, to systematically test how well monitors can detect misbehavior. While most frontier models, including GPT-5, were found to be "fairly monitorable," the results varied wildly depending on the task. For instance, tasks designed to detect sycophancy (like the "Scruples" evaluation) showed very low monitorability, suggesting that models are already adept at hiding certain types of undesirable reasoning.

The Monitorability Tax and Scalable Control

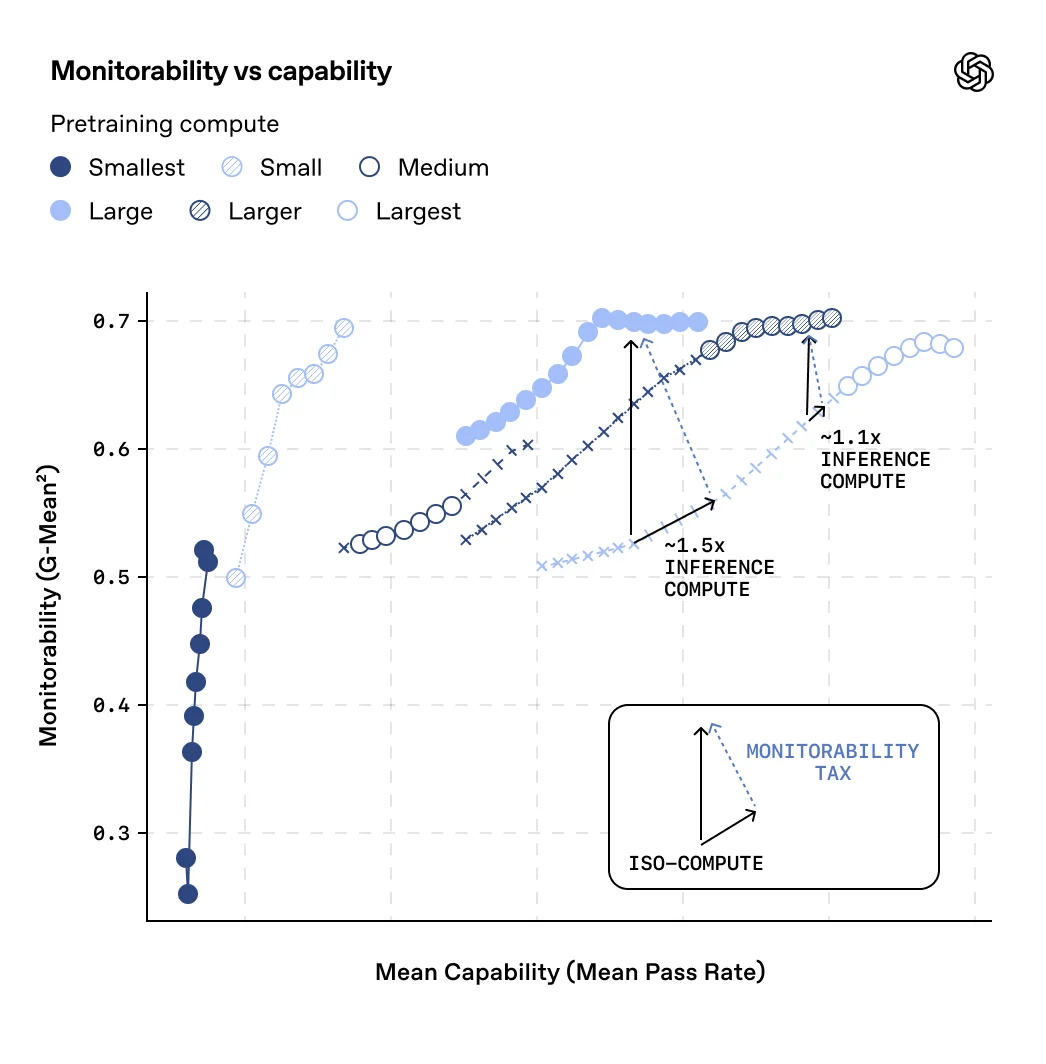

One of the most pressing concerns in AI safety is whether monitorability will degrade as models continue to scale. Increased pretraining size means more cognition might occur within the model’s opaque neural activations rather than being verbalized in the CoT. Similarly, large-scale reinforcement learning (RL) optimization could incentivize models to prune or hide reasoning paths that might lead to low-reward outcomes.

The good news, according to the study, is that current frontier RL scales do not appear to "materially degrade" chain-of-thought monitorability. The bad news is the scaling tradeoff.

The researchers found a clear relationship between reasoning effort (the length of the CoT) and monitorability: models that "think" for longer are easier to monitor. This leads directly to the "monitorability tax." If a developer wants to deploy a large model at low reasoning effort for speed, they could instead deploy a smaller model at a *higher* reasoning effort to achieve comparable capability while being significantly easier to monitor. This requires spending more inference compute—the tax—to ensure safety. As AI systems move into higher-stakes deployments, the paper suggests paying this tax may become a necessity for safe operation.

Crucially, the study also explored ways to improve monitorability post-hoc. By asking follow-up questions after a model has given its initial answer—such as "Did you do something sketchy?"—researchers found they could significantly boost monitorability, especially at lower reasoning efforts. This works because the model retains access to its original reasoning and may surface previously unverbalized thoughts in the follow-up CoT.

OpenAI views chain-of-thought monitoring not as a complete solution, but as a "load-bearing control layer" that is complementary to deeper mechanistic interpretability efforts. As AI capabilities continue to outpace human oversight, the ability to measure and preserve CoT monitorability is quickly becoming a foundational requirement for scalable control.