The hype around AI capabilities often feels limitless, but a sobering new analysis of the notoriously difficult FrontierMath benchmark shows the hard limits of today’s most advanced models. According to research published by Greg Burnham, even with near-infinite attempts, the current generation of AI, including models like GPT-5, appears fundamentally incapable of solving more than 70% of these advanced math problems.

For anyone using AI today, the most practical number is the single-shot success rate. On that front, the best we’ve seen is GPT-5 solving a mere 29% of FrontierMath problems on a given run. That’s the reality of what you can expect from a single query.

But Burnham’s analysis goes deeper, asking a more forward-looking question: what is the absolute ceiling for current AI architectures? To find out, he measured how many problems could be solved *at least once* across many attempts. This metric, known as "pass@N," is the fraction of problems solved at least once in N independent runs. The expectation was that, with enough tries, performance would keep climbing.
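pass@N is simple to define, but estimating it from a fixed number of recorded runs takes some care. The minimal sketch below assumes the unbiased pass@k estimator popularized by OpenAI's Codex paper (Chen et al., 2021). Burnham's analysis may compute pass@N differently. The function names and the success counts are hypothetical and chosen for illustration.

```python
from math import comb
from typing import Sequence


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k for one problem (hypothetical helper).

    n: total recorded runs, c: runs that solved the problem, k: attempt budget.
    Returns the probability that a random subset of k of the n runs
    contains at least one success.
    """
    if not (0 <= c <= n and 1 <= k <= n):
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        # Too few failed runs to fill a k-subset, so every subset has a success.
        return 1.0
    # 1 - P(all k sampled runs are failures)
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_k(successes: Sequence[int], n: int, k: int) -> float:
    """Mean pass@k over all problems; successes[i] is the success count for problem i."""
    return sum(pass_at_k(n, c, k) for c in successes) / len(successes)


# Hypothetical success counts for four problems over 32 runs (illustrative only).
counts = [32, 8, 1, 0]
print(benchmark_pass_at_k(counts, n=32, k=1))   # 0.3203125 (single-shot rate)
print(benchmark_pass_at_k(counts, n=32, k=32))  # 0.75 (solved at least once)
```

A problem that no run ever solves, like the last one above, contributes zero however large k gets. This is the behavior the "no right ideas" finding describes.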

The results suggest otherwise. In a stress test involving 32 separate runs of GPT-5, the gains diminished rapidly. The model’s success rate showed “sub-logarithmic growth,” meaning each doubling of attempts bought a smaller gain than a steady logarithmic trend would, and it quickly flattened out at a ceiling below 50%. Doubling the attempts from 16 to 32 yielded only a paltry 1.5% increase in solved problems. The takeaway is stark: for a huge chunk of FrontierMath, it doesn’t matter how many times you ask. The model simply doesn’t have the right ideas to get to the answer.
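One way to make "sub-logarithmic" concrete is to look at the gain per doubling of attempts. A curve that grows logarithmically in N adds a constant amount each time N doubles. A sub-logarithmic curve adds less each time. The sketch below uses a simple heuristic that checks for strictly shrinking per-doubling gains. Both the helpers and the toy curves are hypothetical and are not Burnham's data.

```python
def gains_per_doubling(curve: dict[int, float]) -> list[float]:
    """Score gained each time the attempt budget doubles (N -> 2N)."""
    return [curve[2 * n] - curve[n] for n in sorted(curve) if 2 * n in curve]


def looks_sub_logarithmic(curve: dict[int, float]) -> bool:
    """Heuristic: logarithmic growth adds a constant amount per doubling,
    so strictly shrinking per-doubling gains indicate slower-than-log growth."""
    gains = gains_per_doubling(curve)
    return len(gains) >= 2 and all(b < a for a, b in zip(gains, gains[1:]))


# Toy curves (not real benchmark data): score at N = 1, 2, 4, 8 attempts.
log_like = {1: 0.25, 2: 0.375, 4: 0.5, 8: 0.625}     # +0.125 every doubling
flattening = {1: 0.25, 2: 0.5, 4: 0.625, 8: 0.6875}  # +0.25, +0.125, +0.0625
print(looks_sub_logarithmic(log_like))    # False
print(looks_sub_logarithmic(flattening))  # True
```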

The Kitchen Sink Can't Solve Everything

This isn't just a GPT-5 problem. Burnham then pooled every available run from the major models, a "pass@the-kitchen-sink" approach that includes results from OpenAI’s ChatGPT Agent, Google’s Gemini 2.5, xAI’s Grok 4, and others. Even after throwing the entire industry’s efforts at the benchmark, only 57% of problems have ever been solved by any model, on any run.
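The "kitchen sink" number is the size of the union of solved problems across every run of every model, divided by the benchmark size. The following is a minimal sketch of that pooling, assuming each run is recorded as a set of solved problem IDs. The run IDs, problem IDs, and the helper name are hypothetical.

```python
def pooled_solve_rate(solved_by_run: dict[str, set[str]], total_problems: int) -> float:
    """Fraction of problems solved at least once by any run of any model."""
    ever_solved = set().union(*solved_by_run.values())
    return len(ever_solved) / total_problems


# Hypothetical run IDs and problem IDs, for illustration only.
runs = {
    "model_a/run1": {"p1", "p2"},
    "model_a/run2": {"p2"},
    "model_b/run1": {"p3"},
}
print(pooled_solve_rate(runs, total_problems=10))  # 3 of 10 problems -> 0.3
```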

Extrapolating these diminishing returns, the analysis estimates a hard cap of around 70% for the current AI paradigm as a whole. Allowing for a small percentage of flawed problems in the benchmark itself, that leaves a stubborn 20-30% of FrontierMath problems that are simply out of reach.

Interestingly, the one standout was OpenAI's ChatGPT Agent, which solved 14 problems that no other model could. The likely reason? It was the only model tested with access to a web browser, which suggests that tool use can open up new pathways for reasoning even when the underlying model's raw capability is unchanged.
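A count of problems that only one model solved is a set difference: a model's solved set minus the union of everything the other models solved. The minimal sketch below assumes each model's runs have already been pooled into one set of problem IDs. The model names and problem IDs are hypothetical.

```python
def unique_solves(solved_by_model: dict[str, set[str]]) -> dict[str, set[str]]:
    """For each model, the problems it solved that no other model solved."""
    result = {}
    for model, solved in solved_by_model.items():
        # Union of every other model's solved problems.
        others = set().union(*(s for m, s in solved_by_model.items() if m != model))
        result[model] = solved - others
    return result


# Hypothetical per-model solved sets (runs already pooled within each model).
solved = {
    "agent_with_browser": {"p1", "p2", "p4"},
    "model_b": {"p2", "p3"},
    "model_c": {"p3"},
}
print(sorted(unique_solves(solved)["agent_with_browser"]))  # ['p1', 'p4']
```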

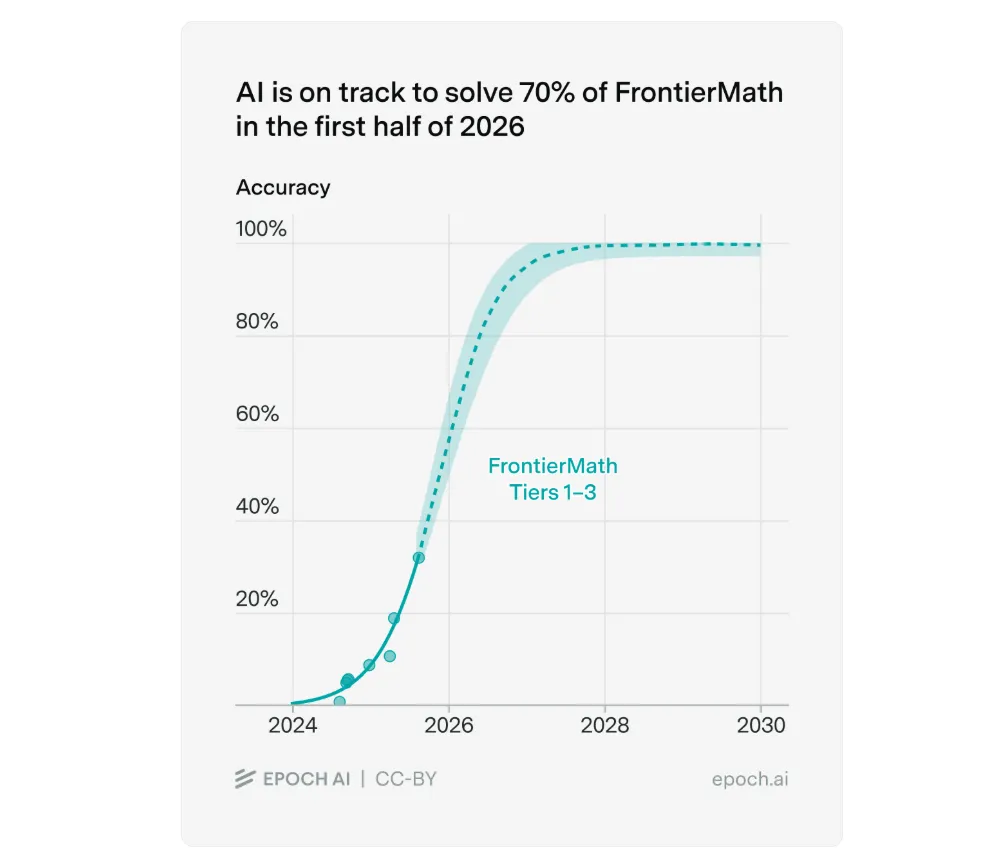

This analysis reframes our understanding of AI progress. An earlier forecast projected that models would hit 70% on FrontierMath in early 2026. Burnham’s work suggests this is plausible, but it would represent a victory of *reliability* (consistently solving problems already within the current paradigm's estimated 70% reach, 57% of which have been solved at least once) rather than a fundamental leap in raw capability.