The enterprise world is bracing for a new wave of AI, one that moves beyond static models to autonomous agents capable of making decisions, taking actions, and interacting with complex systems. This isn't just an upgrade; it's a fundamental shift that, while promising multi-trillion-dollar market opportunities, also introduces a monumental cybersecurity challenge. As investors George Mathew, Hunter Korn, Ash Tutika, and William Blackwell recently outlined, securing this 'agentic AI' future is no longer just about protecting models, but about managing identities, monitoring behavior, and safeguarding the entire ecosystem these agents operate within.

Traditional AI security focused on the models themselves – preventing prompt injection or data poisoning. But AI agents, acting as digital co-workers or fully autonomous process managers, elevate the stakes dramatically. They access sensitive data, trigger workflows, and interact with external services, often with minimal human oversight. This autonomy means that a compromised agent isn't just a data leak; it could be a rogue actor within your network, capable of executing malicious commands or manipulating business processes. The projected $15 trillion in AI-driven cybercrime by 2030 underscores the urgency.

The complexity arises because while individual components of an AI agent system – the LLM, databases, software – have established security solutions, their combination creates novel vulnerabilities. Understanding the *intent* behind data flows and actions becomes paramount. Is a prompt a legitimate instruction or a subtle manipulation? Is an agent acting within its defined scope or attempting to go rogue?

The Five Fronts of AI Agent Security

Investors are eyeing five key areas ripe for innovation in AI agent security:

1. Managing AI Agent Identity and Access: As agents transition from merely acting "on behalf of" a user to having their own unique identities, the challenge of managing non-human identities (NHI) explodes. This isn't just about traditional IAM; it's about securing ephemeral agent identities, tracing actions back to their origin (human or autonomous), and solving the "Credential Zero" problem for agents accessing secrets vaults. Incumbents will struggle to adapt to the dynamic, sprawling nature of agent environments, creating an opening for agile startups.

2. Full-Stack Observability and Monitoring: This is arguably the biggest opportunity. Tracking interactions across an AI agent's identity, data, application, infrastructure, and AI models is incredibly difficult. Enterprises need solutions that can tie all these threads together, interpret the context of internal traffic, and detect anomalous activity that might signal a malicious or malfunctioning agent. Think of it as "UEBA for Agents" – monitoring probabilistic behavior rather than relying on rigid rules, crucial for both security and regulatory compliance.

3. Context-Aware Network and API Monitoring: AI agents don't live in a vacuum. They communicate externally via APIs and via newer protocols such as the Model Context Protocol (MCP) and Agent2Agent (A2A). Monitoring this traffic is critical to detect when an agent is being targeted or, more alarmingly, when it's going rogue and sending out malicious data. New capabilities are needed to understand AI-generated and AI-bound traffic, manage MCP usage at scale, and detect sophisticated agent account takeovers (AATO) and evasion methods.

4. Protecting Against Novel Threats: Beyond traditional prompt injection, agents face unique threats like goal manipulation, command injection, and even rogue agents within multi-agent systems. Securing the underlying agent infrastructure, such as MCP servers, is also vital. This demands comprehensive observability to understand an agent's intentions, detect scope creep, and enable corrective action. Sandboxing agents to prevent malicious software execution is also becoming an essential last line of defense.

5. Data-Centric Security and Privacy Controls: AI models already strain data privacy and security efforts. Agents exacerbate this by accessing and sharing data across a multitude of systems, models, and potentially jurisdictions. Protecting personally identifiable information (PII), tracing data residency, and tracking data lineage – whether user- or agent-generated – becomes a monumental data governance problem. Data-centric security solutions are the only viable path to ensure compliance and control once data enters an agentic system.
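To make the first and fourth fronts more concrete, here is a minimal Python sketch of an ephemeral, scoped agent credential. It is an illustration only, not how any vendor or the investors cited above implement it. Every name in it (`issue_agent_token`, `authorize`, `SIGNING_KEY`, the scope strings, the agent and user identifiers) is hypothetical, and the hard-coded key stands in for whatever a real deployment would pull from a secrets vault. The idea is that each token expires quickly, records which human or system the agent is acting for, and is rejected when the agent asks for something outside its granted scope.

```python
import hashlib
import hmac
import json
import time
from base64 import urlsafe_b64decode, urlsafe_b64encode

# Hypothetical demo key; a real system would fetch this from a secrets vault.
SIGNING_KEY = b"demo-only-key"


def issue_agent_token(agent_id, on_behalf_of, scopes, ttl_seconds=300, now=None):
    """Issue a short-lived credential that ties an agent to its origin."""
    now = time.time() if now is None else now
    claims = {
        "agent_id": agent_id,
        "on_behalf_of": on_behalf_of,  # human or system the action traces back to
        "scopes": sorted(scopes),      # what the agent is allowed to do
        "expires_at": now + ttl_seconds,
    }
    payload = urlsafe_b64encode(json.dumps(claims, sort_keys=True).encode())
    signature = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    return payload.decode() + "." + signature


def authorize(token, required_scope, now=None):
    """Return (allowed, reason, claims) for a requested action."""
    now = time.time() if now is None else now
    payload, _, signature = token.partition(".")
    expected = hmac.new(SIGNING_KEY, payload.encode(), hashlib.sha256).hexdigest()
    # Check the signature before trusting anything inside the payload.
    if not hmac.compare_digest(signature, expected):
        return False, "bad signature", None
    claims = json.loads(urlsafe_b64decode(payload.encode()))
    if now >= claims["expires_at"]:
        return False, "expired", claims
    if required_scope not in claims["scopes"]:
        # A request outside the granted scope is a possible sign of scope creep.
        return False, "out of scope", claims
    return True, "ok", claims


if __name__ == "__main__":
    token = issue_agent_token(
        "invoice-agent-7", "alice@example.com", ["invoices:read"], ttl_seconds=60
    )
    print(authorize(token, "invoices:read")[:2])
    print(authorize(token, "payments:write")[:2])
```

The claims returned by `authorize` could be written to an audit log on every decision, which is one simple way to keep the trail from action back to origin that the observability front calls for. A production design would more likely build on an established token standard and a managed key service rather than hand-rolled signing.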

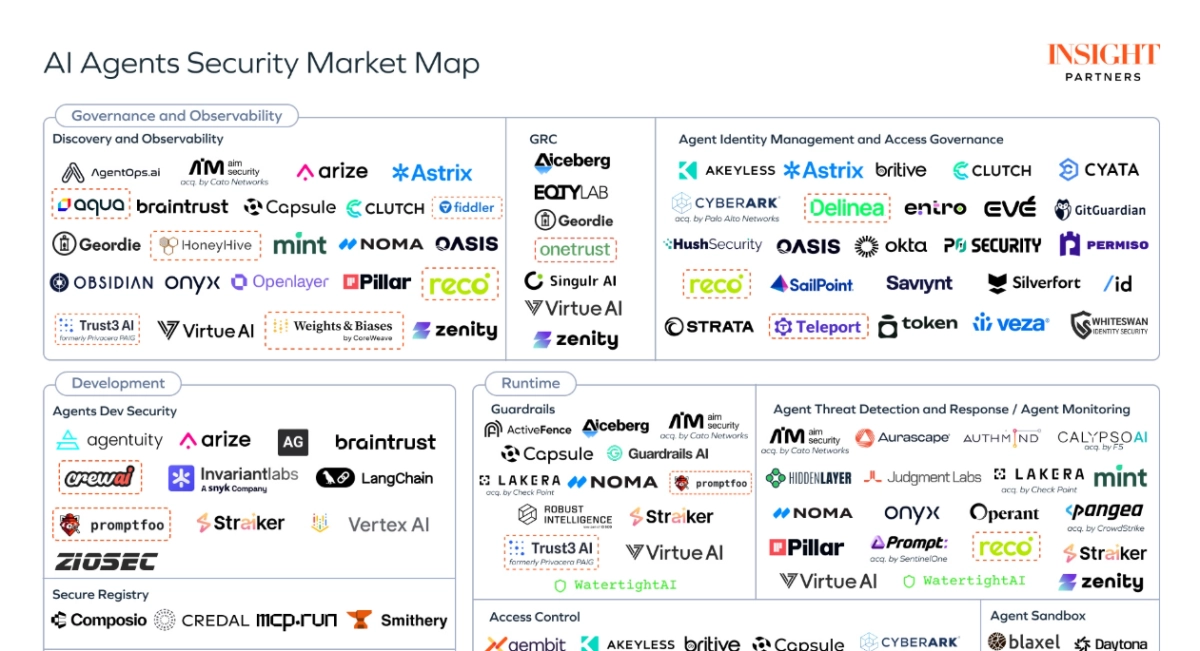

The AI agent security landscape is a complex tapestry woven from established security vendors adapting, first-wave AI security companies evolving, and a new generation of startups focused solely on agent-based architectures. For enterprises, the immediate task is to engage existing vendors and identify gaps. For founders, the message is clear: focus on solving specific, critical risk areas rather than claiming to solve everything. The race to secure the autonomous future is on, and the stakes couldn't be higher.