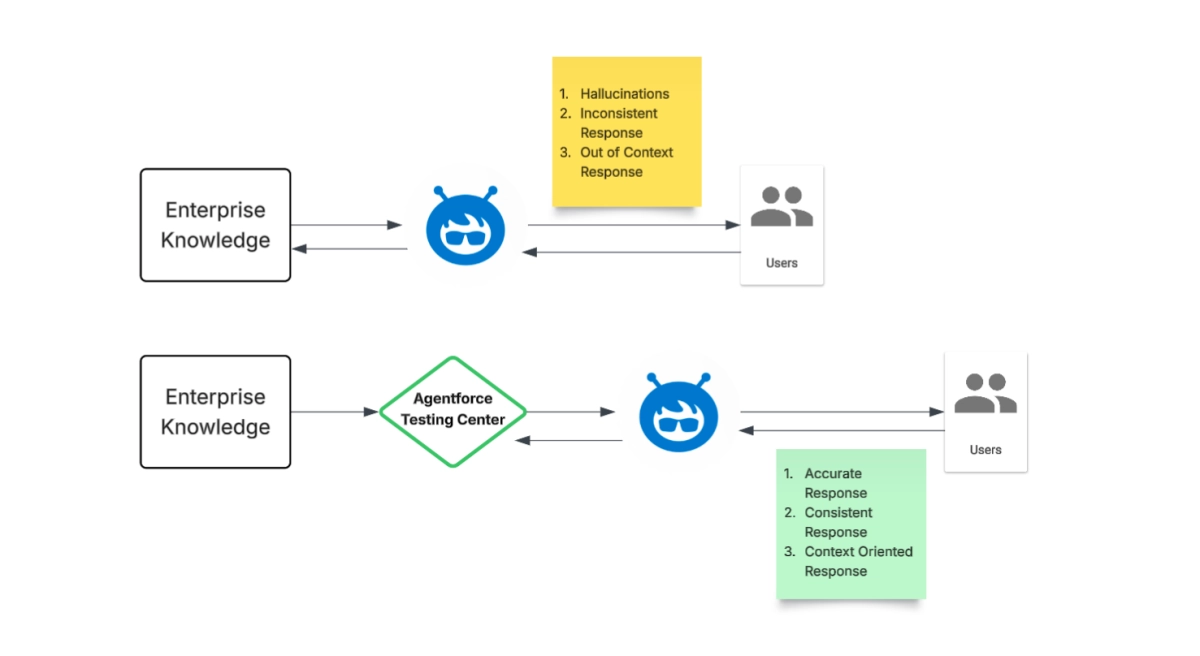

The rapid evolution of AI agents demands robust validation, a challenge Agentforce addresses with its new Testing Center. The Testing Center provides a dedicated sandbox environment for evaluating AI agents offline, so teams can check that conversational agents respond accurately, efficiently, and reliably before they are deployed. The launch reflects a broader industry shift toward proactive quality assurance in AI development, reducing risk and building user trust from the outset.

The emphasis on offline testing reflects a mature approach to AI lifecycle management, applying the "shift left" principle familiar from traditional software engineering. By identifying and fixing issues early, organizations can catch knowledge gaps, inconsistent responses, and vulnerability to adversarial inputs such as prompt injection before users encounter them. This controlled environment also lets developers detect agent hallucinations and failures to adapt to changing context, both common pitfalls in real-world AI interactions. According to the announcement, this proactive evaluation saves significant time and resources while reducing reputational risk, a non-negotiable for enterprises deploying customer-facing AI.

Alongside its no-code interface, the Testing Center supports code-driven workflows through Salesforce CLI and Agentforce DX. This dual approach gives developers greater control over automation, continuous integration/continuous deployment (CI/CD), and versioning. Such integration is essential for embedding repeatable, scalable test jobs directly into the agent development and deployment pipeline, bringing AI agent development in line with established MLOps practices. It also signals that the AI agent landscape is maturing, moving beyond experimental deployments toward enterprise-grade operations.
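To make the CI/CD idea concrete, here is a minimal Python sketch of a pipeline gate that fails a build when too many agent test cases fail. It deliberately does not call Salesforce CLI or Agentforce DX: the `CaseResult` record, the `pass_rate` and `gate` helpers, and the 95% threshold are all hypothetical, and in a real pipeline the results would be parsed from whatever output the test job actually produces.

```python
from dataclasses import dataclass


@dataclass
class CaseResult:
    # Hypothetical, simplified record for one agent test case; the real
    # test-job output format is not reproduced here.
    name: str
    passed: bool


def pass_rate(results: list[CaseResult]) -> float:
    """Fraction of test cases that passed; 0.0 for an empty run."""
    if not results:
        return 0.0
    return sum(r.passed for r in results) / len(results)


def gate(results: list[CaseResult], threshold: float = 0.95) -> int:
    """Return a process exit code: 0 if the pass rate meets the threshold, else 1."""
    rate = pass_rate(results)
    print(f"pass rate: {rate:.0%} (threshold {threshold:.0%})")
    for r in results:
        if not r.passed:
            print(f"  FAILED: {r.name}")
    return 0 if rate >= threshold else 1


if __name__ == "__main__":
    # Illustrative results; a real pipeline would parse these from the test job.
    demo = [
        CaseResult("order_status_lookup", True),
        CaseResult("refund_policy_question", True),
        CaseResult("prompt_injection_attempt", False),
    ]
    code = gate(demo)
    # A CI step would end with sys.exit(code) so a low pass rate fails the build.
    print(f"exit code: {code}")
```

Treating an empty run as a failure (pass rate 0.0) is a deliberate choice, so a misconfigured job that executes no tests cannot silently pass.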

Advancing Agent Validation Through Specificity

The platform's core strength lies in three primary use cases, each aimed at a specific evaluation challenge. Custom evaluations go beyond generic pass/fail metrics, letting teams define precise criteria tailored to an agent's purpose. For instance, a financial services agent can be assessed not only for accuracy but also for compliance with legal guidelines, avoidance of competitor mentions, and response latency, often using an LLM as a judge to produce nuanced scores along with its reasoning. This capability matters most in regulated industries, where incorrect or non-compliant AI responses carry exceptionally high stakes.
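The sketch below shows how the financial services criteria above might be expressed as separate, explainable checks. It is written in Python under stated assumptions: `keyword_judge` is a hypothetical placeholder for an LLM judge (a real one would prompt a model and parse its score and reasoning), the disclaimer phrase stands in for a legal-compliance rule, and the competitor names, thresholds, and sample response are illustrative rather than taken from Agentforce.

```python
import re
from dataclasses import dataclass
from typing import Callable


@dataclass
class AgentResponse:
    text: str
    latency_ms: float


@dataclass
class Verdict:
    criterion: str
    passed: bool
    reasoning: str


# A judge maps (question, answer) to (score in [0, 1], reasoning).
# In a real setup this would prompt an LLM; here it is a hypothetical stub.
Judge = Callable[[str, str], tuple[float, str]]


def judged_accuracy(question: str, resp: AgentResponse, judge: Judge,
                    min_score: float = 0.7) -> Verdict:
    score, reasoning = judge(question, resp.text)
    return Verdict("judged_accuracy", score >= min_score, f"score {score:.2f}: {reasoning}")


def includes_disclaimer(resp: AgentResponse, disclaimer: str) -> Verdict:
    # Simple compliance proxy: a required phrase must appear (case-insensitive).
    found = disclaimer.lower() in resp.text.lower()
    return Verdict("includes_disclaimer", found,
                   "disclaimer present" if found else f"missing '{disclaimer}'")


def no_competitor_mentions(resp: AgentResponse, competitors: list[str]) -> Verdict:
    # Whole-word, case-insensitive match on each competitor name.
    hits = [c for c in competitors
            if re.search(rf"\b{re.escape(c)}\b", resp.text, re.IGNORECASE)]
    reason = f"mentions {hits}" if hits else "no competitor names found"
    return Verdict("no_competitor_mentions", not hits, reason)


def within_latency(resp: AgentResponse, max_ms: float) -> Verdict:
    ok = resp.latency_ms <= max_ms
    return Verdict("within_latency", ok, f"{resp.latency_ms:.0f} ms (limit {max_ms:.0f} ms)")


def evaluate(question: str, resp: AgentResponse, judge: Judge,
             competitors: list[str], disclaimer: str, max_ms: float) -> list[Verdict]:
    """Run every custom criterion and return one verdict per criterion."""
    return [
        judged_accuracy(question, resp, judge),
        includes_disclaimer(resp, disclaimer),
        no_competitor_mentions(resp, competitors),
        within_latency(resp, max_ms),
    ]


def keyword_judge(question: str, answer: str) -> tuple[float, str]:
    # Placeholder standing in for an LLM judge: rewards answers that mention APR.
    if "apr" in answer.lower():
        return 1.0, "answer addresses the APR question"
    return 0.0, "answer does not mention APR"


if __name__ == "__main__":
    resp = AgentResponse(
        text="The Platinum card's APR is listed in your cardholder agreement. "
             "Rates are subject to change.",
        latency_ms=850,
    )
    for v in evaluate("What is the APR on the Platinum card?", resp, keyword_judge,
                      competitors=["Acme Bank", "Globex"],
                      disclaimer="rates are subject to change", max_ms=2000):
        print(f"{'PASS' if v.passed else 'FAIL'} {v.criterion}: {v.reasoning}")
```

Returning one `Verdict` per criterion, each with its own reasoning, mirrors the idea of custom evaluations: a response can be accurate yet still fail on compliance or latency, and the report says which.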

The Testing Center also addresses the complexities of conversational flow through context variables and conversation history. Context variables let developers simulate information the agent would hold in memory during a session, such as a lead's name or email address, and test whether the agent stays coherent across multiple turns under those predefined conditions. Similarly, supplying previous conversational turns as input lets teams verify that an agent can reference and build on earlier exchanges. This is crucial for continuity and accuracy in multi-turn dialogues, where disjointed or repetitive responses quickly degrade the user experience.
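As a rough illustration of what a multi-turn test case carries, the following Python sketch bundles hypothetical context variables (`lead_name`, `lead_email`), prior turns, and a new utterance, then checks the reply for carried-over details and verbatim repetition. `stub_agent` is a toy stand-in for a real agent, and none of these names correspond to Agentforce's actual test definition format.

```python
from dataclasses import dataclass, field


@dataclass
class Turn:
    role: str  # "user" or "agent"
    text: str


@dataclass
class MultiTurnCase:
    context: dict[str, str]  # hypothetical context variables, e.g. lead details
    history: list[Turn]      # prior turns supplied as input
    utterance: str           # the new user message under test
    must_mention: list[str] = field(default_factory=list)


def stub_agent(context: dict[str, str], history: list[Turn], utterance: str) -> str:
    # Toy agent standing in for a real one; it only uses context and history.
    name = context.get("lead_name", "there").split()[0]
    email = context.get("lead_email")
    last_answer = next((t.text for t in reversed(history) if t.role == "agent"), None)
    if email and last_answer and "email" in utterance.lower():
        return f"Sure, {name}. I'll email a recap of '{last_answer}' to {email}."
    return f"Hi {name}, how can I help?"


def run_case(case: MultiTurnCase, agent) -> list[str]:
    """Return a list of failure messages; an empty list means the case passed."""
    reply = agent(case.context, case.history, case.utterance)
    failures = [f"reply does not mention {m!r}"
                for m in case.must_mention if m.lower() not in reply.lower()]
    # Flag replies that simply parrot an earlier agent turn.
    previous = [t.text.strip() for t in case.history if t.role == "agent"]
    if reply.strip() in previous:
        failures.append("reply repeats an earlier agent turn verbatim")
    return failures


if __name__ == "__main__":
    history = [
        Turn("user", "Can you summarize the pricing tiers?"),
        Turn("agent", "We offer Basic, Pro, and Enterprise tiers."),
    ]
    case = MultiTurnCase(
        context={"lead_name": "Dana Reyes", "lead_email": "dana@example.com"},
        history=history,
        utterance="Great, can you email that to me?",
        must_mention=["Dana", "dana@example.com"],
    )
    print(run_case(case, stub_agent) or "PASS")
```

Keeping context, history, and expectations together in one case object makes each scenario reproducible, which is what lets multi-turn checks run as repeatable jobs rather than manual conversations.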

Together, these capabilities move AI agent evaluation beyond rudimentary checks toward a context-aware validation process. By simulating real-world interactions, integrating with development workflows, and supporting highly specific evaluation criteria, the Agentforce Testing Center aims to turn agent development from iterative guesswork into a structured, data-driven discipline, helping teams confirm that agents are not merely functional but robust, reliable, and ready for production across diverse enterprise applications.