The race to build AI products has entered a new phase, according to ICONIQ's latest report on the state of AI. What began as an experimental sprint to leverage large language models has matured into a critical challenge: scaling AI into robust, profitable offerings. This shift marks the start of the 'Execution Era' of AI, where competitive advantage hinges on disciplined development, cost management, and trustworthy deployment.

From Experimentation to Application Layer Dominance

Companies are no longer solely focused on developing foundational AI models. Instead, the emphasis has moved to the application layer, where differentiation is achieved through user experience, specialized workflows, and seamless integrations. Nearly 70% of companies are now building vertical AI applications tailored to specific industries.

The focus for AI product development is increasingly on vertical applications.

This pivot means companies rely less on building proprietary models: 49% cite application-layer innovation as their primary differentiator, while a smaller group focuses on model development.

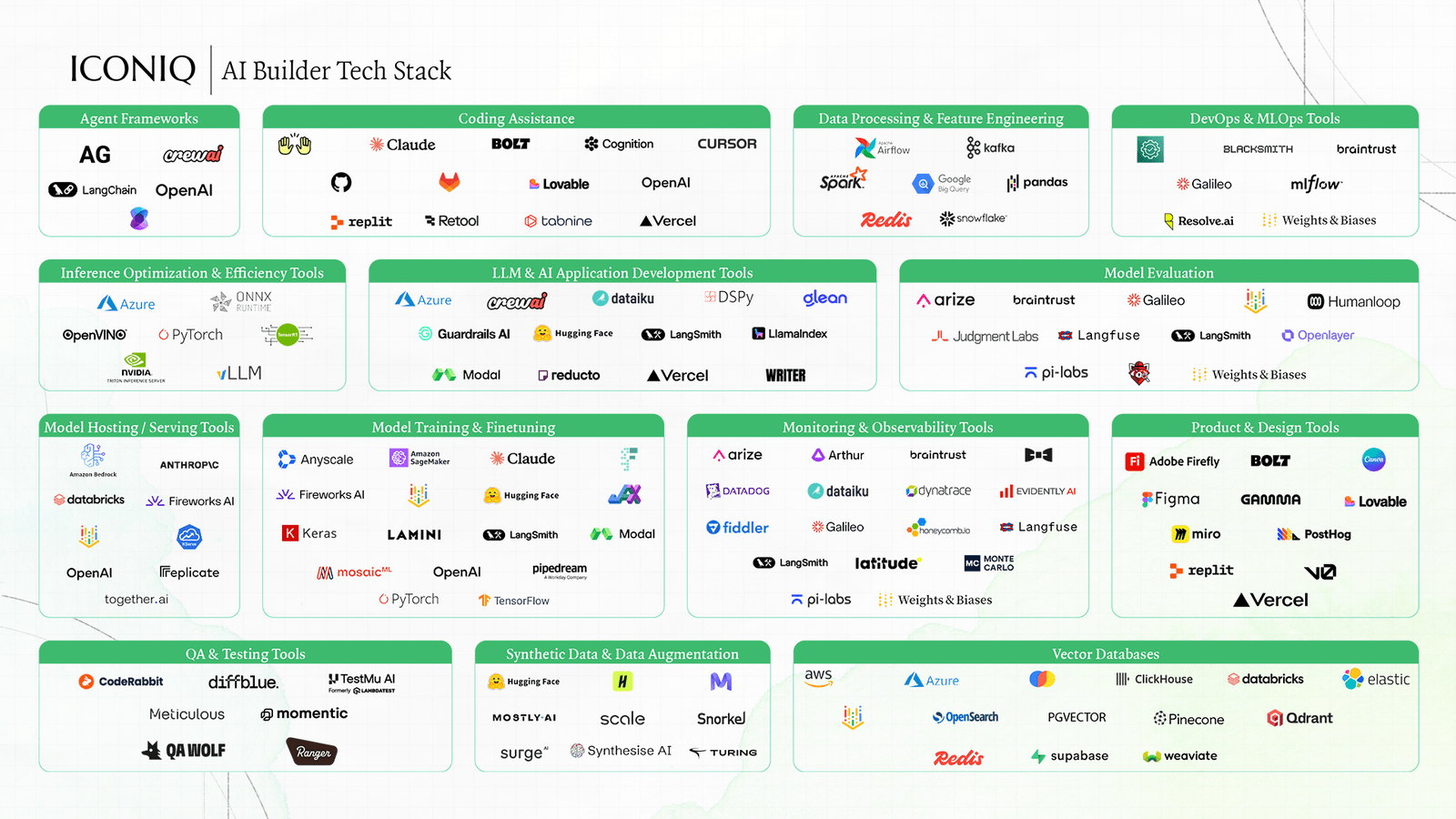

To achieve this, builders are adopting multi-model strategies, leveraging an average of 3.1 providers to balance reliability, cost, and customization. This reflects a move towards orchestration rather than allegiance to a single AI platform.
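As a rough illustration of what orchestration across providers can look like, the sketch below tries the cheapest provider first and falls back to the next one on failure. It is a minimal sketch under stated assumptions, not a pattern described in the report: the `Provider` class, the `route` function, the provider names, and the cost figures are all hypothetical, and the two "providers" are local stand-in functions rather than real API clients.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass
class Provider:
    name: str                       # hypothetical provider label
    cost_per_call: float            # illustrative relative cost, not real pricing
    complete: Callable[[str], str]  # sends a prompt, returns the model's text


def route(prompt: str, providers: Sequence[Provider]) -> tuple[str, str]:
    """Try providers from cheapest to most expensive, falling back on failure."""
    errors = []
    for provider in sorted(providers, key=lambda p: p.cost_per_call):
        try:
            return provider.name, provider.complete(prompt)
        except Exception as exc:  # any failure triggers the next provider
            errors.append(f"{provider.name}: {exc}")
    raise RuntimeError("all providers failed: " + "; ".join(errors))


# Stand-in providers for demonstration only (assumptions, not real services).
def flaky(prompt: str) -> str:
    raise TimeoutError("simulated outage")


def steady(prompt: str) -> str:
    return f"echo: {prompt}"


providers = [
    Provider("provider_b", 2.0, steady),
    Provider("provider_a", 1.0, flaky),
]
used, answer = route("hello", providers)
print(used, answer)
```

Here the cheaper `provider_a` is attempted first, its simulated outage is recorded, and the request is served by `provider_b`. A production router would typically also weigh latency, quality, and task fit, which this sketch leaves out.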

Data Readiness Remains a Bottleneck

Despite advancements, data remains a key execution hurdle. Most companies report their data foundations are only 'mostly' or 'somewhat' ready, especially at enterprise scale. This lack of readiness can impede the scaling of AI products.

In-house data engineering teams remain the primary means of data preparation, and reliance on them has grown. Synthetic data generation is also gaining traction for expanding training data and testing edge cases.

AI Economics Take Center Stage

AI development is absorbing a larger share of R&D budgets, with high-growth companies allocating approximately 57% of their R&D to AI. This increased investment heightens scrutiny on cost structures and margins.

As AI products scale, gross margins are projected to improve, reaching an estimated average of 52% in 2026. Inference costs are emerging as the dominant expense, underscoring the importance of model selection and infrastructure efficiency.
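To make the link between inference cost and margin concrete, the short sketch below computes gross margin as revenue minus cost of goods sold, divided by revenue. The function name and every number in it are hypothetical and chosen only for illustration; none of the figures come from the report.

```python
def gross_margin(revenue: float, inference_cost: float, other_cogs: float) -> float:
    """Gross margin as a fraction of revenue: (revenue - COGS) / revenue."""
    if revenue <= 0:
        raise ValueError("revenue must be positive")
    return (revenue - inference_cost - other_cogs) / revenue


# Hypothetical figures per 100 units of revenue (assumptions, not report data).
baseline = gross_margin(revenue=100.0, inference_cost=30.0, other_cogs=18.0)
# Same product after a hypothetical cut in inference spend, e.g. a cheaper model.
optimized = gross_margin(revenue=100.0, inference_cost=20.0, other_cogs=18.0)

print(f"baseline margin:  {baseline:.0%}")
print(f"optimized margin: {optimized:.0%}")
```

With revenue held fixed, every unit of inference spend removed flows directly into gross margin, which is why model choice and infrastructure efficiency carry so much weight.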

Go-to-Market and Monetization Evolve

Go-to-market strategies for AI products are becoming more complex. While sales-led motions remain common, hybrid approaches incorporating product-led elements are gaining traction. Channel partnerships are also emerging as a significant growth lever.

Monetization models are in flux. Subscription fees are still prevalent, but usage-based and outcome-based pricing are growing. A significant portion of companies plan to adjust their AI pricing models in the next year, driven by customer demand for value-aligned pricing and margin concerns.