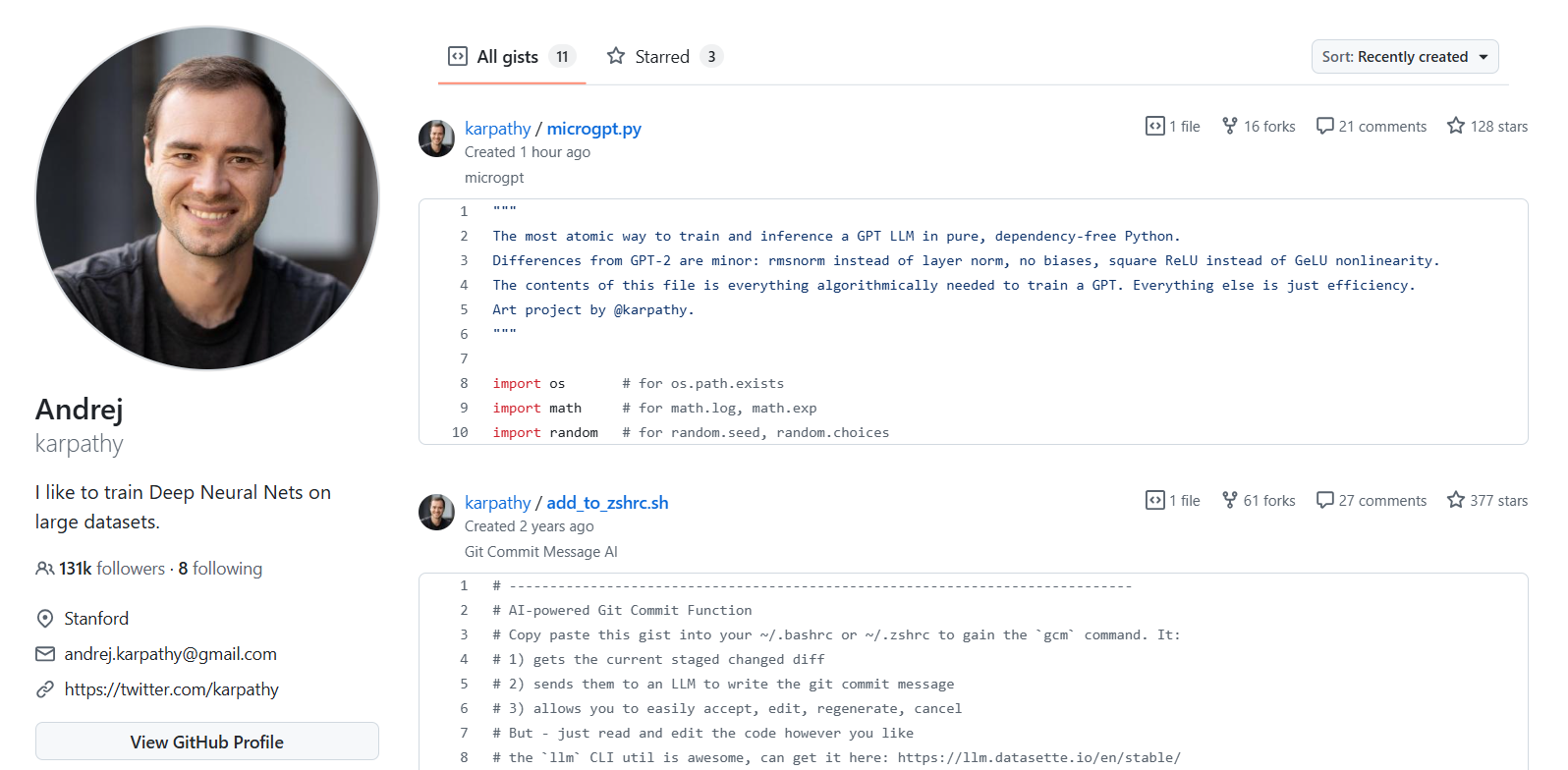

Andrej Karpathy, a prominent figure in AI research and former director of AI at Tesla, has unveiled what he calls microGPT. This project is a remarkably compact implementation of a GPT-style language model, written entirely in pure, dependency-free Python.

Described by Karpathy as an "art project," microGPT is designed to distill the essential algorithmic components required to train and run a Transformer-based language model. It intentionally omits efficiency optimizations and framework abstractions, focusing solely on the core mechanics.

Anatomy of microGPT

The implementation uses a simplified Transformer architecture. Its main departures from the standard GPT-2 are RMSNorm in place of LayerNorm, no bias terms, and a squared ReLU nonlinearity in place of GELU.
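All three changes are small enough to show directly. Below is a minimal, forward-only sketch in plain Python. It assumes vectors are lists of floats and weight matrices are lists of rows. The function names (`rmsnorm`, `linear`, `relu_squared`, `mlp`) and the `eps` value are illustrative assumptions rather than Karpathy's code. The sketch also leaves out the learned gain that RMSNorm often carries, and, because microGPT actually trains its model, its real version must also handle gradients, which are omitted here.

```python
import math

# Illustrative sketch: function names and eps are assumptions, not microGPT's code.

def rmsnorm(x, eps=1e-5):
    # Divide by the root-mean-square of x. Unlike LayerNorm, there is no mean
    # subtraction and no bias; this sketch also omits a learned gain.
    ms = sum(xi * xi for xi in x) / len(x)
    scale = 1.0 / math.sqrt(ms + eps)
    return [xi * scale for xi in x]

def linear(x, w):
    # Bias-free linear layer: w is a list of rows (out_features x in_features).
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]

def relu_squared(x):
    # Squared ReLU, max(0, v) ** 2, used here in place of GELU.
    return [max(0.0, xi) ** 2 for xi in x]

def mlp(x, w_fc, w_proj):
    # Feed-forward block: project to the hidden width, apply squared ReLU,
    # and project back, with no bias terms anywhere.
    return linear(relu_squared(linear(x, w_fc)), w_proj)
```

For instance, `rmsnorm([3.0, 4.0])` returns a vector whose mean square is 1 up to the small `eps` term, and `relu_squared([-2.0, 0.0, 3.0])` gives `[0.0, 0.0, 9.0]`.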

Karpathy's code provides a clear, albeit minimal, view of concepts like token and positional embeddings, multi-head self-attention, and the feed-forward network. It even includes a basic character-level tokenizer and an Adam optimizer, all built from scratch.
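To make these pieces concrete, here is a sketch in the same dependency-free spirit. It covers a character-level tokenizer, summed token and positional embeddings, multi-head causal self-attention, and one Adam update step on flat lists. This is an illustration under stated assumptions, not microGPT's source. The names (`build_char_tokenizer`, `causal_self_attention`, `adam_step`, `wte`, `wpe`, and the rest), the random initialization, and the Adam defaults (the commonly used 0.9 / 0.999 / 1e-8) are our own choices. Gradients passed to `adam_step` are assumed to come from the caller.

```python
import math
import random

# Illustrative sketch only: all names and hyperparameters below are
# assumptions, not taken from microGPT's source.

def build_char_tokenizer(text):
    # Character-level vocabulary: each distinct character gets an integer id.
    chars = sorted(set(text))
    stoi = {ch: i for i, ch in enumerate(chars)}
    itos = {i: ch for ch, i in stoi.items()}
    def encode(s):
        return [stoi[c] for c in s]
    def decode(ids):
        return "".join(itos[i] for i in ids)
    return encode, decode, len(chars)

def matvec(w, x):
    # Bias-free linear map; w is a list of rows.
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def embed(ids, wte, wpe):
    # Each position's input is its token embedding plus its position embedding.
    return [[a + b for a, b in zip(wte[tok], wpe[pos])] for pos, tok in enumerate(ids)]

def causal_self_attention(xs, wq, wk, wv, wo, n_head):
    # xs holds T vectors of width d; each head uses its own d // n_head slice.
    hd = len(xs[0]) // n_head
    qs = [matvec(wq, x) for x in xs]
    ks = [matvec(wk, x) for x in xs]
    vs = [matvec(wv, x) for x in xs]
    outs = []
    for t in range(len(xs)):
        concat = []
        for h in range(n_head):
            lo, hi = h * hd, (h + 1) * hd
            # Causal mask: position t only scores positions 0..t.
            scores = [sum(q * k for q, k in zip(qs[t][lo:hi], ks[s][lo:hi])) / math.sqrt(hd)
                      for s in range(t + 1)]
            w = softmax(scores)
            concat.extend(sum(w[s] * vs[s][j] for s in range(t + 1)) for j in range(lo, hi))
        outs.append(matvec(wo, concat))  # mix the concatenated heads
    return outs

def adam_step(params, grads, m, v, t, lr=1e-3, b1=0.9, b2=0.999, eps=1e-8):
    # One in-place Adam update on flat lists; t is the 1-based step count.
    for i, g in enumerate(grads):
        m[i] = b1 * m[i] + (1 - b1) * g
        v[i] = b2 * v[i] + (1 - b2) * g * g
        m_hat = m[i] / (1 - b1 ** t)  # bias-corrected first moment
        v_hat = v[i] / (1 - b2 ** t)  # bias-corrected second moment
        params[i] -= lr * m_hat / (math.sqrt(v_hat) + eps)

# Usage: tokenize, embed, and attend with small random weights.
random.seed(0)
encode, decode, vocab_size = build_char_tokenizer("hello world")
d, n_head, block_size = 8, 2, 16

def rand_matrix(rows, cols):
    return [[random.gauss(0, 0.02) for _ in range(cols)] for _ in range(rows)]

wte, wpe = rand_matrix(vocab_size, d), rand_matrix(block_size, d)
wq, wk, wv, wo = (rand_matrix(d, d) for _ in range(4))
out = causal_self_attention(embed(encode("hello"), wte, wpe), wq, wk, wv, wo, n_head)
print(len(out), len(out[0]))  # 5 8
```

Because of the causal mask, the outputs for a prefix do not depend on later characters. Encoding "held" instead of "hello" leaves the first three output vectors unchanged.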

Educational Value

The primary goal of microGPT appears to be educational. By stripping away complexity, Karpathy aims to offer a transparent and accessible learning tool for understanding the inner workings of modern LLMs. This aligns with his earlier educational projects, such as micrograd and nanoGPT.

The project has garnered significant attention on GitHub, highlighting the community's interest in understanding the fundamental building blocks of AI. The code's elegance and clarity have been widely praised, reinforcing its status as a valuable educational resource.