In an era where digital transactions are the norm, online shopping scams have escalated dramatically, amplified by the sophisticated capabilities of artificial intelligence. WIRED’s Andrew Couts, in a recent Incognito Mode deep dive, illuminated how these deceptive practices, which accounted for a staggering $12.5 billion in losses in 2024, are becoming increasingly difficult to distinguish from legitimate commerce. This trend is not merely an inconvenience but a significant threat to consumer trust and digital security, posing complex challenges for the tech industry, cybersecurity firms, and regulatory bodies alike.

Couts's analysis underscores a critical shift: AI has transformed the landscape of online fraud, allowing scammers to operate with unprecedented scale and realism. The core insight here is that generative AI tools have democratized the creation of highly convincing fraudulent content, from fake websites to synthetic product images, blurring the lines between genuine and deceptive online experiences. This technological leap has drastically lowered the barrier to entry for malicious actors, making scam operations more accessible and potent than ever before.

The proliferation of fake websites stands as a prime example of AI’s enabling power. "The prevalence of fake websites rose 790% in early 2025," Couts stated, attributing this surge directly to AI's ability to effortlessly craft sites that mirror legitimate businesses. Scammers leverage AI to generate URLs that are often just one character off from a trusted brand, coupled with identical site designs, rendering them virtually indistinguishable to the casual observer. Beyond simple typosquatting, sophisticated DNS spoofing techniques can redirect users from legitimate URLs to fraudulent ones, further camouflaging the deception. Once on these imposter sites, users are at the mercy of the scammer’s intent, whether it’s stealing credit card details, harvesting personal information, or simply taking money for products that will never arrive.
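The "one character off" trick described above lends itself to a simple automated check. What follows is a minimal sketch, not a method Couts describes: it compares a URL's hostname against a small allowlist using Levenshtein edit distance and flags near misses. The `TRUSTED_DOMAINS` set, the function names, and the distance threshold are all assumptions made for illustration.

```python
from urllib.parse import urlsplit

# Hypothetical allowlist chosen for illustration; not taken from the article.
TRUSTED_DOMAINS = {"amazon.com", "walmart.com", "fedex.com"}


def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance computed with a two-row dynamic program."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # delete ca
                            curr[j - 1] + 1,      # insert cb
                            prev[j - 1] + cost))  # substitute ca -> cb
        prev = curr
    return prev[-1]


def hostname_of(url: str) -> str:
    """Lowercased hostname without a leading 'www.'; the URL needs a scheme."""
    host = urlsplit(url).hostname or ""
    return host[4:] if host.startswith("www.") else host


def lookalike_of(url: str, max_distance: int = 1):
    """Return the trusted domain this URL imitates, or None if none is close."""
    host = hostname_of(url)
    if host in TRUSTED_DOMAINS:
        return None  # exact match: the genuine domain
    for trusted in sorted(TRUSTED_DOMAINS):
        if 0 < edit_distance(host, trusted) <= max_distance:
            return trusted
    return None


print(lookalike_of("https://www.amaz0n.com/deals"))
print(lookalike_of("https://amazon.com/"))
```

A check like this only catches near-miss spellings. It would not flag a trusted name buried in a longer hostname, lookalike Unicode characters, or the DNS spoofing mentioned above, where the URL itself is genuine.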

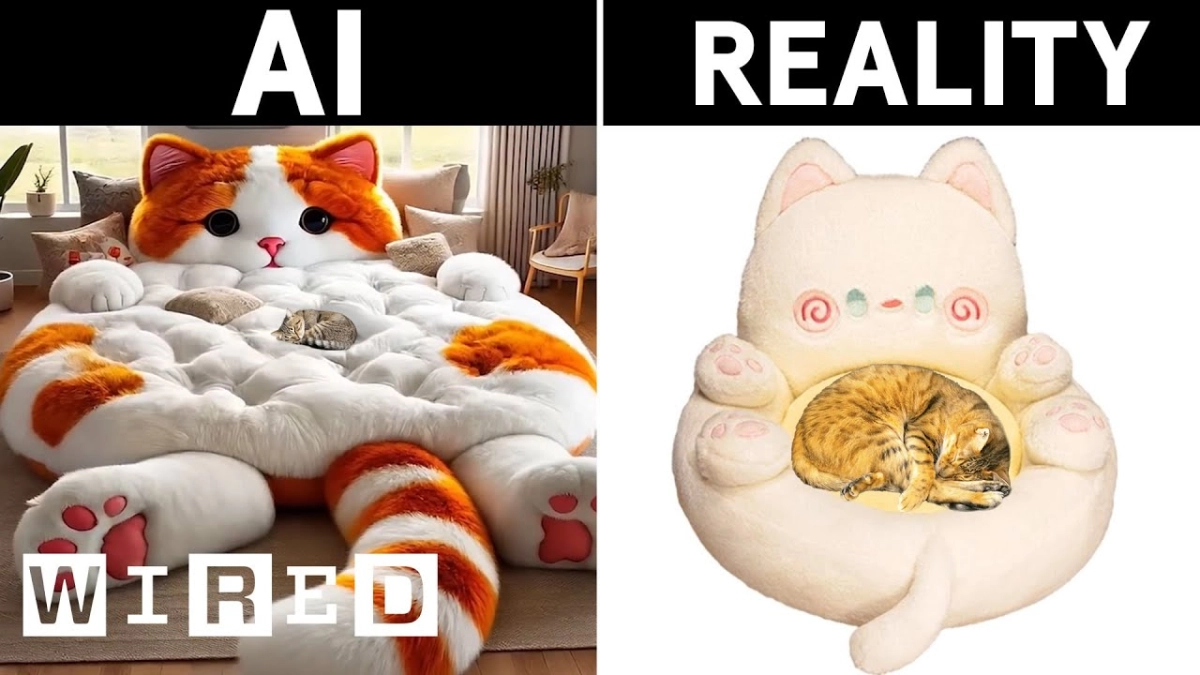

The problem extends to fake merchandise, where AI-generated images present products that either don't exist or are vastly misrepresented. Historically, scammers might have stolen existing product photos, a tactic easily detected through reverse image searches. That safeguard no longer holds. "With AI, scammers can create entirely new images of entirely new products," Couts explained. This means consumers might purchase a fantastical item, like an oversized cat-shaped bed, only to receive a miniature, poor-quality imitation, or nothing at all. The ease with which AI can render hyper-realistic, yet entirely fabricated, visuals makes it an invaluable tool for fraudsters preying on consumer desires for unique or discounted goods.

Social media platforms have become fertile ground for these AI-enhanced scams, exploiting user data and algorithmic recommendations. Fake coupons, offering "crazy deals" like 80% off high-value items, are common. Another sophisticated tactic involves "disgruntled employee" scams, where individuals purport to share secret discount codes from major retailers on platforms like TikTok or Instagram. While the codes might appear to work, the shipping costs are often inflated disproportionately, or the promised items never materialize. This highlights how AI-driven personalization, initially designed to enhance user experience, can be weaponized to target individuals with highly convincing, yet fraudulent, offers.

Shipping scams, a perennial favorite among fraudsters, have also evolved. Consumers, accustomed to frequent online purchases, readily expect package delivery alerts. Scammers exploit this by sending fake text messages from seemingly legitimate carriers like FedEx or UPS, claiming delayed packages or requesting additional information. Clicking these links often leads to phishing sites designed to steal personal data or install malware, turning an anticipated delivery into a digital nightmare. "These scams work because everybody's buying so much online all the time," Couts observed, noting the inherent trust consumers place in these notifications.
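One defensive habit against fake delivery texts is to look at where a link actually points before tapping it. The sketch below is an illustration under stated assumptions rather than anything from Couts's segment: it treats `fedex.com` and `ups.com` as the carriers' official domains and accepts a link only if its hostname is one of those domains or a subdomain of one. The allowlist and the function name are hypothetical.

```python
from urllib.parse import urlsplit

# Assumed allowlist for illustration; a real tool would maintain a vetted list.
OFFICIAL_CARRIER_DOMAINS = ("fedex.com", "ups.com")


def is_official_carrier_link(url: str) -> bool:
    """True only if the link's hostname is an allowlisted domain or its subdomain."""
    host = (urlsplit(url).hostname or "").rstrip(".")
    return any(host == domain or host.endswith("." + domain)
               for domain in OFFICIAL_CARRIER_DOMAINS)


# A genuine tracking link passes; the imitations do not.
print(is_official_carrier_link("https://www.fedex.com/fedextrack/"))
print(is_official_carrier_link("https://fedex.com.track-parcel.example/x"))
print(is_official_carrier_link("https://ups.com@evil.example/track"))
```

Matching on the hostname suffix, rather than searching for the brand name anywhere in the URL, is the design choice that matters here: scammers routinely place a trusted name in a subdomain, path, or userinfo field of a domain they control.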

Even charitable giving is not immune. During times of crisis or around holidays, fake charities emerge, often employing AI-generated imagery and text to evoke a strong emotional response and a sense of urgency. These scams exploit human empathy, soliciting donations for non-existent causes or organizations that quickly disappear once funds are collected. The ability of AI to craft compelling narratives and visuals makes these appeals incredibly potent, deceiving well-intentioned donors.

Perhaps one of the most unexpected new online scams, as Couts detailed, is "brushing." This scheme begins offline with an unsolicited package arriving at a consumer's doorstep, containing an item they never ordered. The package, bearing the recipient's correct name and address, often includes a QR code. Scanning this code directs the user to a seemingly legitimate website, but it’s a trap. The true purpose of brushing is to create a fake transaction record, allowing scammers to then post fraudulent positive reviews under the recipient's name, boosting product visibility and credibility for their illicit operations. This not only compromises personal data but also corrupts the integrity of online review systems.

The battle against AI-powered online scams requires a multi-pronged approach, focusing on both technological defenses and heightened consumer awareness. While platforms and cybersecurity firms work to develop advanced AI detection systems, individuals must adopt a more skeptical posture online. This includes meticulously checking URLs for discrepancies, independently verifying retailers, especially those offering deals that seem "too good to be true," and exercising extreme caution with unsolicited links or packages. The digital landscape demands constant vigilance, as the tools that drive innovation are simultaneously being weaponized for deception.