The AI race for raw reasoning power has hit a practical limit. Users want frontier-level intelligence, the kind that solves complex math problems, but not at the cost of minutes-long waits or exorbitant API fees. Enter Step 3.5 Flash, a new model from the StepFun Team designed to bridge this gap.

Intelligence Density Over Brute Force

Step 3.5 Flash is built on a principle StepFun calls "High-Density Intelligence." It pairs a large 196-billion-parameter foundation with a lean execution path: only 11 billion parameters are active for any given token. This design lets it compete with models such as GPT-5.2 xHigh and Gemini 3.0 Pro while keeping latency and cost low.
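The 196B/11B split can be turned into a rough per-token cost estimate. This back-of-the-envelope calculation assumes the common approximation of about 2 FLOPs per active parameter per token for a forward pass and ignores attention and routing overhead, so the figures are illustrative rather than measured.

```latex
\begin{aligned}
\frac{N_{\text{active}}}{N_{\text{total}}} &= \frac{11 \times 10^{9}}{196 \times 10^{9}} \approx 0.056 \\
C_{\text{MoE}} &\approx 2N_{\text{active}} = 2.2 \times 10^{10}\ \text{FLOPs/token} \\
C_{\text{dense}} &\approx 2N_{\text{total}} \approx 3.9 \times 10^{11}\ \text{FLOPs/token} \\
C_{\text{dense}} / C_{\text{MoE}} &= 196 / 11 \approx 17.8
\end{aligned}
```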

The Architecture: Smarter, Not Just Bigger

At its core, Step 3.5 Flash uses a sparse Mixture-of-Experts (MoE) backbone. Although the model has 196B parameters in total, only 11B are activated for each token, so per-token compute is far lower than that of a dense model of the same size.
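To make "only a fraction of the parameters run per token" concrete, here is a minimal sketch of generic top-k MoE routing in plain Python. It is not StepFun's implementation: the expert count (8), `k=2`, the toy experts, and the helper names `top_k_route` and `moe_layer` are hypothetical. The point is that the router scores every expert, but only the k highest-scoring experts are actually executed.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]


def softmax(logits: Sequence[float]) -> List[float]:
    # Subtract the max for numerical stability before exponentiating.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(router_logits: Sequence[float], k: int) -> List[Tuple[int, float]]:
    """Pick the k highest-scoring experts and renormalize their gate weights to sum to 1."""
    probs = softmax(router_logits)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in chosen)
    return [(i, probs[i] / norm) for i in chosen]


def moe_layer(x: Vector, router_logits: Sequence[float],
              experts: Sequence[Callable[[Vector], Vector]], k: int) -> Vector:
    """Gate-weighted sum of the selected experts' outputs; unselected experts never run."""
    out = [0.0] * len(x)
    for idx, weight in top_k_route(router_logits, k):
        y = experts[idx](x)
        out = [o + weight * yi for o, yi in zip(out, y)]
    return out


if __name__ == "__main__":
    calls: List[int] = []

    def make_expert(i: int) -> Callable[[Vector], Vector]:
        # Hypothetical toy expert: scales its input by (i + 1) and logs that it ran.
        def expert(x: Vector) -> Vector:
            calls.append(i)
            return [(i + 1) * v for v in x]
        return expert

    experts = [make_expert(i) for i in range(8)]  # hypothetical: 8 experts
    logits = [0.1, 2.0, -1.0, 0.5, 1.5, -0.3, 0.0, 0.2]
    y = moe_layer([1.0, 2.0], logits, experts, k=2)
    print("experts executed:", sorted(calls))
    print("output:", y)
```

Running it shows that only two of the eight experts execute for the token. A real model scales the same idea up (many more experts per layer) so that roughly 11B of the 196B parameters participate in each token's forward pass.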

Key innovations include:

- Hybrid Attention: Sliding Window Attention (SWA) and Full Attention are mixed at a 3:1 ratio, balancing local detail capture with long-range dependency modeling.

- Multi-Token Prediction (MTP-3): The model generates three tokens simultaneously, significantly accelerating output for tasks requiring long sequences.

- Balanced Routing: EP-Group Balanced Routing keeps the load even across expert-parallel (EP) groups, preventing hardware bottlenecks and expert collapse, a common failure mode in sparse MoE training.
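The hybrid attention pattern can be sketched as a layer schedule plus an attention mask. This assumes the 3:1 ratio is applied layer-wise, with three SWA layers followed by one Full Attention layer; the ordering within each group and the window size used below are hypothetical, since the article gives only the ratio. `layer_schedule` and `attention_mask` are illustrative names.

```python
from typing import List, Optional


def layer_schedule(num_layers: int, swa_per_full: int = 3) -> List[str]:
    """Repeat swa_per_full SWA layers followed by one FULL layer (assumed ordering)."""
    period = swa_per_full + 1
    return ["FULL" if (i + 1) % period == 0 else "SWA" for i in range(num_layers)]


def attention_mask(seq_len: int, window: Optional[int]) -> List[List[bool]]:
    """Causal mask; with a window, query q sees only keys k where q - window < k <= q."""
    mask = []
    for q in range(seq_len):
        row = []
        for k in range(seq_len):
            causal = k <= q
            in_window = window is None or q - k < window
            row.append(causal and in_window)
        mask.append(row)
    return mask


if __name__ == "__main__":
    print(layer_schedule(8))
    swa = attention_mask(6, window=3)    # hypothetical window of 3 tokens
    full = attention_mask(6, window=None)
    print("SWA keys for last query: ", [k for k in range(6) if swa[5][k]])
    print("Full keys for last query:", [k for k in range(6) if full[5][k]])
```

SWA layers cost O(n · window) instead of O(n²) and need only a bounded KV cache, while the interleaved Full Attention layers keep a path for long-range information.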
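The article does not say how the extra tokens from Multi-Token Prediction are consumed at inference time. A common pattern, assumed here, is self-speculative decoding: the MTP heads propose draft tokens, the main model checks them in a single forward pass, and the longest prefix that matches the main model's own greedy choices is kept. The sketch below shows only that greedy verification step. `verify_drafts` is a hypothetical helper, and the token IDs are made up.

```python
from typing import List, Sequence


def verify_drafts(draft: Sequence[int], target: Sequence[int]) -> List[int]:
    """Greedy speculative verification (an assumed use of MTP drafts).

    draft:  tokens proposed by the MTP heads, d_1..d_n.
    target: the main model's greedy token at each of the n + 1 positions,
            computed in one forward pass over prefix + draft.
    Returns the tokens emitted this step: the longest matching draft prefix
    plus one token from the main model (a correction or a bonus token).
    """
    if len(target) != len(draft) + 1:
        raise ValueError("target must have exactly one more position than draft")
    accepted: List[int] = []
    for d, t in zip(draft, target):
        if d != t:
            accepted.append(t)  # first mismatch: take the main model's token and stop
            return accepted
        accepted.append(d)
    accepted.append(target[-1])  # every draft matched: keep the bonus token as well
    return accepted


if __name__ == "__main__":
    print(verify_drafts([5, 9, 2], [5, 9, 7, 1]))  # partial acceptance
    print(verify_drafts([5, 9, 2], [5, 9, 2, 8]))  # full acceptance
```

Under this scheme, each step emits between one token and `len(draft) + 1` tokens for roughly the cost of a single main-model pass, which is where the speed-up on long outputs comes from. Greedy verification also keeps the output identical to plain greedy decoding.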
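The article does not describe how EP-Group Balanced Routing works internally. This sketch only illustrates the quantity such a scheme tries to keep flat. It assumes experts are split into equal, contiguous expert-parallel groups (one per device), counts how many routed (token, expert) slots land on each group, and reports the max-to-mean load ratio. A ratio of 1.0 is perfectly even; large values flag a hot device or collapsing experts. The group layout and the helper names `group_loads` and `imbalance` are hypothetical.

```python
from collections import Counter
from typing import List, Sequence


def group_loads(assignments: Sequence[Sequence[int]], num_experts: int,
                num_groups: int) -> List[int]:
    """Count routed (token, expert) slots per EP group (contiguous, equal-size groups assumed)."""
    if num_experts % num_groups:
        raise ValueError("num_experts must be divisible by num_groups")
    per_group = num_experts // num_groups
    counts = Counter(e // per_group for token in assignments for e in token)
    return [counts.get(g, 0) for g in range(num_groups)]


def imbalance(loads: Sequence[int]) -> float:
    """Max load divided by mean load; 1.0 means perfectly balanced."""
    mean = sum(loads) / len(loads)
    return max(loads) / mean if mean else 0.0


if __name__ == "__main__":
    # Hypothetical top-2 expert choices for 4 tokens; 8 experts on 4 groups.
    balanced = [[0, 2], [4, 6], [1, 3], [5, 7]]
    skewed = [[0, 1], [0, 1], [1, 2], [0, 3]]
    for name, a in [("balanced", balanced), ("skewed", skewed)]:
        loads = group_loads(a, num_experts=8, num_groups=4)
        print(name, loads, imbalance(loads))
```

In the skewed case, one device receives three times its fair share while two sit idle. That is the bottleneck the routing scheme is meant to prevent, since every step waits for the slowest group.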

Training for Stability and Scale

Trained on 17.2 trillion tokens, Step 3.5 Flash prioritizes training stability. The StepFun Team built an asynchronous metrics server that monitors training at the micro-batch level, catching and mitigating numerical precision issues and expert collapse before they derail a run. This close monitoring is what keeps training reliable at this scale.
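One way to picture an asynchronous, micro-batch-level monitor is sketched below. It is only a sketch under stated assumptions: the training loop pushes per-micro-batch metrics onto a queue and returns immediately, while a background thread checks them. The specific checks (non-finite loss, per-expert load ratio, idle experts), the threshold, and the class names are hypothetical illustrations, not StepFun's metrics server.

```python
import math
import queue
import threading
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class MicroBatchMetrics:
    step: int
    micro_batch: int
    loss: float
    expert_token_counts: List[int]  # tokens routed to each expert in this micro-batch


class MetricsMonitor:
    """Checks metrics on a background thread so the training loop never blocks on them."""

    def __init__(self, max_load_ratio: float = 4.0):
        self.max_load_ratio = max_load_ratio
        self.alerts: List[str] = []  # written only by the worker thread
        self._queue: "queue.Queue[Optional[MicroBatchMetrics]]" = queue.Queue()
        self._thread = threading.Thread(target=self._run, daemon=True)
        self._thread.start()

    def submit(self, m: MicroBatchMetrics) -> None:
        self._queue.put(m)  # returns immediately on an unbounded queue

    def close(self) -> None:
        self._queue.put(None)  # sentinel: worker drains the queue, then exits
        self._thread.join()    # after join, reading self.alerts is safe

    def _run(self) -> None:
        while True:
            m = self._queue.get()
            if m is None:
                return
            self._check(m)

    def _check(self, m: MicroBatchMetrics) -> None:
        tag = f"step {m.step} mb {m.micro_batch}"
        if not math.isfinite(m.loss):
            self.alerts.append(f"{tag}: non-finite loss")
        counts = m.expert_token_counts
        mean = sum(counts) / len(counts)
        if mean > 0 and max(counts) / mean > self.max_load_ratio:
            self.alerts.append(f"{tag}: expert load ratio {max(counts) / mean:.1f}")
        idle = [i for i, c in enumerate(counts) if c == 0]
        if idle:
            self.alerts.append(f"{tag}: idle experts {idle}")


if __name__ == "__main__":
    mon = MetricsMonitor(max_load_ratio=3.0)  # hypothetical threshold
    mon.submit(MicroBatchMetrics(1, 0, 2.31, [25, 24, 26, 25]))
    mon.submit(MicroBatchMetrics(1, 1, float("nan"), [25, 25, 25, 25]))
    mon.submit(MicroBatchMetrics(1, 2, 2.28, [97, 1, 2, 0]))
    mon.close()
    for alert in mon.alerts:
        print(alert)
```

Decoupling the checks from the training step is the design point. Monitoring at micro-batch granularity adds almost no latency to the loop, yet a precision blow-up or an expert that stops receiving tokens is flagged within one micro-batch instead of surfacing later in aggregated logs.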

Scalable Reasoning with MIS-PO

Traditional Reinforcement Learning (RL) often suffers from gradient noise, hindering performance on complex, long-horizon tasks. Step 3.5 Flash introduces Metropolis Independence Sampling-Filtered Policy Optimization (MIS-PO).

MIS-PO filters out high-variance samples using a trust region approach. This allows the model to scale its reasoning capabilities for intricate tasks like multi-round coding sessions and scientific research without accuracy degradation.
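The article gives only the high-level idea of MIS-PO: samples are filtered with a trust-region criterion to reduce gradient variance. The sketch below is a generic stand-in, not StepFun's algorithm. It assumes per-token log-probabilities under the current policy and under the policy that generated the rollout. It keeps a token only if its importance ratio lies in a hypothetical band [1/c, c], then averages a REINFORCE-style surrogate, -A · ratio, over the kept tokens. The name suggests a Metropolis-style accept/reject step; this sketch uses a simpler deterministic band instead. The band width `c`, the token-level granularity, and the helper names are illustrative assumptions.

```python
import math
from typing import List, Sequence, Tuple


def filter_by_ratio(logp_new: Sequence[float], logp_old: Sequence[float],
                    c: float = 2.0) -> List[bool]:
    """Keep a token only if pi_new / pi_old lies in [1/c, c] (checked in log space)."""
    lo, hi = -math.log(c), math.log(c)
    return [lo <= (n - o) <= hi for n, o in zip(logp_new, logp_old)]


def filtered_pg_loss(logp_new: Sequence[float], logp_old: Sequence[float],
                     advantages: Sequence[float], c: float = 2.0) -> Tuple[float, int]:
    """Surrogate loss -A * ratio averaged over kept tokens; returns (loss, kept_count).

    Tokens outside the trust region contribute nothing, which drops the
    large-ratio terms that dominate gradient variance. In an autograd
    framework, the gradient of ratio is ratio * grad(logp_new).
    """
    keep = filter_by_ratio(logp_new, logp_old, c)
    terms = [-a * math.exp(n - o)
             for n, o, a, k in zip(logp_new, logp_old, advantages, keep) if k]
    if not terms:
        return 0.0, 0
    return sum(terms) / len(terms), len(terms)


if __name__ == "__main__":
    logp_old = [-1.0, -2.0, -0.5, -3.0]   # hypothetical rollout-policy log-probs
    logp_new = [-1.1, -0.2, -0.6, -3.05]  # token 1 drifted far: ratio ~ e^1.8
    adv = [1.0, 1.0, -0.5, 2.0]
    print(filter_by_ratio(logp_new, logp_old))
    print(filtered_pg_loss(logp_new, logp_old, adv))
```

Compared with PPO-style clipping, which bounds an outlier's contribution, hard filtering removes the outlier token from the gradient entirely. That is one plausible reading of "filters out high-variance samples," but the exact criterion MIS-PO uses is not specified in the article.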

Performance Benchmarks: The "Flash" Factor

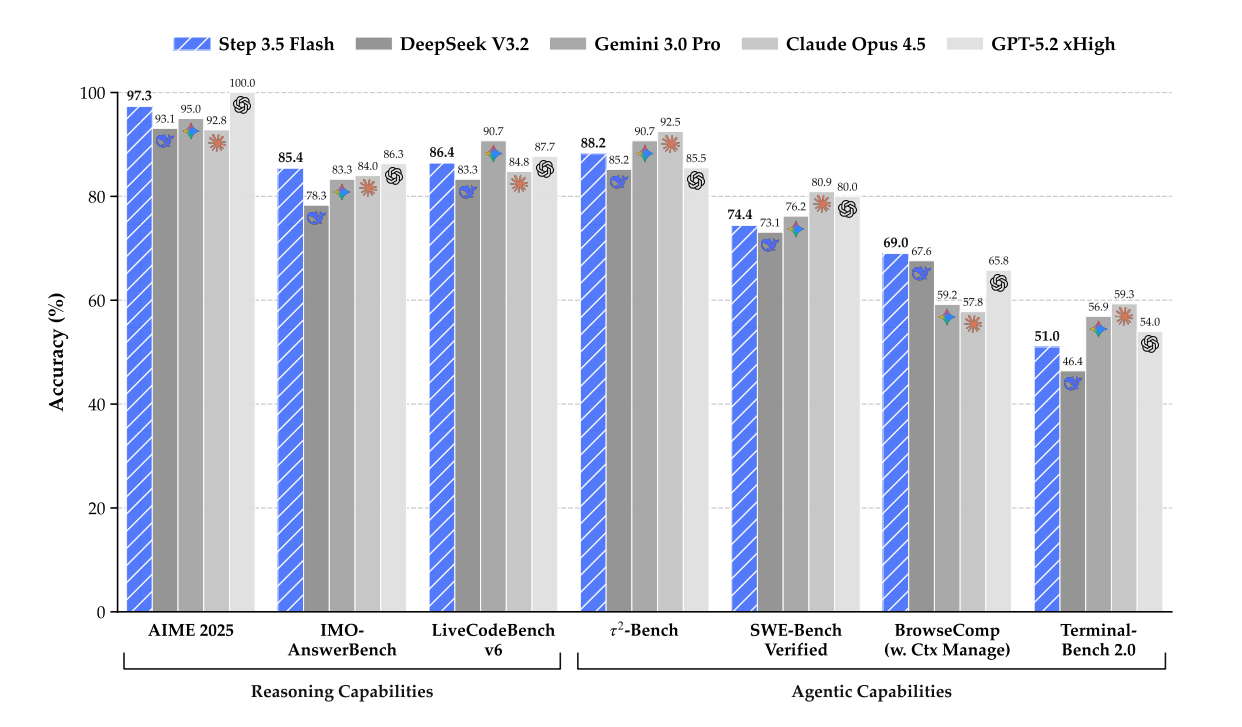

Efficiency does not come at the expense of performance. Step 3.5 Flash demonstrates strong capabilities against leading proprietary models:

- Mathematics: 85.4% on IMO-AnswerBench.

- Coding: 86.4% on LiveCodeBench-v6, surpassing larger dense models.

- Agentic Tasks: 88.2% on τ²-Bench for tool use and logic.

Democratizing Frontier AI

The release of Step 3.5 Flash signals a shift from "Bigger is Better" to "Smarter is Faster." By enabling high-density intelligence that can potentially run on high-end workstations, StepFun is making advanced AI more accessible. For industrial applications requiring real-time web browsing, command execution, and code generation, this model sets a new standard.