NVIDIA’s Blackwell GPUs just swept a new, independent AI benchmark, but the real story isn’t record-breaking speed. It’s the brutal economics of running AI at scale. Citing results from the InferenceMAX v1 benchmark by SemiAnalysis, NVIDIA is making a bold claim: its hardware can deliver a 15x return on investment.

The headline number is staggering: a $5 million investment in a GB200 NVL72 system can supposedly generate $75 million in token revenue. This is the core of NVIDIA’s new pitch for the era of “AI factories,” where the focus shifts from theoretical performance to the total cost of ownership and profitability.
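The arithmetic behind the 15x figure is simple enough to check. The sketch below uses only the two dollar amounts quoted above; the function names are illustrative, and it ignores power, staffing, and other operating costs, which the headline number does not break out. It also shows that the 15x is a revenue multiple, and that the net gain relative to the investment would be 14x.

```python
def revenue_multiple(investment_usd: float, revenue_usd: float) -> float:
    """Revenue generated per dollar invested (the '15x' headline figure)."""
    return revenue_usd / investment_usd


def net_roi(investment_usd: float, revenue_usd: float) -> float:
    """Net gain per dollar invested, i.e. (revenue - investment) / investment."""
    return (revenue_usd - investment_usd) / investment_usd


# Figures quoted in the article for a GB200 NVL72 system.
investment = 5_000_000
token_revenue = 75_000_000

print(revenue_multiple(investment, token_revenue))  # 15.0
print(net_roi(investment, token_revenue))           # 14.0
```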

The InferenceMAX benchmark, released by industry analysis firm SemiAnalysis, is designed to measure exactly that. It is billed as the first independent test to model the total cost of compute across diverse, real-world scenarios. As AI models evolve from simple chatbots to complex reasoning agents that use tools and generate lengthy responses, the sheer volume of tokens, and the cost to produce them, is exploding. This benchmark aims to capture that reality.

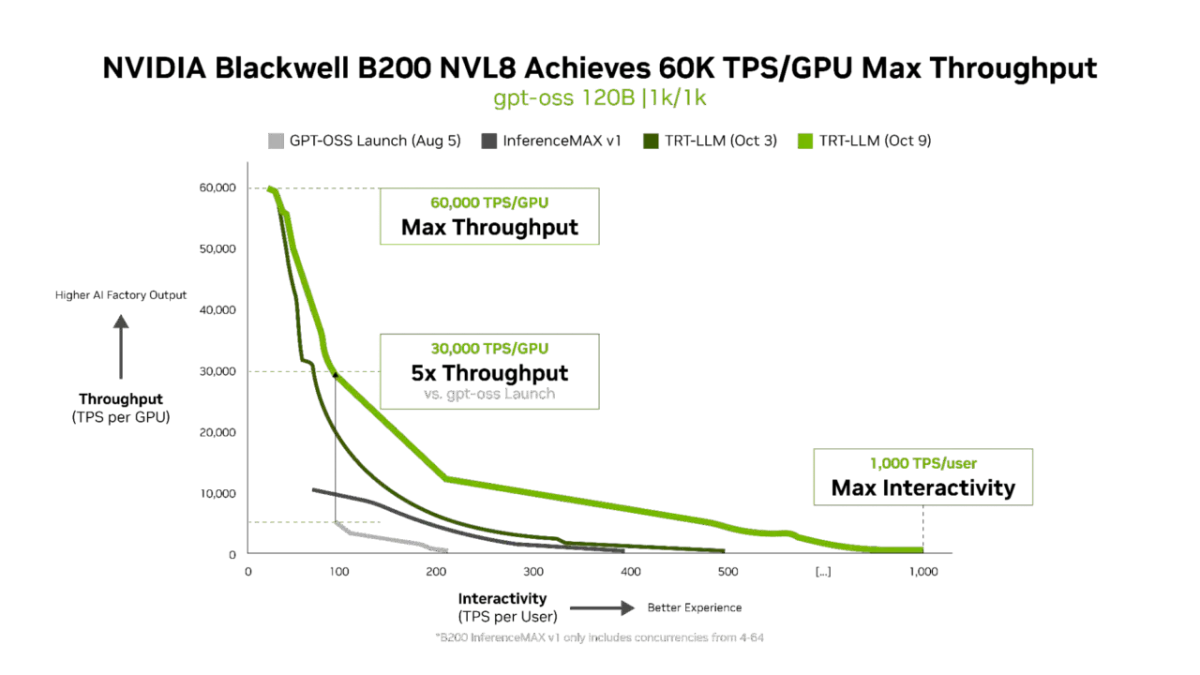

NVIDIA’s results show the B200 GPU reaching up to 60,000 tokens per second, but the more impactful metric might be its cost efficiency. The company claims its software optimizations have driven the cost down to just two cents per million tokens on an open-source model, which it describes as a 5x reduction in just two months.
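To see how throughput turns into a cost-per-token figure, here is a minimal sketch. The hourly GPU cost is a hypothetical placeholder, not a number from NVIDIA or SemiAnalysis, and the article does not say that the 60,000 tokens-per-second and two-cent figures come from the same operating point, so treat the output as an illustration of the formula rather than a reproduction of the benchmark.

```python
def cost_per_million_tokens(gpu_cost_per_hour_usd: float,
                            tokens_per_second: float) -> float:
    """Dollars spent to generate one million tokens on a single GPU."""
    tokens_per_hour = tokens_per_second * 3600
    return gpu_cost_per_hour_usd / tokens_per_hour * 1_000_000


# Hypothetical all-in hourly cost for one GPU (an assumption for illustration).
HYPOTHETICAL_GPU_COST_PER_HOUR = 3.00

cost = cost_per_million_tokens(HYPOTHETICAL_GPU_COST_PER_HOUR, 60_000)
print(f"${cost:.3f} per million tokens")

# A claimed 5x reduction to two cents implies a starting point of about ten cents.
claimed_cost_now = 0.02
implied_cost_before = claimed_cost_now * 5
print(f"${implied_cost_before:.2f} per million tokens two months earlier")
```

The formula makes the lever explicit: at a fixed hourly cost, every doubling of tokens per second halves the cost per token, which is why software-only throughput gains show up directly in the economics.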

The Software Stack is Doing the Heavy Lifting

While the Blackwell silicon is impressive, these results underscore that NVIDIA’s true moat is its software. NVIDIA says it has more than doubled Blackwell’s performance since launch through software updates alone, a testament to its full-stack approach.

The recently released TensorRT-LLM v1.0 is a key part of this story. Through advanced parallelization techniques that leverage the full bandwidth of its NVLink interconnects, NVIDIA has dramatically improved performance on large models like the open-source `gpt-oss-120b`. Further gains come from speculative decoding, in which a cheaper draft step proposes several tokens ahead and the full model verifies them together instead of generating one token at a time. NVIDIA says this triples throughput.
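For readers unfamiliar with speculative decoding, the toy sketch below shows the general draft-and-verify idea in its simplest greedy form. It is not TensorRT-LLM’s implementation, which the article does not detail. Both “models” are made-up deterministic functions (`target_next` and `draft_next` are hypothetical stand-ins), and the verification step is written as a loop where a real system would use one batched forward pass of the large model.

```python
from typing import List, Tuple


def target_next(prefix: List[int]) -> int:
    """Toy stand-in for the large model: a deterministic next-token rule."""
    return (sum(prefix) * 31 + len(prefix)) % 50


def draft_next(prefix: List[int]) -> int:
    """Toy stand-in for a cheap draft model: agrees with the target
    except when the prefix length is a multiple of 4."""
    guess = target_next(prefix)
    return guess if len(prefix) % 4 else (guess + 1) % 50


def greedy_decode(prompt: List[int], n_tokens: int) -> List[int]:
    """Baseline: one target-model call per generated token."""
    out = list(prompt)
    for _ in range(n_tokens):
        out.append(target_next(out))
    return out


def speculative_decode(prompt: List[int], n_tokens: int,
                       k: int = 4) -> Tuple[List[int], int]:
    """Greedy draft-and-verify decoding; returns (tokens, target passes)."""
    out = list(prompt)
    goal = len(prompt) + n_tokens
    target_passes = 0
    while len(out) < goal:
        # 1. The draft model proposes k tokens, one after another.
        proposal: List[int] = []
        for _ in range(k):
            proposal.append(draft_next(out + proposal))
        # 2. The target model checks every proposed position. A real system
        #    scores all k + 1 positions in a single batched forward pass.
        target_passes += 1
        verified = [target_next(out + proposal[:i]) for i in range(k + 1)]
        # 3. Keep the longest prefix where draft and target agree, then
        #    append the target's own token at the first disagreement.
        n_ok = 0
        while n_ok < k and proposal[n_ok] == verified[n_ok]:
            n_ok += 1
        out.extend(proposal[:n_ok])
        out.append(verified[n_ok])
    return out[:goal], target_passes


prompt = [1, 2, 3]
tokens, passes = speculative_decode(prompt, n_tokens=20)
print(tokens == greedy_decode(prompt, 20))  # True: same output as the baseline
print(passes)  # number of target passes, fewer than the 20 tokens generated
```

The output matches plain greedy decoding token for token; the saving comes from needing fewer passes of the expensive model. How much that helps in practice depends on how often the draft agrees with the target, which this toy fixes artificially.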

This isn’t happening in a vacuum. NVIDIA highlighted deep collaborations with open-source communities like vLLM and SGLang, and model builders like Meta, OpenAI, and DeepSeek AI. By optimizing its software stack for the most popular open models, NVIDIA ensures its hardware remains the default choice for the world’s largest AI inference infrastructure.

The results against the previous generation are stark. On a dense model like Llama 3.3 70B, the B200 delivers 4x higher throughput per GPU than the H200. For power-constrained data centers, Blackwell reportedly delivers 10x the throughput per megawatt.

These benchmarks are more than just a marketing win for NVIDIA; they are a strategic effort to frame the conversation around AI infrastructure. By focusing on metrics like ROI and cost-per-token, NVIDIA is arguing that while competitors might produce a fast chip, only its integrated hardware, software, and massive developer ecosystem can deliver the economic efficiency required to run an AI factory profitably. It’s a powerful narrative that turns raw performance into a clear business case, challenging rivals to compete on the entire stack, not just the silicon.