The proliferation of artificial intelligence, particularly large language models, introduces a profound challenge to the very notion of verifiable truth, a concern eloquently articulated by Wikipedia founder Jimmy Wales. He contends that current AI models frequently "hallucinate, don't cite their work and act as if that's not a problem," a fundamental flaw that undermines their utility for anything requiring factual accuracy. This isn't merely a technical glitch; it represents a philosophical divergence between generative AI's probabilistic output and humanity's need for demonstrable fact.

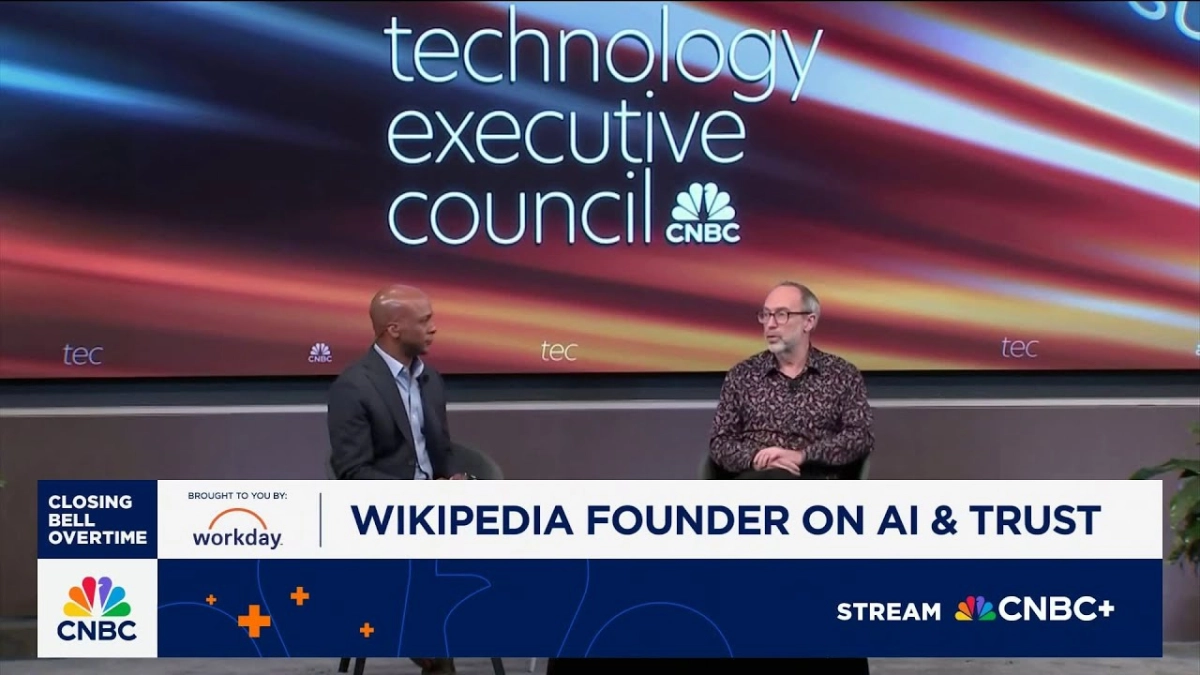

Jimmy Wales spoke with CNBC's Jon Fortt at the CNBC Technology Executive Council event, where the discussion centered on AI's problem with factual errors and its broader implications for trust. Wales, whose career has been defined by building a platform dedicated to accessible and verifiable knowledge, highlighted the stark contrast between AI's current state and the rigorous standards required for credible information. He shared a relatable anecdote: he asked an AI for TV show recommendations, and it confidently suggested a show that simply did not exist.

"If it makes up a show out of thin air, and that's happened to me," Wales recounted, "I was like, that show sounds really interesting and then I'm like it's not in IMDb, it's not anywhere." The AI's subsequent "apology", that it "didn't mean that it was a real show, I was just telling you about shows you might like if they existed," underscores a critical gap. Users approach these systems seeking concrete, actionable information, not speculative fiction. As Wales succinctly put it, the human expectation is clear: "I'm a human, I live here on Earth and I only want to watch shows that exist." This simple statement captures the core tension between AI's impressive linguistic fluency and its often deficient grasp of reality.

The lack of built-in citation and verifiability in many large language models is a significant hurdle to their adoption in domains where accuracy is paramount. Wikipedia relies on community-driven sourcing and editorial review. AI models, by contrast, currently lack a transparent way to show where their information comes from. This makes it exceedingly difficult for users to tell truth from sophisticated fabrication, eroding the trust needed for widespread use in critical applications. The problem worsens when these models produce confident, authoritative-sounding answers that are entirely false, making fact-checking a necessity rather than an optional safeguard.

Wales extended his skepticism to new ventures that use large language models to disseminate knowledge, specifically mentioning Elon Musk's Grokipedia. He openly questioned the viability of such projects in their current form, asserting that "the large language models that he's using to write Grokipedia are going to make massive errors and there's really no way to know for sure." This blunt assessment from a pioneer of online knowledge reflects a deep understanding of what it takes to build a reliable informational resource. It suggests that simply scaling up generative AI will not, by itself, solve the problem of factual accuracy.


The challenge for AI developers is not just to reduce hallucinations but to fundamentally re-engineer systems to prioritize truth and verifiability. This might involve integrating robust real-time fact-checking, mandatory source attribution, or even a human-in-the-loop validation process for critical outputs. Without such mechanisms, AI risks becoming a powerful engine for misinformation, rather than a trusted partner in discovery. The current trajectory, as Wales implies, positions AI as a compelling conversationalist, but an unreliable scholar.

Investing in models that merely sound intelligent without being factually grounded risks building impressive but ultimately fragile edifices. The path forward for AI in critical applications demands a shift from probabilistic, plausible-sounding output to demonstrably verifiable information. Until that fundamental challenge is addressed, the promise of AI as a universal oracle of knowledge will remain largely unfulfilled.