Thinking Machines Lab just made a significant move to challenge OpenAI’s platform dominance. Its Tinker API is now generally available, but the bigger news is a new inference interface that is compatible with the OpenAI API. Developers can now plug models served on Tinker, including the new trillion-parameter Kimi K2 Thinking model, into existing infrastructure built for OpenAI.

This adoption of the de facto industry standard is a direct shot at vendor lock-in. By offering OpenAI API compatibility, Thinking Machines drastically lowers the switching cost for developers looking for alternatives, whether for cost, performance, or specialized models. The new interface lets developers sample from any model by specifying its path, even while that model is still training.
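In practice, OpenAI compatibility means the request itself stays the same; only the endpoint, the key, and the model identifier change. The sketch below builds (without sending) a standard OpenAI chat-completions request using only the Python standard library. The base URL, API key, and model path are hypothetical placeholders, and it is an assumption that Tinker's endpoint accepts this exact chat-completions shape; Tinker's own documentation gives the real URL and path format.

```python
import json
import urllib.request

# Hypothetical placeholders: the real Tinker endpoint URL, key format,
# and model/checkpoint path scheme are not given in this article.
BASE_URL = "https://tinker.example.invalid/v1"
API_KEY = "YOUR_TINKER_API_KEY"
MODEL_PATH = "path/to/your/checkpoint"


def build_chat_request(base_url: str, api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a request in the OpenAI chat-completions shape.

    Switching providers only changes base_url, api_key, and model; the
    headers and JSON body keep the same OpenAI-style layout.
    """
    body = {
        "model": model,  # for Tinker, assumed to be a model path
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 128,
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


req = build_chat_request(BASE_URL, API_KEY, MODEL_PATH, "Summarize this ticket in one line.")
print(req.get_method(), req.full_url)
# Sending it would be urllib.request.urlopen(req) against a real endpoint.
```

With the official `openai` Python SDK, the same switch typically amounts to passing a different `base_url` and `api_key` to the `OpenAI(...)` client and using the model path as the `model` argument.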

Tinker is also scaling up its core capabilities. The new Kimi K2 Thinking model, boasting a trillion parameters, is now available for fine-tuning and is specifically engineered for complex, long chains of reasoning and tool use.

Multimodal Competition Heats Up

Beyond reasoning, Tinker has expanded into multimodal input with the integration of Qwen3-VL (up to 235B parameters). Users can process images, screenshots, and diagrams by interleaving image chunks with text inputs, and both supervised fine-tuning (SFT) and reinforcement learning (RL) work with these inputs out of the box.
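To make "interleaving" concrete, here is a minimal sketch of a multimodal prompt as one ordered list of text and image chunks. `TextChunk`, `ImageChunk`, `build_prompt`, and `describe` are hypothetical names for illustration, not Tinker's SDK types; the point is only that each image sits at a specific position inside the text sequence rather than being attached separately.

```python
from dataclasses import dataclass
from typing import List, Union


@dataclass
class TextChunk:
    text: str


@dataclass
class ImageChunk:
    data: bytes               # encoded image bytes, e.g. a PNG file's contents
    image_format: str = "png"


Chunk = Union[TextChunk, ImageChunk]


def build_prompt(question: str, images: List[bytes]) -> List[Chunk]:
    """Interleave each image with a short text label, then append the question."""
    chunks: List[Chunk] = []
    for i, img in enumerate(images, start=1):
        chunks.append(TextChunk(f"Image {i}:"))
        chunks.append(ImageChunk(img))
    chunks.append(TextChunk(question))
    return chunks


def describe(chunks: List[Chunk]) -> str:
    """Render the chunk order as a readable string, e.g. for logging."""
    parts = []
    for c in chunks:
        if isinstance(c, TextChunk):
            parts.append(f"text({c.text!r})")
        else:
            parts.append(f"image({c.image_format}, {len(c.data)} bytes)")
    return " -> ".join(parts)


prompt = build_prompt("Which chart shows higher accuracy?", [b"<png bytes 1>", b"<png bytes 2>"])
print(describe(prompt))
```

For SFT, a sequence like this would serve as the prompt, with the expected answer appended as the training target.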

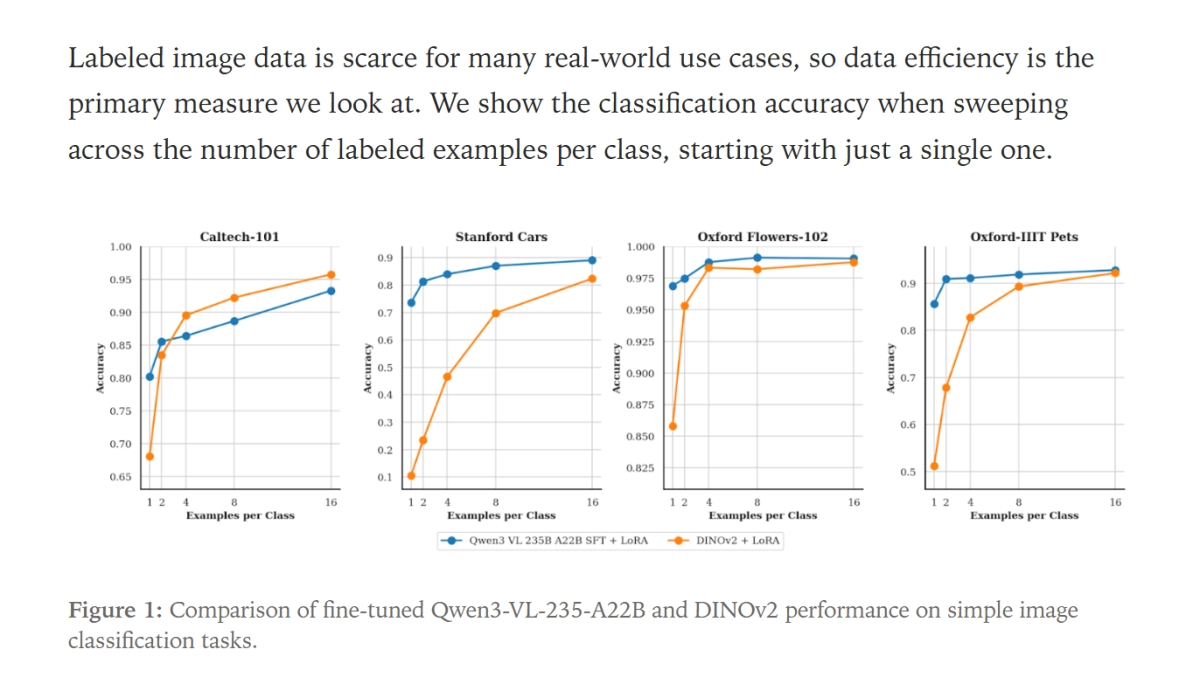

Crucially, Thinking Machines claims these Vision-Language Models (VLMs) are highly data-efficient. In internal tests of the new vision capabilities, Qwen3-VL significantly outperformed vision-only models such as DINOv2 on low-data image classification tasks (for example, identifying car models or flower species from a single example per class). The likely reason is that VLMs can draw on what they already know from language, such as the names and descriptions of car models or flower species, which makes them strong zero-shot and few-shot learners.
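The "one example per class" setting is easy to reproduce for your own data. Below is a minimal sketch of a k-shot split, assuming labeled (image path, class name) pairs; the file names and classes are made up, and this is not Thinking Machines' evaluation code. The same split could feed either a VLM fine-tune or a vision-only baseline such as DINOv2, which keeps the comparison like-for-like.

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

Example = Tuple[str, str]  # (image path, class label)


def k_shot_split(
    examples: Sequence[Example], k: int = 1, seed: int = 0
) -> Tuple[List[Example], List[Example]]:
    """Split (item, label) pairs into a k-shot train set and a held-out eval set.

    Exactly k examples per class go to training; the rest are held out.
    Raises ValueError if any class has fewer than k examples.
    """
    by_label: Dict[str, List[Example]] = defaultdict(list)
    for ex in examples:
        by_label[ex[1]].append(ex)

    rng = random.Random(seed)  # fixed seed makes the split reproducible
    train: List[Example] = []
    held_out: List[Example] = []
    for label in sorted(by_label):  # sorted for deterministic ordering
        group = list(by_label[label])
        if len(group) < k:
            raise ValueError(f"class {label!r} has only {len(group)} examples")
        rng.shuffle(group)
        train.extend(group[:k])
        held_out.extend(group[k:])
    return train, held_out


data = [(f"img_{i}.jpg", label) for i, label in enumerate(["rose", "tulip", "daisy"] * 4)]
train, eval_set = k_shot_split(data, k=1)
print(len(train), len(eval_set))
```

Raising `k` gives the few-shot variants; accuracy on `eval_set` as `k` grows shows how quickly each model benefits from extra examples.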

Tinker is no longer a niche platform; it’s a full-stack competitor offering massive reasoning models, multimodal input, and, most importantly, the freedom to integrate without rebuilding your entire stack. (Source: Thinking Machines Lab, Dec 12, 2025)