For years, the default for structured data was clear: if it was a spreadsheet, you turned to gradient-boosted decision trees like XGBoost. This meant days spent on feature engineering and weeks tuning hyperparameters. But heading into 2026, a fundamental shift is underway, ushered in by Tabular Foundation Models (TFMs). Leading this charge is TabICLv2, a new model from Soda-Inria that redefines how we handle tabular data.

The Rise of Foundation Models for Tables

TabICLv2 isn't just an upgrade; it's a new paradigm. It employs a "Universal Prior" and a new attention mechanism capable of handling millions of rows. The result? It outperforms heavily tuned industrial ensembles in a single forward pass, with no training or hyperparameter tuning on the target dataset.

From Weight Tuning to Prompting

Traditional machine learning trains a model by adjusting its weights on each specific dataset. Every new problem therefore means a new training run and, usually, a hyperparameter search. TabICLv2, however, is an In-Context Learner. It is pre-trained on vast synthetic datasets and learns from examples supplied in its prompt, not by being retrained.

Instead of updating model weights, you provide context: training rows with features and labels, and a test row with features. The model then uses a single forward pass to predict the outcome, approximating the Bayesian posterior predictive distribution.
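In the prior-fitted-network (PFN) framing that in-context tabular models build on, the model's output for a test row x_* given the context D is trained to match the posterior predictive distribution under the synthetic data prior. The notation below is ours, not necessarily the paper's: φ stands for the unknown data-generating mechanism and θ for the frozen pre-trained weights.

```latex
\begin{aligned}
p(y_\ast \mid x_\ast, \mathcal{D})
  &= \int p(y_\ast \mid x_\ast, \phi)\, p(\phi \mid \mathcal{D})\, \mathrm{d}\phi,
  \qquad \mathcal{D} = \{(x_i, y_i)\}_{i=1}^{n}, \\
q_\theta(y_\ast \mid x_\ast, \mathcal{D})
  &\approx p(y_\ast \mid x_\ast, \mathcal{D}),
  \qquad
  \theta = \arg\min_{\theta'} \,
  \mathbb{E}_{(\mathcal{D},\, x_\ast,\, y_\ast) \sim \text{prior}}
  \big[ -\log q_{\theta'}(y_\ast \mid x_\ast, \mathcal{D}) \big].
\end{aligned}
```

Pre-training fits θ once, by minimizing the loss on held-out rows of synthetic datasets drawn from the prior. At inference time θ stays fixed, and a new dataset only changes the conditioning set D.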

An Architecture Built for Scale

Transformer models typically struggle with large datasets due to quadratic attention costs. TabICLv2 tackles this with a three-stage modular pipeline:

- Column-Wise Embedding (TFcol): A Set Transformer analyzes each column independently, capturing its statistical properties. Repeated Feature Grouping prevents confusion between similar features.

- Row-Wise Interaction (TFrow): Learned [CLS] tokens aggregate column embeddings into compact row vectors, enabling efficient handling of hundreds of features.

- Dataset-Wise In-Context Learning (TFicl): In the final stage, test samples attend to the training samples to produce predictions.
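To make the data flow through the three stages concrete, here is a toy numpy sketch that tracks tensor shapes. Every component is a hypothetical stand-in, not TabICLv2's implementation. Per-column standardization plus a random projection replaces the Set Transformer, and random vectors replace the learned [CLS] tokens. The final stage is a single attention layer in which test rows attend only to training rows and the values are one-hot training labels. What carries over is the shape of the design: the dataset-wise attention runs over one compact vector per row rather than over every cell.

```python
import numpy as np

rng = np.random.default_rng(0)

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)   # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def attend(queries, keys, values):
    """Single-head scaled dot-product attention."""
    d = queries.shape[-1]
    scores = queries @ np.swapaxes(keys, -1, -2) / np.sqrt(d)
    return softmax(scores) @ values

def column_embed(X, d):
    """Stage 1 stand-in (TFcol): embed every cell using statistics of its own column only."""
    mu = X.mean(axis=0, keepdims=True)          # per-column statistics (all rows, for simplicity)
    sd = X.std(axis=0, keepdims=True) + 1e-8
    W = rng.normal(size=(1, d))                 # hypothetical random lift to d dimensions
    return ((X - mu) / sd)[..., None] * W       # (n_rows, n_cols, d)

def row_aggregate(E, n_cls=4):
    """Stage 2 stand-in (TFrow): [CLS] queries attend over each row's cell embeddings."""
    n_rows, _, d = E.shape
    cls = rng.normal(size=(n_cls, d))           # random here; learned in a real model
    out = attend(np.broadcast_to(cls, (n_rows, n_cls, d)), E, E)  # (n_rows, n_cls, d)
    return out.reshape(n_rows, n_cls * d)       # concatenate the [CLS] outputs per row

def icl_predict(R_train, y_train, R_test, n_classes):
    """Stage 3 stand-in (TFicl): test rows attend to training rows; values are one-hot labels."""
    onehot = np.eye(n_classes)[y_train]         # (n_train, n_classes)
    return attend(R_test, R_train, onehot)      # (n_test, n_classes), each row sums to 1

X = rng.normal(size=(120, 10))
y = (X[:, 0] > 0).astype(int)
E = column_embed(X, d=8)
R = row_aggregate(E)
probs = icl_predict(R[:100], y[:100], R[100:], n_classes=2)
print(E.shape, R.shape, probs.shape)   # (120, 10, 8) (120, 32) (20, 2)
```

Note how the number of columns disappears after stage 2: stage 3 only ever sees 32-dimensional row vectors, whether the table has 10 features or several hundred.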

QASSMax: Tackling Attention Fading

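The core innovation is Query-Aware Scalable Softmax (QASSMax). With a standard softmax, the weight that any single training row can receive shrinks as the number of rows grows, so attention spreads thinner and thinner; this is "attention fading." QASSMax counters it by dynamically rescaling queries with a logarithmic factor of the context size, so the model keeps its focus on the relevant rows even when there are millions of them.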
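The fading effect, and the log-scaling fix, can be seen numerically. QASSMax's exact formula is not reproduced here; the sketch below shows plain Scalable-Softmax (SSMax), which multiplies the attention logits by s·log(n), where n is the number of keys. Rescaling the query is equivalent, since logits are linear in the query. The query-aware part, which presumably makes the scale depend on the query, is omitted, and the setup (one relevant key with logit 1.0 among n - 1 distractors at 0.0) is a hypothetical toy.

```python
import numpy as np

def softmax(z):
    z = z - z.max()                  # numerical stability
    e = np.exp(z)
    return e / e.sum()

def ssmax(z, s=1.0):
    """Scalable softmax: scale the logits by s * log(n), where n is the number of keys."""
    n = z.shape[-1]
    return softmax(s * np.log(n) * z)

def weight_on_relevant_key(n, attn):
    # one "relevant" training row with logit 1.0, plus n - 1 distractors with logit 0.0
    z = np.zeros(n)
    z[0] = 1.0
    return attn(z)[0]

for n in (10, 1_000, 1_000_000):
    print(n, weight_on_relevant_key(n, softmax), weight_on_relevant_key(n, ssmax))
```

With the standard softmax, the relevant row's weight is e / (e + n - 1): about 0.23 at n = 10 and below 1e-5 at n = 1,000,000. With SSMax and s = 1 it is n / (2n - 1), which stays close to 0.5 at every scale.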

Learned from the "Hallucinated"

Remarkably, TabICLv2 was trained on zero real-world data. Its understanding of tabular data comes from millions of synthetic spreadsheets generated using Random Cauchy Graphs and eight distinct families of random functions, including MLPs, tree ensembles, and Gaussian processes. Because these generators cover a wide range of feature-target relationships, the model learns a general-purpose prior for tables rather than the quirks of any single dataset.
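To make the idea concrete, here is a minimal prior sampler in the general spirit of structural-causal-model priors. It draws a random directed acyclic graph, attaches a random function to each node, propagates noise through the graph, and reads off one node as the label. The DAG sampler, the three function families, and all parameter names are hypothetical; TabICLv2's Random Cauchy Graphs and its eight function families are not reproduced.

```python
import numpy as np

def make_node_fn(n_parents, rng):
    """Pick one of three hypothetical function families for a node."""
    kind = rng.integers(3)
    w = rng.normal(size=n_parents)
    if kind == 0:                        # tiny "MLP": tanh of a random linear map
        return lambda P: np.tanh(P @ w)
    if kind == 1:                        # "tree-like": one random axis-aligned split
        j, t = rng.integers(n_parents), rng.normal()
        return lambda P: np.where(P[:, j] > t, 1.0, -1.0)
    return lambda P: np.sin(P @ w)       # smooth, periodic relationship

def sample_table(n_rows, n_nodes=8, edge_prob=0.4, noise=0.1, seed=0):
    """Sample one synthetic classification table from a random DAG of random functions."""
    rng = np.random.default_rng(seed)
    # strictly upper-triangular adjacency: edges only go from lower to higher index, so no cycles
    A = np.triu(rng.random((n_nodes, n_nodes)) < edge_prob, k=1)
    values = np.zeros((n_rows, n_nodes))
    for j in range(n_nodes):             # index order is a valid topological order
        parents = np.flatnonzero(A[:, j])
        if parents.size == 0:
            values[:, j] = rng.normal(size=n_rows)       # root node: pure noise
        else:
            f = make_node_fn(parents.size, rng)
            values[:, j] = f(values[:, parents]) + noise * rng.normal(size=n_rows)
    target = values[:, -1]               # hypothetical choice: last node becomes the label
    X = values[:, :-1]                   # all other nodes become features
    y = (target > np.median(target)).astype(int)
    return X, y

X, y = sample_table(500, seed=42)
print(X.shape, y.mean())   # (500, 7) 0.5
```

Pre-training would call a sampler like this millions of times with fresh seeds and varied sizes, so the model never sees the same "dataset" twice.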

Beyond AdamW: The Muon Optimizer

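Instead of the long-standing Adam and AdamW optimizers, TabICLv2 is trained with Muon. Adam rescales each coordinate of the update independently; Muon instead treats each weight matrix as a whole and orthogonalizes its momentum-averaged update with Newton-Schulz iterations. Combined with Cautious Weight Decay, this allowed the team to achieve state-of-the-art results with a mere 24.5 GPU-days on H100s.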
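Muon's central step replaces an update matrix G = U S Vᵀ with (approximately) its orthogonal polar factor U Vᵀ, so every direction in the update gets the same magnitude. The sketch below uses the classic cubic Newton-Schulz iteration because it is easy to verify. Muon's reference implementation instead uses a tuned quintic polynomial with about five iterations and Nesterov momentum. The learning rate and momentum defaults here are illustrative, not from the paper, and Cautious Weight Decay is not shown.

```python
import numpy as np

def orthogonalize(G, steps=30):
    """Approximate the polar factor U @ Vt of G = U @ diag(S) @ Vt with the
    classic cubic Newton-Schulz iteration (Muon itself uses a tuned quintic)."""
    X = G / (np.linalg.norm(G) + 1e-7)   # Frobenius scaling puts every singular value in [0, 1]
    tall = X.shape[0] > X.shape[1]
    if tall:
        X = X.T                          # work with the wide orientation so X @ X.T stays small
    for _ in range(steps):
        X = 1.5 * X - 0.5 * (X @ X.T) @ X  # maps each singular value s to 1.5s - 0.5s^3, pushing it to 1
    return X.T if tall else X

def muon_step(W, grad, buf, lr=0.02, momentum=0.95):
    """One simplified Muon-style update for a single 2-D weight matrix."""
    buf = momentum * buf + grad          # momentum buffer (plain heavy-ball here)
    return W - lr * orthogonalize(buf), buf

rng = np.random.default_rng(0)
G = rng.normal(size=(8, 16))
O = orthogonalize(G)
print(np.allclose(O @ O.T, np.eye(8), atol=1e-6))   # True: rows are orthonormal
W, buf = muon_step(np.zeros((8, 16)), G, np.zeros((8, 16)))
```

Because the iteration only applies an odd polynomial to the singular values, the singular vectors of G are preserved. This is what makes Muon a matrix-level optimizer rather than a coordinate-wise one. In practice it is applied to 2-D hidden-layer weights, with other parameters typically left to AdamW.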

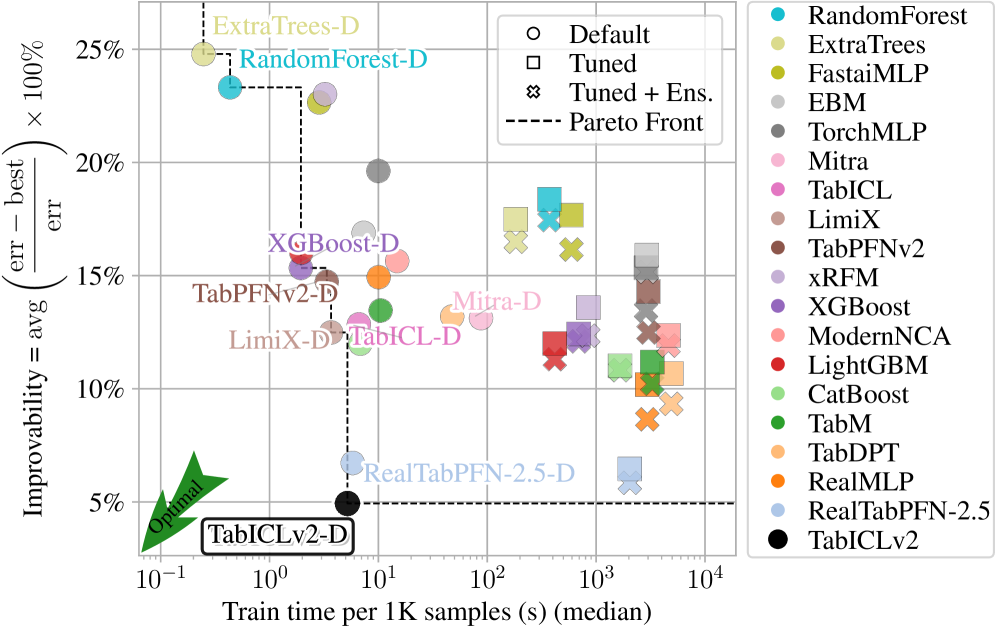

Dominating Benchmarks

On rigorous benchmarks like TabArena and TALENT, TabICLv2 didn't just compete; it dominated. It significantly outperformed tuned GBDTs and even the closed-weights leader, RealTabPFN-2.5, without any hyperparameter tuning.

| Capability | TabICLv2 (default) | RealTabPFN-2.5 (tuned) | GBDTs (tuned XGBoost/CatBoost) |
|---|---|---|---|
| Training time | Seconds (in-context) | Minutes to hours | Hours |
| Hyperparameter tuning | None required | Extensive | Heavy |
| Max row support | 1,000,000+ | Struggles above 100k | High |
| Speedup vs. RealTabPFN-2.5 | 10.6x faster | Baseline | Varies |

Handling Million-Row Datasets

Memory constraints are a major hurdle for large tabular datasets. TabICLv2's Hierarchical Offloading Strategy streams data from SSDs, which lets it process a table with 1M rows and 500 features using only 24 GB of CPU RAM and 50 GB of GPU memory.
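A back-of-the-envelope calculation shows why streaming matters. The raw 1M × 500 float32 table is only 2 GB. However, if every cell were embedded into a vector and all of those embeddings were held in memory at once, the total would far exceed 24 GB. The sketch below assumes a hypothetical 128-dimensional float32 embedding per cell, which gives 256 GB. It then shows the generic out-of-core pattern with `numpy.memmap`: the table stays on disk, and only one chunk of rows is resident in RAM at a time. This is not TabICLv2's Hierarchical Offloading Strategy, whose internals are not described here. The function names and chunk size are hypothetical, and the row mean stands in for real per-chunk work.

```python
import os
import tempfile
import numpy as np

def full_embedding_bytes(n_rows, n_cols, d_embed, bytes_per_value=4):
    """Bytes needed if every cell's d_embed-dimensional embedding were held in memory at once."""
    return n_rows * n_cols * d_embed * bytes_per_value

def stream_row_summaries(path, n_rows, n_cols, chunk_rows=10_000):
    """Read a float32 table from disk in row chunks; only one chunk is copied into RAM at a time."""
    table = np.memmap(path, dtype=np.float32, mode="r", shape=(n_rows, n_cols))
    out = np.empty(n_rows, dtype=np.float32)
    for start in range(0, n_rows, chunk_rows):
        chunk = np.array(table[start:start + chunk_rows])        # copy this slice from disk into RAM
        out[start:start + chunk.shape[0]] = chunk.mean(axis=1)   # stand-in for real per-row work
    return out

print(full_embedding_bytes(1_000_000, 500, 128) / 1e9)   # 256.0 (GB), with hypothetical d_embed = 128

n_rows, n_cols = 25_000, 50
data = np.random.default_rng(0).normal(size=(n_rows, n_cols)).astype(np.float32)
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "table.f32")
    data.tofile(path)                                     # raw float32 bytes, row-major
    summaries = stream_row_summaries(path, n_rows, n_cols)
```

A real system would add more tiers (for example, pinned host buffers feeding the GPU), but the principle is the same: peak memory is set by the chunk size, not by the table size.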

Probabilistic Insights

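Instead of a single point estimate, TabICLv2 predicts 999 quantiles for regression targets, which together approximate the full predictive distribution. From them you can read off medians, prediction intervals, and tail probabilities, giving precise uncertainty estimates and a clearer picture of risk.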
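Turning such an output into familiar summaries takes only a few lines. This sketch assumes the 999 quantiles sit at evenly spaced levels 0.001, 0.002, ..., 0.999; that level grid is an assumption, not something stated here. It does not call TabICLv2. A normal distribution stands in for a model prediction, and the `summarize` helper is hypothetical.

```python
from statistics import NormalDist

LEVELS = [i / 1000 for i in range(1, 1000)]   # assumed 999 quantile levels: 0.001 ... 0.999

def summarize(quantiles, threshold):
    """Turn 999 sorted predicted quantiles (one per level in LEVELS) into common summaries."""
    assert len(quantiles) == len(LEVELS)
    median = quantiles[499]                         # level 0.500
    interval_90 = (quantiles[49], quantiles[949])   # levels 0.050 and 0.950
    mean = sum(quantiles) / len(quantiles)          # averaging the quantile function approximates E[Y]
    p_exceed = sum(q > threshold for q in quantiles) / len(quantiles)  # approximates P(Y > threshold)
    return median, interval_90, mean, p_exceed

# stand-in for a model prediction: quantiles of a normal distribution with mean 10, std 2
pred = [NormalDist(mu=10, sigma=2).inv_cdf(p) for p in LEVELS]
median, (lo, hi), mean, p_exceed = summarize(pred, threshold=13)
print(median, lo, hi, mean, p_exceed)
```

For this stand-in, the median is 10, the 90% interval is about (6.71, 13.29), and the estimated P(Y > 13) is about 0.066, close to the exact 0.0668. None of these summaries requires a parametric assumption about the shape of the predicted distribution.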

The Era of Open Data Intelligence

With open-sourced weights and code, TabICLv2 breaks with the closed-weights approach of its strongest competitor and opens state-of-the-art tabular foundation models to inspection and reuse. It democratizes advanced data science, letting anyone get strong predictions from raw CSVs in seconds, without a tuning loop. The age of bespoke ML is over; the Universal Tabular Engine has arrived.