The race for the best AI coding assistant has often forced a difficult choice on developers: do you want a model that’s fast, or one that’s smart? Cognition, the company behind the Devin agent, claims its new SWE-1.5 model finally eliminates that tradeoff. In a blog post, the team announced that SWE-1.5, a "frontier-size model with hundreds of billions of parameters," achieves near state-of-the-art coding performance while setting a new bar for speed.

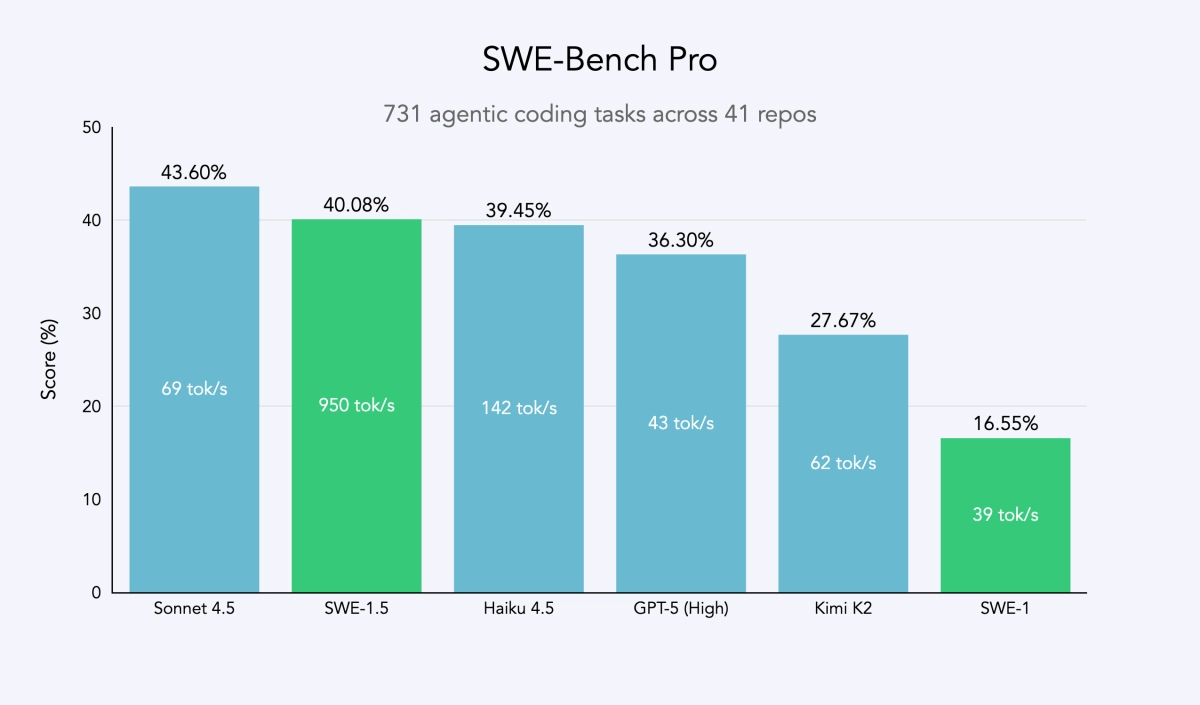

Partnering with inference provider Cerebras, the company says it can serve the SWE-1.5 model at up to 950 tokens per second. To put that in perspective, the company claims this is 13 times faster than Anthropic's Sonnet 4.5 and 6 times faster than Haiku 4.5. For a developer, that can be the difference between an AI completing a task in 20 seconds and completing it in under five, a margin the team argues is crucial for keeping developers in the "flow state."
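
To make the quoted ratios concrete, here is a rough back-of-the-envelope sketch. It derives the throughput implied for the comparison models from the article's figures and estimates pure generation time for a response of a given length. The 2,000-token response size is a hypothetical value chosen for illustration, not a figure from the announcement, and the calculation ignores time to first token, tool calls, and network latency, so it will not match end-to-end task times.

```python
# Figures quoted in the article.
SWE_1_5_TPS = 950.0        # "up to 950 tokens per second"
SPEEDUP_VS_SONNET = 13.0   # claimed speedup over Sonnet 4.5
SPEEDUP_VS_HAIKU = 6.0     # claimed speedup over Haiku 4.5

# Assumption for illustration only: size of one generated response.
HYPOTHETICAL_TOKENS = 2000


def implied_tps(fast_tps: float, speedup: float) -> float:
    """Throughput of the slower model implied by a claimed speedup."""
    return fast_tps / speedup


def generation_seconds(num_tokens: int, tps: float) -> float:
    """Time to stream num_tokens at a steady tokens-per-second rate."""
    return num_tokens / tps


sonnet_tps = implied_tps(SWE_1_5_TPS, SPEEDUP_VS_SONNET)
haiku_tps = implied_tps(SWE_1_5_TPS, SPEEDUP_VS_HAIKU)

for name, tps in [
    ("SWE-1.5", SWE_1_5_TPS),
    ("Haiku 4.5 (implied)", haiku_tps),
    ("Sonnet 4.5 (implied)", sonnet_tps),
]:
    seconds = generation_seconds(HYPOTHETICAL_TOKENS, tps)
    print(f"{name:22s} {tps:7.1f} tok/s  {seconds:5.1f} s")
```

Under these assumptions the implied rates come out to roughly 73 tokens per second for Sonnet 4.5 and roughly 158 for Haiku 4.5. Real agent runs interleave generation with tool execution, so the wall-clock gap on a full task is typically smaller than the raw throughput ratio suggests.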

More than just a model

The secret sauce, according to the team, isn’t just a better model but a reimagined stack. They argue that the model, the "agent harness" that orchestrates its actions, and the inference engine must be co-designed as a single, unified system. "Working on Devin, we often wished we could co-develop the model and the harness, and with this release we are finally able to do just that," the announcement states.

This holistic approach involved end-to-end reinforcement learning using a custom agent harness, rewriting core tools to eliminate bottlenecks, and training on NVIDIA's new GB200 NVL72 systems, making SWE-1.5 potentially the first public production model trained on the new hardware.

The team also took a novel approach to training data, moving beyond standard benchmarks like SWE-Bench, which they say have a "narrow task distribution." Instead, they created their own high-fidelity coding environments, along with evaluation rubrics designed by senior engineers to combat "AI slop": code that works but is verbose or uses anti-patterns. This focus on real-world tasks and code quality, combined with the raw speed of the underlying system, is what they believe sets SWE-1.5 apart.

While the company has stopped reporting SWE-Bench scores, it claims SWE-1.5 achieves "near-frontier performance" on the more advanced SWE-Bench Pro benchmark. The SWE-1.5 model is now available in the company's Windsurf product.