The fundamental bottleneck in building physically intelligent robots has long been data. Vision-Language Models (VLMs) are typically trained on vast troves of third-person video, which creates a viewpoint mismatch when they are applied to a humanoid robot operating with a head-mounted, first-person (egocentric) camera. Collecting enough high-quality, diverse robot data to close this gap is prohibitively expensive.

Now, a team of researchers has proposed a scalable solution: the PhysBrain model, an egocentric-aware embodied brain trained almost entirely on structured human video.

The core innovation is the Egocentric2Embodiment Translation Pipeline. This system takes raw human egocentric videos, sourced from large datasets such as Ego4D, BuildAI (factory work), and EgoDex (lab manipulation), and converts them into E2E-3M, a dataset of 3 million structured VQA (Visual Question Answering) instances. Crucially, the process doesn't just describe the video. It enforces schema-driven, multi-level supervision covering planning decomposition, interaction mechanics, and temporal consistency, so that the data is reliable enough for embodied learning.

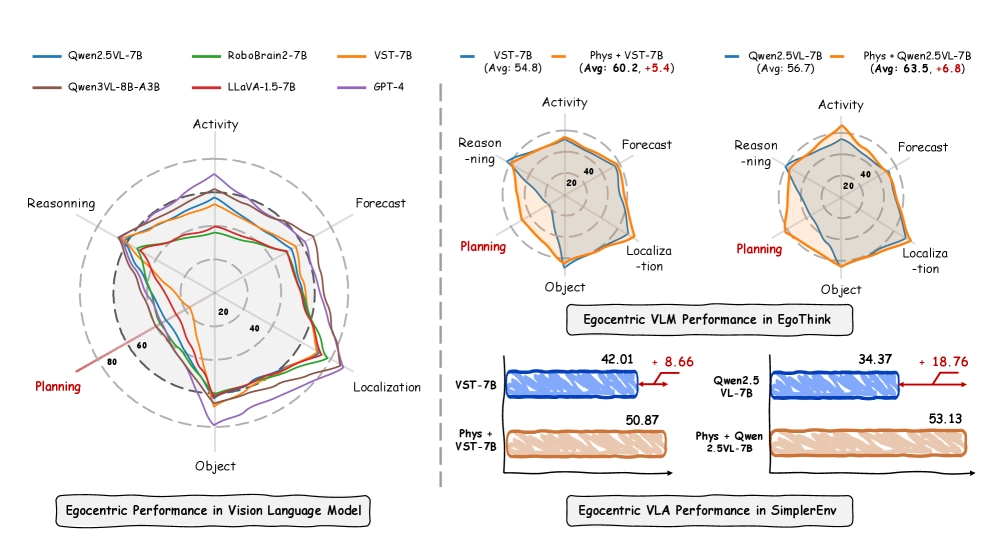
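
To make "schema-driven" concrete, here is a minimal Python sketch of what checking one structured VQA instance could look like. It is an illustration only, not the pipeline's actual format: the `VQAInstance` fields, the three level names (borrowed from the supervision areas listed above), and the specific checks are all assumptions.

```python
from dataclasses import dataclass

# Hypothetical level names, taken from the three supervision areas the
# article lists; the real E2E-3M schema may be organized differently.
LEVELS = {"planning", "interaction", "temporal"}


@dataclass(frozen=True)
class VQAInstance:
    """One question-answer pair tied to a span of an egocentric clip (assumed layout)."""
    clip_id: str
    start_s: float
    end_s: float
    level: str
    question: str
    answer: str


def schema_errors(item: VQAInstance) -> list[str]:
    """Return a list of schema violations; an empty list means the item passes."""
    errors = []
    if item.level not in LEVELS:
        errors.append(f"unknown level: {item.level!r}")
    if not item.question.strip().endswith("?"):
        errors.append("question must end with '?'")
    if not item.answer.strip():
        errors.append("answer is empty")
    if not (0 <= item.start_s < item.end_s):
        errors.append("invalid time span")
    return errors


def filter_valid(items):
    """Keep only the instances that satisfy every schema check."""
    return [it for it in items if not schema_errors(it)]


good = VQAInstance("clip0", 0.0, 2.5, "planning",
                   "What should the hand do next?", "Grasp the mug handle.")
bad = VQAInstance("clip0", 3.0, 1.0, "mood", "Describe", "")

print(schema_errors(good))
print(schema_errors(bad))
print(len(filter_valid([good, bad])))
```

The point of rejecting malformed items up front, rather than trusting free-form captions, is that every surviving instance has a known supervision level and a well-defined time span that downstream training can rely on.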

The resulting PhysBrain model, built on backbones like Qwen2.5-VL-7B, shows substantial gains in first-person understanding. On the EgoThink benchmark, which measures egocentric reasoning across six dimensions, its most pronounced improvement over standard VLMs came in Planning.

The New Scaling Law for Embodiment

The real test for any embodied brain is whether those cognitive gains translate into physical action. The researchers demonstrated that PhysBrain provides a superior egocentric initialization for downstream Vision-Language-Action (VLA) fine-tuning.

By integrating PhysBrain into two standard VLA architectures, the GR00T-style PhysGR00T and the Pi-style PhysPI, the team achieved significantly higher success rates in the SimplerEnv robot simulation environment. The PhysBrain-enhanced systems reached a 53.9% success rate, evidence that better egocentric planning learned from human videos carries over to downstream robot control.

This research fundamentally challenges the assumption that advancing VLA systems in egocentric settings requires extensive, costly robot data. Human first-person videos are naturally scalable, capture diverse real-world interaction contexts, and, when structured correctly, provide the necessary supervision for physical intelligence.

The PhysBrain approach suggests a new scaling law for robotics: instead of waiting for massive, multi-institutional robot data collection efforts, the industry can leverage the existing wealth of human interaction video. This strategy complements robot data, which remains vital for physical grounding, but offers a significantly faster and cheaper path to generalized, robust embodied brains that can handle the rapid viewpoint changes and complex contact reasoning inherent in real-world manipulation tasks. The E2E-3M dataset, bridging human experience and robot cognition, is positioned as a foundational resource for future egocentric VLA development.