OpenAI just dropped a pair of open-source models that could fundamentally change how developers handle content moderation. The new models, dubbed gpt-oss-safeguard, aren’t your typical safety classifiers. Instead of being a black box trained on a mountain of pre-labeled data, they’re designed to reason based on a custom safety policy you write yourself.

Available on Hugging Face in 120-billion and 20-billion parameter sizes, gpt-oss-safeguard operates on a simple but powerful premise. A developer feeds the model two things at once: the content they want to check (a user comment, a full chat log, etc.) and a specific policy (e.g., “No posts that reveal spoilers for the latest season of *House of the Dragon*”). The model then uses a chain-of-thought process to reason about whether the content violates that specific rule, outputting not just a yes/no decision but also its rationale.
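As a rough sketch of what "policy plus content" can look like in practice, the snippet below builds a chat-style request with the policy as the system message and the content to classify as the user message, then parses a reply. The message layout follows the common chat-completions convention. The JSON reply shape (`violation`, `rationale`), the helper names, and the sample reply are assumptions for illustration, not the model's documented output format. No model is actually called here.

```python
import json
from dataclasses import dataclass

# Hypothetical policy text; in practice this is whatever rule set you write.
SPOILER_POLICY = """\
Classify whether the content reveals plot spoilers for the latest season of House of the Dragon.
Return JSON: {"violation": 0 or 1, "rationale": "<short explanation>"}
"""


@dataclass
class Verdict:
    violation: bool
    rationale: str


def build_messages(policy: str, content: str) -> list[dict[str, str]]:
    """Pair the developer-written policy with the content to check.

    Assumption: the policy goes in the system message and the content in the
    user message, using the usual chat-completions message format.
    """
    return [
        {"role": "system", "content": policy},
        {"role": "user", "content": content},
    ]


def parse_verdict(reply: str) -> Verdict:
    """Parse the (assumed) JSON reply into a yes/no decision plus its rationale."""
    data = json.loads(reply)
    return Verdict(violation=bool(data["violation"]), rationale=str(data["rationale"]))


if __name__ == "__main__":
    messages = build_messages(SPOILER_POLICY, "Can't believe who dies in episode 8!")
    # A made-up reply standing in for real model output.
    fake_reply = '{"violation": 1, "rationale": "Hints at a character death in a specific episode."}'
    print(messages[0]["role"], messages[1]["role"])
    print(parse_verdict(fake_reply))
```

Because the policy is just a string supplied at inference time, tightening or loosening the rule means editing `SPOILER_POLICY`, not retraining anything.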

Traditional tools, like OpenAI’s own Moderation API, are trained to infer what’s bad based on patterns in data they’ve already seen. If you want to change the rules, you typically have to retrain the entire model, a costly and time-consuming process. With gpt-oss-safeguard, developers can iterate on their policies on the fly, simply by editing the text prompt they provide at inference.

The potential applications are broad. A video game forum could use it to specifically target and classify discussions about cheating. A product review site could write a nuanced policy to flag reviews that seem astroturfed or fake. It’s a move from a one-size-fits-all moderation hammer to a set of surgical, user-defined tools. According to OpenAI, this reasoning-based approach is particularly effective for dealing with emerging harms, highly nuanced domains, or situations where a developer lacks the massive datasets needed to train a traditional classifier.
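Since a policy for any of these use cases is ordinary text, it can be kept in version control and templated like any other config. The sketch below shows one hypothetical way to structure the game-forum cheating policy (a definition, what counts as a violation, what does not) and render it into the string handed to the model. The `Policy` class, its section layout, and the example rules are assumptions for illustration; OpenAI's own guidance on policy formatting may differ.

```python
from dataclasses import dataclass, field


@dataclass
class Policy:
    """A hypothetical structured policy that renders to plain prompt text."""

    name: str
    definition: str
    violating: list[str] = field(default_factory=list)
    allowed: list[str] = field(default_factory=list)

    def render(self) -> str:
        # Assemble a markdown-ish policy document: header, definition, then
        # the violating and non-violating examples as bullet lists.
        lines = [f"# Policy: {self.name}", "", self.definition, "", "## Violates (label 1)"]
        lines += [f"- {item}" for item in self.violating]
        lines += ["", "## Does not violate (label 0)"]
        lines += [f"- {item}" for item in self.allowed]
        return "\n".join(lines)


CHEATING_POLICY = Policy(
    name="Game cheating",
    definition="Content that helps players gain an unfair advantage in online play.",
    violating=[
        "Links to or instructions for aimbots, wallhacks, or other cheat software",
        "Offers to sell boosted accounts or exploit services",
    ],
    allowed=[
        "Reporting a suspected cheater to moderators",
        "Discussing anti-cheat systems or ban waves in general terms",
    ],
)

if __name__ == "__main__":
    print(CHEATING_POLICY.render())
```

A policy for astroturfed reviews would simply be another `Policy` instance; the code that sends the rendered text to the model stays the same.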

A peek inside OpenAI's safety playbook

The gpt-oss-safeguard models are open-weight versions of an internal tool called “Safety Reasoner” that has become a core component of OpenAI’s own safety stack. The company uses this same policy-reasoning approach to police its most advanced systems, including its video generator Sora 2 and GPT-5.

OpenAI reveals that in some of its recent product launches, safety reasoning of this kind has accounted for as much as 16 percent of total compute.

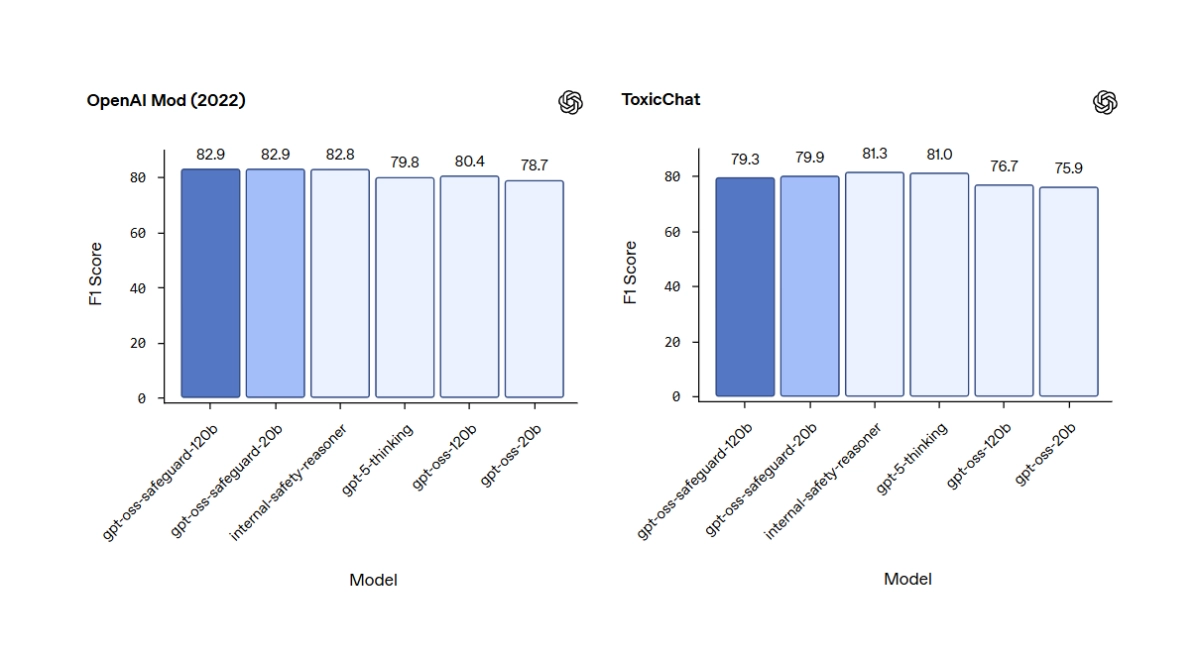

In internal tests, both the 120b and 20b versions of gpt-oss-safeguard outperformed the base gpt-oss models and, remarkably, even edged out the far more capable general-purpose `gpt-5-thinking` on a multi-policy accuracy benchmark. On public datasets like ToxicChat, they remained competitive with OpenAI’s proprietary models.

The company notes two limitations. For highly complex risks where tens of thousands of high-quality labeled examples are available, a traditionally trained classifier may still offer superior performance. Reasoning is also slower and more compute-intensive than running a conventional classifier, which makes it hard to apply to every piece of content at scale. Internally, OpenAI addresses the cost problem by using smaller, faster models to first flag potentially problematic content, which is then passed to the more powerful Safety Reasoner for a detailed analysis.
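The two-stage setup described above can be sketched as a simple cascade: a cheap first-pass scorer decides what gets escalated, and only flagged items reach the slower policy-reasoning model. Both stages here are stand-in callables (`fast_score` and `reason` are hypothetical names), and the 0.5 threshold is an arbitrary assumption; OpenAI has not published its internal routing logic.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Decision:
    escalated: bool           # True if the item was sent to the reasoning model
    violation: bool           # final label
    rationale: Optional[str]  # only present when the reasoning model ran


def moderate(
    content: str,
    fast_score: Callable[[str], float],
    reason: Callable[[str], tuple[bool, str]],
    threshold: float = 0.5,
) -> Decision:
    """Run a cheap classifier first; escalate only likely-problematic content."""
    score = fast_score(content)
    if score < threshold:
        # Treated as clean without spending compute on the slower reasoner.
        return Decision(escalated=False, violation=False, rationale=None)
    violation, rationale = reason(content)
    return Decision(escalated=True, violation=violation, rationale=rationale)


if __name__ == "__main__":
    # Toy stand-ins for illustration only; real stages would be model calls.
    def keyword_score(text: str) -> float:
        return 1.0 if "aimbot" in text.lower() else 0.0

    def stub_reasoner(text: str) -> tuple[bool, str]:
        return True, "Offers cheat software, which the policy prohibits."

    for post in ["GG, nice match", "DM me for a cheap aimbot"]:
        print(post, "->", moderate(post, keyword_score, stub_reasoner))
```

The trade-off is that anything the fast stage misses never reaches the reasoner, so the threshold sets the balance between compute cost and recall.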

The release was developed in collaboration with ROOST, a non-profit focused on open-source AI safety, which is launching a model community to explore best practices for these kinds of tools. This community-focused launch, complete with a permissive Apache 2.0 license, signals a clear intent to make sophisticated, policy-driven safety more accessible.