The promise of 4D world modeling—creating dynamic, editable digital twins of real-world scenes—has long been hampered by a fundamental bottleneck: data. Building high-fidelity 4D models typically requires specialized, multi-view camera setups or cumbersome, offline pre-processing stages, severely limiting the ability of these systems to generalize beyond curated lab environments.

A new paper from researchers at CASIA and CreateAI, titled "NeoVerse: Enhancing 4D World Model with in-the-wild Monocular Videos," proposes to remove that bottleneck by changing the training data itself. NeoVerse is a versatile 4D world model designed to leverage the cheapest and most abundant data source available: in-the-wild monocular videos.

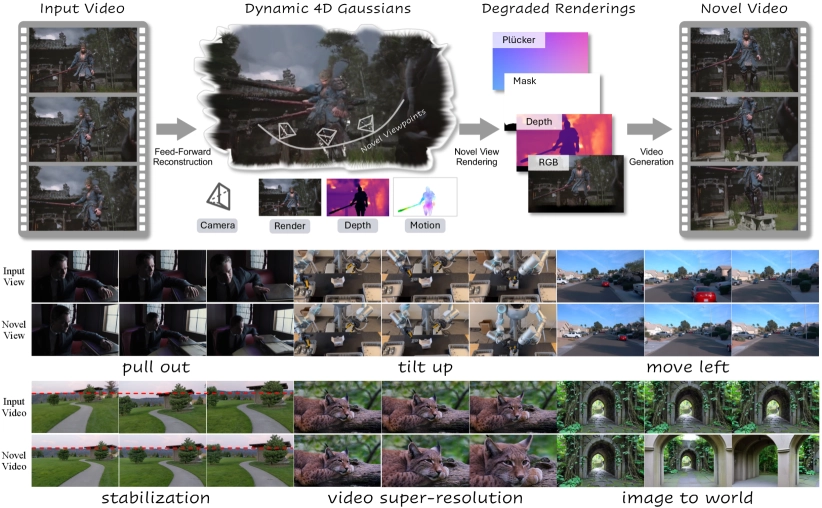

The core philosophy is scalability. Instead of relying on expensive multi-view data or heavy offline depth estimation, NeoVerse is built around a pose-free, feed-forward 4D Gaussian Splatting (4DGS) reconstruction model. This design allows the system to efficiently reconstruct dynamic scenes from sparse key frames in an online manner, making the entire pipeline scalable enough to train on over one million diverse video clips.

This efficiency also shows up at inference time. While existing reconstruction-generation hybrid methods like TrajectoryCrafter require over 150 seconds for inference on an 81-frame video, NeoVerse completes the task in as little as 20 seconds, according to the researchers’ runtime evaluations on an A800 GPU.

The Scalability Breakthrough

The key technical challenge in using monocular video for 4D world modeling is generating the necessary training pairs. The generation model needs to learn how to turn low-quality, geometrically degraded renderings (simulating novel viewpoints) into high-quality, ground-truth video.

NeoVerse addresses this with two major innovations. First, it introduces bidirectional motion modeling within its 4DGS framework. This allows the model to accurately interpolate Gaussian primitives between sparse key frames, maintaining temporal coherence without needing to process every frame during the computationally intensive reconstruction phase.
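To make the interpolation idea concrete, here is a minimal Python sketch. It is not the paper's implementation: it assumes each key-frame Gaussian stores one forward and one backward linear velocity, and it moves only the Gaussian's center. The names `KeyframeGaussian`, `vel_forward`, `vel_backward`, and `center_at` are hypothetical and chosen for illustration.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class KeyframeGaussian:
    """One Gaussian primitive anchored at a key frame (illustrative only)."""
    center: Vec3        # 3D position at the key frame
    time: float         # timestamp of the key frame
    vel_forward: Vec3   # assumed displacement per unit time toward later frames
    vel_backward: Vec3  # assumed displacement per unit time toward earlier frames


def center_at(g: KeyframeGaussian, t: float) -> Vec3:
    """Move the Gaussian's center from its key frame to query time t.

    Later times use the forward velocity, earlier times use the backward
    velocity, so frames on either side of a key frame can be filled in
    without running reconstruction on them.
    """
    dt = t - g.time
    vel = g.vel_forward if dt >= 0 else g.vel_backward
    step = abs(dt)
    return tuple(c + v * step for c, v in zip(g.center, vel))


g = KeyframeGaussian(
    center=(0.0, 0.0, 0.0),
    time=1.0,
    vel_forward=(1.0, 0.0, 0.0),
    vel_backward=(0.0, 2.0, 0.0),
)
after = center_at(g, 1.5)   # half a time unit after the key frame
before = center_at(g, 0.5)  # half a time unit before the key frame
```

Keeping two separate velocities lets motion before and after a key frame differ, which a single velocity could not capture. A full system would also need to handle rotation, opacity, and other Gaussian attributes over time.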

Second, the team developed efficient online monocular degradation simulations. These techniques, including Visibility-based Gaussian Culling (to simulate occlusion patterns) and the Average Geometry Filter (to simulate flying-edge pixels and depth distortions), generate the necessary degraded rendering conditions on the fly. Because the generation model trains on renderings that carry the artifacts inherent in imperfect reconstruction, it learns to suppress them, and its outputs show fewer artifacts than those of competing methods.
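The flying-edge effect can be illustrated with a toy example. The Python sketch below assumes that averaging a depth map over a small window is a reasonable stand-in for this kind of degradation. It is not the paper's Average Geometry Filter, and the function name `average_filter_depth` and its `radius` parameter are hypothetical.

```python
from typing import List


def average_filter_depth(depth: List[List[float]], radius: int = 1) -> List[List[float]]:
    """Box-average a depth map (illustrative stand-in, not the paper's filter).

    Near a depth discontinuity the average falls between foreground and
    background, so points lifted from those pixels float in empty space,
    much like the flying-edge pixels seen in imperfect reconstructions.
    """
    h, w = len(depth), len(depth[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, count = 0.0, 0
            # Average over the window, clipped at the image border.
            for yy in range(max(0, y - radius), min(h, y + radius + 1)):
                for xx in range(max(0, x - radius), min(w, x + radius + 1)):
                    total += depth[yy][xx]
                    count += 1
            out[y][x] = total / count
    return out


# One image row: a near object (depth 1) in front of a far wall (depth 5).
row = [[1.0, 1.0, 5.0, 5.0]]
blurred = average_filter_depth(row, radius=1)
```

In this toy row, the two pixels next to the edge end up with depths strictly between 1 and 5, while the outer pixels keep their original values. Rendering such smeared geometry from a new viewpoint gives the kind of degraded input a generation model could be trained to clean up.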

The result is a model that achieves state-of-the-art performance on both reconstruction and novel-trajectory video generation benchmarks. Crucially, NeoVerse demonstrates superior trajectory controllability, a feature often sacrificed by purely generation-based models like ReCamMaster, while maintaining high aesthetic quality, even for challenging, dynamic human activities.

This versatility means NeoVerse can handle a wide range of downstream applications, including video editing, stabilization, super-resolution, and precise simulation. By democratizing the data source for 4D world models, NeoVerse represents a significant step toward making high-fidelity digital content creation and embodied intelligence systems accessible outside of specialized labs. The researchers plan to make the source code publicly available.