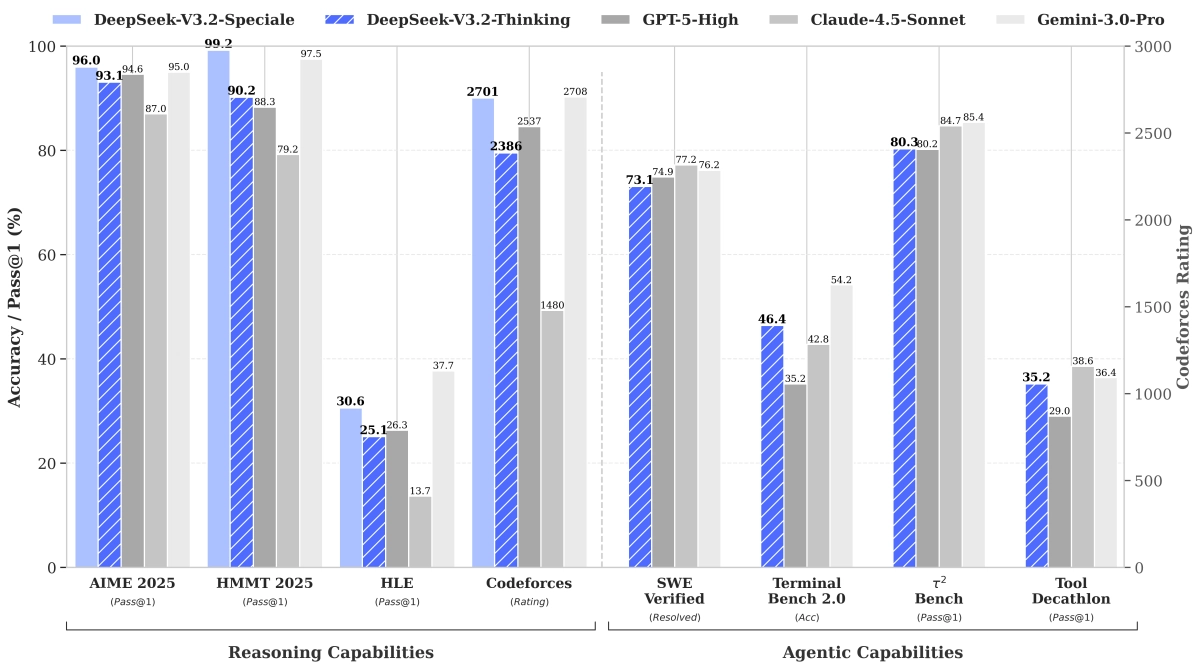

The DeepSeek V3.2 release marks a significant push in the open-source LLM race. Rather than only chasing raw benchmark scores, it specifically targets agentic capabilities and complex reasoning. DeepSeek AI has dropped two variants: the general-purpose V3.2, claimed to reach GPT-5-level performance, and the more powerful V3.2-Speciale, which the company positions against Gemini 3.0 Pro and showcases with gold-medal results in simulated 2025 Olympiads (IMO, IOI).

The technical report highlights three core innovations, and two stand out for agent builders. First, DeepSeek Sparse Attention (DSA) tackles long-context efficiency by cutting attention cost from quadratic to near-linear in sequence length, a crucial step for real-world agent deployment, where context windows balloon. Second, a large, synthetically generated agent training dataset (1,800 environments and 85,000 instructions) underpins the new focus on tool use.
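To make the quadratic versus near-linear claim concrete, the sketch below counts query-key score computations for causal dense attention and for a sparse scheme in which each query attends to at most `k` earlier tokens. The budget `k = 2048` and the function names are illustrative assumptions, not figures from this article, and the count ignores whatever it costs to select those `k` tokens in the first place.

```python
def dense_pairs(seq_len: int) -> int:
    """Query-key pairs scored by causal dense attention: 1 + 2 + ... + seq_len."""
    return seq_len * (seq_len + 1) // 2


def sparse_pairs(seq_len: int, k: int) -> int:
    """Query-key pairs when each query attends to at most k tokens."""
    if seq_len <= k:
        return dense_pairs(seq_len)
    # The first k queries see fewer than k tokens; every later query is capped at k.
    return dense_pairs(k) + (seq_len - k) * k


if __name__ == "__main__":
    K = 2048  # hypothetical per-query token budget (assumption)
    for n in (4_096, 32_768, 131_072):
        d, s = dense_pairs(n), sparse_pairs(n, K)
        print(f"{n:>7} tokens: dense={d:,} sparse={s:,} ratio={d / s:.1f}x")
```

Once the sequence is longer than `k`, each additional token adds a fixed `k` pairs instead of a growing number, which is why the gap widens as the context gets longer.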

The most interesting development for developers is "Thinking in Tool-Use": the model is explicitly trained to reason before and during tool execution, which moves it beyond simple function calling toward more robust, multi-step agent workflows. The standard V3.2 model is available on the web and through the API, and it keeps the usage pattern of its predecessor, V3.2-Exp.
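The idea of reasoning before and during tool execution can be pictured as a loop in which every step carries a thought alongside either a tool call or a final answer. The sketch below is a toy illustration only: `scripted_model`, the `TOOLS` registry, and the message fields are hypothetical stand-ins, not DeepSeek's API or chat format.

```python
from typing import Callable

# Hypothetical tool registry; a real agent would expose search, code execution, etc.
TOOLS: dict[str, Callable[[str], str]] = {
    "add": lambda args: str(sum(int(x) for x in args.split(","))),
}


def scripted_model(history: list[dict]) -> dict:
    """Stand-in for an LLM call: returns a thought plus a tool call or a final answer."""
    observations = [m for m in history if m["role"] == "tool"]
    if not observations:
        return {"thought": "I need the sum before answering.",
                "tool": "add", "args": "2,3"}
    return {"thought": "The tool returned the sum, so I can answer.",
            "answer": f"The total is {observations[-1]['content']}."}


def run_agent(question: str, model=scripted_model,
              max_steps: int = 5) -> tuple[str, list[dict]]:
    """Alternate between model steps and tool results until an answer appears."""
    history = [{"role": "user", "content": question}]
    for _ in range(max_steps):
        step = model(history)
        # Keep the reasoning in the trajectory so later steps can build on it.
        history.append({"role": "assistant", "thought": step["thought"],
                        "content": step.get("answer", "")})
        if "answer" in step:
            return step["answer"], history
        result = TOOLS[step["tool"]](step["args"])
        history.append({"role": "tool", "content": result})
    raise RuntimeError("agent did not finish within max_steps")
```

Calling `run_agent("What is 2 + 3?")` walks through one tool call and then an answer. The point of the design is that the thought attached to each step stays in the history, so the model can revise its plan after seeing a tool result rather than committing to a single function call up front.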

The top-tier reasoning model, V3.2-Speciale, is API-only for now, likely because maxed-out reasoning consumes more tokens. DeepSeek is transparent about this trade-off: power comes at a cost. They’ve also published the final submissions from the simulated 2025 Olympiads for community verification, a bold move to back their reasoning claims outside standard benchmarks.

The API endpoint for the Speciale variant is temporary and expires in December 2025, which suggests a controlled research preview rather than a permanent production offering. The chat template has also been updated to accommodate the new thinking and tool-use structure, so developers need to adapt their encoding scripts, moving from standard Jinja templates to the custom Python encoding tools DeepSeek provides.
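Because the preview is time-limited, client code that depends on it may want a fallback rather than a hard failure once the endpoint disappears. A minimal sketch of that pattern follows; the model names and the cutoff date are placeholders chosen for illustration, so check the provider's documentation for the real values.

```python
from datetime import datetime, timezone


def pick_model(now: datetime, preview_expires: datetime,
               preview: str, fallback: str) -> str:
    """Return the preview model while it is still served, otherwise the fallback."""
    return preview if now < preview_expires else fallback


if __name__ == "__main__":
    # Placeholder cutoff and model names (assumptions, not official identifiers).
    cutoff = datetime(2025, 12, 15, tzinfo=timezone.utc)
    model = pick_model(datetime.now(timezone.utc), cutoff,
                       preview="speciale-preview", fallback="general-model")
    print(model)
```

Passing the current time in as an argument, instead of reading the clock inside the function, keeps the routing decision easy to test.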

What this means for the industry is clear: open models are now directly challenging proprietary leaders on the complex, multi-step reasoning tasks that autonomous agents depend on. V3.2 aims to be the efficient daily driver, while V3.2-Speciale is a statement piece, demonstrating that with enough compute and architectural changes like DSA, open models can reach frontier-level performance in specialized domains like competitive programming. The focus on synthesizing agentic training data is perhaps the most replicable takeaway for other labs looking to build truly capable AI assistants.

The Agentic Leap: Reasoning Meets Tool Use

DeepSeek’s strategy with V3.2 appears to be a direct pivot toward building reliable AI agents. DSA addresses the computational bottleneck that plagues long-context reasoning, making complex, multi-step tasks feasible without crippling latency or cost. By explicitly training the model to "think" within tool-use trajectories, DeepSeek is trying to fix the brittleness of current agent frameworks, where reasoning often breaks down when the model interacts with external APIs or code execution environments. Releasing the model weights under the MIT license suggests a commitment to pushing the entire open ecosystem forward, even if the bleeding-edge Speciale variant is currently gated behind a temporary API. The release confirms that reasoning parity with proprietary models, not simple instruction following, is the new battleground.