The latest demonstration from Anthropic reveals a significant leap in large language model capabilities, moving beyond mere task execution to autonomous tool creation. The video showcases a user interacting with Anthropic's Claude, highlighting its newly introduced "skill-creator Skill" that enables the AI to build custom functionalities on demand. This is not merely about using pre-defined tools; it’s about Claude acting as a co-developer, understanding intent, asking clarifying questions, and then generating a functional, executable skill from scratch.

The core premise of the "skill-creator Skill" is to democratize complex automation, lowering the barrier for users to develop sophisticated, bespoke AI functionalities. The process begins with a simple, high-level request, as seen when the user prompts, "Hey Claude, please help me create an image editor skill." This natural language input triggers Claude's "skill-creator" mechanism, which takes ownership of the development process. Claude's initial response, "I'll help you create an image editor skill! Let me first check the skill creator documentation to understand best practices for creating effective skills," illustrates a foundational shift. The AI does not proceed blindly; it first consults the skill-creator documentation for best practices, much as a diligent human developer would.

Following this preparatory step, Claude engages in a crucial phase of requirements gathering, asking clarifying questions to refine the user's initial broad request. It presents a range of potential image editing functionalities, such as "Basic edits: rotate, crop, resize, flip," and then solicits specific use cases: "Can you give me some examples of how you'd want to use this skill?" This interactive dialogue is paramount, ensuring the generated skill aligns precisely with user needs, preventing misinterpretations common in less sophisticated AI interactions. The user's concise reply, "Let's support standard rotations and cropping to the center of the image for now," provides the necessary scope for Claude to proceed.

This conversational approach to development represents a potent new paradigm. Instead of navigating complex APIs or writing lines of code in an integrated development environment, users simply converse with Claude. The AI then translates these natural language instructions into a structured, executable program. The video highlights Claude’s systematic approach: it first initializes the skill structure, then creates a reusable Python script (`edit_image.py`) to handle the specified image operations. This step is critical, demonstrating Claude’s ability not just to understand logic but to synthesize actual code that implements it.
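
The script itself is not shown in full, so the following is only a minimal sketch of what a helper for "standard rotations and cropping to the center" might look like. The function names, the set of allowed angles, and the use of Pillow's `Image.crop` and `Image.rotate` are assumptions made for illustration, not the code Claude generated in the video.

```python
"""Hypothetical sketch of an image-editing helper; not the script from the video."""
from typing import Optional, Tuple

# Assumed meaning of "standard rotations": quarter and half turns.
STANDARD_ROTATIONS = (90, 180, 270)

Box = Tuple[int, int, int, int]


def center_box(width: int, height: int, crop_w: int, crop_h: int) -> Box:
    """Return the (left, upper, right, lower) box of a centered crop."""
    if not (0 < crop_w <= width and 0 < crop_h <= height):
        raise ValueError("crop size must fit inside the image")
    left = (width - crop_w) // 2
    upper = (height - crop_h) // 2
    return (left, upper, left + crop_w, upper + crop_h)


def edit_image(
    src: str,
    dst: str,
    rotate: Optional[int] = None,
    crop_size: Optional[Tuple[int, int]] = None,
) -> None:
    """Center-crop and/or rotate the image at src, then save it to dst."""
    if rotate is not None and rotate not in STANDARD_ROTATIONS:
        raise ValueError(f"rotation must be one of {STANDARD_ROTATIONS}")

    # Imported lazily so the geometry helper works without Pillow installed.
    from PIL import Image  # requires: pip install Pillow

    with Image.open(src) as img:
        if crop_size is not None:
            img = img.crop(center_box(img.width, img.height, *crop_size))
        if rotate is not None:
            # Pillow rotates counterclockwise; expand=True resizes the canvas
            # so that 90 and 270 degree turns are not clipped.
            img = img.rotate(rotate, expand=True)
        img.save(dst)
```

A call such as `edit_image("dog.jpg", "dog_edited.jpg", rotate=180, crop_size=(667, 1000))` would crop first and rotate second; the file names here are placeholders.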

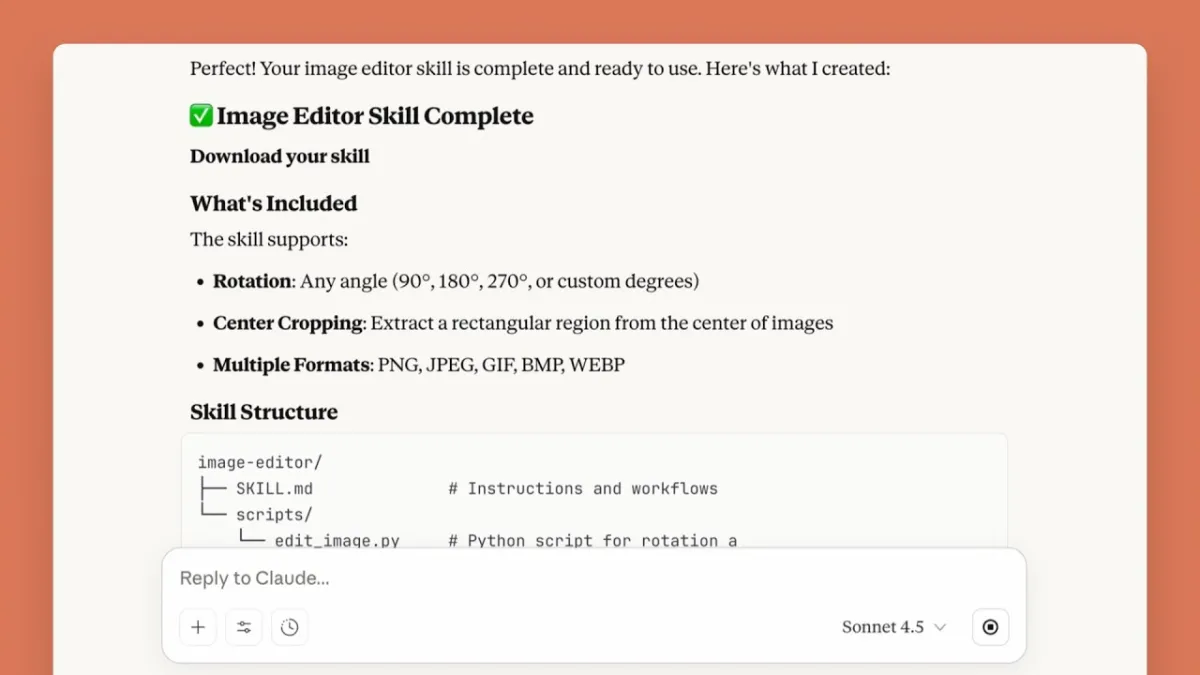

The AI’s development workflow includes essential software engineering practices. After scripting, Claude removes unnecessary example files, updates the `SKILL.md` documentation, and, critically, validates the newly created skill. The pronouncement, "Excellent! The skill passes validation," underscores an automated quality assurance layer within the development cycle. Finally, Claude packages the entire image-editor skill into a distributable zip file, presenting a complete, ready-to-use software artifact. This end-to-end capability transforms Claude into a formidable software engineering assistant, capable of handling everything from requirements gathering to packaging for specific functionalities.
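
The video does not show what the validation step checks or how the archive is built, so the sketch below is an illustration only. It assumes a skill is a folder containing a `SKILL.md` that opens with a `---` front matter block declaring `name` and `description`; the `validate_skill` and `package_skill` helpers are hypothetical names, and only the standard library's `pathlib` and `zipfile` modules are used.

```python
"""Hypothetical validate-then-package step for a skill folder."""
import zipfile
from pathlib import Path

# Assumed required front matter fields; the real validator may check more.
REQUIRED_FIELDS = ("name", "description")


def validate_skill(skill_dir: Path) -> list:
    """Return a list of problems found; an empty list means the checks passed."""
    manifest = skill_dir / "SKILL.md"
    if not manifest.is_file():
        return ["SKILL.md is missing"]
    lines = [line.rstrip() for line in manifest.read_text(encoding="utf-8").splitlines()]
    if not lines or lines[0] != "---":
        return ["SKILL.md does not start with a '---' front matter block"]
    try:
        end = lines.index("---", 1)  # closing delimiter of the front matter
    except ValueError:
        return ["front matter block is never closed"]
    keys = {line.split(":", 1)[0].strip() for line in lines[1:end] if ":" in line}
    return [f"missing front matter field: {field}"
            for field in REQUIRED_FIELDS if field not in keys]


def package_skill(skill_dir: Path, out_zip: Path) -> Path:
    """Zip the skill folder, refusing to package a skill that fails validation."""
    problems = validate_skill(skill_dir)
    if problems:
        raise ValueError("; ".join(problems))
    with zipfile.ZipFile(out_zip, "w", zipfile.ZIP_DEFLATED) as archive:
        for path in sorted(skill_dir.rglob("*")):
            if path.is_file():
                # Keep the top-level folder name inside the archive.
                archive.write(path, path.relative_to(skill_dir.parent))
    return out_zip
```

Running validation before packaging mirrors the order described above: a skill that fails the checks never reaches the zip step.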

The true power of this feature becomes evident when the newly created skill is put to use. The user uploads an image of a dog and issues a complex, multi-step command: "Hey! Let's use that awesome new image-editor skill you just created to crop this image 50% and rotate it 180 degrees." Claude swiftly processes the request, intelligently recognizing the need to install the Pillow library for image manipulation, calculating precise cropping dimensions, and executing both operations sequentially. The final output, an upside-down, cropped image of the dog, is accompanied by Claude's confident confirmation: "Perfect! I've successfully processed your image. I cropped it to 50% of its original size (from 1334x2000 to 667x1000 pixels) and then rotated it 180 degrees."
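
The reported dimensions can be checked with a few lines of arithmetic. The sketch below assumes that "50%" means scaling each side by 0.5, which is an inference from the numbers Claude reports rather than something the video states; the helper names are hypothetical.

```python
def cropped_size(width: int, height: int, fraction: float) -> tuple:
    """Size of a crop that keeps `fraction` of each side."""
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")
    return (round(width * fraction), round(height * fraction))


def rotated_size(size: tuple, degrees: int) -> tuple:
    """Size after a rotation by a multiple of 90 degrees."""
    if degrees % 90 != 0:
        raise ValueError("only multiples of 90 degrees are supported")
    width, height = size
    # Quarter turns swap width and height; half turns leave them unchanged.
    return (height, width) if degrees % 180 == 90 else (width, height)


original = (1334, 2000)
cropped = cropped_size(*original, 0.5)
final = rotated_size(cropped, 180)
area_ratio = (cropped[0] * cropped[1]) / (original[0] * original[1])

print(cropped, final, area_ratio)  # (667, 1000) (667, 1000) 0.25
```

Under that reading, halving each side keeps a quarter of the original pixels, and the 180 degree rotation leaves the 667x1000 dimensions unchanged, which matches the sizes in Claude's confirmation.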

This seamless transition from skill creation to skill utilization underscores the extensibility and practical utility of Claude's "Skills" framework. While demonstrated with image editing, the implications for other workflows are vast. Imagine creating custom skills for data analysis, content generation with specific stylistic constraints, or even complex multi-tool orchestration within a business process. The ability for an AI to not only understand user intent but to independently construct the necessary tools to fulfill that intent marks a significant evolution in AI’s role, shifting it from a reactive assistant to a proactive, generative development partner. This capability is available to Pro, Max, Team, and Enterprise users, positioning Claude as a powerful asset for organizations seeking to rapidly prototype and deploy custom AI-driven solutions.