The era of stateless AI interactions is drawing to a close with Anthropic's latest update to Claude: persistent memory. The upgrade shifts Claude from a transactional tool toward an adaptive, context-aware digital partner that carries what it learns about a user from one conversation to the next, and it promises to change how professionals engage with artificial intelligence.

Anthropic, a leading AI research and safety company, recently unveiled a significant enhancement to its Claude AI model, allowing it to retain and recall information across multiple conversations and sessions. This feature, simply termed "memory," addresses a critical pain point in current large language model (LLM) usage: the constant need to re-establish context. As outlined in the video accompanying the announcement, Claude can now remember how users work across web, desktop, and mobile applications, eliminating the inefficiency of repeating explanations or sifting through past interactions.

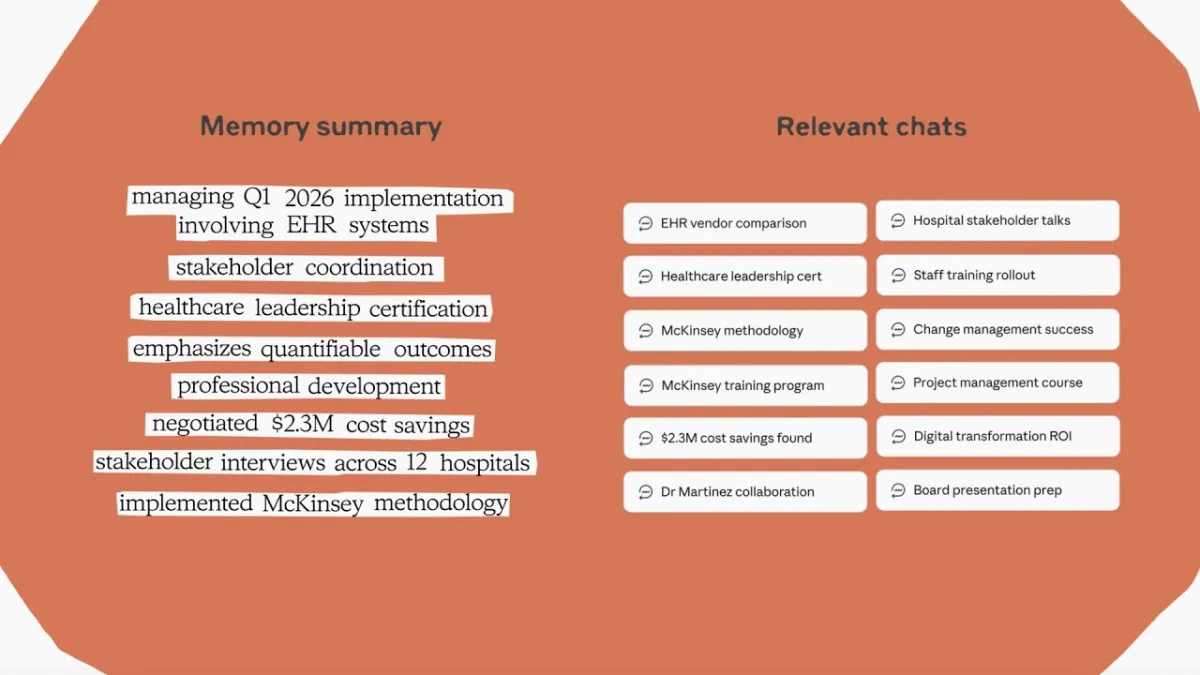

The implementation of memory is designed with user control at its core. The video demonstrates clear toggles for "Search and reference chats" and "Generate memory from chat history," empowering users to dictate the extent of Claude's recall. Once enabled, Claude actively synthesizes a "Memory summary" from past interactions, categorizing information into practical domains such as "Role & Work," "Current Projects," and even "Personal Content." This proactive synthesis creates a living profile of the user's ongoing tasks, preferences, and professional identity. For instance, a user's memory might include details like "Works as a healthcare transformation consultant leading digital strategy for Mountain View Healthcare's 12-hospital network" or "Planning spring 2026 trip to Japan."
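To make the relationship between the two toggles and the categorized summary concrete, here is a minimal sketch in Python. It is purely illustrative: the class names, field names, and methods are hypothetical, chosen to mirror the labels shown in the video, and it says nothing about how Anthropic actually stores or generates memory. The only behavior it models is that nothing is remembered unless the user has opted in, and that individual entries can be removed.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class MemorySettings:
    # Hypothetical flags mirroring the two toggles shown in the video.
    # Both default to off, so memory is strictly opt-in in this sketch.
    search_and_reference_chats: bool = False
    generate_memory_from_chat_history: bool = False


@dataclass
class MemorySummary:
    # Maps a category label (e.g. "Role & Work") to remembered statements.
    categories: dict[str, list[str]] = field(default_factory=dict)

    def remember(self, settings: MemorySettings, category: str, statement: str) -> bool:
        """Store a statement only if the user enabled memory generation."""
        if not settings.generate_memory_from_chat_history:
            return False
        self.categories.setdefault(category, []).append(statement)
        return True

    def forget(self, category: str, statement: str) -> bool:
        """Delete one remembered statement; drop the category if it becomes empty."""
        items = self.categories.get(category, [])
        if statement not in items:
            return False
        items.remove(statement)
        if not items:
            del self.categories[category]
        return True


# Usage: with the toggle on, statements are filed under their category.
settings = MemorySettings(generate_memory_from_chat_history=True)
summary = MemorySummary()
summary.remember(settings, "Role & Work", "Works as a healthcare transformation consultant")
summary.remember(settings, "Personal Content", "Planning spring 2026 trip to Japan")
```

Defaulting both flags to off is a design choice of this sketch, meant to reflect the user-controlled framing described above rather than any documented default.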

This persistent understanding profoundly streamlines workflows. Imagine needing to compile a quarterly performance review. Instead of manually collating achievements or feeding the AI piecemeal data, a user can simply prompt, "Can you remind me of everything I've accomplished this quarter? Have to write a very overdue self review..." Claude then searches relevant chats, drawing upon its generated memory and specific past conversations to produce a comprehensive summary. "Perfect! I found our work discussions from this quarter. Here's everything you've accomplished," Claude responds, presenting a detailed breakdown of project leadership, financial impact, professional development, and team collaboration. The ability to then ask Claude to "structure by impact areas" for a compelling review demonstrates the immediate, practical utility of this deep contextual understanding.

This memory feature marks a pivotal step towards truly personalized AI. Previous LLMs, while powerful, operated largely as blank slates with each new interaction, requiring users to constantly re-educate them. Claude's memory changes this fundamental dynamic. It fosters a cumulative intelligence, allowing the AI to build a nuanced understanding of an individual's professional role, personal interests, communication style, and ongoing objectives. This isn't just about recalling facts; it's about forming a persistent, evolving model of the user, enabling more relevant, proactive, and anticipatory assistance. The integration of "Personal Content" into the memory summary highlights Anthropic's vision for a holistic AI companion, capable of assisting across both professional and personal spheres, reflecting the integrated nature of modern life.

The immediate benefit for founders, VCs, and AI professionals lies in a tangible boost to productivity and a seamless integration into existing workflows. The explicit promise of "No more re-explaining context or hunting through old chats—just pick up right where you left off" resonates deeply with anyone navigating complex projects and diverse information streams. For a startup founder, this could mean Claude instantly recalling details of past investor pitches, market analyses, or product development discussions, providing instant summaries or drafting follow-ups without repetitive input. For a defense analyst, it could involve recalling nuances of geopolitical events, past intelligence reports, or specific operational details, enabling faster, more informed decision-making. The AI evolves from a sophisticated search engine to a true co-pilot, reducing cognitive load and accelerating the pace of work by preserving critical context. This capability will be particularly valuable in environments where continuous, iterative work is the norm, allowing for continuity across tasks and projects.

Anthropic's deliberate design choice to provide explicit user controls for memory generation and chat referencing offers a crucial insight into its approach to responsible AI development. In an era increasingly concerned with data privacy and algorithmic transparency, giving users granular control over what information Claude retains and how it uses it builds essential trust. This isn't passive data collection; it's opt-in, managed personalization. For enterprise clients, this level of control will be paramount, offering assurances regarding sensitive business information and compliance. The ability to manage and even delete specific memories ensures that the power of personalization doesn't come at the cost of user autonomy, a critical factor for widespread adoption in professional settings. This careful balance between enhanced utility and user governance will likely become a benchmark for future AI product development.

Claude's memory feature represents more than just an incremental update; it signifies a qualitative leap in AI capability. It moves beyond the often-frustrating experience of a perpetually amnesiac assistant towards one that learns, adapts, and remembers. This transition positions Claude as a far more effective and indispensable tool for high-stakes professional environments, where efficiency, contextual understanding, and continuity are paramount. The implications for workflow optimization and personalized digital assistance are profound, setting a new standard for what users can expect from their AI counterparts.