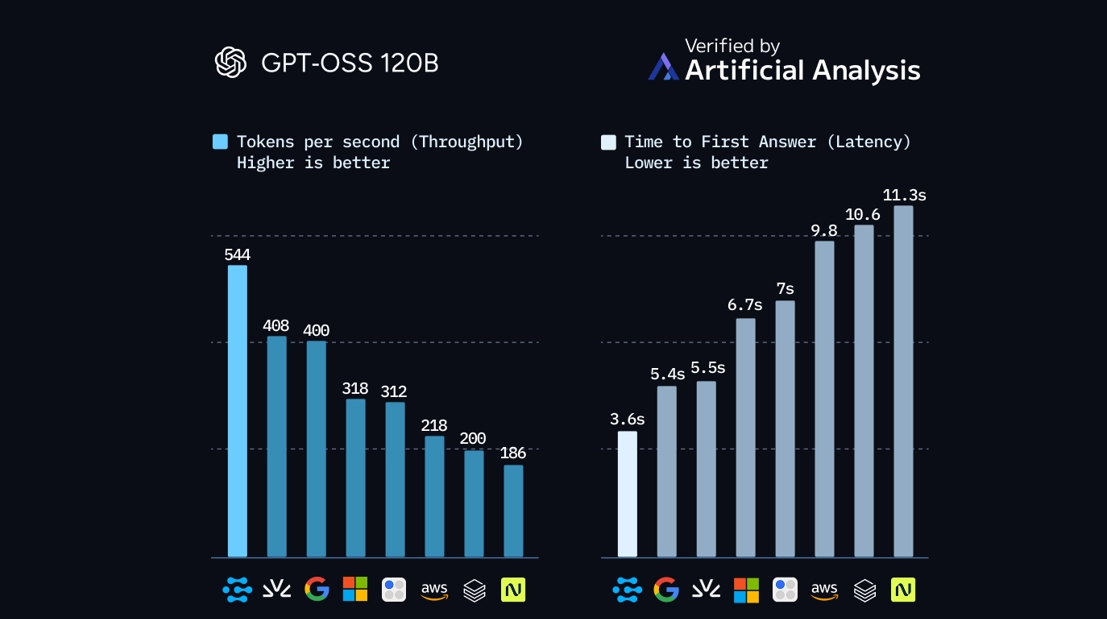

Clarifai’s latest benchmark result on OpenAI’s GPT-OSS-120B model points to a quiet but important shift in AI infrastructure. Running the model on its new Clarifai Reasoning Engine, built for agentic AI inference, the company recorded 544 tokens per second at a blended price of $0.16 per million tokens, as measured by the independent firm Artificial Analysis. That was the highest throughput of any provider in the benchmark, including vendors using custom inference silicon such as SambaNova and Groq.

The result doesn’t settle the GPU-versus-ASIC debate, but it does show that a well-optimized GPU stack can compete at the top end of large-model inference without custom hardware. For teams running agentic and reasoning-heavy workloads, it is a signal that software and serving architecture may now matter as much as chip choice.

Clarifai’s numbers build on earlier work. Its Compute Orchestration platform had previously delivered 313 tokens per second on GPT-OSS-120B, with a time-to-first-token (TTFT) of about 0.27 seconds, at the same $0.16 per million tokens. By October, the Reasoning Engine had raised throughput about 1.7-fold, to 544 tokens per second, while TTFT rose modestly to around 0.36 seconds. For interactive chat, that extra 90 milliseconds may be noticeable but is rarely critical; for multi-thousand-token reasoning traces, sustained throughput dominates both the user experience and the cost equation.
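A rough way to see why throughput dominates long traces is to model a response’s total time as TTFT plus output tokens divided by the decode rate. The sketch below plugs in the two Clarifai configurations quoted above. It assumes the quoted tokens-per-second figure holds steady for the whole response and ignores network and queueing time, so treat it as back-of-the-envelope arithmetic rather than a benchmark.

```python
def total_time_s(output_tokens: int, ttft_s: float, tokens_per_s: float) -> float:
    """Simplified end-to-end time: wait for the first token, then decode at a fixed rate."""
    return ttft_s + output_tokens / tokens_per_s


def break_even_tokens(high_ttft: dict, low_ttft: dict) -> float:
    """Output length at which two configurations finish at the same time.

    `high_ttft` starts later but decodes faster than `low_ttft`.
    """
    ttft_gap = high_ttft["ttft_s"] - low_ttft["ttft_s"]
    rate_gap = 1 / low_ttft["tokens_per_s"] - 1 / high_ttft["tokens_per_s"]
    return ttft_gap / rate_gap


# Figures quoted above for GPT-OSS-120B on Clarifai.
COMPUTE_ORCHESTRATION = {"ttft_s": 0.27, "tokens_per_s": 313}
REASONING_ENGINE = {"ttft_s": 0.36, "tokens_per_s": 544}

for n in (100, 1_000, 4_000):
    before = total_time_s(n, **COMPUTE_ORCHESTRATION)
    after = total_time_s(n, **REASONING_ENGINE)
    print(f"{n:>5} output tokens: {before:5.2f} s -> {after:5.2f} s")

print(f"break-even at ~{break_even_tokens(REASONING_ENGINE, COMPUTE_ORCHESTRATION):.0f} tokens")
```

Under this simplified model, the extra 90 ms of TTFT is recovered after roughly 66 output tokens, and a 4,000-token response drops from about 13.0 s to about 7.7 s.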

The Reasoning Engine’s gains come from incremental, software-first work rather than a single feature. Clarifai uses adaptive kernel and scheduling strategies that adjust batching, memory reuse, and execution paths based on real workload patterns. Instead of relying on static configurations, the system learns from repeated request shapes common in agentic workflows: long chains of calls, similar prompt structures, and recurring tool patterns. Over time, these optimizations compound, particularly in environments where the same models and flows run at sustained volume.
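Clarifai has not published how its adaptive logic works, so the following is only a generic illustration of learning from repeated request shapes. It buckets requests by rough prompt and output length and, per bucket, uses an epsilon-greedy rule to settle on the batch size with the best observed throughput. `AdaptiveBatchPolicy`, the candidate batch sizes, and the simulated throughput numbers are all hypothetical.

```python
import random
from collections import defaultdict


class AdaptiveBatchPolicy:
    """Hypothetical tuner: learns a good batch size per request-shape bucket.

    Epsilon-greedy over fixed candidate batch sizes, scored by the mean
    throughput observed for each (shape bucket, batch size) pair.
    """

    def __init__(self, candidates=(1, 4, 8, 16, 32), epsilon=0.1, seed=0):
        self.candidates = tuple(candidates)
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        # (shape_bucket, batch_size) -> [sum of tokens/s, observation count]
        self.stats = defaultdict(lambda: [0.0, 0])

    @staticmethod
    def bucket(prompt_tokens, output_tokens):
        # Power-of-two buckets, so similar request shapes share statistics.
        return (prompt_tokens.bit_length(), output_tokens.bit_length())

    def _mean(self, key):
        total, count = self.stats.get(key, (0.0, 0))
        # Untried sizes score +inf, so each candidate is measured at least once.
        return total / count if count else float("inf")

    def choose(self, shape):
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.candidates)  # explore
        return max(self.candidates, key=lambda b: self._mean((shape, b)))  # exploit

    def record(self, shape, batch_size, tokens_per_s):
        entry = self.stats[(shape, batch_size)]
        entry[0] += tokens_per_s
        entry[1] += 1


# Toy stand-in for a real measurement: throughput peaks at batch size 8.
def simulated_tokens_per_s(batch_size):
    return {1: 100, 4: 300, 8: 450, 16: 400, 32: 250}[batch_size]


policy = AdaptiveBatchPolicy()
shape = AdaptiveBatchPolicy.bucket(prompt_tokens=1_200, output_tokens=3_000)
for _ in range(200):
    b = policy.choose(shape)
    policy.record(shape, b, simulated_tokens_per_s(b))

policy.epsilon = 0.0  # pure exploitation, to inspect what was learned
print(policy.choose(shape))  # 8
```

A production scheduler would also weigh latency targets, memory limits, and noisy measurements; the sketch only shows how decisions keyed to recurring request shapes can improve as traffic accumulates.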

A key detail is that Clarifai’s performance improvements do not appear to trade away accuracy. The company is still serving GPT-OSS-120B, not a pruned or heavily approximated variant. That matters for enterprises that want to push reasoning workloads into production without rewriting prompts or accepting degraded quality to hit performance targets.

Underneath this stack, Clarifai runs on dedicated GPUs from the Vultr GPU cloud rather than on heavily virtualized, multi-tenant instances. Reserved capacity helps keep performance predictable, which matters for the Reasoning Engine’s adaptive serving logic: optimizations tuned to observed request patterns pay off only if the hardware behaves consistently from one request to the next. In the Artificial Analysis benchmark, it is this combination of Clarifai’s software stack and a stable GPU environment that shows up in the throughput and latency numbers.
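One way to see why predictability matters for agentic workloads: if each call in a sequential chain independently has some probability of landing in the latency tail, the chance that the chain hits at least one slow call grows quickly with its length. The sketch below computes `1 - (1 - p) ** N`. The per-call tail rates are illustrative assumptions, not figures from the benchmark, and real calls are rarely fully independent.

```python
def chain_tail_probability(p_slow_call: float, calls: int) -> float:
    """Chance that at least one of `calls` independent sequential calls is slow."""
    return 1 - (1 - p_slow_call) ** calls


# Illustrative per-call tail rates: noisier shared capacity vs. steadier dedicated capacity.
for label, p in (("1% of calls slow", 0.01), ("0.1% of calls slow", 0.001)):
    for calls in (20, 50):
        print(f"{label:>18}, {calls} calls: {chain_tail_probability(p, calls):.1%}")
```

At a 1% per-call tail rate, a 20-call chain has about an 18% chance of hitting at least one slow call, and a 50-call chain about 40%; cutting the per-call rate tenfold brings those to roughly 2% and 5%.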

In other words, the benchmark is as much about matching the serving software to a predictable GPU environment as it is about raw hardware. The GPUs themselves are not unusual; the way they are scheduled and exposed to Clarifai’s stack is what enables the tuning.

Artificial Analysis’ benchmark gives some context by grouping providers into rough performance tiers.

At the high end, Clarifai leads with 544 tokens per second. Fireworks follows at 482 tokens per second, also on an aggressively optimized GPU stack that leans on custom batching and kernel-level tuning, priced at about $0.38 per million tokens. SambaNova comes in at around 428 tokens per second with its Reconfigurable Dataflow Unit (RDU) architecture, designed specifically for transformer-style workloads, at roughly $0.32 per million tokens. Together, Fireworks and SambaNova show that there is more than one viable route to high throughput: a software-first GPU stack in Fireworks’ case, custom silicon in SambaNova’s.

A mid-range band includes Groq and the hyperscalers. Groq’s Language Processing Unit (LPU), which focuses on deterministic execution and software-managed memory, lands in the 270–315 tokens per second range at around $0.28 per million tokens. AWS Bedrock, Azure, and Google Vertex AI cluster between roughly 214 and 289 tokens per second, with prices of $0.42 to $0.54 per million tokens. These platforms emphasize breadth of services, global footprint, and compliance over top-of-chart inference metrics, and the layering needed to support those features likely adds overhead.

At the lower end, providers like Nebius show throughput below 200 tokens per second, with one result around 87 tokens per second and notably high latency. For most production reasoning workloads, that level of performance will be a limiting factor regardless of per-token pricing.

The economics become clearer when throughput is considered alongside price. At 544 tokens per second and $0.16 per million tokens, Clarifai sits in what Artificial Analysis calls the “most attractive quadrant”: high output at relatively low cost. CompactifAI, by comparison, offers a lower rate of roughly $0.10 per million tokens but delivers only about 124 tokens per second. Under pure per-token billing, the cheaper rate still yields a smaller token bill; the penalty is time, since each response takes more than four times as long to stream. For sustained workloads, where GPU capacity, agent workers, or waiting users are tied up for the duration of each request, that slower generation can erode the unit-price advantage and potentially raise total cost.
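A per-request comparison makes the trade-off concrete. The sketch below applies the quoted throughput and price for Clarifai and CompactifAI to a 4,000-token generation. It treats the blended price as a flat per-token rate (a simplification, since blended figures mix input and output rates), and `HELD_USD_PER_HOUR`, the hourly cost of whatever stays occupied while a response streams, is a hypothetical placeholder rather than anything from the benchmark.

```python
# Quoted figures: (output tokens/s, blended USD per 1M tokens).
PROVIDERS = {"Clarifai": (544, 0.16), "CompactifAI": (124, 0.10)}

# Hypothetical: hourly cost of capacity held while a request streams
# (an agent worker, a reserved instance). Not from the benchmark.
HELD_USD_PER_HOUR = 2.00


def request_cost(tokens, tokens_per_s, usd_per_million, held_usd_per_hour):
    """Return (seconds, per-token bill, time-tied cost) for one generation."""
    seconds = tokens / tokens_per_s
    token_bill = tokens * usd_per_million / 1_000_000
    time_cost = seconds * held_usd_per_hour / 3600
    return seconds, token_bill, time_cost


for name, (tps, price) in PROVIDERS.items():
    secs, bill, held = request_cost(4_000, tps, price, HELD_USD_PER_HOUR)
    print(f"{name:<12} {secs:5.1f} s  tokens ${bill:.5f}  held ${held:.5f}")
```

With these assumptions, CompactifAI’s token bill is lower ($0.00040 versus $0.00064), but the response takes about 32 seconds instead of about 7, so any cost that scales with elapsed time grows more than fourfold.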

The hyperscalers, by contrast, charge roughly 2.6 to 3.4 times Clarifai’s price while delivering about 40 to 53 percent of its throughput in this specific benchmark. For organizations already committed to those platforms for data, governance, and internal tooling, that premium may be acceptable. For teams focused purely on inference cost and performance, it highlights the value of looking beyond the default cloud choices.
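The hyperscaler comparison can be checked directly from the ranges quoted earlier. Because the price and throughput ranges are not paired per provider, the sketch only bounds each ratio from both ends.

```python
CLARIFAI_TPS = 544
CLARIFAI_USD_PER_M = 0.16

# Hyperscaler ranges quoted above (AWS Bedrock, Azure, Google Vertex AI).
HYPERSCALER_TPS = (214, 289)
HYPERSCALER_USD_PER_M = (0.42, 0.54)

price_multiples = [p / CLARIFAI_USD_PER_M for p in HYPERSCALER_USD_PER_M]
throughput_shares = [t / CLARIFAI_TPS for t in HYPERSCALER_TPS]

print(f"price: {min(price_multiples):.1f}x to {max(price_multiples):.1f}x Clarifai's")
print(f"throughput: {min(throughput_shares):.0%} to {max(throughput_shares):.0%} of Clarifai's")
```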

For practitioners, the main takeaway is not that one provider has permanently “won,” but that the performance frontier has moved up the stack. The difference between 544 tokens per second and 214 tokens per second on the same model is largely the difference in serving architecture: kernel fusion strategies, batching logic, memory reuse, scheduling paths, and how tightly the engine integrates with the underlying GPUs.
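As a concrete instance of one item on that list, memory reuse, the sketch below shows prefix caching: when many calls share the same leading tokens (a system prompt, tool definitions), a server can reuse the attention key/value state computed for that prefix instead of recomputing it. Open-source engines such as vLLM implement a form of this; nothing here describes Clarifai’s implementation. The hypothetical `PrefixCache` class tracks only which fixed-size token blocks have been seen, using chained hashes so each key identifies the entire prefix up to that block, and the block size is an illustrative choice.

```python
import hashlib

BLOCK = 16  # tokens per cache block (illustrative)


def block_keys(tokens):
    """One key per full block; each key hashes the whole prefix up to that block."""
    h = hashlib.sha256()
    keys = []
    full = len(tokens) - len(tokens) % BLOCK
    for i in range(0, full, BLOCK):
        h.update(repr(tokens[i:i + BLOCK]).encode())
        keys.append(h.hexdigest())  # hexdigest() does not reset h, so hashing chains
    return keys


class PrefixCache:
    """Toy index of cached prefix blocks; real engines store KV tensors per block."""

    def __init__(self):
        self.blocks = set()

    def reusable_tokens(self, tokens):
        """Number of leading tokens whose KV state could be reused."""
        hit = 0
        for key in block_keys(tokens):
            if key not in self.blocks:
                break
            hit += BLOCK
        return hit

    def insert(self, tokens):
        self.blocks.update(block_keys(tokens))


shared = list(range(64))                 # e.g. system prompt + tool definitions
call_a = shared + [900, 901, 902] * 10   # first agent step
call_b = shared + [700, 701] * 12        # later step, different user content

cache = PrefixCache()
print(cache.reusable_tokens(call_a))     # 0: cold cache
cache.insert(call_a)
print(cache.reusable_tokens(call_b))     # 64: the four shared blocks are reused
```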

A practical playbook emerges from Clarifai’s results:

- Treat serving and orchestration as first-class optimization targets. Before changing models or hardware, teams can often gain more by revisiting batching, caching, and memory strategies.

- Prioritize predictable infrastructure over headline capacity. Dedicated GPUs and a simpler control plane reduce jitter that undermines fine-grained optimizations, especially in long agentic traces.

- Optimize against real workload patterns. Benchmarks are useful, but the largest gains show up when the serving stack is tuned to the actual mix of request sizes, sequence lengths, and concurrency in production.

- Consider custom silicon as an option, not a prerequisite. The latest benchmarks suggest that with enough software focus, standard GPUs on a well-behaved cloud can deliver performance that overlaps with ASIC-based systems for many workloads.
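Tuning against real workload patterns starts with measuring them. The sketch below summarizes a request log into two inputs a serving team would likely tune against: percentiles of output length, and peak concurrency computed with a sweep over start and end events. The log format and the sample entries are hypothetical.

```python
import statistics


def peak_concurrency(intervals):
    """Max number of requests in flight, from (start_s, end_s) pairs (sweep line)."""
    events = []
    for start, end in intervals:
        events.append((start, 1))
        events.append((end, -1))
    events.sort()  # at equal timestamps, ends (-1) sort before starts (+1)
    active = peak = 0
    for _, delta in events:
        active += delta
        peak = max(peak, active)
    return peak


def length_profile(lengths):
    """p50 / p90 / p99 of token counts (needs at least two samples)."""
    q = statistics.quantiles(lengths, n=100, method="inclusive")
    return {"p50": q[49], "p90": q[89], "p99": q[98]}


# Hypothetical request log: (start_s, end_s, prompt_tokens, output_tokens).
log = [
    (0.0, 8.0, 900, 4_000),
    (1.0, 3.0, 1_200, 600),
    (2.5, 9.0, 800, 3_500),
    (3.0, 4.0, 300, 150),
    (8.0, 12.0, 1_000, 2_200),
]
print(peak_concurrency([(s, e) for s, e, _, _ in log]))
print(length_profile([out for *_, out in log]))
```

For the sample log, peak concurrency is 3 and the median output is 2,200 tokens; on real traffic, the same summary would show whether batching and memory settings are sized for the requests that actually arrive.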

Clarifai is already offering to apply its Reasoning Engine techniques to customer-specific models, extending these approaches beyond GPT-OSS-120B. Whether enterprises adopt that route or build their own stack, the direction of travel is similar: inference performance is becoming a software and architecture problem as much as a hardware one.