For nearly three years, the generative AI boom has been shadowed by the persistent whisper of a bubble. But according to new data, the enterprise is done whispering. It’s writing checks.

The enterprise AI market is no longer a speculative field of pilots and proofs of concept; it is now a $37 billion industry, scaling faster than any software category in history, according to the latest State of Generative AI in the Enterprise report from Menlo Ventures. That figure, up 3.2x from 2024, signals a definitive boom, not a bubble, driven by companies prioritizing immediate productivity gains over long-term infrastructure bets.

The data reveals a chaotic, rapid transformation of the corporate tech landscape, one in which the traditional rules of procurement and vendor dominance are breaking down. Enterprises are overwhelmingly choosing to buy ready-made AI solutions rather than build them in-house (76% purchased vs. 24% built). More critically, much of the initial adoption bypasses centralized IT departments entirely.

Product-led growth (PLG)—where individual employees start using a tool and drive adoption upward—accounts for 27% of all AI application spend. That is nearly four times the rate seen in traditional SaaS. When accounting for "shadow AI" usage (employees using personal accounts like ChatGPT Plus for work), the bottom-up adoption rate may be closer to 40%. This PLG flywheel is creating a new class of winners: AI-native startups.

Startups Are Winning the Application War

The $19 billion application layer—the user-facing software that leverages the underlying models—captured more than half of all enterprise AI spending in 2025. This is where the power dynamic has flipped most dramatically.

Startups now capture nearly $2 in revenue for every $1 earned by incumbents, holding 63% of the application market share. This is a staggering reversal from just last year, when incumbents still held the lead.

The reason is velocity. In high-growth areas like product and engineering, startups are out-executing larger competitors by shipping better features faster. The canonical example is code generation, the first true "killer use case" for generative AI, which now accounts for $4.0 billion in spending.

While GitHub Copilot had every structural advantage, AI-native competitors like Cursor captured significant share by being model-agnostic and quickly integrating frontier models like Claude 3.5 Sonnet the moment they launched. This product agility let them win the ground game with developers, who then brought the tool into the enterprise.

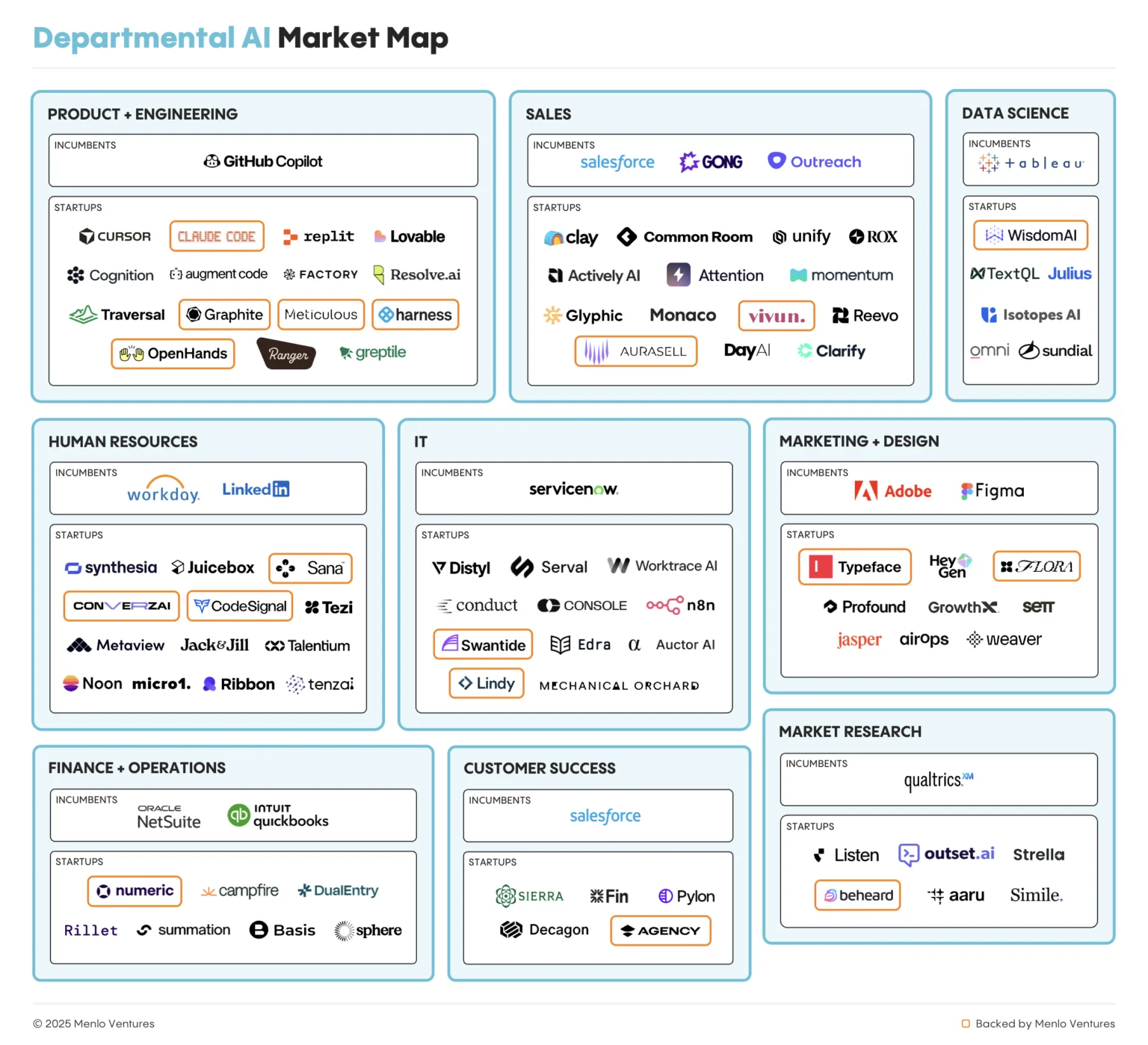

This pattern holds true across departments. In sales, startups are winning by attacking workflows that legacy systems like Salesforce do not own—research, personalization, and enrichment—positioning themselves to potentially disintermediate the system of record entirely. Only in areas requiring deep integration and reliability, like IT and data science, do incumbents maintain a lead.
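The headline figures in this section hang together arithmetically. The quick check below uses only numbers quoted in this article (in billions of US dollars); the implied 2024 market size is derived here by division and is not a figure from the report.

```python
# Figures quoted in the article, in billions of US dollars (2025).
TOTAL_MARKET = 37.0        # all enterprise generative AI spend
GROWTH_VS_2024 = 3.2       # reported year-over-year multiple
APPLICATION_LAYER = 19.0   # user-facing application spend
STARTUP_SHARE = 0.63       # startups' share of the application market

# Derived values (computed here, not reported directly).
implied_2024_market = TOTAL_MARKET / GROWTH_VS_2024                 # about 11.6
application_share = APPLICATION_LAYER / TOTAL_MARKET                # about 0.51, "more than half"
startup_per_incumbent_dollar = STARTUP_SHARE / (1 - STARTUP_SHARE)  # about 1.70

print(f"Implied 2024 market: ${implied_2024_market:.1f}B")                            # $11.6B
print(f"Application layer share: {application_share:.0%}")                            # 51%
print(f"Startup revenue per incumbent dollar: ${startup_per_incumbent_dollar:.2f}")   # $1.70
```

A 63% share works out to roughly $1.70 of startup revenue per incumbent dollar, which the report rounds to "nearly $2".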

The New Enterprise Stack

The application layer segments into three main areas: departmental, vertical, and horizontal AI.

Departmental AI, totaling $7.3 billion, is dominated by coding. The sharp jump in spending from $550 million in 2024 to $4 billion in 2025 reflects a shift in capability: models can now interpret entire codebases and execute multi-step tasks, moving coding from a point solution to an end-to-end automation category.

Vertical AI, a $3.5 billion market, is led, somewhat unexpectedly, by healthcare, which captures nearly half of all vertical spend ($1.5 billion). Driven by chronic staffing shortages and administrative burden, health systems are pouring money into solutions like ambient scribes, which can cut documentation time by more than 50%.

Meanwhile, horizontal AI remains the largest category at $8.4 billion, dominated by general-purpose copilots like ChatGPT Enterprise and Microsoft Copilot, which account for 86% of the spend. True agent platforms—systems where an LLM plans and executes actions autonomously—still only account for 10% of this spend, revealing how early the technology remains.

The $18 billion infrastructure layer reflects the same early stage. Despite the hype around agents, most production architectures are still built around fixed-sequence workflows that rely heavily on prompt design and retrieval-augmented generation (RAG). The biggest infrastructure beneficiaries are incumbents like Databricks and Snowflake, which are extending their trusted data platforms.
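To make "fixed-sequence workflow" concrete, here is a minimal sketch of a RAG pipeline whose steps always run in the same order: retrieve, build a prompt, call the model once. It assumes a toy word-overlap retriever (production systems typically use embedding search) and a hypothetical `fake_llm` stand-in for a model call; none of these names come from the report or from any vendor's product.

```python
import re
from typing import Callable


def tokenize(text: str) -> set[str]:
    """Lowercase word set; punctuation is dropped."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Toy retriever (an assumption): rank documents by word overlap with the query."""
    query_terms = tokenize(query)
    ranked = sorted(documents, key=lambda doc: len(query_terms & tokenize(doc)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, context: list[str]) -> str:
    """Prompt-design step: a fixed template that grounds the model in retrieved text."""
    bullets = "\n".join(f"- {passage}" for passage in context)
    return f"Answer using only this context:\n{bullets}\n\nQuestion: {query}"


def fixed_sequence_workflow(query: str, documents: list[str], llm: Callable[[str], str]) -> str:
    """Retrieve, then build the prompt, then call the model exactly once.
    The order of steps is hard-coded; the model never chooses its next action."""
    context = retrieve(query, documents)
    prompt = build_prompt(query, context)
    return llm(prompt)


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a model call: echoes the top-ranked passage line."""
    return prompt.splitlines()[1]


# Hypothetical sample documents for illustration only.
sample_docs = [
    "Shipping takes 5 business days.",
    "Refund policy: refunds are issued within 30 days.",
    "Refunds require a receipt.",
]

if __name__ == "__main__":
    print(fixed_sequence_workflow("What is the refund policy?", sample_docs, fake_llm))
```

An agent platform, by contrast, would put the model in a loop and let it decide at run time whether to retrieve again, call a tool, or stop; here that control flow lives entirely in the code.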

Meanwhile, the foundation model landscape saw a seismic shift.

Anthropic has unseated OpenAI as the enterprise LLM leader. It now commands 40% of enterprise LLM spend, up from 24% last year, while OpenAI’s share has fallen sharply to 27%, down from 50% in 2023. Google also made significant gains, rising to 21%.

Anthropic’s ascent is driven almost entirely by its remarkably durable dominance of the coding market, where it holds an estimated 54% share. The release of Claude 3.5 Sonnet in mid-2024 triggered the category’s breakout, and subsequent releases like Claude Opus 4.5 continue to reset the high-water mark for code generation, suggesting that for frontier use cases, users are price-insensitive and will pay more for performance.

Looking ahead to 2026, the market is poised for further disruption. The report predicts that AI will exceed human performance in daily practical programming tasks, and that the rising autonomy of agents will force explainability and governance tools to go mainstream. For now, the message is clear: the enterprise AI market is not just growing; it is fundamentally reshaping how software is bought, built, and deployed, with speed and specialized performance winning out over legacy distribution.