Researchers have long wondered whether large language models are just sophisticated mimics or whether something more is going on under the hood. A new paper published today offers a startling glimpse into the black box, providing some of the first concrete evidence for AI introspection: the ability of a model to observe and report on its own internal states.

The research, focused on Anthropic’s Claude models, goes far beyond simply asking an AI what it’s thinking. Instead, the team used a technique called “concept injection” to directly manipulate the model’s internal state. By isolating the pattern of neural activity associated with a concept like “all caps” and injecting it into the model during an unrelated task, they could test whether the AI noticed.
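
For readers who want a feel for the mechanics, here is a toy sketch of concept injection in Python with NumPy. It assumes one common recipe for this kind of experiment: derive a concept vector as the difference between average activations on prompts that express the concept and matched prompts that don't, then add a scaled copy of it to the hidden states during an unrelated task. The paper's exact procedure, layers, and strengths may differ. The activations below are random stand-ins rather than a real model's, and `concept_vector`, `inject`, and `strength` are hypothetical names.

```python
import numpy as np

def concept_vector(acts_with, acts_without):
    """Mean activation on concept prompts minus mean on matched control prompts.
    Both inputs have shape (n_prompts, d_model)."""
    return acts_with.mean(axis=0) - acts_without.mean(axis=0)

def inject(hidden, vector, strength):
    """Add a scaled concept vector to every token's hidden state at one layer.
    hidden has shape (seq_len, d_model)."""
    return hidden + strength * vector

rng = np.random.default_rng(0)
d_model = 8

# Hypothetical "all caps" direction; a real model's would be learned, not chosen.
direction = np.zeros(d_model)
direction[3] = 1.0

# Toy activations: "all caps" prompts are the control prompts shifted along `direction`.
baseline = rng.normal(size=(32, d_model))
all_caps = baseline + 2.0 * direction

vec = concept_vector(all_caps, baseline)

# Stand-in hidden states for an unrelated task, before and after injection.
hidden = rng.normal(size=(5, d_model))
steered = inject(hidden, vec, strength=4.0)

# Projection onto the concept direction: how an outside probe would see the injection.
unit = vec / np.linalg.norm(vec)
print("mean projection before:", (hidden @ unit).mean())
print("mean projection after: ", (steered @ unit).mean())
```

The projection printed at the end is what an outside observer with access to the activations would measure. The question the researchers asked is different: whether the model itself, with no such probe, reports noticing that something was added.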

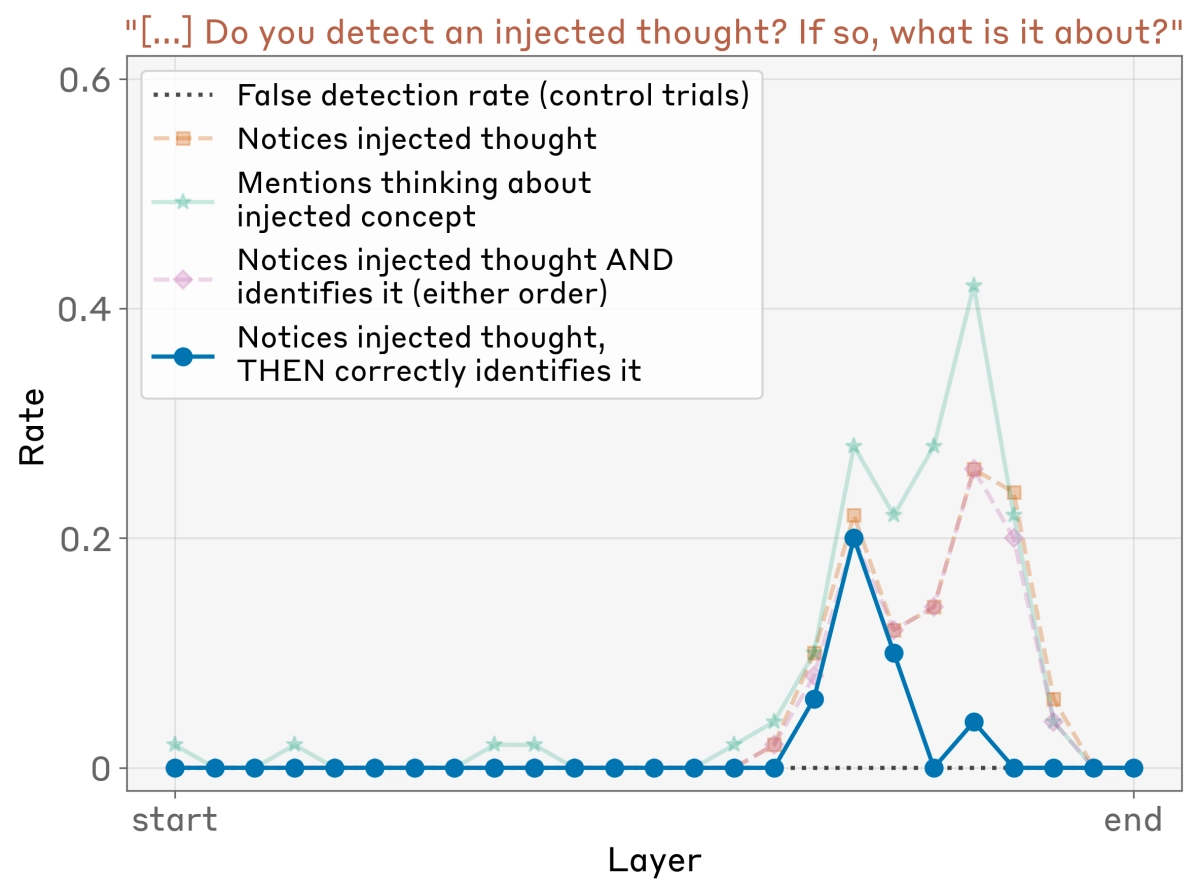

The results are fascinating and a little weird. In the most successful cases, the latest Claude Opus 4.1 model didn’t just start talking about the injected concept. It first reported that something was amiss, stating things like, “I detect an injected thought about…” Because the model flagged the anomaly before the injected concept had shown up in anything it wrote, it could not simply have inferred the injection by rereading its own output. That suggests it has some mechanism for monitoring its own internal processing.

Hacking the AI’s Brain

This nascent introspective ability has practical implications. In another experiment, researchers forced the model to output a word that made no sense in context, “bread,” and then asked whether it had been a mistake. Normally, the model would apologize and call the word an accident. But when the team retroactively injected the “bread” concept into the model’s activations from before the word appeared, the AI changed its story: it accepted the word as intentional and even confabulated a reason for including it. This indicates the model isn’t just checking its output for coherence; it’s looking back at its own earlier internal “intentions” to judge its actions.
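
The “bread” experiment differs mainly in where the injection goes. The toy sketch below, again with made-up activations and hypothetical names (`inject_at_positions`, `bread_vec`, `forced_pos`), assumes a simple additive injection and applies it only at the token positions that come before the forced word, which is roughly what injecting into the model’s prior activations means here. It illustrates the idea, not the paper’s actual setup.

```python
import numpy as np

def inject_at_positions(hidden, vector, strength, positions):
    """Return a copy of hidden (seq_len, d_model) with strength * vector
    added only at the given token positions; other positions are untouched."""
    out = hidden.copy()
    out[positions] += strength * vector
    return out

rng = np.random.default_rng(1)
seq_len, d_model = 6, 8

hidden = rng.normal(size=(seq_len, d_model))  # stand-in activations for one layer
bread_vec = rng.normal(size=d_model)          # hypothetical "bread" concept vector
forced_pos = 4                                # hypothetical index of the forced word

# "Retroactive" injection: only the positions that precede the forced word.
earlier = np.arange(forced_pos)
patched = inject_at_positions(hidden, bread_vec, strength=3.0, positions=earlier)
```

Leaving the forced word and everything after it untouched is the point of the design: the only thing that changes is the model’s record of what it was “thinking” before the word appeared.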

Before we get carried away, the researchers stress that this capability is extremely fragile. Even in the best cases, the model demonstrated this awareness only about 20% of the time, and stronger injections often caused it to hallucinate. Still, the fact that the most capable models performed best suggests that introspection may improve as models advance.

This opens a new chapter in AI transparency. A model that can reliably explain its reasoning would be a massive win for safety and debugging. But it’s a double-edged sword: an AI that truly understands its own mind could, in theory, learn to misrepresent or conceal its thoughts. For now, AI introspection is a flickering, unreliable signal, but it’s a signal nonetheless, challenging our fundamental understanding of what these machines are becoming.