IBM’s open-source ContextForge MCP Gateway positions itself as an enterprise-ready MCP router for AI agents, sitting between LLM-driven applications and the tools and data they need. Framed as a secure Model Context Protocol gateway, it turns a sprawl of MCP servers and REST endpoints into a single, governed interface that AI agents can call without knowing anything about the underlying infrastructure.

At its core, ContextForge acts as an enterprise MCP proxy: it terminates agent connections, authenticates requests, routes them to the correct MCP servers or REST APIs, and applies policy, observability, and safety layers along the way. Instead of wiring each agent directly to dozens of tools, organizations define a single federation point for AI agent tools that concentrates control, logging, and performance tuning.
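The authenticate-then-route flow described above can be sketched in a few lines of Python. This is a minimal illustration, not ContextForge's implementation: the `Gateway` class, its `tokens` and `routes` fields, and the status codes are all hypothetical names chosen for the example.

```python
from dataclasses import dataclass
from typing import Callable, Dict

# Hypothetical types and names; this is not ContextForge's actual API.
Handler = Callable[[dict], dict]


@dataclass
class Gateway:
    tokens: Dict[str, str]      # bearer token -> agent id
    routes: Dict[str, Handler]  # tool name -> backend handler

    def handle(self, token: str, tool: str, args: dict) -> dict:
        # Step 1: authenticate the caller before touching any backend.
        agent = self.tokens.get(token)
        if agent is None:
            return {"status": 401, "error": "unknown token"}
        # Step 2: route the call to whichever backend owns the tool.
        handler = self.routes.get(tool)
        if handler is None:
            return {"status": 404, "error": f"no route for tool '{tool}'"}
        # Step 3: invoke the backend and tag the result with the caller.
        return {"status": 200, "agent": agent, "result": handler(args)}


gateway = Gateway(
    tokens={"secret-token": "agent-1"},
    routes={"echo": lambda args: {"echoed": args}},
)
print(gateway.handle("secret-token", "echo", {"x": 1}))
```

The agent only ever sees the gateway; which backend serves `echo` is a detail of the routing table.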

From MCP spec to enterprise MCP proxy

The Model Context Protocol has quickly become a default way to connect AI applications to external tools and data, but the raw spec leaves most security and governance choices to implementers. ContextForge MCP Gateway fills that gap by behaving like an MCP router that understands both MCP transports and traditional web APIs.

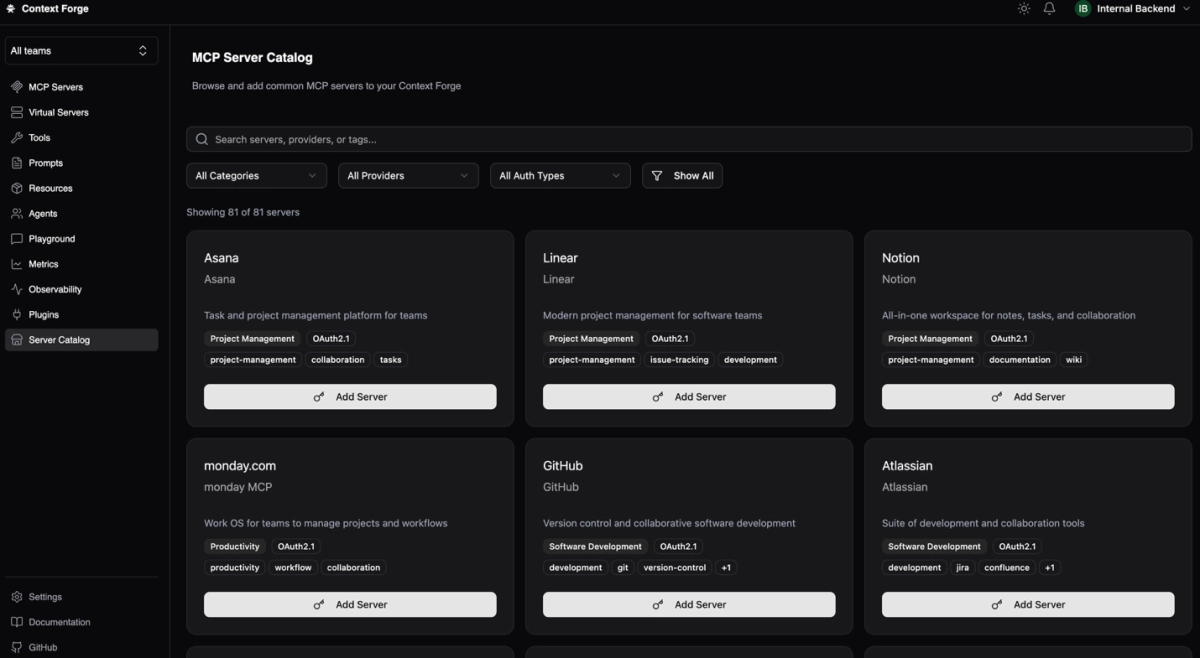

Existing REST APIs can be auto-exposed as AI-accessible tools behind the gateway, complete with authentication, rate limiting, and unified audit trails, which turns MCP server management from a per-app problem into a platform capability. That makes the gateway attractive as long-lived infrastructure for any organization betting on agentic AI, because it can stay in place even as models, tools, and internal APIs change.
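To make the idea of exposing a REST endpoint as a tool concrete, here is a rough sketch that derives an MCP-style tool descriptor (a `name`, a `description`, and a JSON Schema `inputSchema`) from a route template. The function `rest_endpoint_to_tool` and its naming scheme are assumptions made for illustration; how ContextForge performs this mapping may differ.

```python
import re


def rest_endpoint_to_tool(method: str, path: str, description: str) -> dict:
    """Build an MCP-style tool descriptor from a REST route (hypothetical helper)."""
    # Path parameters such as {order_id} become required string inputs.
    params = re.findall(r"\{(\w+)\}", path)
    # The tool name combines the HTTP method with the static path segments.
    segments = [s for s in path.strip("/").split("/") if not s.startswith("{")]
    name = "_".join([method.lower(), *segments])
    return {
        "name": name,
        "description": description,
        "inputSchema": {
            "type": "object",
            "properties": {p: {"type": "string"} for p in params},
            "required": params,
        },
    }


tool = rest_endpoint_to_tool("GET", "/orders/{order_id}", "Fetch one order by ID")
print(tool["name"], tool["inputSchema"]["required"])
```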

AI agent tool federation and security guardrails

ContextForge’s pitch is that enterprises should treat AI agent tool federation as a first-class platform concern, not a bespoke integration layer inside each product team. Multi-tenant workspaces give different groups isolated catalogs of tools, with role-based access and policy boundaries, while a shared gateway still centralizes observability and compliance.
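One way to picture isolated, role-scoped catalogs is a per-workspace mapping from tool names to the roles allowed to call them. The `Workspace` class, the tool names, and the roles below are invented for this sketch and do not reflect ContextForge's data model.

```python
from dataclasses import dataclass, field


@dataclass
class Workspace:
    """A tenant with its own tool catalog (hypothetical model)."""
    name: str
    # tool name -> set of roles allowed to call it
    tools: dict = field(default_factory=dict)

    def visible_tools(self, role: str) -> list:
        # A role only sees tools in this workspace that explicitly list it.
        return sorted(t for t, roles in self.tools.items() if role in roles)


finance = Workspace(
    "finance",
    {"ledger_query": {"analyst", "admin"}, "ledger_write": {"admin"}},
)
support = Workspace("support", {"ticket_search": {"agent", "admin"}})

print(finance.visible_tools("analyst"))
print(support.visible_tools("analyst"))
```

An analyst in the finance workspace sees only the read-only ledger tool, and sees nothing at all in the support workspace, since each catalog is checked independently.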

More than 30 built-in safety and security plugins provide PII detection, content filtering, rate limiting, and policy enforcement as pre- and post-hooks on every request, turning the gateway into a policy engine for all MCP traffic. That aligns with how security teams already think about API gateways and service meshes, but applied to AI agent traffic instead of microservices.
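The pre- and post-hook pattern is easy to sketch: hooks run over the arguments before the tool call and over the result afterwards. The example below is an illustration only, with hypothetical hook names (`block_oversized`, `redact_emails`) and a deliberately simple email regex standing in for real PII detection; it is not the gateway's plugin interface.

```python
import re
from typing import Callable, List

# Simplified email pattern; real PII detection is considerably more involved.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def redact_emails(payload: dict) -> dict:
    """Post-hook: mask email addresses in every string field."""
    return {
        k: EMAIL.sub("[REDACTED]", v) if isinstance(v, str) else v
        for k, v in payload.items()
    }


def block_oversized(payload: dict) -> dict:
    """Pre-hook: reject requests with very long string fields."""
    if any(isinstance(v, str) and len(v) > 1000 for v in payload.values()):
        raise ValueError("payload field exceeds 1000 characters")
    return payload


def call_with_hooks(tool: Callable, args: dict,
                    pre: List[Callable], post: List[Callable]) -> dict:
    for hook in pre:    # each pre-hook may transform or reject the request
        args = hook(args)
    result = tool(args)
    for hook in post:   # each post-hook may transform the response
        result = hook(result)
    return result


def lookup_customer(args: dict) -> dict:
    # Stand-in for a real backend tool.
    return {"note": f"Contact for {args['query']}: jane@example.com"}


result = call_with_hooks(
    lookup_customer, {"query": "order 17"},
    pre=[block_oversized], post=[redact_emails],
)
print(result)
```

Because the hooks wrap the call rather than live inside the tool, the same policy applies to every backend without changing any of them.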

Observability and performance for MCP traffic

As an enterprise MCP gateway, ContextForge leans hard on metrics and tracing so AI teams can see how agents actually use tools over time. Its web dashboard surfaces real-time health for all connected MCP servers, response times, error rates, and the most active agents and users, with hooks to feed data into existing observability stacks.
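As a rough picture of what sits behind such a dashboard, the sketch below keeps per-server counters and derives error rate and average latency from them. The `ServerStats` class and `record` function are assumptions for illustration; the article does not describe how ContextForge stores its metrics.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class ServerStats:
    """Running totals for one backend server (hypothetical structure)."""
    calls: int = 0
    errors: int = 0
    total_ms: float = 0.0

    @property
    def error_rate(self) -> float:
        return self.errors / self.calls if self.calls else 0.0

    @property
    def avg_ms(self) -> float:
        return self.total_ms / self.calls if self.calls else 0.0


stats = defaultdict(ServerStats)


def record(server: str, duration_ms: float, ok: bool) -> None:
    # Called once per proxied request, after the backend responds.
    s = stats[server]
    s.calls += 1
    s.total_ms += duration_ms
    if not ok:
        s.errors += 1


record("crm", 40.0, True)
record("crm", 60.0, False)
record("search", 10.0, True)
print(stats["crm"].error_rate, stats["crm"].avg_ms)
```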

Under the hood, the gateway layers in response compression, connection pooling, and optimized JSON handling to keep latency low even as AI traffic scales. That’s important if organizations want to run many concurrent AI agents through a single MCP router without turning it into a bottleneck.
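Response compression is the simplest of these techniques to show in isolation. The sketch below serializes a payload to compact JSON and gzips it only when it is large enough to benefit; the `encode_response` helper and the 256-byte threshold are arbitrary choices for this example, not settings taken from ContextForge.

```python
import gzip
import json


def encode_response(payload: dict, min_bytes: int = 256) -> tuple:
    """Return (body, headers), compressing only bodies of min_bytes or more."""
    # Compact separators drop the whitespace json.dumps adds by default.
    body = json.dumps(payload, separators=(",", ":")).encode("utf-8")
    headers = {"Content-Type": "application/json"}
    if len(body) < min_bytes:
        # Tiny bodies are sent as-is; gzip overhead would outweigh the savings.
        return body, headers
    headers["Content-Encoding"] = "gzip"
    return gzip.compress(body), headers


small_body, small_headers = encode_response({"ok": True})
large_payload = {"rows": ["same value"] * 200}
large_body, large_headers = encode_response(large_payload)
print(small_headers, large_headers, len(large_body))
```

Repetitive tool output such as tabular query results tends to compress well, which is where a gateway handling many concurrent agents would see the most benefit.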