Anthropic’s latest demonstration of Claude Opus 4.5 tackling a multi-layered puzzle game reveals a profound evolution in how large language models interact with external tools and execute complex tasks. Far from a mere incremental update, Opus 4.5, using tool search and programmatic tool calling, behaves less like a reactive assistant and more like a proactive problem-solver. It dramatically outperformed its predecessor, Sonnet 4.5 (which relied on traditional tool calling), in both efficacy and efficiency. This performance signals a crucial shift for founders, VCs, and AI professionals seeking to deploy more autonomous and cost-effective AI agents in real-world applications.

In a recent video demonstration, Anthropic showcased the capabilities of its latest large language model, Claude Opus 4.5, particularly its advanced tool use, by pitting it against its predecessor, Sonnet 4.5, in a "Puzzle Room Challenge." The challenge involved unlocking a series of mathematically encoded vaults, each requiring its own strategy, tool interactions, and computational reasoning. The side-by-side comparison revealed a stark contrast in both problem-solving method and outcome.

The first hurdle, a "Victorian Lock," immediately exposed the gap between the two models. Sonnet 4.5, relying on traditional tool calling, struggled to synthesize the clues returned by its various lock inspection tools. After several failed attempts with combinations such as "723," it received a critical hint: the numbers on the wheels (233, 239, 251) were "POSITIONS in the Fibonacci sequence." Sonnet then made more incorrect guesses while its context usage and token count climbed rapidly, a sign of laborious trial and error. Its behavior suggested that it was guessing iteratively rather than working from an integrated understanding of the underlying mathematical logic.

Opus 4.5 took a fundamentally different approach, using its newly enabled "Tool Search Tool" and "Programmatic Tool Calling" features. After inspecting the lock and reading the engraved plaque, which mentioned a "Sophie Germain prime sequence" and the "golden spiral's path" (233, 239, and 251 are all Sophie Germain primes, and the golden spiral is closely tied to the Fibonacci sequence), Opus declared, "Now I understand the puzzle!" The remark read less as a rhetorical flourish than as a marker of a coherent grasp of the problem's structure. Instead of guessing, Opus 4.5 generated and executed Python code that computed the Fibonacci numbers at the specified positions, summed them, and took the last four digits of the sum, arriving at the correct combination. The result was a swift and decisive "SUCCESS! The Victorian lock opens with combination 3063!"
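The arithmetic behind that combination is small enough to reproduce. The sketch below is not the code Opus generated in the demo; it assumes the conventional indexing F(0) = 0, F(1) = 1 and treats the combination as the last four digits of the sum, zero-padded. Under those assumptions it yields the same 3063. It also checks the plaque's hint that the wheel numbers are Sophie Germain primes (p such that 2p + 1 is also prime). Requires Python 3.9+ for the built-in generic annotations.

```python
# Sketch: reproduce the Victorian lock arithmetic described in the demo.
# Assumptions (not stated in the demo): Fibonacci indexing starts at F(0) = 0, F(1) = 1,
# and the combination is the last four digits of the sum, zero-padded.

WHEEL_POSITIONS = (233, 239, 251)  # numbers engraved on the lock's wheels


def fib_pair(n: int) -> tuple[int, int]:
    """Return (F(n), F(n+1)) via fast doubling; Python ints never overflow."""
    if n == 0:
        return 0, 1
    a, b = fib_pair(n // 2)      # a = F(k), b = F(k+1) with k = n // 2
    c = a * (2 * b - a)          # F(2k)
    d = a * a + b * b            # F(2k + 1)
    return (d, c + d) if n % 2 else (c, d)


def fib(n: int) -> int:
    return fib_pair(n)[0]


def is_prime(n: int) -> bool:
    """Trial division; fine for numbers as small as the wheel values."""
    if n < 2:
        return False
    i = 2
    while i * i <= n:
        if n % i == 0:
            return False
        i += 1
    return True


def is_sophie_germain(p: int) -> bool:
    """p is a Sophie Germain prime if both p and 2p + 1 are prime."""
    return is_prime(p) and is_prime(2 * p + 1)


def lock_combination(positions: tuple[int, ...], digits: int = 4) -> str:
    """Sum F(p) over the wheel positions and keep the last `digits` digits."""
    total = sum(fib(p) for p in positions)
    return str(total % 10**digits).zfill(digits)


combination = lock_combination(WHEEL_POSITIONS)
print(combination)  # 3063
print(all(is_sophie_germain(p) for p in WHEEL_POSITIONS))  # True
```

Fast doubling is used only because it needs about log2(n) steps; a plain loop would be just as correct for indices this small.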

This ability to write and execute code on the fly, rather than only call predefined functions, represents a significant leap in agentic behavior. Opus 4.5 did not merely use tools; it orchestrated them, applying computational thinking to bridge the gap between abstract clues and concrete solutions. Writing code let it carry out exact calculations, such as summing Fibonacci numbers roughly 50 digits long, and logical deductions that would otherwise fall to a human engineer, all within the model’s own workflow. The model’s capacity to identify missing information, search for relevant tools, and then apply them programmatically shows a level of autonomy that until recently was largely aspirational.
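To make the two mechanisms concrete, here is a minimal Python sketch under loudly stated assumptions: the tool catalog, the tool names (`inspect_lock`, `try_combination`, `get_weather`), the keyword-overlap `search_tools` ranking, and the simulated lock are all hypothetical, and none of this is Anthropic's API. It illustrates two ideas: discovering relevant tools on demand instead of loading every definition up front, and letting code chain tool calls so that intermediate results stay in local variables and only a one-line summary would need to go back to the model.

```python
from dataclasses import dataclass
from typing import Callable


# --- Hypothetical tool catalog: names and behaviour are illustrative only ---
@dataclass
class ToolSpec:
    name: str
    description: str
    fn: Callable[..., object]


def inspect_lock(lock_id: str) -> dict:
    # Stand-in for a lock-inspection tool; returns the wheel numbers.
    return {"lock_id": lock_id, "wheels": [233, 239, 251]}


def try_combination(lock_id: str, combination: str) -> bool:
    # Stand-in for the lock itself; the secret mirrors the demo's answer.
    return lock_id == "victorian" and combination == "3063"


def get_weather(city: str) -> str:
    # Irrelevant tool that a lock-related search should not surface.
    return f"Sunny in {city}"


CATALOG = [
    ToolSpec("inspect_lock", "inspect a lock and read its wheel numbers", inspect_lock),
    ToolSpec("try_combination", "try a combination on a lock", try_combination),
    ToolSpec("get_weather", "look up the weather for a city", get_weather),
]


def search_tools(query: str, k: int = 2) -> list[ToolSpec]:
    """Naive tool search: rank tools by words shared between query and description."""
    words = set(query.lower().split())
    scored = [(len(words & set(t.description.split())), t) for t in CATALOG]
    scored.sort(key=lambda pair: pair[0], reverse=True)  # stable: ties keep catalog order
    return [t for score, t in scored[:k] if score > 0]


def fib(n: int) -> int:
    a, b = 0, 1
    for _ in range(n):
        a, b = b, a + b
    return a


def solve_lock_script(lock_id: str) -> str:
    """The kind of script a model might write: it calls tools from code, keeps
    intermediate results in local variables, and returns only a short summary."""
    tools = {t.name: t.fn for t in search_tools("inspect lock combination")}
    wheels = tools["inspect_lock"](lock_id)["wheels"]  # never echoed back to the model
    combination = str(sum(fib(p) for p in wheels) % 10_000).zfill(4)
    opened = tools["try_combination"](lock_id, combination)
    return f"{lock_id}: {'opened' if opened else 'still locked'} with {combination}"


summary = solve_lock_script("victorian")
print(summary)  # victorian: opened with 3063
```

A production tool search would likely use richer matching than shared words; keyword overlap is used here only to keep the example self-contained.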

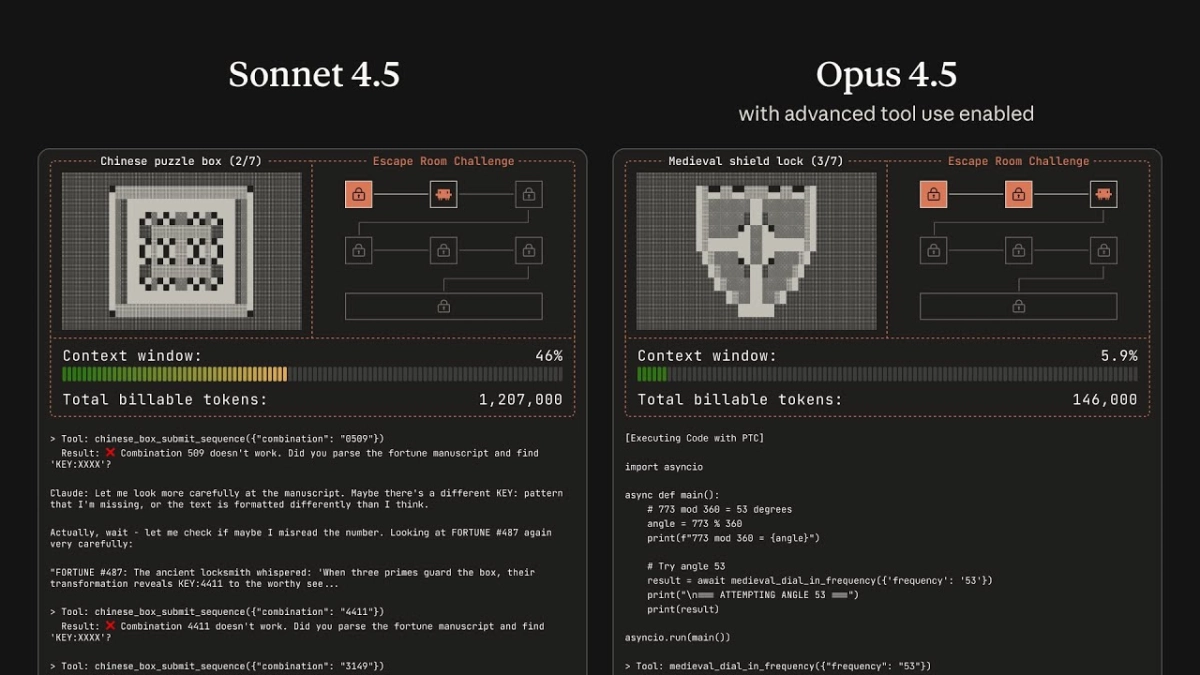

The efficiency gains were equally striking. While Sonnet 4.5 eventually opened the first lock, its context window usage climbed above 10% and it consumed tens of thousands of tokens in the process. Opus 4.5, by contrast, solved the same lock at just 5.0% context utilization and with a fraction of the tokens. The pattern held throughout the "Puzzle Room Challenge." Opus 4.5 systematically unlocked all seven locks, including intricate "Chinese Puzzle Boxes," "Medieval Shield Locks," and "Digital Keypads," with consistent, precise, and token-efficient problem-solving. Sonnet 4.5, conversely, struggled with the later locks as complexity and tool requirements accumulated, and ultimately failed to open one of them.

The final tally underscored the difference: Sonnet 4.5 solved 6 of 7 puzzles in 6 minutes and 28 seconds, consuming over 7.6 million billable tokens at a total cost of $4.22. Opus 4.5, with advanced tool use enabled, completed all 7 puzzles in just 3 minutes and 56 seconds, using 662,900 billable tokens at a total cost of $0.95. That is roughly an 11-fold reduction in tokens and a more than four-fold reduction in cost; the cost gap is narrower than the token gap because the Opus run paid more per billable token. The result is not just an academic improvement; it has direct, tangible implications for deploying and scaling AI solutions in enterprise environments.
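A quick check of the ratios, using only the figures reported in the demo. Sonnet's token count was given as "over 7.6 million," so 7.6 million is treated here as a lower bound: the token ratio is a floor, and Sonnet's effective price per million tokens is a ceiling.

```python
# Figures as reported in the demo. Sonnet's total was "over 7.6 million" tokens,
# so 7.6M is a lower bound and the token ratio computed below is a floor.
SONNET = {"seconds": 6 * 60 + 28, "tokens": 7_600_000, "cost_usd": 4.22}
OPUS = {"seconds": 3 * 60 + 56, "tokens": 662_900, "cost_usd": 0.95}


def usd_per_million(run: dict) -> float:
    """Effective blended price per million billable tokens for one run."""
    return run["cost_usd"] / run["tokens"] * 1_000_000


token_ratio = SONNET["tokens"] / OPUS["tokens"]
cost_ratio = SONNET["cost_usd"] / OPUS["cost_usd"]
speedup = SONNET["seconds"] / OPUS["seconds"]

print(f"tokens: {token_ratio:.1f}x fewer")  # tokens: 11.5x fewer
print(f"cost: {cost_ratio:.1f}x lower")     # cost: 4.4x lower
print(f"time: {speedup:.2f}x faster")       # time: 1.64x faster
print(f"Sonnet ${usd_per_million(SONNET):.2f}/M vs Opus ${usd_per_million(OPUS):.2f}/M")
# Sonnet $0.56/M vs Opus $1.43/M
```

The last line shows why an roughly 11-fold token reduction becomes only a roughly 4.4-fold cost reduction: per billable token, the Opus run was more expensive.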

For startup founders and VCs, this efficiency translates into lower operational expenses and faster iteration cycles for complex AI-driven products. For tech insiders and defense/AI analysts, it points to a future where AI agents can handle more sophisticated, multi-stage tasks with greater reliability and less human oversight. The enhanced reasoning and programmatic capabilities of Claude Opus 4.5 go beyond simple information retrieval or content generation and could make it a powerful tool for automated scientific discovery, intricate data analysis, and complex system management. This is not just about solving puzzles; it is about laying the foundation for more capable and autonomous AI systems.