In pursuit of ever-faster artificial intelligence, Cerebras Systems has built what it calls the world's fastest AI infrastructure, recently unveiled in Oklahoma. The facility delivers 44 ExaFLOPS of new compute power to customers, and it is not merely about raw processing might: it reflects a radical rethinking of semiconductor design, cooling, and power delivery that challenges decades of conventional wisdom in high-performance computing.

Matthew Berman of Forward Future recently toured this cutting-edge facility and spoke with Andrew Feldman, Co-Founder and CEO of Cerebras, and Billy Wooten, COO of Scale Data Centers, about the strategic decisions and technological breakthroughs that underpin this audacious endeavor. The conversation delved into everything from the surprising choice of location to the intricate engineering required to push the boundaries of AI acceleration.

Cerebras’ decision to build its flagship data center in Oklahoma City, outside the traditional tech hubs, was a calculated one. Feldman cited a confluence of factors: "reasonable labor costs," ample space to "build and expand," and crucially, "reasonably priced power." Beyond economics, the facility's design reflects its geographical reality. Built from reinforced concrete and engineered for tornado resilience, it is meant to keep operating in a region prone to severe weather, a consideration that even affects the "cost of insurance," as Feldman noted. This attention to physical infrastructure underscores a holistic approach to reliability.

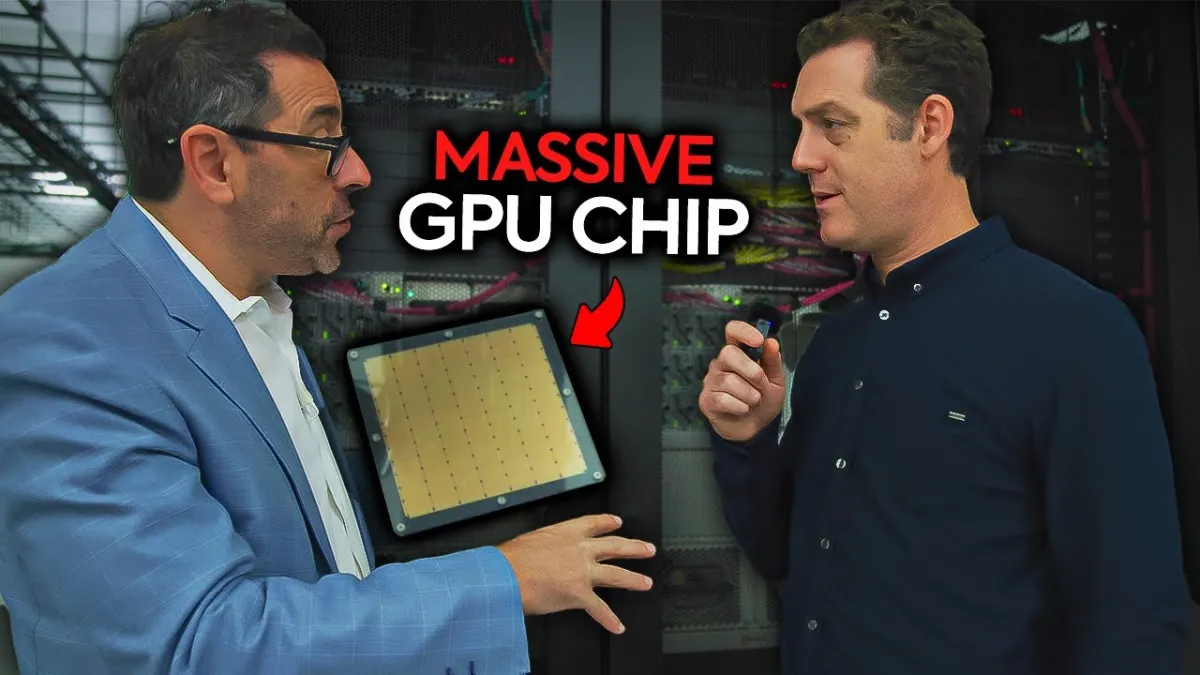

At the heart of Cerebras' speed advantage lies its groundbreaking Wafer-Scale Engine (WSE). Feldman dramatically illustrated its scale by presenting a chip the size of a dinner plate, measuring 46,250 square millimeters. In stark contrast, a traditional chip, considered large at 750 square millimeters, is roughly the size of a postage stamp. This immense scale allows Cerebras to integrate an entire wafer of processors into a single, monolithic chip, eliminating the latency-inducing communication between discrete chips that plagues conventional GPU-based systems.
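To put the dinner-plate comparison in numbers, the two areas quoted above can be divided directly. The short Python sketch below uses only the figures from the article; the function name is illustrative.

```python
# Areas quoted in the article, in square millimeters.
WSE_AREA_MM2 = 46_250
LARGE_CONVENTIONAL_CHIP_MM2 = 750


def area_ratio(large_mm2: float, small_mm2: float) -> float:
    """Return how many times larger the first area is than the second."""
    return large_mm2 / small_mm2


ratio = area_ratio(WSE_AREA_MM2, LARGE_CONVENTIONAL_CHIP_MM2)
print(f"The wafer-scale chip has about {ratio:.1f}x the silicon area.")
```

By these figures, the wafer-scale chip covers a little over 60 times the area of a conventional chip that is itself considered large.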

The design's biggest payoff is in memory access. "We have all our memory on the chip," Feldman explained, highlighting the critical difference. Traditional GPUs incur "off-chip latency" whenever data must travel between the processor and external memory. By keeping memory directly on the wafer, Cerebras is, in Feldman's words, "2.5 thousand times faster at getting to data and then using it," a performance leap with direct consequences for AI inference.

Such unprecedented compute density generates enormous heat, with a single wafer drawing 18 kilowatts of power. To manage this, Cerebras employs a sophisticated liquid cooling system, an approach it pioneered in 2017. The data center utilizes a 6,000-ton liquid-cooled chiller plant, sending 42-degree chilled water to the servers. This water then absorbs heat, returning at approximately 70 degrees, to be re-cooled in an efficient closed-loop system. Billy Wooten explained that the water is deliberately warmed to 70 degrees before reaching the wafers to prevent condensation, a precise temperature management strategy crucial for system integrity.
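For a sense of scale, the cooling figures can be converted into more familiar units. The sketch below rests on two assumptions not stated in the interview: that the "6,000-ton" rating refers to tons of refrigeration (12,000 BTU per hour each, about 3.517 kW), and that the quoted temperatures are in degrees Fahrenheit. The wafer-equivalent figure is back-of-the-envelope arithmetic, not a number from Cerebras.

```python
# One ton of refrigeration is 12,000 BTU/h, which is about 3.51685 kW.
KW_PER_REFRIGERATION_TON = 3.51685

# Figures quoted in the article.
CHILLER_PLANT_TONS = 6_000
WAFER_POWER_KW = 18


def tons_to_kw(tons: float) -> float:
    """Convert a cooling rating in tons of refrigeration to kilowatts."""
    return tons * KW_PER_REFRIGERATION_TON


def fahrenheit_to_celsius(deg_f: float) -> float:
    """Convert a temperature from Fahrenheit to Celsius."""
    return (deg_f - 32) * 5 / 9


plant_kw = tons_to_kw(CHILLER_PLANT_TONS)

# Rough upper bound: ignores pumps, networking, and every other heat source.
wafer_equivalents = plant_kw / WAFER_POWER_KW

print(f"Chiller plant: about {plant_kw / 1000:.1f} MW of heat removal")
print(f"Equivalent to the heat of roughly {wafer_equivalents:.0f} wafers")
# Assumption: the article's temperatures are in Fahrenheit.
print(f"Supply water: {fahrenheit_to_celsius(42):.1f} C")
print(f"Return water: {fahrenheit_to_celsius(70):.1f} C")
```

Under those assumptions, the plant can reject roughly 21 MW of heat, which is why an 18-kilowatt wafer is manageable at data-center scale.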

Maintaining uninterrupted operation is paramount, and the power infrastructure is built accordingly. The primary power source is natural gas converted into electricity, supplemented by battery backups that bridge the gap for approximately five minutes until three massive 3-megawatt Caterpillar generators spin up. "This is how we're able to attain nearly perfect uptime," Feldman affirmed, emphasizing the redundancy and resilience built into the system. While other AI infrastructure providers often discuss "gigawatt scale" in terms of power consumption, Cerebras focuses on "compute output," where its efficiency and unique architecture yield superior results.

Cerebras' commitment extends to domestic manufacturing, with all systems packaged and assembled in Milpitas, California. Feldman described maintaining a U.S.-based hardware pipeline as "an important part of being a good citizen in this economy," a stance that aligns with broader national interests in technological independence. The rapid expansion continues, with a second data hall, OKC2, soon to be operational, adding another 20 ExaFLOPS, roughly ten times the capacity of the U.S. Department of Energy's largest supercomputer.

Cerebras’ journey was not without its trials. Feldman recounted a challenging period of 15 to 18 months in which, despite spending $8 million a month, the team struggled to solve a critical problem. Through persistent engineering and unwavering support from the board, they eventually achieved a breakthrough, overcoming a hurdle that "the best in the world over 75 years were unable to solve." Looking ahead, Feldman expressed particular excitement for AI's potential in medicine and education. He envisions AI cutting the 17-to-19-year drug design process to under a decade and fundamentally transforming the way children are taught, offering a "very different opportunity" for societal advancement.