The transition from the era of brute-force scaling to a more nuanced regime of inference-optimal architecture is perhaps best exemplified by the emergence of Dynamic Large Concept Models, or DLCM. For several years, the artificial intelligence industry has operated under what many researchers call the token tax, a fundamental inefficiency where standard transformer architectures allocate the same amount of computation to every token regardless of its complexity or information density. Whether a model is processing a highly predictable function word like "the" or a semantically dense transition in a logical proof, the depth and the number of floating-point operations applied remain identical. This compute-blind approach ignores the reality that language is inherently non-uniform, with long spans of predictable text interspersed with sparse but critical transitions where new ideas are introduced. By challenging this token-uniform paradigm, DLCM introduces a hierarchical framework that separates the process of identifying what to think about from the actual process of reasoning, marking a significant milestone in the development of more efficient and capable large language models.

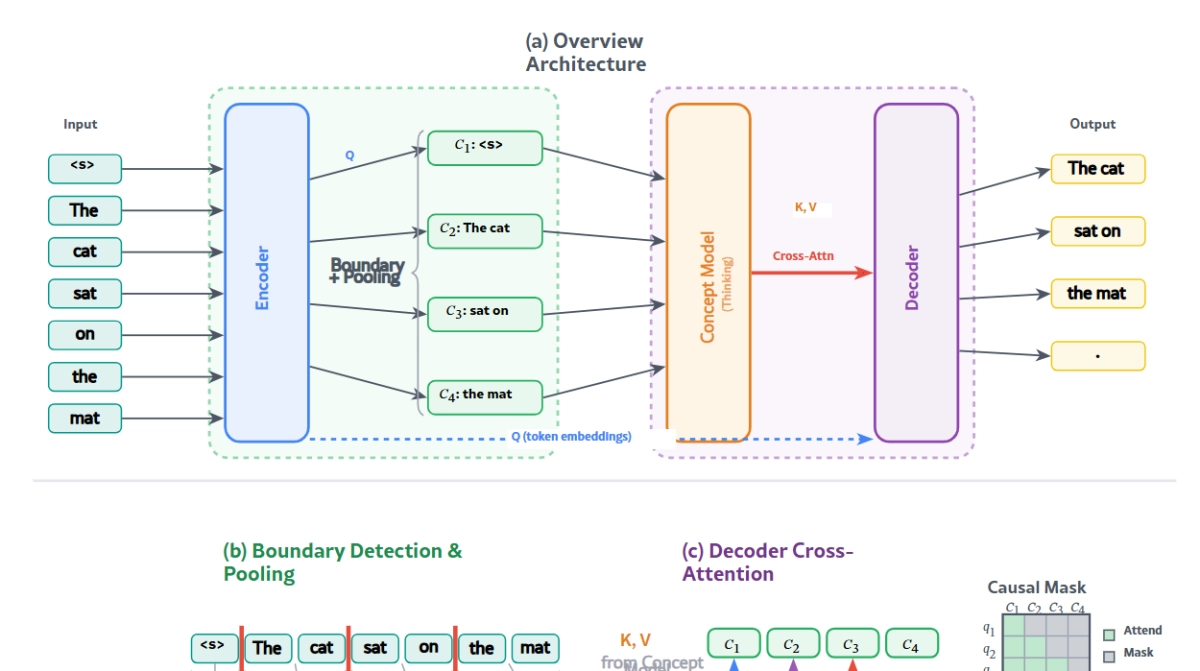

At the core of the DLCM framework is a four-stage pipeline designed to mirror the abstract way in which humans actually process information. Unlike standard models that treat text as a flat stream of individual tokens, DLCM begins with a lightweight causal transformer encoder that extracts fine-grained representations of the raw input. These representations then feed into a dynamic segmentation mechanism, often referred to as a global parser, which identifies semantic boundaries directly from the latent space rather than relying on rigid linguistic rules or punctuation. Once these variable-length segments or concepts are identified, the model performs deep reasoning within a compressed concept space using a high-capacity transformer backbone. Finally, a decoder reconstructs the specific token predictions by attending to these enriched concept representations through a causal cross-attention mechanism. This design allows the model to shift its computational resources away from redundant token-level processing and toward high-level semantic reasoning, ensuring that the majority of inference effort is spent on the parts of the sequence that actually require it.
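To make the compression step between the encoder and the backbone concrete, here is a minimal sketch of how per-token vectors could be grouped into one vector per concept once boundaries are known. The function names and the use of mean pooling are assumptions made for illustration; the text above does not say how DLCM actually aggregates tokens into a concept representation.

```python
def _mean(vectors):
    """Element-wise mean of a non-empty list of equal-length vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def pool_into_concepts(token_vectors, is_boundary):
    """Group consecutive token vectors into concepts and average each group.

    is_boundary[i] is True when token i opens a new concept.
    Mean pooling is an illustrative assumption, not the documented method.
    """
    concepts, current = [], []
    for vector, starts_new in zip(token_vectors, is_boundary):
        if starts_new and current:
            concepts.append(_mean(current))
            current = []
        current.append(vector)
    if current:
        concepts.append(_mean(current))
    return concepts


# Five token vectors, with concepts opening at positions 0 and 2.
tokens = [[1.0, 0.0], [3.0, 0.0], [0.0, 2.0], [0.0, 4.0], [0.0, 6.0]]
boundaries = [True, False, True, False, False]
concepts = pool_into_concepts(tokens, boundaries)
print(len(tokens), "tokens ->", len(concepts), "concepts")
```

In this toy case the high-capacity backbone would see two positions instead of five, which is where the compute saving comes from.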

The primary breakthrough that sets DLCM apart from previous attempts at concept-level modeling is the implementation of a learned, end-to-end boundary detector. Earlier frameworks often relied on fixed human priors, such as sentence-level segmentation, which limited the model's ability to adapt its granularity to different tasks or domains. In contrast, the DLCM global parser uses a normalized dissimilarity measure between adjacent tokens to detect semantic breaks. To ensure stability during training and a controllable compression ratio, the researchers introduced an auxiliary loss function that enforces a target average of tokens per concept across a global batch. This allows the model to be flexible on a per-sequence basis, compressing repetitive or predictable content like code syntax more aggressively while allocating more concepts to dense technical prose or complex reasoning steps. This content-adaptive approach ensures that the model maintains a high level of information retention within a fixed computational budget.
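The boundary detector and the compression penalty can be sketched in a few lines. The version below assumes the normalized dissimilarity is `(1 - cosine similarity) / 2` between neighboring hidden states, and it uses a simple squared-error penalty on the batch-wide boundary rate as a stand-in for the auxiliary loss. Both choices, and all function names, are illustrative assumptions rather than the formulation used by the researchers.

```python
import math


def cosine(u, v):
    """Cosine similarity of two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def boundary_probs(hidden_states):
    """Score each token by how much it differs from its predecessor.

    Returns values in [0, 1]; the first token always opens a concept.
    The (1 - cos) / 2 form is an assumed normalization.
    """
    probs = [1.0]
    for previous, current in zip(hidden_states, hidden_states[1:]):
        probs.append((1.0 - cosine(previous, current)) / 2.0)
    return probs


def compression_penalty(batch_probs, target_tokens_per_concept):
    """Penalize deviation of the batch-wide boundary rate from 1 / R.

    The rate is pooled over the whole batch, so individual sequences
    remain free to compress more or less than the target.
    """
    total_boundary_mass = sum(sum(probs) for probs in batch_probs)
    total_tokens = sum(len(probs) for probs in batch_probs)
    rate = total_boundary_mass / total_tokens
    return (rate - 1.0 / target_tokens_per_concept) ** 2


# Two identical states, then a sharp change of direction.
hidden = [[1, 0], [1, 0], [-1, 0], [-1, 0]]
probs = boundary_probs(hidden)
penalty_on_target = compression_penalty([probs], target_tokens_per_concept=2)
penalty_off_target = compression_penalty([probs], target_tokens_per_concept=4)
```

Because the penalty is computed over the pooled batch rather than per sequence, one sequence can be split into many short concepts while another is compressed heavily, as long as the average stays near the target.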

To support the development of these heterogeneous architectures, the researchers also introduced a new compression-aware scaling law that extends the traditional Chinchilla framework. This law mathematically disentangles token-level capacity, concept-level reasoning capacity, and the compression ratio, allowing developers to allocate compute in a principled way under fixed FLOP constraints. By modeling the interaction between total parameters and data size alongside the compression factor, the scaling law provides a predictable path for building larger and more efficient models. Furthermore, to stabilize the training of modules with different widths, the team developed a decoupled maximal update parametrization. They discovered that because the token-level components and the concept-level backbone serve different functions and have different dimensions, their learning rates must be adjusted independently. This decoupling ensures that the effective learning rate for each component scales inversely with its specific width, enabling zero-shot hyperparameter transfer from small proxy models to large-scale deployments.

The empirical results of this architectural shift are significant: DLCM achieves superior performance on reasoning-intensive benchmarks at inference costs comparable to those of standard models. On a suite of zero-shot tasks, the model showed substantial gains in categories such as common sense and logical reasoning, where the ability to focus on semantic transitions is most valuable. Interestingly, the model exhibits what the researchers describe as a U-shaped loss profile. By analyzing the loss distribution across relative positions within a concept, they found that DLCM is exceptionally proficient at modeling tokens at the boundaries of semantic units. While it trades off some fine-grained precision on internal tokens that are often highly predictable, it gains a substantial advantage on the structurally critical tokens that mark the introduction of new ideas. This strategic reallocation of capacity explains why the model performs so well on complex downstream tasks despite not showing uniform improvement across every single token.
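The positional analysis described above can be reproduced in outline by bucketing per-token losses according to where each token sits inside its concept. The sketch below is one possible way to do that. The function name, the bin count, and the input numbers are invented for illustration and are not results from the researchers.

```python
def loss_by_relative_position(losses, is_boundary, num_bins=3):
    """Average per-token loss in bins of relative position within a concept.

    Relative position runs from 0.0 (first token of a concept) to 1.0
    (last token). Single-token concepts are counted at position 0.0.
    Bins that receive no tokens are reported as None.
    """
    # Split the flat loss sequence into one list per concept.
    segments, current = [], []
    for loss, starts_new in zip(losses, is_boundary):
        if starts_new and current:
            segments.append(current)
            current = []
        current.append(loss)
    if current:
        segments.append(current)

    sums = [0.0] * num_bins
    counts = [0] * num_bins
    for segment in segments:
        for index, loss in enumerate(segment):
            relative = index / (len(segment) - 1) if len(segment) > 1 else 0.0
            bin_index = min(int(relative * num_bins), num_bins - 1)
            sums[bin_index] += loss
            counts[bin_index] += 1
    return [s / c if c else None for s, c in zip(sums, counts)]


# Made-up losses for two concepts of three tokens each.
toy_losses = [1.0, 3.0, 1.0, 2.0, 4.0, 2.0]
toy_boundaries = [True, False, False, True, False, False]
profile = loss_by_relative_position(toy_losses, toy_boundaries)
```

Running the same function on a baseline model and on DLCM over identical segments, then subtracting the two profiles bin by bin, would show where along a concept the gains are concentrated.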

From an engineering perspective, the researchers were careful to ensure that the hierarchical design of DLCM did not come at the expense of hardware efficiency. Implementing irregular attention patterns across variable-length concepts can often lead to significant overhead on modern GPUs. To solve this, the team utilized a concept replication strategy that expands concept features to match token positions, allowing them to leverage highly optimized kernels like Flash Attention with Variable Length. This approach achieved speedups of up to 1.73 times on long sequences compared to more flexible but less optimized attention mechanisms. For developers and startups, this means that DLCM is not just a theoretical advancement but a practical one that can be served efficiently in production environments. By proving that reasoning-capable models can be built using compressed latent spaces, DLCM provides a clear roadmap for the next generation of cost-effective AI agents that can handle complex planning and abstraction without the prohibitive costs associated with traditional token-level scaling.
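The concept replication idea can be shown with plain lists: each concept vector is repeated once per token in its segment, so the expanded sequence lines up with token positions and a regular, length-aligned attention kernel can be used. This is a conceptual sketch only. The function name is hypothetical, and a real implementation would operate on GPU tensors rather than Python lists.

```python
def replicate_concepts(concept_vectors, segment_lengths):
    """Repeat each concept vector once per token in its segment.

    The output has one row per token, so it aligns position by position
    with the token sequence.
    """
    expanded = []
    for vector, length in zip(concept_vectors, segment_lengths):
        # Copy the vector for each position so rows are independent.
        expanded.extend(list(vector) for _ in range(length))
    return expanded


# Two concepts covering segments of two and three tokens.
concept_features = [[2.0, 0.0], [0.0, 4.0]]
lengths = [2, 3]
expanded = replicate_concepts(concept_features, lengths)
print(len(expanded), "rows for", sum(lengths), "tokens")
```

The trade-off is extra memory for the duplicated rows in exchange for a regular access pattern that optimized kernels handle well.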