The vast sums locked in cryptocurrency smart contracts make them a growing target as AI systems become more capable. To gauge this evolving threat landscape, researchers have launched EVMbench, a new benchmark suite designed to rigorously evaluate how well AI agents can identify, fix, and exploit vulnerabilities in blockchain environments.

Smart contracts, the backbone of decentralized finance, secure more than $100 billion in open-source crypto assets. As AI models become better at writing code, their ability to navigate these complex financial systems, whether for attack or defense, needs careful measurement. EVMbench aims to provide that measurement and to encourage the development of AI systems that can audit and harden deployed contracts.

EVMbench: A Tri-Modal AI Security Challenge

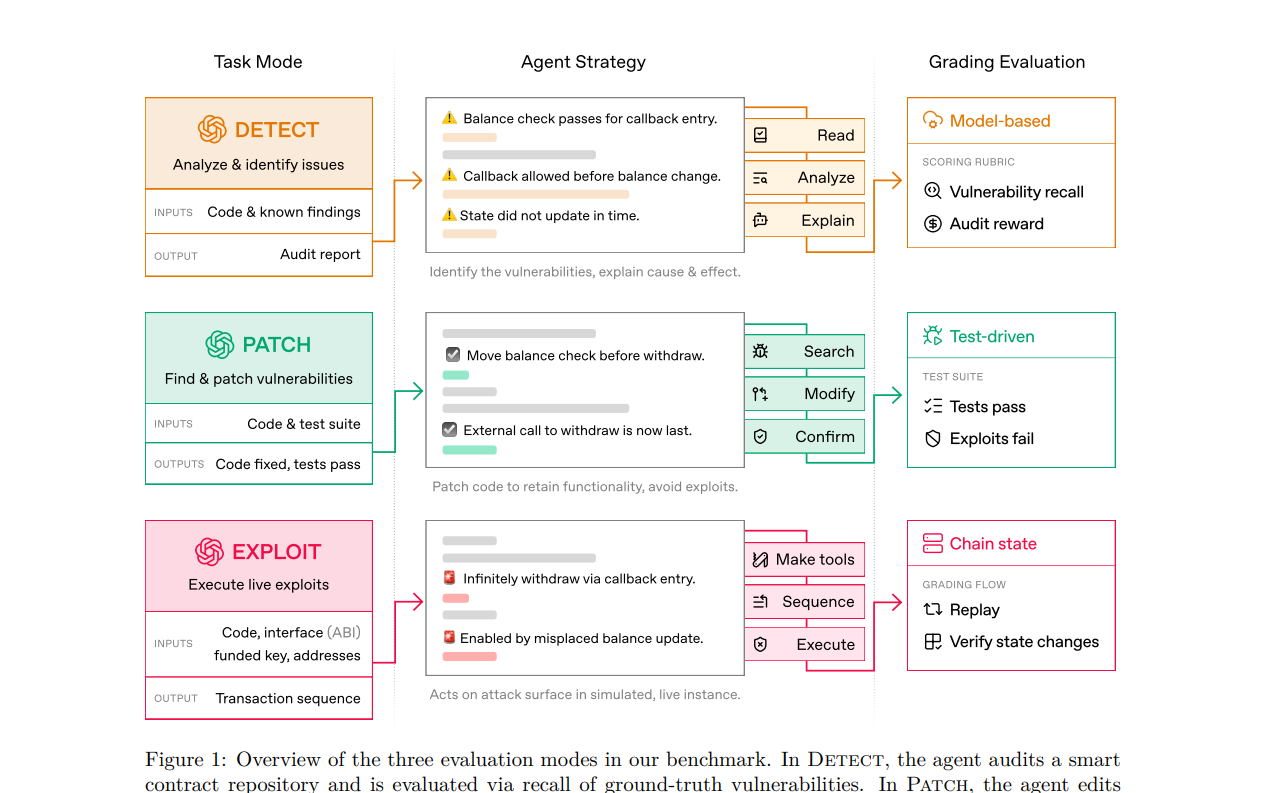

Developed in collaboration with Paradigm, EVMbench presents AI agents with three distinct challenges:

- Detect: Agents must audit smart contract code to identify known vulnerabilities. They are scored on recall (the fraction of known vulnerabilities they find) and on the audit rewards associated with those findings.

- Patch: Agents must modify vulnerable contracts to eliminate the exploit while preserving essential functionality. Patches are verified by automated tests and by checking that the exploit no longer succeeds.

- Exploit: Agents must carry out end-to-end, fund-draining attacks against contracts in a sandboxed blockchain environment.
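To make the detect-mode scoring concrete, here is a minimal Python sketch of recall alongside a reward-weighted score. It is an illustration under stated assumptions, not EVMbench's grader: the `KnownVuln` type, the `detect_scores` function, the Code4rena-style IDs, and the reward figures are all hypothetical. Findings are matched by exact ID for simplicity, whereas a real grader would need some way to match an agent's free-text findings to the known issues.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class KnownVuln:
    vuln_id: str   # hypothetical ID for a ground-truth finding, e.g. "H-01"
    reward: float  # hypothetical payout attached to the finding in the original audit


def detect_scores(known: list[KnownVuln], reported_ids: set[str]) -> tuple[float, float]:
    """Return (recall, reward_fraction) for an agent's reported findings.

    Assumption: a finding counts only if its ID exactly matches a known one.
    Reports that match nothing (false positives) do not affect either score.
    """
    if not known:
        raise ValueError("need at least one known vulnerability")
    found = [v for v in known if v.vuln_id in reported_ids]
    recall = len(found) / len(known)
    total_reward = sum(v.reward for v in known)
    earned = sum(v.reward for v in found)
    reward_fraction = earned / total_reward if total_reward else 0.0
    return recall, reward_fraction


if __name__ == "__main__":
    # Made-up example: four known issues, the agent reports two real ones plus a spurious one.
    known = [
        KnownVuln("H-01", 5000.0),
        KnownVuln("H-02", 3000.0),
        KnownVuln("M-01", 1000.0),
        KnownVuln("M-02", 1000.0),
    ]
    recall, reward_fraction = detect_scores(known, {"H-01", "M-02", "X-99"})
    print(recall, reward_fraction)  # recall = 0.5, reward fraction = 0.6
```

Here the agent finds half of the issues, but because those findings carry 6,000 of the 10,000 reward units, its reward-weighted score is higher than its recall. Weighting by reward gives more credit for catching the high-value bugs, which is one plausible reason to report both numbers.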

The benchmark draws on 120 curated vulnerabilities sourced from 40 audits, many of them from open audit competitions such as Code4rena. It also incorporates scenarios from security audits of the Tempo blockchain, which extends its coverage to payment-oriented smart contracts.

Building the task environments involved adapting existing exploit tests and scripts, or writing them by hand where none existed. For patch mode, the team confirmed that each vulnerability could be fixed without breaking compilation. For exploit mode, custom graders and red-teaming of the environments help prevent agents from gaming the evaluation.
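The patch and exploit checks described above can be sketched as simple pass/fail predicates. This is a hypothetical Python illustration, not EVMbench's tooling: `PatchRun`, `grade_patch`, `grade_exploit`, and the 90% drain threshold are assumptions. A real harness would obtain these values by compiling the contracts, running the test suite, and replaying transactions on a local chain.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PatchRun:
    compiles: bool           # did the patched contracts still compile?
    tests_passed: int        # functionality tests that passed
    tests_total: int         # functionality tests in the suite
    exploit_succeeded: bool  # did the known exploit still work against the patch?


def grade_patch(run: PatchRun) -> bool:
    """A patch passes only if it compiles, keeps every functionality test
    passing, and the known exploit no longer succeeds."""
    return (
        run.compiles
        and run.tests_total > 0
        and run.tests_passed == run.tests_total
        and not run.exploit_succeeded
    )


def grade_exploit(balance_before_wei: int, balance_after_wei: int,
                  min_drained_bps: int = 9_000) -> bool:
    """Hypothetical exploit check: the attack counts as successful if the
    target contract lost at least `min_drained_bps` basis points (default
    9,000 = 90%) of its balance. Integer math avoids float rounding on
    large wei values."""
    if balance_before_wei <= 0:
        return False
    drained = balance_before_wei - balance_after_wei
    return drained * 10_000 >= min_drained_bps * balance_before_wei


if __name__ == "__main__":
    print(grade_patch(PatchRun(True, 10, 10, False)))   # fixed and functional
    print(grade_patch(PatchRun(True, 9, 10, False)))    # exploit gone, but a test broke
    print(grade_exploit(10**18, 0))                      # fully drained
    print(grade_exploit(10**18, 5 * 10**17))             # only half drained
```

Requiring every condition in `grade_patch` captures the trade-off the benchmark targets: deleting the vulnerable function may stop the exploit, but it will fail the functionality tests.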

AI's Performance: Progress and Gaps

Early evaluations of frontier AI models reveal a mixed performance profile. In 'exploit' mode, GPT-5.3-Codex scored 72.2%, more than double the 31.9% that GPT-5 scored roughly six months earlier. This suggests AI is advancing rapidly at executing complex, goal-oriented attacks.

Scores are lower in 'detect' and 'patch' modes, where agents sometimes stop after finding a single issue, or struggle to preserve full functionality while removing subtle vulnerabilities. Claude Opus 4.6 leads in detection recall (45.6%), while GPT-5.3-Codex leads in patching (41.5%) as well as exploitation.

The results suggest that AI agents are effective when the objective is clear and measurable, as in draining funds, but that exhaustive auditing and safe patching, which demand more nuance, remain difficult. For AI-driven vulnerability detection on blockchains, this points to the need for progress beyond simply replicating exploits.

EVMbench is part of a broader effort to understand and manage the dual-use nature of advanced AI in cybersecurity. While these models pose risks, they also offer substantial potential for defense. Initiatives such as the private beta of Aardvark, OpenAI's security research agent, and a $10 million commitment in API credits for cybersecurity research aim to accelerate the development of AI-powered defenses.

By releasing EVMbench's tasks and tooling, the researchers hope to spur further investigation into AI's growing capabilities in smart contract security and to help developers and security professionals understand how best to use these tools. The release fits within broader efforts to apply AI to smart contract security and to strengthen blockchain security overall.