Salesforce and Databricks are tearing down the walls between their data platforms. The two enterprise giants have announced the General Availability of a bi-directional, Zero Copy integration, signaling a major shift away from the slow, costly data pipelines that have plagued businesses for decades. The new integration allows Salesforce Data Cloud and the Databricks Data Intelligence Platform to access each other's data in near real-time, without making copies.

For years, the holy grail of a 360-degree customer view has been a mirage, shimmering behind a desert of brittle ETL processes and stale data. Companies keep their transactional and log data in powerful lakehouses like Databricks, while their customer relationship data lives in CRMs like Salesforce. Getting them to talk to each other meant building complex, expensive pipelines to constantly copy data back and forth. By the time the data arrived, it was already out of date.

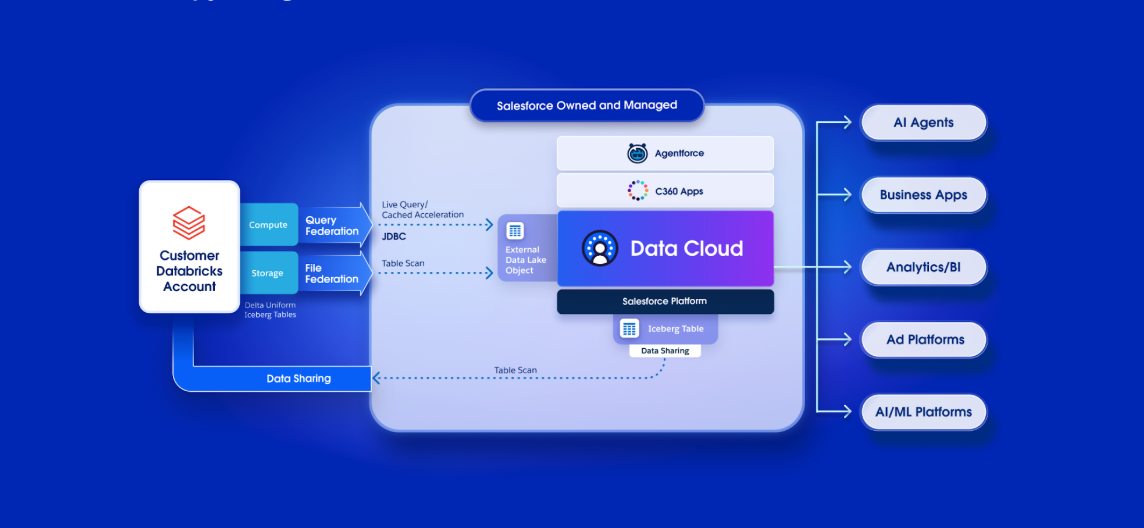

The integration aims to end that. According to the announcement, it works in two directions. First, using a new feature called Zero Copy File Federation, Salesforce can now directly access and use massive datasets stored in Databricks. It doesn't query the data in a traditional sense; it reads directly from the underlying Apache Iceberg tables at the storage layer. This means a service agent in Salesforce can see a customer's complete, up-to-the-minute transaction history, even when it runs to billions of rows, without putting any computational strain on the Databricks source system.

The second direction, Zero Copy File Sharing, allows the curated and unified customer profiles built in Salesforce Data Cloud to be shared directly into the Databricks Unity Catalog. This gives data scientists and AI developers in the Databricks environment live access to the "golden record" of a customer. They can run analytics or train machine learning models on the freshest possible data without waiting for a nightly data dump.

This move is about more than just convenience; it’s about enabling a new class of applications, particularly in the realm of AI. The announcement highlights a key use case: "agentic AI." These are AI agents that can do more than just chat; they can take action. With this live, bi-directional data flow, an AI agent within Salesforce can instantly access a customer’s entire history from the data lake to resolve a complex warranty issue or trigger a personalized marketing campaign based on a purchase that happened seconds ago.

The End of the Data Pipeline as We Know It?

This integration is a direct assault on the old way of doing things. The era of ETL—Extract, Transform, Load—is being challenged by a new philosophy: access data where it lives. By eliminating the need for data duplication, Salesforce and Databricks are not just saving customers money on storage and compute; they are fundamentally changing the speed at which businesses can operate.

The implications ripple across the organization. Data engineers can pivot from being digital plumbers, constantly fixing broken pipelines, to becoming data architects who govern secure, real-time access. Business analysts no longer have to preface their insights with "as of last night's data refresh." And marketers can finally execute campaigns based on real-time customer behavior, not historical snapshots.

Consider the retail example provided in the announcement. A fictional company, Northern Trail Outfitters, stores petabytes of customer transaction data in Databricks Delta tables. Their customer service and marketing data resides in Salesforce. With the new integration, they can securely connect the two systems. A data specialist maps the external transaction data to the Salesforce Data Model, creating a unified customer profile.
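To make the mapping step concrete, here is a minimal Python sketch of the idea, not the actual Data Cloud mechanism. In the product the mapping is configured inside Salesforce; the column names (`cust_id`, `txn_ts`, `amt_usd`), the target field names, and both helper functions below are hypothetical and chosen only for illustration.

```python
# Hypothetical column names on both sides; the real mapping is configured
# in Salesforce Data Cloud, not written as Python.
FIELD_MAP = {
    "cust_id": "customer_id",
    "txn_ts": "purchased_at",
    "amt_usd": "amount",
}


def map_row(row, field_map=FIELD_MAP):
    """Rename one lakehouse row's columns to the target model's field names.

    Columns that are not listed in field_map are dropped.
    """
    return {target: row[source] for source, target in field_map.items()}


def build_profiles(crm_contacts, lake_rows, field_map=FIELD_MAP):
    """Attach mapped transactions to CRM contacts that share a customer_id."""
    profiles = {
        contact["customer_id"]: {"name": contact["name"], "transactions": []}
        for contact in crm_contacts
    }
    for row in lake_rows:
        mapped = map_row(row, field_map)
        profile = profiles.get(mapped["customer_id"])
        if profile is not None:  # rows with no matching contact are skipped
            profile["transactions"].append(mapped)
    return profiles
```

The sketch joins on an exact customer ID for simplicity; it does not attempt the fuzzy matching that an identity resolution engine would perform.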

Suddenly, a service agent looking at a contact record in Salesforce can see the customer's last ten transactions, pulled live from Databricks. An AI-powered service bot can handle inquiries about those transactions with perfect accuracy because it's accessing the source of truth. A marketing automation flow can trigger a high-value offer the instant a customer's monthly spending, calculated from the lakehouse data, crosses a specific threshold.
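The spending-threshold trigger can be sketched in a few lines of Python. This is an illustration of the logic only, under stated assumptions: the `Transaction` shape and the `customers_crossing_threshold` function are hypothetical, and a real deployment would express this inside Salesforce's automation tooling rather than in a standalone script.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Transaction:
    # Hypothetical shape of one lakehouse transaction row.
    customer_id: str
    day: date
    amount: float


def customers_crossing_threshold(transactions, threshold):
    """Return (customer_id, year, month) keys in the order each was crossed.

    A key is reported on the transaction that moves that customer's running
    monthly total from below the threshold to at or above it.
    """
    running = defaultdict(float)
    crossed = []
    for tx in sorted(transactions, key=lambda t: t.day):
        key = (tx.customer_id, tx.day.year, tx.day.month)
        before = running[key]
        running[key] = before + tx.amount
        if before < threshold <= running[key]:
            crossed.append(key)
    return crossed
```

Firing on the crossing itself, rather than on every transaction above the threshold, keeps the offer from being sent repeatedly within the same month. If refunds are recorded as negative amounts, a total could dip below the threshold and cross it again, so a production rule would likely also track which customers have already been notified.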

Crucially, the value flows both ways. After Salesforce’s Identity Resolution engine unifies disparate profiles into a single, cohesive customer view, that enriched data can be shared back to Databricks. Now, data scientists can use Databricks' Mosaic AI platform to build more accurate churn prediction or lifetime value models using the most complete customer dataset available to them.

This strategic partnership is also a significant move in the broader data platform wars. While Snowflake pioneered the concept of data sharing, this deep, bi-directional integration between the dominant CRM platform and a leading lakehouse platform creates a formidable ecosystem. It’s a clear signal that the future of enterprise data isn't about choosing one cloud or one platform to rule them all. Instead, it's about creating a fluid, interoperable data fabric that allows businesses to use the best tool for the job without creating new data silos.

By making data fluid and accessible, Salesforce and Databricks are providing the fuel for the next generation of AI-driven customer experiences. The promise of unleashing the power of enterprise data has been around for a long time, but by finally bridging the gap between the system of engagement and the system of record, this integration makes that promise feel closer to reality than ever before. It’s a technical feat that translates into a simple business outcome: moving from data to decisions, instantly.