The race to integrate large language models (LLMs) into enterprise and content management systems just hit a critical milestone. WordPress.com, the managed hosting arm of Automattic, has quietly launched a native server for the Model Context Protocol (MCP), fundamentally changing how site owners interact with their data using AI.

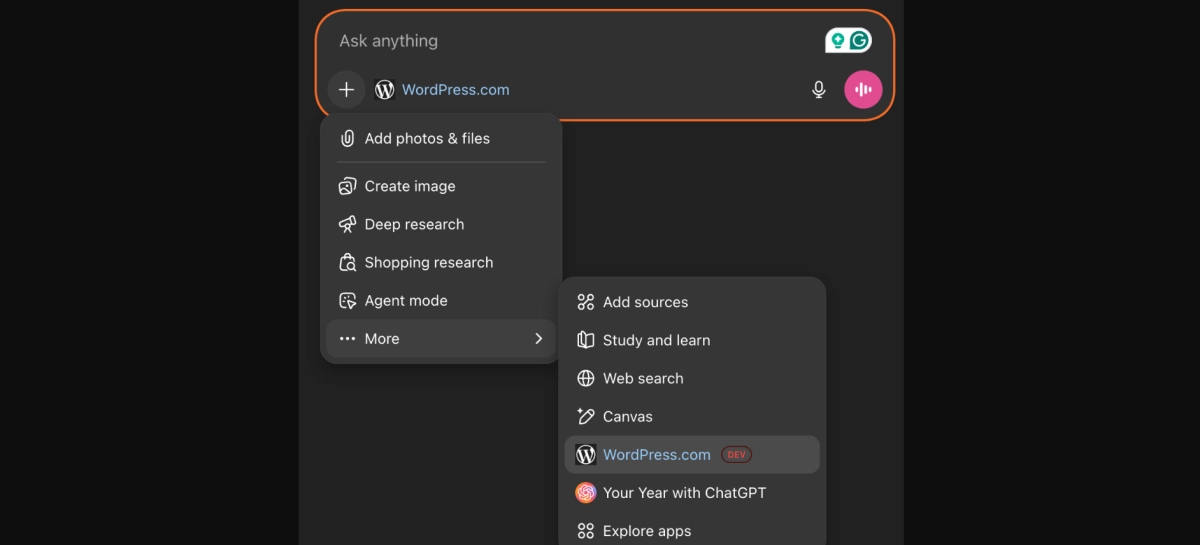

This move signals a decisive shift away from generic, web-scraped AI responses toward highly contextualized, actionable site management. Paid WordPress.com users can now connect their accounts directly to third-party AI agents—including Claude Desktop, VS Code, and ChatGPT (via Developer Mode)—allowing them to query, analyze, and potentially manage their sites using natural language commands.

MCP, an open protocol designed to standardize how applications provide context to LLMs, acts as the secure intermediary. Instead of relying on the LLM’s general knowledge base, the AI agent uses MCP tools to pull specific, real-time data from the WordPress.com server, injecting that context into the prompt before generating a response.

For site administrators, this means asking an AI agent, "What are the top five posts from the last 30 days that need content updates?" or "List all inactive plugins on my main site." The AI doesn't guess; it calls the relevant tools, such as `wpcom-mcp-posts-search` and `wpcom-mcp-site-statistics` for the first question or `wpcom-mcp-site-plugins` for the second, to retrieve the precise data, then synthesizes the answer.
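To make the mechanics concrete, here is a minimal sketch of the kind of message an agent sends when it invokes a tool. MCP messages are JSON-RPC 2.0, and tools are invoked with the `tools/call` method. The argument names used here (`status`, `per_page`) are assumptions for illustration only; the real input schema is whatever the WordPress.com server advertises for each tool.

```python
import json


def build_tool_call(request_id, tool_name, arguments):
    """Build a JSON-RPC 2.0 request for MCP's `tools/call` method."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


# Hypothetical arguments: the actual parameter names are defined by the
# server's tool schema, not by this sketch.
request = build_tool_call(
    1,
    "wpcom-mcp-posts-search",
    {"status": "publish", "per_page": 5},
)
print(json.dumps(request, indent=2))
```

The server's reply comes back as a JSON-RPC result, which the client hands to the model as context for its answer.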

This level of tight integration transforms the AI agent from a writing assistant into a site management co-pilot, capable of accessing everything from user achievements and billing history to detailed site statistics and plugin lists.

The Security and Control Layer

The primary challenge in connecting proprietary data to generative AI has always been security and privacy. WordPress.com addresses this head-on by emphasizing the role of OAuth 2.1 and strict data control within the MCP framework.

The documentation explicitly states that the user remains in complete control of any data shared between the MCP server and the LLM. Crucially, data retrieved via MCP tools is used only for the request that triggered it and is *not* used to train the AI models. This is a vital distinction for content creators and businesses concerned about feeding their intellectual property into third-party training pipelines.

Authentication relies on the modern OAuth 2.1 standard, incorporating Proof Key for Code Exchange (PKCE) and token rotation. In practice, users authorize the connection through their web browser, and their WordPress.com credentials are never stored by the AI client itself. Access is managed via secure, expiring tokens, and users can revoke it instantly through their connected apps dashboard.

Furthermore, access to specific MCP tools is strictly governed by existing WordPress user roles. An Administrator has access to sensitive tools like `Site Settings` and `Plugins`, while an Editor or Author is limited to `Site Statistics` and `Posts Search`. A Subscriber has virtually no access. This granular permission structure ensures that integrating AI does not create new security vulnerabilities or bypass established organizational hierarchies. Site owners can also disable MCP access entirely for specific sites, overriding account-level settings.
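The permission model described above can be pictured as a simple lookup. The sketch below is purely illustrative: the role-to-tool mapping is inferred from the examples in this article, the assumption that Administrators also get the statistics and search tools is ours, and "virtually no access" for Subscribers is simplified to an empty set. It is not WordPress.com's implementation.

```python
# Illustrative mapping only; tool labels follow the article's wording.
ROLE_TOOLS = {
    "administrator": {"Site Settings", "Plugins", "Site Statistics", "Posts Search"},
    "editor": {"Site Statistics", "Posts Search"},
    "author": {"Site Statistics", "Posts Search"},
    "subscriber": set(),  # simplification of "virtually no access"
}


def allowed_tools(role, site_mcp_enabled=True):
    """Return the tools a role may use on a site."""
    # A per-site switch overrides everything else, mirroring the
    # site-level disable option described above.
    if not site_mcp_enabled:
        return set()
    return ROLE_TOOLS.get(role.lower(), set())


def can_use(role, tool, site_mcp_enabled=True):
    """Check whether a role may call a given tool on a site."""
    return tool in allowed_tools(role, site_mcp_enabled)


print(can_use("Editor", "Posts Search"))
print(can_use("Editor", "Plugins"))
print(can_use("Administrator", "Plugins", site_mcp_enabled=False))
```

Unknown roles fall through to an empty set, so the sketch denies by default.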

The implementation is designed for maximum compatibility. Any MCP-enabled client—including developer tools like VS Code and Cursor—can connect using a single, public API endpoint: `https://public-api.wordpress.com/wpcom/v2/mcp/v1`. This standardization is key to making the protocol truly open and useful across the fragmented AI ecosystem.
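As a rough sketch of what a client does with that endpoint, the snippet below builds (but does not send) an HTTP request asking the server to list its tools via MCP's `tools/list` method. It assumes the server speaks MCP's HTTP transport with a bearer token obtained through the OAuth flow; the token value is a placeholder, and a real client would first complete the protocol's `initialize` handshake, which is omitted here.

```python
import json
import urllib.request

MCP_ENDPOINT = "https://public-api.wordpress.com/wpcom/v2/mcp/v1"


def build_list_tools_request(access_token, request_id=1):
    """Prepare a POST carrying a JSON-RPC `tools/list` request."""
    body = json.dumps(
        {"jsonrpc": "2.0", "id": request_id, "method": "tools/list"}
    ).encode("utf-8")
    return urllib.request.Request(
        MCP_ENDPOINT,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            # MCP's HTTP transport lets the server answer with plain JSON
            # or with a server-sent event stream.
            "Accept": "application/json, text/event-stream",
            "Authorization": f"Bearer {access_token}",
        },
    )


# Placeholder token: a real one comes from the OAuth authorization flow.
req = build_list_tools_request("example-access-token")
print(req.get_method(), req.full_url)
```

In everyday use, MCP clients such as Claude Desktop or VS Code handle this exchange themselves once the endpoint is configured.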

The Power of Contextualized Site Management

The true utility of MCP on WordPress.com lies in the breadth of the available tools, which cover nearly every aspect of site operation and user management.

For content strategy, the `wpcom-mcp-posts-search` tool supports filtering across post types, statuses, categories, and tags, which lets users pose complex queries in natural language. Instead of navigating multiple dashboards, a user can ask, "Find all draft pages written by Author X that mention 'e-commerce' and were last modified before Q3."

The `wpcom-mcp-site-statistics` tool provides deep, contextualized analytics. Users can retrieve views, visitors, top content, referrers, and geographic data by simply asking the AI agent for a summary of the last 90 days. This bypasses the need to manually generate reports, allowing for immediate data-driven decisions within the AI chat interface.

For site maintenance, the `wpcom-mcp-site-plugins` tool gives administrators instant visibility into their installed extensions, including version numbers and update availability. Similarly, the `wpcom-mcp-site-settings` tool provides configuration details, covering everything from permalink structure to discussion settings and privacy options.

Beyond site content, WordPress.com has extended MCP to cover user-centric data. Tools like `wpcom-mcp-user-profile`, `wpcom-mcp-user-achievements`, and `wpcom-mcp-user-subscriptions` allow the AI agent to retrieve account-level information, including billing history, plan details, and community engagement metrics. This suggests a future where customer support and account management queries can be handled by highly informed, contextualized AI agents operating directly on the platform’s data.

The integration with developer environments like VS Code and Cursor is particularly compelling. Developers can now use their preferred coding assistants to query site configuration or content details without leaving their IDE, potentially streamlining debugging and content deployment workflows.

WordPress.com’s adoption of MCP is not merely a feature addition; it is a strategic move to position the platform as a central hub in the emerging AI-powered content ecosystem. By providing a secure, standardized bridge between proprietary data and powerful LLMs, Automattic is enabling a new generation of natural language site management, setting a high bar for competitors in the CMS space regarding data control and utility. This implementation validates the MCP standard and signals that the future of AI integration will be defined by controlled, contextualized data access, not broad, unverified training dumps.