The era of the simple text generator is officially over.

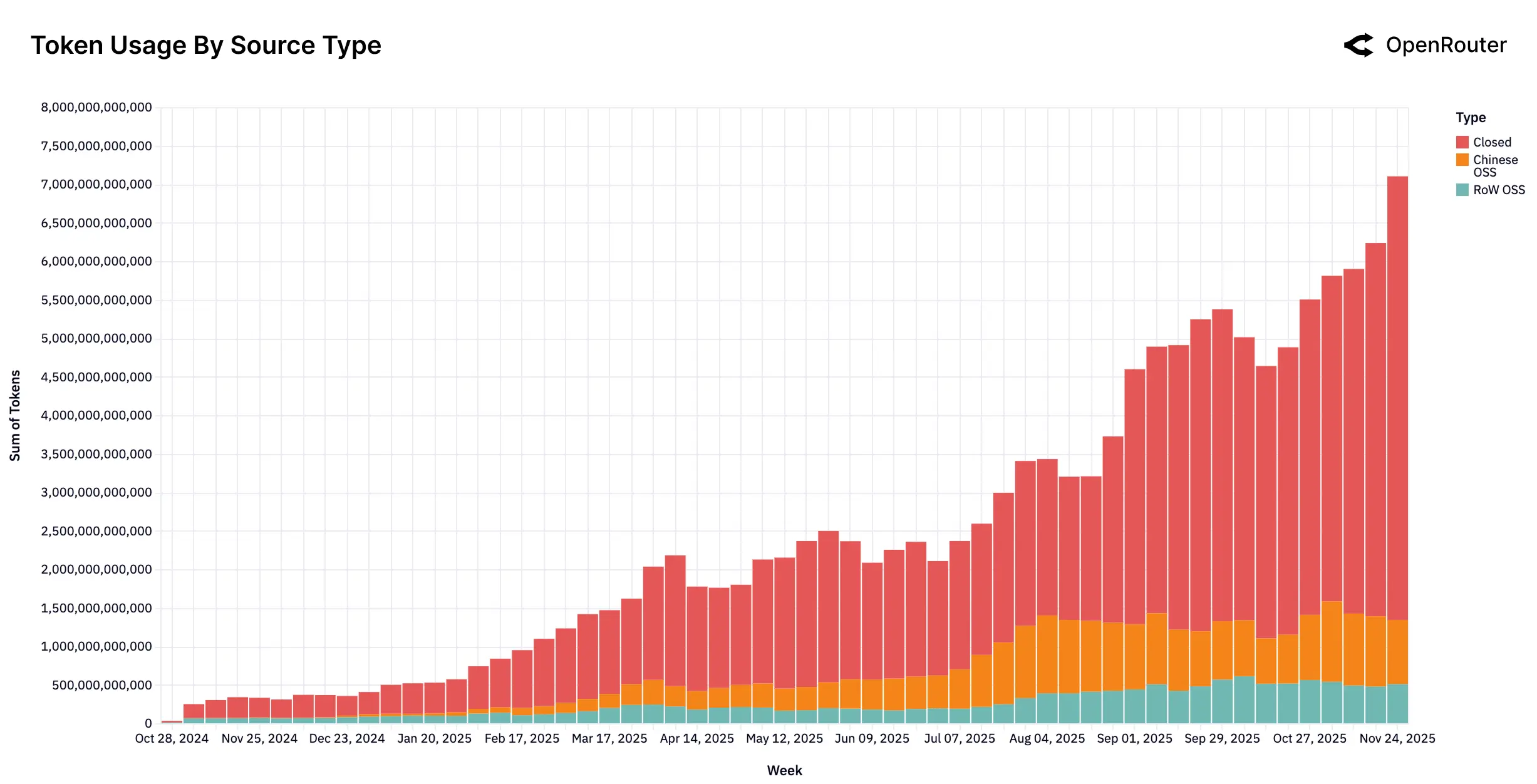

A new empirical study analyzing over 100 trillion tokens of real-world usage data confirms that the large language model market has fundamentally shifted from single-pass text generation to complex, multi-step agentic inference. The report, released by OpenRouter and a16z, paints a picture of a hyper-specialized, multi-polar ecosystem where proprietary models still lead, but open-source competitors, particularly those emerging from China, are rapidly carving out large, sticky market segments.

The pivotal moment, according to the analysis, was the launch of OpenAI’s o1 in late 2024. Since then, models optimized for deep reasoning, like o1 and Gemini Pro, have exploded to account for over 50% of all token usage. This isn't just a minor technical tweak; it reflects a complete change in how users interact with AI.

Users are no longer typing simple questions into a chat box. They are feeding models entire codebases, documentation sets, and complex multi-step instructions. The average prompt length has ballooned fourfold, now exceeding 6,000 tokens. This complexity is driven almost entirely by programming tasks, which have surged from 11% of token volume to more than half, making programming the dominant professional category.

This shift means LLMs are increasingly functioning as the core reasoning engine within automated workflows, rather than as conversational partners. The sharp rise in tool-calling, where models autonomously decide to use external functions or APIs, is the clearest indicator that AI is moving out of the browser tab and into the infrastructure layer.
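To make the tool-calling pattern concrete, here is a minimal sketch in Python. It is not taken from the report and does not use any real provider's API: the tool names, the JSON shape of the request, and the `dispatch` helper are all assumptions made for illustration. The idea is only that the model emits a structured request, and the surrounding system looks up and runs the matching function.

```python
import json

# Hypothetical tools; names and behavior are illustrative only.
def get_weather(city: str) -> str:
    return f"Sunny in {city}"

def add(a: float, b: float) -> float:
    return a + b

# Registry mapping tool names to callables.
TOOLS = {"get_weather": get_weather, "add": add}

def dispatch(tool_call_json: str):
    """Run the tool named in a model-emitted JSON request and return its result.

    Assumed request shape: {"name": <tool name>, "arguments": {<kwargs>}}.
    """
    call = json.loads(tool_call_json)
    name = call["name"]
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name}")
    return TOOLS[name](**call.get("arguments", {}))

# Stand-in for what a model might emit when it decides to call a tool.
model_output = '{"name": "add", "arguments": {"a": 2, "b": 3}}'
print(dispatch(model_output))
```

In a real agentic workflow the result would be fed back to the model as another message, and the loop would repeat until the model stops requesting tools.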

The Open-Source Split and the Chinese Surge

While proprietary giants like OpenAI and Anthropic maintain market dominance, the open-weight (OSS) ecosystem has matured significantly, now capturing roughly 30% of total traffic. But the OSS market isn't a monolith; it’s deeply bifurcated by use case and geography.

Open models are primarily used for two things: Roleplay (accounting for 52% of their usage) and Programming. This debunks the long-held myth that LLMs are purely productivity tools; the demand for high-quality, creative, and often uncensored interaction is a massive volume driver, rivaling professional coding in scale.

Perhaps the most significant competitive development is the emergence of Chinese open-source models. DeepSeek and Qwen have seen explosive growth, occasionally capturing 30% of weekly volume on the OpenRouter platform. These models are iterating at a breakneck pace, directly challenging Western models on capability and efficiency. DeepSeek, in particular, has become the dominant player in the Roleplay and casual chat categories, while Anthropic and xAI remain heavily skewed toward the high-stakes Programming and Tech sectors.

This competitive pressure is forcing developers to adopt a "medium is the new small" strategy. The study notes a preference for highly capable, medium-sized models (around 70 billion parameters) that offer maximum capability without the prohibitive latency or cost of the largest frontier models.

The global market is also rapidly decentralizing. North America now accounts for less than 50% of total AI spend, a significant drop. Asia’s share has more than doubled, jumping from 13% to 31%. This geographical shift is intrinsically linked to the rise of efficient, low-cost models.

Cost dynamics have settled into two distinct segments, demonstrating surprisingly inelastic demand at the high end. Users are willing to pay a substantial premium for mission-critical reliability and performance from models like Claude 3.7 and GPT-4. These are the "Premium Leaders."

Conversely, the "Efficient Giants," models like DeepSeek V3 and Gemini Flash, capture massive volume by aggressively optimizing for low pricing. They might not be the absolute best performers, but their cost-efficiency makes them indispensable for high-throughput, non-mission-critical tasks.

This segmentation underscores the central conclusion of the report: the LLM market is now a multi-model ecosystem. Users are sophisticated routers, dynamically directing tasks based on a complex matrix of cost, speed, and specialized capability. No single model rules all workloads.
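The routing behavior described here can be sketched in a few lines of Python. This is an illustration under stated assumptions, not how OpenRouter or any user actually routes: the model names, prices, quality scores, and latencies below are invented placeholders, and "pick the cheapest model that clears a quality and latency bar" is just one plausible policy.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ModelOption:
    name: str
    cost_per_mtok: float  # assumed USD per million tokens (made-up figure)
    quality: float        # assumed capability score in [0, 1] (made-up figure)
    latency_s: float      # assumed typical response latency in seconds

# Hypothetical catalogue; none of these numbers come from the report.
CATALOGUE = [
    ModelOption("premium-model", 15.0, 0.95, 8.0),
    ModelOption("efficient-model", 0.5, 0.80, 2.0),
    ModelOption("medium-open-model", 1.0, 0.85, 3.0),
]

def route(min_quality: float, max_latency_s: float,
          catalogue=CATALOGUE) -> ModelOption:
    """Return the cheapest model that meets the quality and latency limits."""
    eligible = [
        m for m in catalogue
        if m.quality >= min_quality and m.latency_s <= max_latency_s
    ]
    if not eligible:
        raise LookupError("no model satisfies the constraints")
    return min(eligible, key=lambda m: m.cost_per_mtok)

# A mission-critical task tolerates latency but demands top quality.
print(route(min_quality=0.9, max_latency_s=10).name)
# A high-throughput task accepts lower quality and needs fast responses.
print(route(min_quality=0.7, max_latency_s=5).name)
```

Under these placeholder numbers, the strict request lands on the expensive model and the relaxed one on the cheapest, which mirrors the "Premium Leaders" versus "Efficient Giants" split.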

The study also identifies a powerful retention mechanism dubbed the "Cinderella Glass Slipper" effect. Foundational Cohorts, the early users who found a specific model that perfectly solved their particular problem, do not churn easily. Once a model fits that "Glass Slipper" workload, users demonstrate deep retention, often ignoring cheaper or slightly newer alternatives.

This retention dynamic means that models launching today must be truly "frontier" or specialized to build these foundational cohorts. Models that launch merely as incremental improvements fail to establish this sticky fit and suffer high churn rates. The future of AI is defined not just by raw intelligence, but by the ability to be the perfect, specialized reasoning engine for a complex, multi-step system.