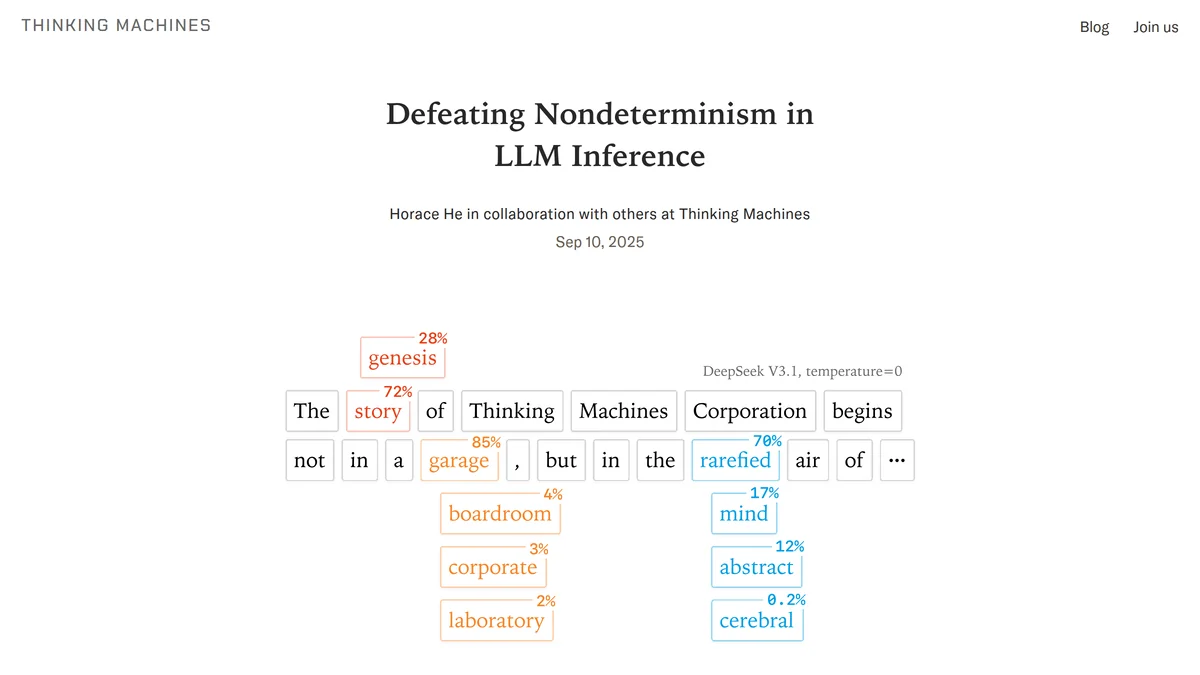

If you’ve ever asked an AI the same question twice and gotten a different answer, you’ve encountered LLM inference nondeterminism. For years, the tech community has pointed to a seemingly obvious culprit: the chaotic, parallel nature of GPUs. The "concurrency + floating point" hypothesis suggests that since GPUs perform calculations in a slightly different order each time, tiny rounding errors in floating-point math cascade into different results. It’s a plausible theory, but it’s not the whole story.

According to a deep-dive analysis by Thinking Machines Lab, this common explanation misses the true source of the problem. Floating-point non-associativity, the fact that `(a + b) + c` isn’t always equal to `a + (b + c)` in finite-precision arithmetic, is the underlying mechanism for the numerical differences, but it doesn’t by itself explain why results vary from run to run. The researchers point out that the individual operations in an LLM’s forward pass, like a matrix multiplication, are actually deterministic. Run the same `torch.mm(A, B)` operation a thousand times on the same GPU with the same inputs, and you’ll get the exact same bit-for-bit result every time. So if the core components are deterministic, why is the final output not?
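To make both halves of that argument concrete, here is a minimal sketch in plain Python (double precision on the CPU, not a GPU kernel). The values `0.1`, `0.2`, and `0.3` are only an illustrative choice: regrouping the additions changes the result, while repeating the same grouping never does.

```python
# Floating-point addition is not associative: the grouping decides
# where intermediate rounding happens.
a, b, c = 0.1, 0.2, 0.3

left = (a + b) + c   # rounds after a + b, then again after adding c
right = a + (b + c)  # rounds after b + c, then again after adding a

print(left, right)    # 0.6000000000000001 0.6
print(left == right)  # False

# The same grouping, repeated, is perfectly deterministic.
repeats = [(a + b) + c for _ in range(1000)]
print(all(r == left for r in repeats))  # True
```

Non-associativity is what makes different orderings disagree, but something still has to change the ordering between runs.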

The real culprit is a far more systemic issue: a lack of "batch invariance."

When you send a query to an LLM service like ChatGPT, your request doesn’t run in a vacuum. It gets bundled together with requests from other users into a "batch" to be processed efficiently. The size of that batch is constantly changing based on server load. From your perspective as a user, the number of other people using the service at that exact moment is something you can neither observe nor control, so it is effectively random. This is where the problem begins.

The underlying software kernels that perform the heavy lifting—matrix multiplications, attention calculations, and normalization—are highly optimized. A kernel running a large batch of, say, 256 requests might use a completely different internal strategy and order of operations than one running a small batch of just 8 requests. While the math for each element in the batch *should* be independent, these optimization strategies mean the result for your specific query can change depending on the size of the batch it was processed in.
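A toy model can show how this plays out. The sketch below is not a real GPU kernel: `toy_kernel`, its `small_batch_threshold` heuristic, and the input values are all hypothetical, and the numbers are chosen to exaggerate a rounding difference that would normally be tiny. The point is only that the result for one request changes when the batch around it changes.

```python
def row_sum_sequential(row):
    """Add the elements left to right in a single pass."""
    total = 0.0
    for x in row:
        total += x
    return total


def row_sum_split(row, num_splits):
    """Sum equal-sized chunks separately, then combine the partial sums."""
    chunk = len(row) // num_splits  # assumes len(row) is divisible by num_splits
    partials = [
        row_sum_sequential(row[i * chunk:(i + 1) * chunk])
        for i in range(num_splits)
    ]
    return row_sum_sequential(partials)


def toy_kernel(batch, small_batch_threshold=4):
    """Hypothetical heuristic: small batches use the split strategy."""
    if len(batch) < small_batch_threshold:
        return [row_sum_split(row, 2) for row in batch]
    return [row_sum_sequential(row) for row in batch]


my_request = [1e16, 1.0, -1e16, 1.0]  # exaggerated values, for illustration only
other_request = [1.0, 2.0, 3.0, 4.0]

quiet_server = toy_kernel([my_request])[0]
busy_server = toy_kernel([my_request] + [other_request] * 7)[0]

print(quiet_server, busy_server)  # 0.0 1.0
```

Each call is deterministic on its own. The only thing that changed between the two calls is how many other requests shared the batch.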

Strictly speaking, the inference server itself is deterministic. If you could perfectly replicate the exact same batch of user requests, it would produce the same output. But for an individual user, the other requests are an uncontrollable, nondeterministic variable. The system’s lack of batch invariance, combined with the nondeterministic nature of server load, is the true source of LLM inference nondeterminism. It’s not just a GPU quirk; it’s a systems-level problem that affects inference running on CPUs and TPUs as well.

The Path to Determinism

Defeating this nondeterminism requires re-engineering the fundamental building blocks of a transformer to be "batch-invariant"—meaning they must produce the exact same output for a given input, regardless of what other data is in the batch alongside it. This is a significant engineering challenge that forces developers to trade peak performance for consistency.

The Thinking Machines Lab report breaks down the three main operations that need to be fixed: RMSNorm, matrix multiplication, and attention.

For RMSNorm and matrix multiplication, the standard approach is "data-parallel," where each core on the GPU handles a separate piece of the batch. This works well for large batches. But when the batch size is too small to keep all the GPU cores busy, engineers typically switch to a "split-reduction" strategy (like Split-K matmuls), where multiple cores collaborate on a single calculation. This keeps the GPU saturated but changes the order of operations, breaking batch invariance. The solution is to force the kernel to use a single, consistent strategy for all batch sizes, even if it means leaving parts of the GPU idle and sacrificing some performance on smaller batches.
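The difference between the two approaches can be sketched with a toy matrix-vector product in plain Python. Everything here is an assumption made for illustration: `matvec_adaptive` stands in for a kernel that switches to Split-K when the batch has fewer rows than a hypothetical `num_cores`, `matvec_batch_invariant` stands in for the fixed-strategy fix, and the inputs are picked to make the rounding difference obvious.

```python
def dot_fixed_order(x, w):
    """Reduce along K in one fixed left-to-right order."""
    acc = 0.0
    for xi, wi in zip(x, w):
        acc += xi * wi
    return acc


def dot_split_k(x, w, num_splits):
    """Split the K dimension into chunks, reduce each, then combine."""
    chunk = len(x) // num_splits  # assumes K is divisible by num_splits
    partials = [
        dot_fixed_order(x[s * chunk:(s + 1) * chunk], w[s * chunk:(s + 1) * chunk])
        for s in range(num_splits)
    ]
    total = 0.0
    for p in partials:
        total += p
    return total


def matvec_adaptive(batch, w, num_cores=4):
    """Hypothetical heuristic: use Split-K when rows cannot fill the cores."""
    if len(batch) < num_cores:
        return [dot_split_k(x, w, 2) for x in batch]
    return [dot_fixed_order(x, w) for x in batch]


def matvec_batch_invariant(batch, w):
    """One reduction strategy for every batch size."""
    return [dot_fixed_order(x, w) for x in batch]


w = [1.0, 1.0, 1.0, 1.0]
x = [1e16, 1.0, -1e16, 1.0]  # exaggerated values, for illustration only
filler = [0.5, 0.5, 0.5, 0.5]

small, large = [x], [x] + [filler] * 7

adaptive = (matvec_adaptive(small, w)[0], matvec_adaptive(large, w)[0])
invariant = (matvec_batch_invariant(small, w)[0], matvec_batch_invariant(large, w)[0])

print(adaptive)   # (0.0, 1.0): same row, different answers
print(invariant)  # (1.0, 1.0): same row, same answer
```

In this toy, the invariant version never takes the split path, which mirrors the trade-off described above: a real kernel that does this gives up the extra parallelism on small batches.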

Attention is the most complex challenge. Its calculations depend not only on the batch size but also on the sequence length and on how the inference engine processes that sequence (e.g., chunked prefill for long prompts). To achieve true invariance, the system must ensure that the key-value (KV) cache is always laid out identically before the attention kernel runs, so that the same tokens are reduced in the same order no matter how they arrived. This prevents the processing strategy from altering the mathematical outcome.
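As a rough illustration of why the cache layout matters, the sketch below replaces attention’s weighted sum over keys with a plain sum over per-position contributions. The function names `attend_separate` and `attend_unified` are hypothetical, the numbers are exaggerated, and a real attention kernel is far more involved; this only shows how a cache boundary can change the reduction order.

```python
def reduce_in_order(values):
    """Accumulate contributions left to right."""
    acc = 0.0
    for v in values:
        acc += v
    return acc


def attend_separate(cached, new):
    """Reduce cached and new positions independently, then combine.

    The result depends on where the cache boundary falls.
    """
    return reduce_in_order(cached) + reduce_in_order(new)


def attend_unified(cached, new):
    """Append new positions to the cache first, then reduce one layout."""
    kv = list(cached) + list(new)
    return reduce_in_order(kv)


# Stand-in for per-position attention contributions (illustrative values).
contributions = [1e16, 1.0, -1e16, 1.0]

whole_prompt = ([], contributions)                    # processed in one step
chunked = (contributions[:2], contributions[2:])      # first half already cached

separate = (attend_separate(*whole_prompt), attend_separate(*chunked))
unified = (attend_unified(*whole_prompt), attend_unified(*chunked))

print(separate)  # (1.0, 0.0): depends on how the prompt was chunked
print(unified)   # (1.0, 1.0): independent of chunking
```

The tokens are identical in both scenarios. Only the unified version gives the same answer regardless of how many of them were already in the cache.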

Forcing this consistency often means forgoing the most specialized, hyper-optimized kernels for every possible scenario. Instead, developers must choose a single, robust kernel configuration that works across all shapes and sizes. The researchers note this can lead to a performance hit of around 20% in some cases compared to standard libraries like cuBLAS, but it’s the necessary price for reproducibility.

Achieving truly deterministic LLM inference is more than an academic exercise. It’s a critical step for scientific research, where reproducibility is paramount. It’s also essential for enterprise applications in fields like finance and healthcare, where reliable, verifiable, and identical outputs are a requirement, not a nice-to-have. This work demystifies the root cause of AI’s unpredictability and lays out a clear, albeit challenging, blueprint for building language models we can truly depend on.