The future of economic regulation is facing a critical vulnerability: the strategic release of artificial intelligence. New research by Eilam Shapira, Moshe Tennenholtz, and Roi Reichart of the Technion describes a phenomenon they term the "Poisoned Apple Effect": the mere availability of a new AI technology, even one that is never used, can be wielded as a weapon to coerce market regulators into shifting the rules in favor of the releasing party.

This finding fundamentally challenges the assumption that expanding technological choice is inherently neutral or beneficial, suggesting that open-weight model releases and API availability could become instruments of regulatory arbitrage in the emerging era of Agentic Commerce.

The Vulnerability in Autonomous Negotiation

The integration of AI agents into global commerce—from complex corporate partnerships to routine real estate transactions—is rapidly accelerating. We are entering an age of Agentic Commerce, where software delegates autonomously execute negotiations, search for counterparties, and finalize contracts without human intervention.

While current regulatory focus centers on issues like model safety, bias, and catastrophic risk, this new economic analysis identifies a critical, overlooked vulnerability arising from the strategic expansion of the technology choice set itself.

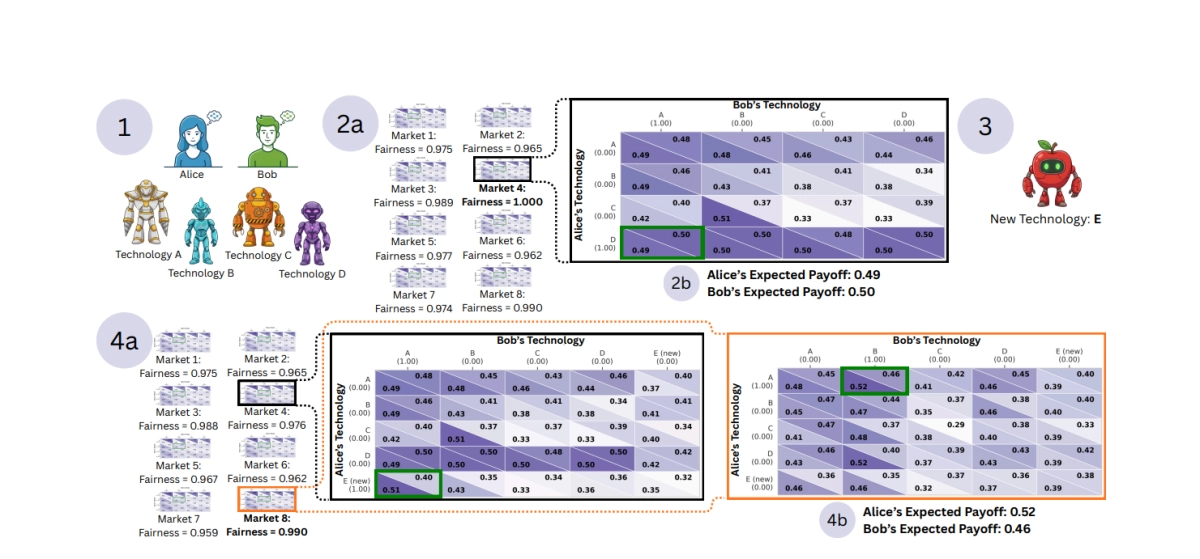

The researchers modeled this interaction as a meta-game involving two economic agents, "Alice" and "Bob," and a regulator. The regulator’s primary function is to maximize social objectives, typically fairness or efficiency, by setting the rules of the market (e.g., the protocols of a digital marketplace or B2B platform). Alice and Bob, meanwhile, strategically select from a pool of available AI negotiation delegates (drawn from a set of 13 state-of-the-art LLMs like GPT-4o, Gemini, and LLaMA) to maximize their own utility in bargaining, bilateral trade, and persuasion scenarios.

The core vulnerability emerges when the regulator is optimizing for fairness—that is, minimizing the disparity between the agents’ payoffs in these automated deals.

The Weaponization of Latent Threat

The Poisoned Apple Effect is a form of regulatory manipulation that works through a credible threat rather than through actual use of the technology.

In a representative AI agent negotiation scenario (specifically a resource division task), Alice and Bob initially have access to negotiation technologies A through D. The regulator, maximizing fairness, selects Market 4, which yields near-perfect fairness (1.00) and an equitable split: Alice gets 0.49 and Bob gets 0.50.

Alice then strategically releases a new technology, E.

Crucially, Alice does not intend to deploy Agent E in the final, optimal market. She releases it because the threat of its use is potent enough to force the regulator’s hand.

If the regulator were to maintain the original Market 4 rules, the new equilibrium would involve Alice adopting the aggressive Agent E, and fairness would fall from 1.00 to 0.976. To limit this deterioration in equity, the regulator is compelled to re-optimize the market design and migrate to a new environment, Market 8, where the resulting fairness (0.990) is higher than under the unchanged Market 4, though still below the original 1.00.

In this new Market 8, the equilibrium strategies do not involve using the "poisoned" Agent E. However, the forced market shift dramatically alters the payoff distribution: Alice’s payoff jumps to 0.52, while Bob’s drops to 0.46.

Alice successfully leveraged the latent threat of using a harmful negotiation model to coerce the regulator into a favorable market design, improving her welfare at Bob’s expense, all without ever deploying the model she released.
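The mechanism can be reproduced in a toy meta-game. The sketch below is illustrative only: the two markets (`M1`, `M2`), every payoff number, the `1 - |gap|` fairness score, and the restriction to pure-strategy equilibria are assumptions made for this example, not figures or methods taken from the study.

```python
from itertools import product

# HYPOTHETICAL payoffs, invented for illustration (not from the study).
# PAYOFFS[market][(alice_tech, bob_tech)] = (alice_payoff, bob_payoff)
PAYOFFS = {
    "M1": {("A", "A"): (0.50, 0.50), ("E", "A"): (0.70, 0.25),
           ("A", "E"): (0.45, 0.40), ("E", "E"): (0.60, 0.20)},
    "M2": {("A", "A"): (0.55, 0.45), ("E", "A"): (0.50, 0.30),
           ("A", "E"): (0.60, 0.30), ("E", "E"): (0.40, 0.35)},
}

def fairness(ua, ub):
    """Assumed fairness score: 1 minus the payoff gap."""
    return 1.0 - abs(ua - ub)

def pure_nash(table, techs):
    """Return the first pure-strategy Nash equilibrium found in one market."""
    for a, b in product(techs, repeat=2):
        ua, ub = table[(a, b)]
        # Neither player can gain by unilaterally switching technology.
        alice_ok = all(table[(a2, b)][0] <= ua for a2 in techs)
        bob_ok = all(table[(a, b2)][1] <= ub for b2 in techs)
        if alice_ok and bob_ok:
            return (a, b)
    raise ValueError("no pure-strategy equilibrium in this market")

def regulator_choice(techs):
    """Pick the market whose equilibrium outcome is fairest."""
    best = None
    for market, table in PAYOFFS.items():
        profile = pure_nash(table, techs)
        ua, ub = table[profile]
        candidate = (fairness(ua, ub), market, profile, (ua, ub))
        if best is None or candidate[0] > best[0]:
            best = candidate
    return best

before = regulator_choice(["A"])       # only technology A exists
after = regulator_choice(["A", "E"])   # Alice has released E

print("before:", before)
print("after: ", after)
```

In this toy setup the regulator starts in `M1`. Once `E` exists, staying in `M1` would lead Alice to adopt `E` and open a large payoff gap, so the regulator moves to `M2`, where both players still choose `A`. Alice's payoff rises and Bob's falls even though `E` is never played.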

Disruption Without Deployment

The study, which used the GLEE dataset to simulate over 50,000 meta-games across bargaining, negotiation, and persuasion environments, confirms that this manipulation is not an anomaly. The researchers observed a recurrent pattern in which expanding the choice set moved the two players' payoffs in opposite directions, with one side gaining while the other lost. Strikingly, in approximately one-third of these zero-sum shifts, the outcome reversal occurred even though the new technology remained unused by either player in the final equilibrium.

This demonstrates that a new technology does not need to be used, or even useful in play, to have strategic value: its value can lie entirely in its potential to disrupt the regulatory calculus of the marketplace.

The findings indicate that regulators optimizing for fairness—a common goal in curated B2B and freelance platforms—are particularly vulnerable to this manipulation. When the regulator’s goal was maximizing total social welfare (efficiency), technology expansion often improved outcomes. Conversely, when the goal was fairness, the expansion frequently backfired, leading to outcomes where the regulatory metric decreased.

The analysis highlights that regulatory harm is strongly associated with instances where the added technology is available but acts purely as a latent threat without being selected by either player.

The End of Static Regulation

The study also issues a stark warning about regulatory inertia. If a regulator fails to proactively re-optimize the market design following a technology release, the regulatory metric deteriorates in roughly 40% of cases.
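The cost of inertia can be read off the resource-division example earlier in this article. The short calculation below uses only the fairness and payoff figures quoted there; the variable and function names are illustrative, and the numbers describe that single scenario, not the 40% aggregate.

```python
# Figures quoted in this article's resource-division example.
FAIRNESS_BEFORE_RELEASE = 1.00   # Market 4, technologies A through D
FAIRNESS_STATIC = 0.976          # Market 4 kept unchanged after E is released
FAIRNESS_REOPTIMIZED = 0.990     # regulator moves to Market 8

PAYOFFS_BEFORE = {"Alice": 0.49, "Bob": 0.50}   # Market 4, before release
PAYOFFS_AFTER = {"Alice": 0.52, "Bob": 0.46}    # Market 8, after release

def delta(after, before):
    """Change between two quoted figures, rounded to three decimals."""
    return round(after - before, 3)

static_change = delta(FAIRNESS_STATIC, FAIRNESS_BEFORE_RELEASE)
reoptimized_change = delta(FAIRNESS_REOPTIMIZED, FAIRNESS_BEFORE_RELEASE)
payoff_shift = {name: delta(PAYOFFS_AFTER[name], PAYOFFS_BEFORE[name])
                for name in PAYOFFS_BEFORE}

print("fairness change, static regulator:     ", static_change)
print("fairness change, re-optimized market:  ", reoptimized_change)
print("payoff shift after re-optimization:    ", payoff_shift)
```

Read this way, the regulator faces a trade-off: standing still costs more fairness, while re-optimizing recovers most of it but hands Alice the payoff shift she was aiming for.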

The research concludes that static regulatory frameworks are fundamentally insufficient for the age of Agentic Commerce. Market designs must be dynamic, re-optimized whenever the agent strategy space expands. For firms and developers, the implication is clear: releasing a powerful, open-source model may soon be less about advancing the state of the art and more about gaining a strategic advantage in regulated markets by manipulating the rules of the game. The threat of a model can be as powerful as its deployment.