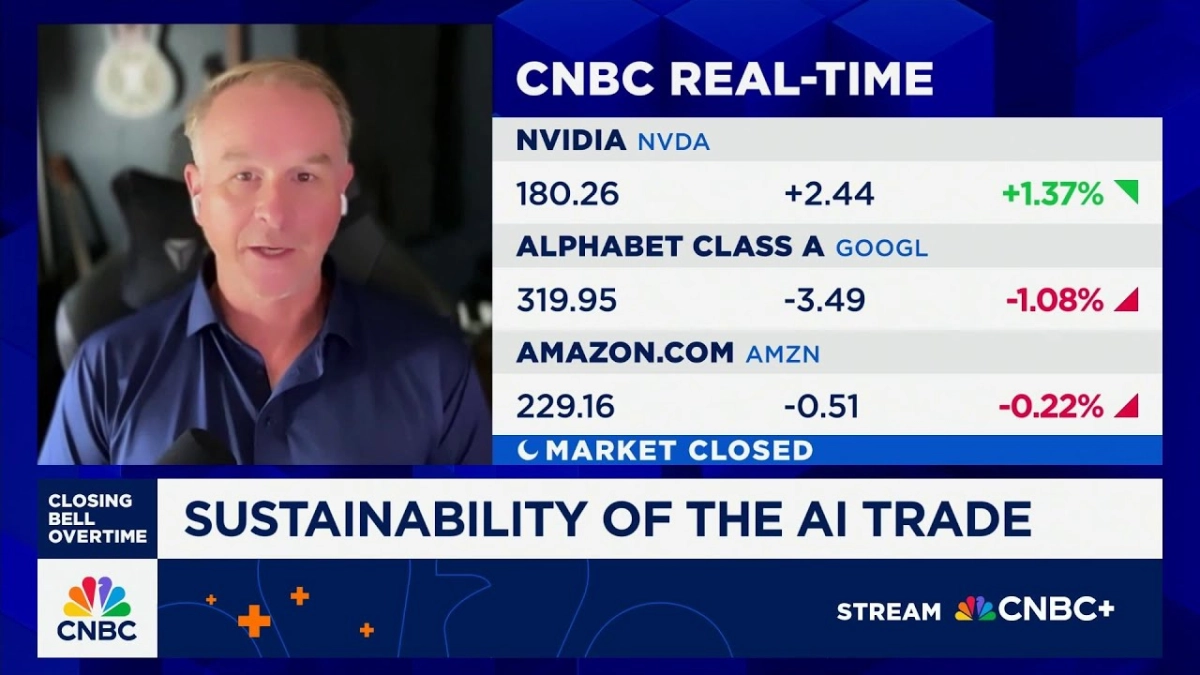

The perceived "AI chip race" between tech giants like Alphabet (Google) and Nvidia is less a head-to-head sprint and more a strategic segmentation of an expanding market. While Google's custom Tensor Processing Units (TPUs) excel at highly specialized tasks for its internal services, Nvidia's Graphics Processing Units (GPUs) maintain a commanding lead for broader, third-party AI workloads due to their architectural flexibility and extensive ecosystem. This fundamental divergence in approach defines the competitive landscape, as articulated by Ben Bajarin, CEO of Creative Strategies, during a recent CNBC "Closing Bell Overtime" interview.

Bajarin spoke with the CNBC host about where Google and Nvidia truly compete and where their strategies diverge. The discussion was prompted by recent speculation that Google might sell its TPUs to Meta for Meta's own AI workloads, which raised questions about Google's broader market ambitions. Bajarin, however, said such a move would not necessarily signal a direct competitive threat to Nvidia's core business.

Google's TPUs are, by design, highly optimized for the company's vast internal AI infrastructure. "The first customer for TPUs is primarily Google," Bajarin noted, explaining that these chips are "built for their services—everything from YouTube to Search to Gemini as we see today, and it runs very, very well on that." This internal optimization allows Google to tailor hardware precisely to its unique, massive-scale AI demands, driving efficiency and performance for its proprietary applications.

In stark contrast, Nvidia's GPUs thrive on their general-purpose nature. They offer a level of programmability and flexibility that appeals to a wide array of developers and enterprises operating across diverse cloud environments. This broad applicability has allowed Nvidia to cultivate an expansive software ecosystem, becoming the de facto standard for AI development and deployment outside of the hyperscalers' internal operations.

The CNBC host offered a metaphor: "It's like if Google's supplying a specially raised beef cow to McDonald's for the Quarter Pounder... That doesn't mean steak houses and everybody else is going to buy the same cow." Bajarin agreed, underscoring that Nvidia offers a more standardized, adaptable "beef" suited to varied culinary needs rather than a highly specific, custom-bred product.

This distinction highlights a core insight: custom ASICs like TPUs are highly efficient when the software is "highly optimized for it." That same specialization, however, often limits their appeal to first-party users. The question, according to Bajarin, is whether "third parties in public clouds start to adopt these more than they do Nvidia GPUs, and that's not a dynamic we see today." The barrier to adoption for custom ASICs is the significant engineering effort required to optimize software for a specific chip architecture, a commitment most third parties are unwilling or unable to make.

Other cloud providers, such as Amazon Web Services (AWS) with its Trainium and Inferentia chips, pursue similar custom silicon strategies. While these chips offer cost and performance benefits for specific workloads within AWS's ecosystem, they too face the challenge of attracting widespread third-party adoption. Google, in fact, is often cited as the most successful at implementing this custom ASIC strategy for its AI accelerators, further cementing the idea that these chips primarily serve the internal needs of their creators.

Despite these internal efforts by hyperscalers, the overall AI industry is experiencing what Bajarin termed a "gigacycle," representing "one of the largest dollar TAM expansions we've ever seen." With projections of a $700 billion to $1 trillion market by 2030, there's immense capital flowing into AI infrastructure. Currently, Nvidia is positioned to capture the "vast majority" of this expansion, thanks to its entrenched position and broad appeal.

Looking ahead, the discussion touched on the potential emergence of an "AI middleware" layer. This would function like a universal translator, allowing enterprises to run AI workloads across different cloud providers and their respective specialized chips without custom-tuning software for each. Such a layer would let enterprises capture the cost efficiency of each cloud's custom chips without rewriting software for every distinct architecture. While this kind of deployment across multiple clouds and AI chips is a desired future state for many enterprises, Bajarin concluded that "we're still what feels like a long way off from that reality." For now, the landscape remains dominated by the practicalities of architectural compatibility and the established developer ecosystem, which favors general-purpose solutions for most market participants.