The rise of AI agents—bots that shop, search, and consume content on behalf of human users—has rendered traditional web security obsolete. The simple classification of traffic into “good” or “bad” bots is collapsing under the weight of automation that requires identity, intent, and, increasingly, a payment plan.

According to David Sénécal, writing for Akamai, the industry is now scrambling to build a new foundation for web interaction, moving away from blanket blocking toward cryptographic verification and mandatory monetization. This shift defines the emerging field of agentic bot management.

The core problem is transparency. When an AI agent interacts with a website, the site owner loses the opportunity to generate leads, serve ads, or understand the end user’s true intent. To solve this, a new wave of identity protocols is emerging to provide agents with digital passports.

These protocols include Web Bot Authentication (Web Bot Auth), which uses HTTP Message Signatures to verify known bots; Know Your Agent (KYA), which adapts Know Your Customer and Know Your Business (KYC/KYB) models to provide robust identity verification for agents; and Visa’s Trusted Agent Protocol (TAP), designed specifically to enable secure agentic commerce.
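Web Bot Auth builds on HTTP Message Signatures (RFC 9421): the agent signs selected parts of each request, and the site rebuilds the same “signature base” to check it. The sketch below is a simplified illustration under stated assumptions, not a conforming implementation. Web Bot Auth relies on public-key signatures; this sketch substitutes HMAC-SHA256 (an algorithm RFC 9421 also registers) so it runs with only the Python standard library. The secret, the `demo-key` key id, and the choice of covered components are hypothetical.

```python
import base64
import hashlib
import hmac
import time

# Hypothetical shared secret and key id. Web Bot Auth uses public-key
# signatures; HMAC-SHA256 stands in here to keep the sketch stdlib-only.
SECRET = b"demo-shared-secret"
KEY_ID = "demo-key"
# Covered components (RFC 9421 derived components); this choice is an assumption.
COMPONENTS = ("@method", "@authority", "@path")


def signature_params(created: int, expires: int, key_id: str) -> str:
    """Serialize the covered-component list and its parameters."""
    names = " ".join(f'"{c}"' for c in COMPONENTS)
    return f'({names});created={created};expires={expires};keyid="{key_id}"'


def signature_base(method: str, authority: str, path: str, params: str) -> str:
    """One '"name": value' line per covered component, params line last."""
    values = {"@method": method, "@authority": authority.lower(), "@path": path}
    lines = [f'"{c}": {values[c]}' for c in COMPONENTS]
    lines.append(f'"@signature-params": {params}')
    return "\n".join(lines)


def _mac(base: str) -> str:
    """Base64-encoded HMAC-SHA256 of the signature base."""
    digest = hmac.new(SECRET, base.encode(), hashlib.sha256).digest()
    return base64.b64encode(digest).decode()


def sign_request(method: str, authority: str, path: str, now=None, ttl: int = 60) -> dict:
    """Return Signature-Input and Signature headers for a request."""
    created = int(time.time() if now is None else now)
    params = signature_params(created, created + ttl, KEY_ID)
    base = signature_base(method, authority, path, params)
    return {"Signature-Input": f"sig1={params}", "Signature": f"sig1=:{_mac(base)}:"}


def verify_request(method: str, authority: str, path: str, headers: dict, now=None) -> bool:
    """Rebuild the signature base from the received parameters and compare."""
    params = headers["Signature-Input"].removeprefix("sig1=")
    expires = int(params.split(";expires=")[1].split(";")[0])
    if (time.time() if now is None else now) > expires:
        return False  # the signature's validity window has passed
    base = signature_base(method, authority, path, params)
    received = headers["Signature"].removeprefix("sig1=:").removesuffix(":")
    return hmac.compare_digest(_mac(base).encode(), received.encode())
```

Because `created` and `expires` are part of the signed base, a request whose method, host, or path was altered, or one presented after `expires`, fails verification. A real deployment would verify against the agent operator's published public key rather than a shared secret.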

These standards rely on cryptographically protected JSON Web Tokens (JWTs) that the agent must present with every request. The token doesn’t just prove the agent is legitimate; it carries crucial context about the request, the intended use of the data, and, critically, the identity of the human user who initiated the request. This is the only way for site owners to regain the transparency lost when the AI agent replaced the direct human visit.
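To make the token idea concrete, here is a minimal sketch of a JWT that carries request context, written with only Python's standard library. It signs the token with HS256 (HMAC-SHA256), a standard JWT algorithm, rather than encrypting it. `iss`, `sub`, and `exp` are registered JWT claims; `intent` and `data_use` are hypothetical claim names, not fields defined by Web Bot Auth, KYA, or TAP. The shared secret is also hypothetical; production systems would typically use asymmetric keys and a vetted JWT library.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional

SECRET = b"demo-agent-secret"  # hypothetical key shared by agent operator and site


def b64url(data: bytes) -> str:
    """Base64url-encode without padding, as the JWT format requires."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode()


def b64url_decode(text: str) -> bytes:
    """Restore the stripped padding, then decode."""
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def _sign(signing_input: str) -> str:
    """HS256 signature over 'header.payload', base64url-encoded."""
    return b64url(hmac.new(SECRET, signing_input.encode(), hashlib.sha256).digest())


def issue_token(claims: dict) -> str:
    """Create an HS256-signed JWT of the form header.payload.signature."""
    header = b64url(json.dumps({"alg": "HS256", "typ": "JWT"}).encode())
    payload = b64url(json.dumps(claims, separators=(",", ":")).encode())
    signing_input = f"{header}.{payload}"
    return f"{signing_input}.{_sign(signing_input)}"


def read_token(token: str, now: Optional[float] = None) -> Optional[dict]:
    """Return the claims if the signature, algorithm, and expiry check out."""
    parts = token.split(".")
    if len(parts) != 3:
        return None
    header, payload, signature = parts
    expected = _sign(f"{header}.{payload}")
    if not hmac.compare_digest(expected.encode(), signature.encode()):
        return None  # tampered token or wrong key
    if json.loads(b64url_decode(header)).get("alg") != "HS256":
        return None  # refuse unexpected algorithms
    claims = json.loads(b64url_decode(payload))
    if (time.time() if now is None else now) >= claims.get("exp", 0):
        return None  # expired, or no expiry given
    return claims
```

An agent might issue a token such as `issue_token({"iss": "agent.example", "sub": "user-1234", "intent": "compare-prices", "data_use": "answer-generation", "exp": ...})`, and the site would log the verified `sub` and `intent` alongside the request, recovering some of the context a direct human visit would have provided.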

The New Economics of Agentic Bot Management

For publishers and media organizations, the shift is existential. AI agents scrape content to generate answers, so users no longer visit the pages whose ads traditionally fund the site. The old strategy of blocking all AI bots is proving unsustainable and counterproductive.

Instead, agentic bot management is pivoting toward monetization. Platforms like Skyfire and TollBit are partnering with bot detection services to enforce content licensing. Access is no longer free; AI operators are encouraged to register and pay for the data they consume.

The challenge, however, is pricing. Should content be priced on the site’s typical CPM (cost per thousand ad impressions), or on a dynamic model that factors in freshness, popularity, and exclusivity? If every publisher sets a wildly different price, AI platforms may simply limit their compliance, undermining the entire system. Broad adoption and standardized pricing are key to making this new economic model viable.
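The two pricing options can be compared with a toy model. In this sketch, a flat price treats one fetch like one ad impression (CPM divided by 1,000), and a dynamic price multiplies it by freshness, popularity, and exclusivity factors. The factor formulas, the 24-hour half-life, the clamping range, and the premiums are hypothetical choices for illustration, not anything Skyfire, TollBit, or Akamai has published.

```python
from dataclasses import dataclass


@dataclass
class Article:
    age_hours: float   # time since publication
    popularity: float  # hypothetical: views relative to the site median (1.0 = median)
    exclusive: bool    # True if the content is not available elsewhere


def flat_price(cpm: float) -> float:
    """Price one fetch like one ad impression: CPM is the price per 1,000."""
    return cpm / 1000


def dynamic_price(
    cpm: float,
    article: Article,
    half_life_hours: float = 24.0,
    freshness_premium: float = 1.0,
    exclusivity_premium: float = 1.5,
) -> float:
    """Scale the flat price by hypothetical freshness, popularity, and exclusivity factors."""
    # The freshness premium halves every `half_life_hours`, so the factor decays toward 1.0.
    freshness = 1 + freshness_premium * 0.5 ** (article.age_hours / half_life_hours)
    # Clamp popularity so a single viral story cannot dominate the price.
    popularity = max(0.5, min(article.popularity, 3.0))
    exclusivity = exclusivity_premium if article.exclusive else 1.0
    return flat_price(cpm) * freshness * popularity * exclusivity
```

Every multiplier is a publisher-specific knob, which illustrates the standardization concern: two sites with identical CPMs could quote an AI platform very different prices for similar content.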

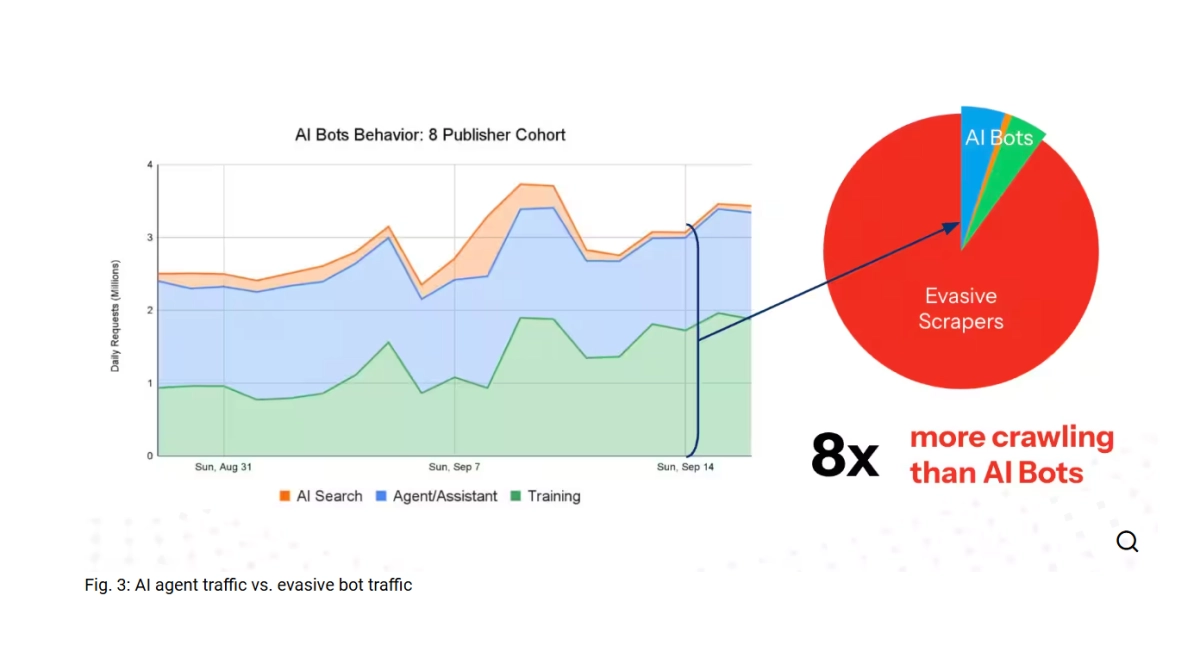

While the industry focuses heavily on managing compliant AI agents from Google, OpenAI, and Meta, the real threat remains the evasive, unverified scrapers. Akamai data shows that for a subset of publishers, the volume of web scraping activity detected by advanced methods was eight times that of compliant AI bots.
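A rough worked figure shows the scale of the gap. Assuming the eight-to-one ratio holds and treating compliant AI bots and evasive scrapers as the only AI-driven traffic, with $V$ the compliant-bot volume, blocking every compliant agent removes only about 11% of the combined volume and leaves roughly 89% untouched:

$$
\frac{V_{\text{compliant}}}{V_{\text{compliant}} + V_{\text{evasive}}} = \frac{V}{V + 8V} = \frac{1}{9} \approx 11\%
$$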

This means that publishers who strictly block compliant AI agents—believing they are protecting their data—are still leaking massive amounts of content to sophisticated, evasive scraping platforms that ignore robots.txt directives and employ advanced detection evasion techniques.
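robots.txt is advisory: it only works when the client chooses to check it. This sketch uses Python's standard `urllib.robotparser` to show the check a compliant crawler performs before fetching. The robots.txt contents and URLs are hypothetical; `GPTBot` is the user-agent token OpenAI publishes for its crawler.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: opt OpenAI's GPTBot crawler out, allow everyone else.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def compliant_fetch_allowed(user_agent: str, url: str) -> bool:
    """The check a well-behaved crawler runs before fetching; nothing enforces it."""
    return parser.can_fetch(user_agent, url)
```

An evasive scraper simply never calls `can_fetch`, or presents a browser-like user agent that the file allows, which is why robots.txt alone cannot stop it.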

Agentic bot management must therefore be comprehensive. It’s not enough to focus on the “good” agents; sites must also deploy advanced detection to counter the high volume of “bad” automation, because AI operators can simply outsource data collection to evasive scrapers to avoid the new authentication and payment requirements.
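One way to picture a comprehensive policy is a triage function that handles both halves of the problem: monetizing verified agents and blocking unverified automation. This sketch is hypothetical throughout; the signal names, the 0.8 threshold, and the mapping to HTTP status codes are illustrative assumptions, not Akamai's logic or any vendor's API. HTTP 402 Payment Required is a standard status code that HTTP reserves for future use, and it fits the pay-to-access model.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    SERVE = 200
    PAYMENT_REQUIRED = 402  # ask the agent's operator to license access
    BLOCK = 403


@dataclass
class RequestSignals:
    signature_valid: bool    # e.g. a verified Web Bot Auth signature
    operator_licensed: bool  # hypothetical: the operator has a paid license on file
    bot_score: float         # hypothetical detector output: 0 = human, 1 = bot


def triage(signals: RequestSignals, bot_threshold: float = 0.8) -> Action:
    """Hypothetical policy: monetize verified agents, block evasive automation."""
    if signals.signature_valid:
        # Identified agent: serve it if licensed, otherwise ask for payment.
        return Action.SERVE if signals.operator_licensed else Action.PAYMENT_REQUIRED
    if signals.bot_score >= bot_threshold:
        return Action.BLOCK  # unverified automation, such as an evasive scraper
    return Action.SERVE  # most likely a human visitor
```

Evasive scrapers never reach the signed branch, so everything they do is judged by the `bot_score` path; the monetization side is only as strong as the detection behind it.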

AI agents are here to stay, and they are disrupting everything from ad revenue to fraud prevention. The new protocols—Web Bot Auth, KYA, and TAP—represent a necessary, if complex, step toward redefining how identity and trust function in an increasingly automated internet ecosystem.