Researchers from Sophia University have introduced the Single-Stream Image-to-Image Translation (SSIT) model, a more computationally efficient approach to image translation. Traditional models based on Generative Adversarial Networks (GANs) demand substantial computing power, which limits them to high-end hardware. The SSIT model significantly reduces these computational demands, making it viable on devices such as smartphones. Potential applications span digital art, design, and autonomous technology.

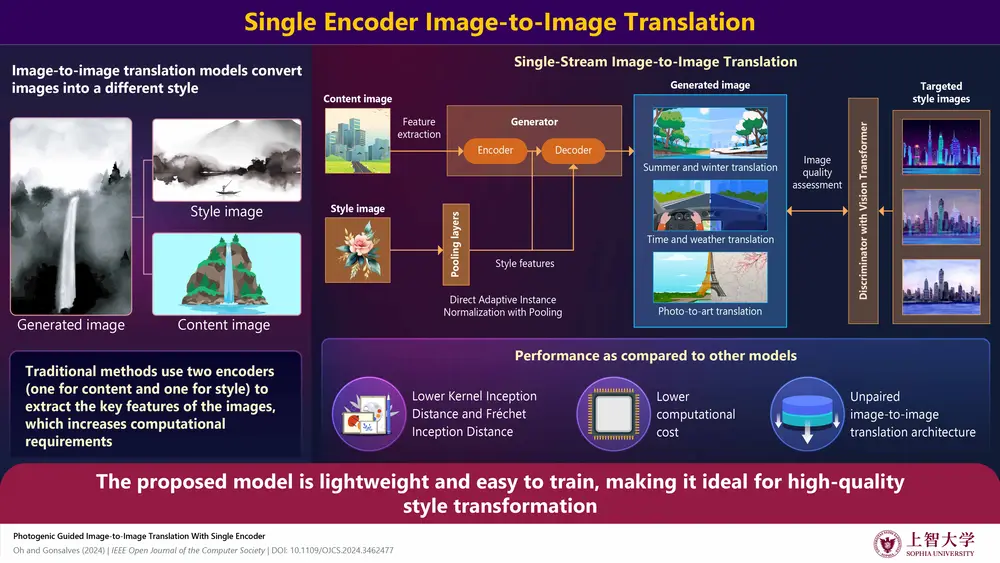

Unlike traditional models that use two encoders to process content and style images separately, the SSIT model employs a single encoder to extract spatial features such as shapes and layouts from content images. It utilizes Direct Adaptive Instance Normalization with Pooling (DAdaINP) to capture style details like colors and textures more efficiently. By combining these features, the model generates high-quality image transformations with lower computational costs, preserving both content and style integrity.
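As a rough illustration of the style-injection idea, the sketch below implements standard adaptive instance normalization (AdaIN) in NumPy, with the style statistics obtained by pooling over the spatial dimensions of a style feature map. It is a toy under stated assumptions, not the authors' DAdaINP: the function names `channel_stats` and `adain`, the `(C, H, W)` feature layout, and the choice of per-channel mean and standard deviation as the pooled statistics are all hypothetical and picked here for illustration.

```python
import numpy as np


def channel_stats(feat, eps=1e-5):
    """Pool a (C, H, W) feature map down to per-channel mean and std.

    Hypothetical helper: averaging over the spatial axes discards layout
    and keeps only global statistics, which is why pooled statistics are
    a natural carrier for style (color, texture) rather than content.
    """
    mean = feat.mean(axis=(1, 2), keepdims=True)
    std = np.sqrt(feat.var(axis=(1, 2), keepdims=True) + eps)
    return mean, std


def adain(content_feat, style_feat, eps=1e-5):
    """Standard AdaIN: re-scale normalized content features with style stats.

    The spatial structure of `content_feat` is preserved; only its
    per-channel mean and spread are replaced by those of `style_feat`.
    """
    c_mean, c_std = channel_stats(content_feat, eps)
    s_mean, s_std = channel_stats(style_feat, eps)
    normalized = (content_feat - c_mean) / c_std
    return normalized * s_std + s_mean


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    content = rng.normal(0.0, 1.0, size=(3, 8, 8))  # stand-in content features
    style = rng.normal(2.0, 3.0, size=(3, 5, 5))    # stand-in style features
    stylized = adain(content, style)
    print(stylized.shape)
```

Because the style statistics are pooled to one value per channel, the style input does not need the same spatial size as the content input, and no second full encoder pass over spatial feature maps is needed to apply them.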

The researchers tested the SSIT model on three tasks: seasonal transformations (e.g., summer to winter), photo-to-art conversions (e.g., turning landscapes into artistic styles like Picasso or Monet), and time/weather translations for driving scenarios (e.g., day to night or sunny to rainy conditions). In all three tasks, the SSIT model outperformed five other GAN-based models, generating higher-quality images with greater fidelity to the target styles as measured by Fréchet Inception Distance (FID) and Kernel Inception Distance (KID), two metrics for which lower scores indicate generated images that are statistically closer to real ones.
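To make the first of these metrics concrete, the sketch below computes the Fréchet distance between Gaussians fitted to two sets of feature vectors, which is the quantity behind FID. In practice the features come from a pretrained Inception network; here they are simply passed in as arrays, and the function name `frechet_distance` is a hypothetical choice for illustration rather than anything taken from the SSIT work.

```python
import numpy as np


def frechet_distance(feats_a, feats_b):
    """Fréchet distance between Gaussians fitted to two (N, D) feature arrays.

    d^2 = ||mu_a - mu_b||^2 + Tr(cov_a + cov_b - 2 (cov_a cov_b)^(1/2))
    """
    mu_a, mu_b = feats_a.mean(axis=0), feats_b.mean(axis=0)
    cov_a = np.atleast_2d(np.cov(feats_a, rowvar=False))
    cov_b = np.atleast_2d(np.cov(feats_b, rowvar=False))

    # Tr((cov_a cov_b)^(1/2)) is the sum of the square roots of the
    # eigenvalues of cov_a @ cov_b, which are real and non-negative for
    # covariance matrices; tiny negative or imaginary parts are numerical noise.
    eigvals = np.linalg.eigvals(cov_a @ cov_b)
    trace_sqrt = np.sqrt(np.clip(eigvals.real, 0.0, None)).sum()

    diff = mu_a - mu_b
    return float(diff @ diff + np.trace(cov_a) + np.trace(cov_b) - 2.0 * trace_sqrt)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    real = rng.normal(size=(500, 4))               # stand-in "real image" features
    fake = rng.normal(loc=0.5, size=(500, 4))      # stand-in "generated" features
    print(frechet_distance(real, fake))
```

Identical feature sets give a distance of zero, and the value grows as the means or covariances of the two sets drift apart, which is why a lower score is read as generated images being closer to the target domain.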

The SSIT model's efficiency stems from its design, which uses convolution layers for content extraction and pooling layers for style extraction. Adversarial training with a Vision Transformer-based discriminator pushes the generated images to closely resemble real images in the target style. Together, these choices let the SSIT model significantly reduce GPU usage and computational overhead while still outperforming the compared models.

This development broadens access to image translation, bringing these capabilities to everyday devices without the need for expensive hardware or cloud services. Accessible, high-quality image transformation of this kind could benefit professionals and enthusiasts in digital art, design, autonomous systems, and scientific research.