The infrastructure layer beneath the current AI boom is getting expensive and fragmented. Runware, a startup aiming to simplify the entire deployment process, announced a $50 million Series A round today, led by Dawn Capital. Their pitch is simple but ambitious: provide one unified AI API for all models, promising massive performance gains and cost reductions for developers scaling intelligent applications.

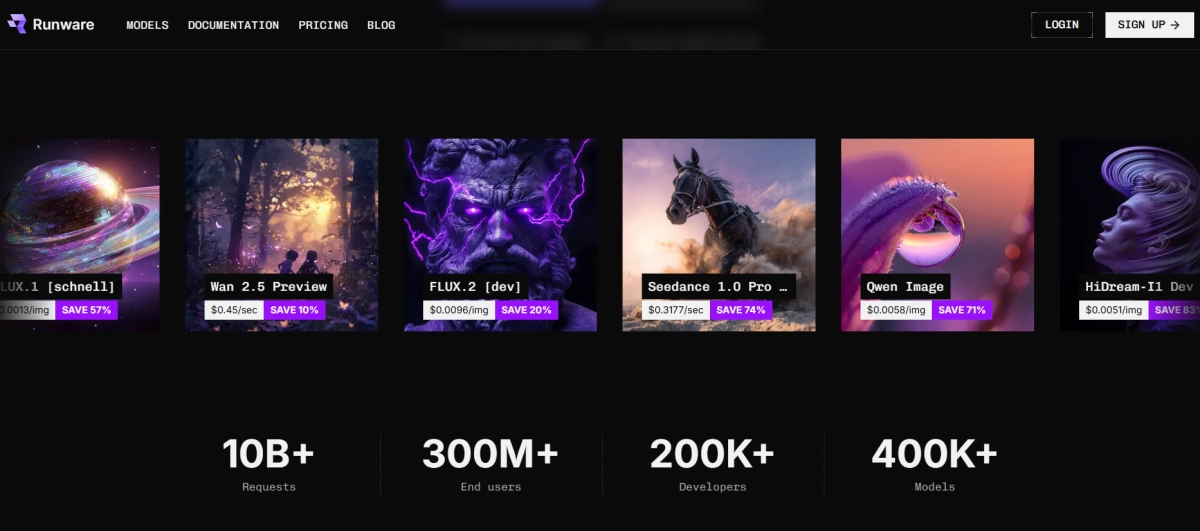

Since its founding in 2023, Runware has grown quickly, powering more than 10 billion generations for hundreds of thousands of developers. The company argues that most teams deploying AI at scale face three major constraints: fragmented model access, slow inference that breaks the user experience, and costs that rise faster than adoption.

Runware’s solution combines custom AI inference hardware with its proprietary Sonic Inference Engine, which the company claims delivers up to ten times lower pricing and faster performance than standard data center setups. This approach has already attracted major customers, including Wix, Quora, and Freepik, which rely on the platform to power image, video, and audio generation for millions of users.

The core of the offering is the unified AI API, which currently aggregates nearly 300 model classes and hundreds of thousands of model variants behind one consistent schema. This abstraction layer allows developers to A/B test, route, or swap models—whether open or closed source—with minimal code changes.
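To make the "minimal code changes" idea concrete, here is a rough sketch of A/B routing behind a single request schema. It does not use Runware's actual API: the model identifiers, the payload fields, and the helpers `pick_variant` and `build_request` are all hypothetical, chosen only to illustrate the pattern.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Variant:
    model: str     # hypothetical model identifier, not a real catalog entry
    weight: float  # share of traffic; weights are assumed to sum to 1.0


def pick_variant(user_id: str, variants: list[Variant]) -> Variant:
    """Deterministically assign a user to a variant by hashing the user id."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    # Map the first 8 bytes of the hash to a float between 0 and 1.
    point = int.from_bytes(digest[:8], "big") / 2**64
    cumulative = 0.0
    for variant in variants:
        cumulative += variant.weight
        if point < cumulative:
            return variant
    return variants[-1]  # guard against floating-point rounding


def build_request(model: str, prompt: str) -> dict:
    """Build a payload in one shared (hypothetical) schema; only `model` varies."""
    return {"model": model, "task": "image_generation", "prompt": prompt}


# An even split between an open and a closed model (both names are made up).
variants = [Variant("open-model-a", 0.5), Variant("closed-model-b", 0.5)]
chosen = pick_variant("user-123", variants)
payload = build_request(chosen.model, "a lighthouse at dusk")
```

Because every model shares the same payload shape in this sketch, swapping or re-weighting models means editing the `variants` list rather than the calling code. Hashing the user id keeps each user on the same variant across requests, which is what an A/B comparison needs.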

The economic argument is compelling: Runware promises 10x better price and performance for open source models and savings of 10 to 40 percent even on closed source foundation models. For open source models specifically, the company claims a 30 to 40 percent speed improvement over other inference platforms.
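As a back-of-the-envelope illustration of what those claims would mean for a bill, the sketch below applies them to a hypothetical baseline of $1,000 per month. The baseline figure is invented for the example, and reading "10x better price" as one tenth of the cost is an assumption, not a published pricing formula.

```python
def cost_after_savings(baseline: float, savings_percent: int) -> float:
    """Cost remaining after a percentage saving is applied to a baseline."""
    return baseline * (100 - savings_percent) / 100


def cost_at_multiple(baseline: float, factor: int) -> float:
    """Cost if the price is `factor` times lower than the baseline."""
    return baseline / factor


BASELINE_USD = 1000.0  # hypothetical monthly inference spend

open_source_cost = cost_at_multiple(BASELINE_USD, 10)  # claimed 10x lower price
closed_low = cost_after_savings(BASELINE_USD, 10)      # claimed 10 percent saving
closed_high = cost_after_savings(BASELINE_USD, 40)     # claimed 40 percent saving

print(f"Open source workload: ${open_source_cost:,.2f}")
print(f"Closed source workload: ${closed_high:,.2f} to ${closed_low:,.2f}")
```

Under these assumptions, the open source workload would drop from $1,000 to $100, while the closed source workload would land between $600 and $900.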

Decentralizing the AI Compute Stack

With the AI inference market projected to hit $70 billion by 2028, Runware is aggressively scaling its platform. A major goal is to deploy all of the more than two million AI models on Hugging Face onto the platform by the end of 2026, making every model available through that single API endpoint.

Crucially, the company is also building out "inference PODs": modular compute units that can be deployed in weeks rather than years wherever power is cheap and available. This strategy bypasses slow, traditional data center buildouts, places compute closer to end users, and helps meet local regulatory requirements.

According to CTO Flaviu, the company is building infrastructure for speed, cost-effectiveness, and redundancy. The ultimate goal, according to COO Ioana, is to give teams a single API that removes the need to juggle providers or commit to massive minimums, so that developers can "focus on product."